A Wrapped Normal Distribution on Hyperbolic Space for Gradient

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

© 2013 Johannes Traa

© 2013 Johannes Traa MULTICHANNEL SOURCE SEPARATION AND TRACKING WITH PHASE DIFFERENCES BY RANDOM SAMPLE CONSENSUS BY JOHANNES TRAA THESIS Submitted in partial fulfillment of the requirements for the degree of Master of Science in Electrical and Computer Engineering in the Graduate College of the University of Illinois at Urbana-Champaign, 2013 Urbana, Illinois Adviser: Assistant Professor Paris Smaragdis ABSTRACT Blind audio source separation (BASS) is a fascinating problem that has been tackled from many different angles. The use case of interest in this thesis is that of multiple moving and simultaneously-active speakers in a reverberant room. This is a common situation, for example, in social gatherings. We human beings have the remarkable ability to focus attention on a particular speaker while effectively ignoring the rest. This is referred to as the “cocktail party effect” and has been the holy grail of source separation for many decades. Replicating this feat in real-time with a machine is the goal of BASS. Single-channel methods attempt to identify the individual speakers from a single recording. However, with the advent of hand-held consumer electronics, techniques based on microphone array processing are becoming increasingly popular. Multichannel methods record a sound field from various locations to incorporate spatial information. If the speakers move over time, we need an algorithm capable of tracking their positions in the room. For compact arrays with 1-10 cm of separation between the microphones, this can be accomplished by applying a temporal filter on estimates of the directions-of-arrival (DOA) of the speakers. In this thesis, we review recent work on BSS with inter-channel phase difference (IPD) features and provide extensions to the case of moving speakers.

Wrapped Log Kumaraswamy Distribution and Its Applications

International Journal of Mathematics and Computer Research ISSN: 2320-7167 Volume 06 Issue 10 October-2018, Page no. - 1924-1930 Index Copernicus ICV: 57.55 DOI: 10.31142/ijmcr/v6i10.01 Wrapped Log Kumaraswamy Distribution and its Applications K.K. Jose1, Jisha Varghese2 1,2Department of Statistics, St. Thomas College, Palai, Arunapuram, Mahatma Gandhi University, Kottayam, Kerala-686 574, India ARTICLE INFO Published Online: 10 October 2018 Corresponding Author: K.K. Jose ABSTRACT A new circular distribution called Wrapped Log Kumaraswamy Distribution (WLKD) is introduced in this paper. We obtain explicit form for the probability density function and derive expressions for distribution function, characteristic function and trigonometric moments. Method of maximum likelihood estimation is used for estimation of parameters. The proposed model is also applied to a real data set on repair times and it is established that the WLKD is better than log Kumaraswamy distribution for modeling the data. KEYWORDS: Log Kumaraswamy-Geometric distribution, Wrapped Log Kumaraswamy distribution, Trigonometric moments. 1. Introduction Kumaraswamy (1980) introduced a two-parameter distribution over the support [0, 1], called Kumaraswamy distribution (KD), for double bounded random processes for hydrological applications. The Kumaraswamy distribution is very similar to the Beta distribution, but has the important advantage of an invertible closed form cumulative [...] a test, atmospheric temperatures, hydrological data, etc. Also, this distribution could be appropriate in situations where scientists use probability distributions which have infinite lower and (or) upper bounds to fit data, whereas in reality the bounds are finite. Gupta and Kirmani (1988) discussed the connection between non-homogeneous Poisson process (NHPP) and record values.

Chapter 2 Parameter Estimation for Wrapped Distributions

ABSTRACT RAVINDRAN, PALANIKUMAR. Bayesian Analysis of Circular Data Using Wrapped Distributions. (Under the direction of Associate Professor Sujit K. Ghosh). Circular data arise in a number of different areas such as geological, meteorological, biological and industrial sciences. We cannot use standard statistical techniques to model circular data, due to the circular geometry of the sample space. One of the common methods used to analyze such data is the wrapping approach. Using the wrapping approach, we assume that, by wrapping a probability distribution from the real line onto the circle, we obtain the probability distribution for circular data. This approach creates a vast class of probability distributions that are flexible to account for different features of circular data. However, the likelihood-based inference for such distributions can be very complicated and computationally intensive. The EM algorithm used to compute the MLE is feasible, but is computationally unsatisfactory. Instead, we use Markov Chain Monte Carlo (MCMC) methods with a data augmentation step, to overcome such computational difficulties. Given a probability distribution on the circle, we assume that the original distribution was distributed on the real line, and then wrapped onto the circle. If we can unwrap the distribution off the circle and obtain a distribution on the real line, then the standard statistical techniques for data on the real line can be used. Our proposed methods are flexible and computationally efficient to fit a wide class of wrapped distributions. Furthermore, we can easily compute the usual summary statistics. We present extensive simulation studies to validate the performance of our method.
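The wrapping approach described in this abstract can be illustrated with a minimal sketch. It assumes the linear distribution is a normal and approximates the wrapped density by summing the normal density over a finite number of shifts by 2π; the function names, the truncation parameter `n_terms`, and the midpoint-rule check are illustrative choices, not taken from the thesis.

```python
import math


def wrapped_normal_pdf(theta, mu=0.0, sigma=1.0, n_terms=10):
    """Approximate wrapped normal density at angle theta (radians).

    The normal density on the real line is summed over the shifts
    theta + 2*pi*k for k = -n_terms..n_terms (a truncated wrapping sum).
    """
    total = 0.0
    for k in range(-n_terms, n_terms + 1):
        z = (theta - mu + 2.0 * math.pi * k) / sigma
        total += math.exp(-0.5 * z * z)
    return total / (sigma * math.sqrt(2.0 * math.pi))


def integrate_over_circle(pdf, n=2000):
    """Midpoint-rule integral of pdf over [0, 2*pi)."""
    h = 2.0 * math.pi / n
    return sum(pdf((i + 0.5) * h) for i in range(n)) * h


if __name__ == "__main__":
    density = lambda t: wrapped_normal_pdf(t, mu=1.0, sigma=0.8)
    print(integrate_over_circle(density))  # should be very close to 1
```

For moderate values of `sigma` a handful of terms is enough, because the normal tails decay quickly; a heavier-tailed linear distribution would need more terms or a closed-form sum.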

A General Approach for Obtaining Wrapped Circular Distributions Via Mixtures

UC Santa Barbara Previously Published Works Title: A General Approach for obtaining wrapped circular distributions via mixtures Permalink: https://escholarship.org/uc/item/5r7521z5 Journal: Sankhya, 79 Author: Jammalamadaka, Sreenivasa Rao Publication Date: 2017 Peer reviewed eScholarship.org Powered by the California Digital Library University of California Your article is protected by copyright and all rights are held exclusively by Indian Statistical Institute. This e-offprint is for personal use only and shall not be self-archived in electronic repositories. If you wish to self-archive your article, please use the accepted manuscript version for posting on your own website. You may further deposit the accepted manuscript version in any repository, provided it is only made publicly available 12 months after official publication or later and provided acknowledgement is given to the original source of publication and a link is inserted to the published article on Springer's website. The link must be accompanied by the following text: "The final publication is available at link.springer.com". Author's personal copy Sankhyā: The Indian Journal of Statistics 2017, Volume 79-A, Part 1, pp. 133-157 © 2017, Indian Statistical Institute A General Approach for Obtaining Wrapped Circular Distributions via Mixtures S. Rao Jammalamadaka University of California, Santa Barbara, USA Tomasz J. Kozubowski University of Nevada, Reno, USA Abstract We show that the operations of mixing and wrapping linear distributions around a unit circle commute, and can produce a wide variety of circular models. In particular, we show that many wrapped circular models studied in the literature can be obtained as scale mixtures of just the wrapped Gaussian and the wrapped exponential distributions, and inherit many properties from these two basic models.

On Wrapped Binomial Model Characteristics

Mathematics and Statistics 2(7): 231-234, 2014 http://www.hrpub.org DOI: 10.13189/ms.2014.020701 On Wrapped Binomial Model Characteristics S.V.S. Girija1,*, A.V. Dattatreya Rao2, G.V.L.N. Srihari3 1Mathematics, Hindu College, Guntur, India 2Acharya Nagarjuna University, Guntur, India 3Mathematics, Aurora Engineering College, Bhongir *Corresponding Author: [email protected] Copyright © 2014 Horizon Research Publishing All rights reserved. Abstract In this paper, a new discrete circular model, the Wrapped Binomial model is constructed by applying the method of wrapping a discrete linear model. The characteristic function of the Wrapped Binomial Distribution is also derived and the population characteristics are studied. Keywords Characteristic Function, Circular Models, Trigonometric Moments, Wrapped Models 1. Introduction Circular or directional data arise in many diverse fields, such as Biology, Geology, Physics, Meteorology, psychology, Medicine, Image Processing, Political Science, Economics and Astronomy (Mardia and Jupp (2000)). Wrapped circular models (Girija (2010)), stereographic circular models (Phani (2013)) and Offset circular models (Radhika (2014)) provide a rich and very useful class of models for circular as well as l-axial data. [...] the latter case, the probability density function $f(\theta)$ exists and has the following basic properties: $f(\theta) \ge 0$; $\int_0^{2\pi} f(\theta)\,d\theta = 1$; $f(\theta) = f(\theta + 2k\pi)$ for any integer $k$. That is, $f(\theta)$ is periodic with period $2\pi$. 2.1. Wrapped Discrete Circular Random Variables If X is a discrete random variable on the set of integers, then reduction modulo $2\pi m$ ($m \in \mathbb{Z}^+$) wraps the integers on to the group of mth roots of unity which is a sub group of unit circle, i.e. $\Phi = 2\pi x \pmod{2\pi m}$.

On Some Circular Distributions Induced by Inverse Stereographic Projection

On Some Circular Distributions Induced by Inverse Stereographic Projection Shamal Chandra Karmaker A Thesis for The Department of Mathematics and Statistics Presented in Partial Fulfillment of the Requirements for the Degree of Master of Science (Mathematics) at Concordia University Montréal, Québec, Canada November 2016 © Shamal Chandra Karmaker 2016 CONCORDIA UNIVERSITY School of Graduate Studies This is to certify that the thesis prepared By: Shamal Chandra Karmaker Entitled: On Some Circular Distributions Induced by Inverse Stereographic Projection and submitted in partial fulfillment of the requirements for the degree of Master of Science (Mathematics) complies with the regulations of the University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Examiner Dr. Arusharka Sen Examiner Dr. Wei Sun Thesis Supervisor Dr. Y.P. Chaubey Thesis Co-supervisor Dr. L. Kakinami Approved by Chair of Department or Graduate Program Director Dean of Faculty Date Abstract On Some Circular Distributions Induced by Inverse Stereographic Projection Shamal Chandra Karmaker In earlier studies of circular data, mostly circular distributions were considered and many biological data sets were assumed to be symmetric. However, presently interest has increased for skewed circular distributions as the assumption of symmetry may not be meaningful for some data. This thesis introduces three skewed circular models based on inverse stereographic projection, introduced by Minh and Farnum (2003), by considering three different versions of skewed-t considered in the literature, namely Azzalini skewed-t, two-piece skewed-t and Jones and Faddy skewed-t. Shape properties of the resulting distributions along with estimation of parameters using maximum likelihood are discussed in this thesis.

Journal of Environmental Statistics August 2014, Volume 6, Issue 2

Journal of Environmental Statistics August 2014, Volume 6, Issue 2. http://www.jenvstat.org Wrapped variance gamma distribution with an application to Wind Direction Mian Arif Shams Adnan (Jahangirnagar University), Shongkour Roy (Jahangirnagar University and icddr,b) Abstract Since some important variables are axial in weather study such as turbulent wind direction, the study of variance gamma distribution in case of circular data can be an amiable perspective; as such the "Wrapped variance gamma" distribution along with its probability density function has been derived. Some of the other wrapped distributions have also been unfolded through proper specification of the concern parameters. Explicit forms of trigonometric moments, related parameters and some other properties of the same distribution are also obtained. As an example the methods are applied to a data set which consists of the wind directions of a Black Mountain ACT. Keywords: Directional data, Modified Bessel function, Trigonometric Moments, Watson's U² statistic. 1. Introduction An axis is an undirected line where there is no reason to distinguish one end of the line from the other (Fisher 1993, p. xvii). Examples of such phenomena include a dance direction of bees, movement of sea stars, fracture in a rock exposure, face-cleat in a coal seam, long-axis orientations of feldspar laths in basalt, horizontal axes of outwash pebbles and orientations of rock cores (Fisher 1993). To model axial data, Arnold and SenGupta (2006) discussed a method of construction for axial distributions, which is seen as a wrapping of circular distribution. While in real line, variance gamma distribution was first derived by Madan and Seneta (1990).

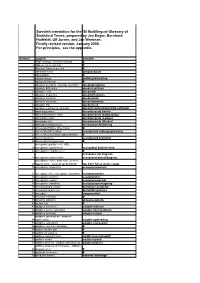

Swedish Translation for the ISI Multilingual Glossary of Statistical Terms, Prepared by Jan Enger, Bernhard Huitfeldt, Ulf Jorner, and Jan Wretman

Swedish translation for the ISI Multilingual Glossary of Statistical Terms, prepared by Jan Enger, Bernhard Huitfeldt, Ulf Jorner, and Jan Wretman. Finally revised version, January 2008. For principles, see the appendix.

Multimodal Circular Filtering Using Fourier Series

Multimodal Circular Filtering Using Fourier Series Florian Pfaff, Gerhard Kurz, and Uwe D. Hanebeck Intelligent Sensor-Actuator-Systems Laboratory (ISAS) Institute for Anthropomatics and Robotics Karlsruhe Institute of Technology (KIT), Germany Abstract—Recursive filtering with multimodal likelihoods and transition densities on periodic manifolds is, despite the compact domain, still an open problem. We propose a novel filter for the circular case that performs well compared to other state-of-the-art filters adopted from linear domains. The filter uses a limited number of Fourier coefficients of the square root of the density. This representation is preserved throughout filter and prediction steps and allows obtaining a valid density at any point in time. Additionally, analytic formulae for calculating Fourier coefficients of the square root of some common circular densities are provided. In our evaluation, we show that this new filter performs well in both unimodal and multimodal scenarios while requiring only a reasonable number of coefficients. Keywords—Directional statistics, density estimation, nonlinear filtering, recursive Bayesian estimation. I. INTRODUCTION Although many users are unaware of the assumption, a lot of results in Bayesian statistics rely on a linear topology of the underlying domain. While a wide range of tools have emerged in the field of estimation for linear domains, many of them perform significantly worse on periodic domains. [...] operation. Therefore, approximations are necessary in either the prediction or filter step to maintain such a representation. Figure 1. A von Mises distribution with κ = 10 and corresponding approximations using 7 Fourier coefficients.
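The idea of keeping a few Fourier coefficients of the square root of a density can be sketched as follows. This is an illustration under stated assumptions, not the authors' filter: the coefficients are computed numerically on a grid rather than with the analytic formulae the abstract mentions, "7 coefficients" is read as the indices k = -3..3, and all function names are hypothetical. Squaring the truncated series keeps the approximation non-negative, and Parseval's identity is used to renormalise it so that it integrates to one.

```python
import cmath
import math


def von_mises_unnormalised(theta, mu=0.0, kappa=10.0):
    """Von Mises density up to its normalising constant."""
    return math.exp(kappa * math.cos(theta - mu))


def sqrt_density_coeffs(density, n_coeffs=7, n_grid=512):
    """Fourier coefficients c_k, k = -K..K, of sqrt(density), renormalised.

    K = (n_coeffs - 1) // 2 (an assumed reading of "7 coefficients").
    The coefficients are scaled so that the squared series integrates to 1,
    using the identity: integral of g^2 over the circle = 2*pi * sum |c_k|^2.
    """
    K = (n_coeffs - 1) // 2
    grid = [2.0 * math.pi * j / n_grid for j in range(n_grid)]
    root = [math.sqrt(density(t)) for t in grid]
    coeffs = {}
    for k in range(-K, K + 1):
        # Discrete approximation of (1 / 2*pi) * integral of g(t) * exp(-i*k*t) dt
        coeffs[k] = sum(r * cmath.exp(-1j * k * t) for r, t in zip(root, grid)) / n_grid
    norm = math.sqrt(2.0 * math.pi * sum(abs(c) ** 2 for c in coeffs.values()))
    return {k: c / norm for k, c in coeffs.items()}


def evaluate_density(coeffs, theta):
    """Square of the truncated series, which is non-negative by construction."""
    root = sum(c * cmath.exp(1j * k * theta) for k, c in coeffs.items())
    return root.real ** 2


if __name__ == "__main__":
    c = sqrt_density_coeffs(von_mises_unnormalised)
    n = 64
    total = sum(evaluate_density(c, 2.0 * math.pi * j / n) for j in range(n)) * 2.0 * math.pi / n
    print(total)  # should be very close to 1
```

Truncating the Fourier series of the density itself can produce negative values; working with the square root avoids this at the cost of a squaring step whenever the density is evaluated.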

Generalization of Polynomial Chaos for Estimation of Angular Random Variables

Scholars' Mine Doctoral Dissertations Student Theses and Dissertations Summer 2020 Generalization of polynomial chaos for estimation of angular random variables Christine Louise Schmid Follow this and additional works at: https://scholarsmine.mst.edu/doctoral_dissertations Part of the Aerospace Engineering Commons Department: Mechanical and Aerospace Engineering Recommended Citation Schmid, Christine Louise, "Generalization of polynomial chaos for estimation of angular random variables" (2020). Doctoral Dissertations. 2919. https://scholarsmine.mst.edu/doctoral_dissertations/2919 This thesis is brought to you by Scholars' Mine, a service of the Missouri S&T Library and Learning Resources. This work is protected by U. S. Copyright Law. Unauthorized use including reproduction for redistribution requires the permission of the copyright holder. For more information, please contact [email protected]. GENERALIZATION OF POLYNOMIAL CHAOS FOR ESTIMATION OF ANGULAR RANDOM VARIABLES by CHRISTINE LOUISE SCHMID A DISSERTATION Presented to the Graduate Faculty of the MISSOURI UNIVERSITY OF SCIENCE AND TECHNOLOGY In Partial Fulfillment of the Requirements for the Degree DOCTOR OF PHILOSOPHY in AEROSPACE ENGINEERING 2020 Approved by: Kyle J. DeMars, Advisor Jacob E. Darling Serhat Hosder Robert Paige Henry Pernicka Copyright 2020 CHRISTINE LOUISE SCHMID All Rights Reserved ABSTRACT The state of a dynamical system will rarely be known perfectly, requiring the variable elements in the state to become random variables. More accurate estimation of the uncertainty in the random variable results in a better understanding of how the random variable will behave at future points in time. Many methods exist for representing a random variable within a system including a polynomial chaos expansion (PCE), which expresses a random variable as a linear combination of basis polynomials.

Wrapped Hb-Skewed Laplace Distribution and Its Application in Meteorology

Biometrics & Biostatistics International Journal Research Article Open Access Wrapped hb-skewed laplace distribution and its application in meteorology Volume 7 Issue 6 - 2018 Jisha Varghese, KK Jose Department of Statistics, Mahatma Gandhi University, India Correspondence: KK Jose, Department of Statistics, St. Thomas College, Mahatma Gandhi University, Kottayam, Kerala 686 574, India Received: November 08, 2017 | Published: November 20, 2018 Abstract In this paper, we introduce a new circular distribution called Wrapped Holla and Bhattacharya’s (HB) Skewed Laplace distribution. We obtain explicit form for the density and derive expressions for distribution function, characteristic function and trigonometric moments. Method of maximum likelihood estimation is used for estimation of parameters. The methods are applied to a data set which consists of the wind directions of a Black Mountain ACT and compared with that of wrapped variance gamma distribution (WvG) and generalised von Mises (GvM) distribution. Keywords: circular distribution, laplace distribution, hb skewed laplace, trigonometric moments, meteorology, wind direction Introduction Circular or directional data arise in different ways. The two main ways correspond to the two principal circular measuring instruments, the compass and the clock. Typical observations measured by the compass include wind directions and directions of migrating birds. Data of a similar type arise from measurements by spirit level or protractor. [...] study of circular distribution because of their wide applicability. Jammalamadakka & Kozubowski3 discussed circular distributions obtained by wrapping the classical exponential and Laplace distributions on the real line around the circle. Rao et al.,4 derived new circular models by wrapping the well known life testing models like log normal, logistic, Weibull and extreme-value distributions. Roy & Adnan5 developed a new class of circular distributions namely

On Initial Direction, Orientation and Discreteness in the Analysis of Circular Variables

On initial direction, orientation and discreteness in the analysis of circular variables Gianluca Mastrantonio Department of Economics, University of Roma Tre and Giovanna Jona Lasinio Department of Statistical Sciences, University of Rome “Sapienza” and Antonello Maruotti Department of Economic, Political Sciences and Modern Languages, LUMSA and Gianfranco Calise Department of Earth Science, University of Rome “Sapienza” arXiv:1509.08638v1 [stat.ME] 29 Sep 2015 Abstract In this paper, we propose a discrete circular distribution obtained by extending the wrapped Poisson distribution. This new distribution, the Invariant Wrapped Poisson (IWP), enjoys numerous advantages: simple tractable density, parameter-parsimony and interpretability, good circular dependence structure and easy random number generation thanks to known marginal/conditional distributions. Existing discrete circular distributions strongly depend on the initial direction and orientation, i.e. a change of the reference system on the circle may lead to misleading inferential results. We investigate the invariance properties, i.e. invariance under change of initial direction and of the reference system orientation, for several continuous and discrete distributions. We prove that the introduced IWP distribution satisfies these two crucial properties. We estimate parameters in a Bayesian framework and provide all computational details to implement the algorithm. Inferential issues related to the invariance properties are discussed through numerical examples on artificial and real