Interactive and Explorable 360 VR System with Visual Guidance User Interfaces

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Zoomtext Quick Reference Guide Version 10

ZoomText Quick Reference Guide version 10 Contents Welcome to ZoomText 10 ................ 3 Cursor Enhancements ............................24 System Requirements ............................... 4 Focus Enhancements ..............................25 Installing ZoomText ................................. 5 Font Enhancements .................................26 Activating ZoomText ............................... 6 Desktop Finder ........................................27 Starting ZoomText .................................... 8 Web Finder ...............................................28 ZoomText User Interface ......................... 9 Text Finder ...............................................31 Enabling and Disabling ZoomText ...... 11 Smooth Panning ......................................33 Magnifier Toolbar .......................... 12 Reader Toolbar .............................. 35 Setting the Magnification level ............. 13 Turning Speech On and Off ...................36 Selecting a Zoom Window .................... 14 Adjusting the Speech Rate .....................37 Adjusting a Zoom Window ................... 16 Synthesizer Settings ................................38 Using the Freeze Window ..................... 17 Typing Echo .............................................39 Using View Mode ................................... 18 Mouse Echo ..............................................40 Dual Monitor Support ............................ 19 Verbosity...................................................41 Color Enhancements ............................. -

2020 IEEE Conference on Virtual Reality and 3D User Interfaces

2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR 2020) Atlanta , Georgia, USA 22 – 26 March 2020 IEEE Catalog Number: CFP20VIR-POD ISBN: 978-1-7281-5609-5 Copyright © 2020 by the Institute of Electrical and Electronics Engineers, Inc. All Rights Reserved Copyright and Reprint Permissions: Abstracting is permitted with credit to the source. Libraries are permitted to photocopy beyond the limit of U.S. copyright law for private use of patrons those articles in this volume that carry a code at the bottom of the first page, provided the per-copy fee indicated in the code is paid through Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923. For other copying, reprint or republication permission, write to IEEE Copyrights Manager, IEEE Service Center, 445 Hoes Lane, Piscataway, NJ 08854. All rights reserved. *** This is a print representation of what appears in the IEEE Digital Library. Some format issues inherent in the e-media version may also appear in this print version. IEEE Catalog Number: CFP20VIR-POD ISBN (Print-On-Demand): 978-1-7281-5609-5 ISBN (Online): 978-1-7281-5608-8 ISSN: 2642-5246 Additional Copies of This Publication Are Available From: Curran Associates, Inc 57 Morehouse Lane Red Hook, NY 12571 USA Phone: (845) 758-0400 Fax: (845) 758-2633 E-mail: [email protected] Web: www.proceedings.com 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) VR 2020 Table of Contents General Chairs Message xix Conference Paper Program Chairs Message xx IEEE Visualization and Graphics Technical -

An Empirical Study of Virtual Reality Menu Interaction and Design

Mississippi State University Scholars Junction Theses and Dissertations Theses and Dissertations 4-30-2021 An empirical study of virtual reality menu interaction and design Emily Salmon Wall [email protected] Follow this and additional works at: https://scholarsjunction.msstate.edu/td Recommended Citation Wall, Emily Salmon, "An empirical study of virtual reality menu interaction and design" (2021). Theses and Dissertations. 5161. https://scholarsjunction.msstate.edu/td/5161 This Dissertation - Open Access is brought to you for free and open access by the Theses and Dissertations at Scholars Junction. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of Scholars Junction. For more information, please contact [email protected]. Template C with Schemes v4.1 (beta): Created by L. 11/15/19 An empirical study of virtual reality menu interaction and design By TITLE PAGE Emily Salmon Wall Approved by: Reuben F. Burch V (Major Professor) Michael Hamilton Daniel Carruth Brian Smith Ginnie Hsu Linkan Bian (Graduate Coordinator) Jason M. Keith (Dean, Bagley College of Engineering) A Dissertation Submitted to the Faculty of Mississippi State University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Industrial and Systems Engineering in the Department of Industrial and Systems Engineering Mississippi State, Mississippi April 2021 Copyright by COPYRIGHT PAGE Emily Salmon Wall 2021 Name: Emily Salmon Wall ABSTRACT Date of Degree: April 30, 2021 Institution: Mississippi State University Major Field: Industrial and Systems Engineering Major Professor: Reuben F. Burch V Title of Study: An empirical study of virtual reality menu interaction and design Pages in Study: 181 Candidate for Degree of Doctor of Philosophy This study focused on three different menu designs each with their own unique interactions and organizational structures to determine which design features would perform the best. -

Real-Time 3D Graphic Augmentation of Therapeutic Music Sessions for People on the Autism Spectrum

Real-time 3D Graphic Augmentation of Therapeutic Music Sessions for People on the Autism Spectrum John Joseph McGowan Submitted in partial fulfilment of the requirements of Edinburgh Napier University for the degree of Doctor of Philosophy October 2018 Declaration I, John McGowan, declare that the work contained within this thesis has not been submitted for any other degree or professional qualification. Furthermore, the thesis is the result of the student’s own independent work. Published material associated with the thesis is detailed within the section on Associate Publications. Signed: Date: 12th October 2019 J J McGowan Abstract i Abstract This thesis looks at the requirements analysis, design, development and evaluation of an application, CymaSense, as a means of improving the communicative behaviours of autistic participants through therapeutic music sessions, via the addition of a visual modality. Autism spectrum condition (ASC) is a lifelong neurodevelopmental disorder that can affect people in a number of ways, commonly through difficulties in communication. Interactive audio-visual feedback can be an effective way to enhance music therapy for people on the autism spectrum. A multi-sensory approach encourages musical engagement within clients, increasing levels of communication and social interaction beyond the sessions. Cymatics describes a resultant visualised geometry of vibration through a variety of mediums, typically through salt on a brass plate or via water. The research reported in this thesis focuses on how an interactive audio-visual application, based on Cymatics, might improve communication for people on the autism spectrum. A requirements analysis was conducted through interviews with four therapeutic music practitioners, aimed at identifying working practices with autistic clients. -

Manipulation of 3D Objects in Immersive Virtual Environments

PhD Dissertation Proposal Manipulation of 3D Objects in Immersive Virtual Environments Daniel Filipe Martins Tavares Mendes [email protected] September 2015 Abstract Interactions within virtual environments (VE) often require manipu- lation of 3D virtual objects. For this purpose, several research have been carried out focusing on mouse, touch and gestural input. While exist- ing mid-air gestures in immersive VE (IVE) can offer natural manipula- tions, mimicking interactions with physical objects, they cannot achieve the same levels of precision attained with mouse-based applications for computer-aided design (CAD). Following prevailing trends in previous research, such as degrees-of- freedom (DOF) separation, virtual widgets and scaled user motion, we intend to explore techniques for IVEs that combine the naturalness of mid-air gestures and the precision found in traditional CAD systems. In this document we survey and discuss the state-of-the-art in 3D object manipulation. With challenges identified, we present a research proposal and corresponding work plan. 1 Contents 1 Introduction 3 2 Background and Related Work 4 2.1 Key players . .4 2.2 Relevant events and journals . .5 2.3 Virtual Environments Overview . .7 2.4 Mouse and Keyboard based 3D Interaction . .9 2.5 3D Manipulation on Interactive Surfaces . 12 2.6 Touching Stereoscopic Tabletops . 16 2.7 Mid-Air Interactions . 17 2.8 Within Immersive Virtual Environments . 20 2.9 Discussion . 25 3 Research Proposal 28 3.1 Problem . 28 3.2 Hypotheses . 28 3.3 Objectives . 29 4 Preliminary Study of Mid-Air Manipulations 31 4.1 Implemented Techniques . 31 4.2 User Evaluation . -

UI Design and Interaction Guide for Windows Phone 7

UI Design and Interaction Guide 7 for Windows Phone 7 July 2010 Version 2.0 UI Design and Interaction Guide for Windows Phone 7 July 2010 Version 2.0 This is pre-release documentation and is subject to change in future releases. This document supports a preliminary release of a software product that may be changed substantially prior to final commercial release. This docu- ment is provided for informational purposes only and Microsoft makes no warranties, either express or implied, in this document. Information in this document, including URL and other Internet Web site references, is subject to change without notice. The entire risk of the use or the results from the use of this document remains with the user. Unless otherwise noted, the companies, organizations, products, domain names, e-mail addresses, logos, people, places, and events depicted in examples herein are fictitious. No association with any real company, organization, product, domain name, e-mail address, logo, person, place, or event is intended or should be inferred. Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation. Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this docu- ment. Except as expressly provided in any written license agreement from Microsoft, the furnishing of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property. -

Programming Pattern PF

PHP PROGRAMMING PATTERN FOR THE PROGRESS FEEDBACK USABILITY MECHANISM Drafted by Francy Diomar Rodríguez, Software and Systems PhD Student, Facultad de Informática. Universidad Politécnica de Madrid. ([email protected]) Content 1 Introduction ..................................................................................................................... 1 2 Progress Feedback UM design pattern ............................................................................. 1 3 Progress Feedback UM Pattern (PHP) ............................................................................. 3 1 Introduction This document presents an implementation-oriented design pattern and the respective programming pattern developed in PHP for the Progress Feedback usability mechanism (UM). 2 Progress Feedback UM design pattern This is a design pattern for implementing the Progress Feedback usability mechanism. The Solution section details the responsibilities that the usability functionality has to fulfil. The Structure section contains the proposed design and the Implementation section describes the steps necessary for codifying the proposed design. NAME Progress Feedback UM PROBLEM When a system process is likely to block the UI for longer than two seconds, the user must be informed about the progress of the requested task. CONTEXT Highly interactive web applications and time-consuming processes that interrupt the UI for longer than two seconds SOLUTION Components are required to fulfil the responsibilities associated with the UM. They are: A component that display the right progress indicator depending on the available information: time, percentage, number of units processed, task completed, if no quantities are known: Indeterminate Progress Indicator. Generate a server-side mechanism for the active process to update and report progress Create a cyclical process that queries the progress of a task until completion. A component to inform the user of task completion. A component that display the completion message and close the progress indicator. -

Progressbar for ASP.NET AJAX Copyright 1987-2010 Componentone LLC

ComponentOne ProgressBar for ASP.NET AJAX Copyright 1987-2010 ComponentOne LLC. All rights reserved. Corporate Headquarters ComponentOne LLC 201 South Highland Avenue 3rd Floor Pittsburgh, PA 15206 ∙ USA Internet: [email protected] Web site: http://www.componentone.com Sales E-mail: [email protected] Telephone: 1.800.858.2739 or 1.412.681.4343 (Pittsburgh, PA USA Office) Trademarks The ComponentOne product name is a trademark and ComponentOne is a registered trademark of ComponentOne LLC. All other trademarks used herein are the properties of their respective owners. Warranty ComponentOne warrants that the original CD (or diskettes) are free from defects in material and workmanship, assuming normal use, for a period of 90 days from the date of purchase. If a defect occurs during this time, you may return the defective CD (or disk) to ComponentOne, along with a dated proof of purchase, and ComponentOne will replace it at no charge. After 90 days, you can obtain a replacement for a defective CD (or disk) by sending it and a check for $25 (to cover postage and handling) to ComponentOne. Except for the express warranty of the original CD (or disks) set forth here, ComponentOne makes no other warranties, express or implied. Every attempt has been made to ensure that the information contained in this manual is correct as of the time it was written. We are not responsible for any errors or omissions. ComponentOne’s liability is limited to the amount you paid for the product. ComponentOne is not liable for any special, consequential, or other damages for any reason. -

The Data Surface Interaction Paradigm

EG UK Theory and Practice of Computer Graphics (2005) L. Lever, M. McDerby (Editors) The Data Surface Interaction Paradigm Rikard Lindell and Thomas Larsson Department of Computer Science Mälardalen University Sweden Abstract This paper presents, in contrasts to the desktop metaphor, a content centric data surface interaction paradigm for graphical user interfaces applied to music creativity improvisation. Issues taken into account were navigation and retrieval of information, collaboration, and creative open-ended tasks. In this system there are no windows, icons, menus, files or applications. Content is presented on an infinitely large two-dimensional surface navigated by incremental search, zoom, and pan. Commands are typed aided by contextual help, visual feedback, and text completion. Components provide services related to different content modalities. Synchronisation of data surface content sustains mutual awareness of actions and mutual modifiability. The prototype music tool was evaluated with 10 users; it supported services expected by users, their creativity in action, and awareness in collaboration. User responses to the prototype tool were: It feels free, it feels good for creativity, and it’s easy and fun to use. Categories and Subject Descriptors (according to ACM CCS): H.5.2 [Information interfaces and presentation]: User Interfaces 1. Introduction a component framework for creating compound documents that removed the need of monolithic applications on desktop The graphical user interface of today’s computers has its platforms [CDE95]. origin in the findings and inventions of Alan Kay at Xe- rox PARC in the 70s. With it came a lot of interaction ele- With the DSIP users do not have to conduct any explicit ments, techniques, and metaphors to explain and convey the file management. -

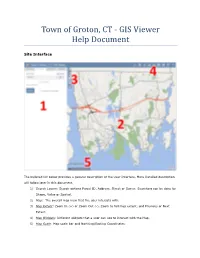

GIS Viewer Help Document

Town of Groton, CT - GIS Viewer Help Document Site Interface The bulleted list below provides a general description of the User Interface. More Detailed description will follow later in this document. 1) Search Layers: Search options Parcel ID, Address, Street or Owner. Searching can be done by Shape, Value or Spatial. 2) Map: The overall map view that the user interacts with. 3) Map Extent: Zoom In (+) or Zoom Out (-), Zoom to full map extent, and Previous or Next Extent. 4) Map Widgets: Different widgets that a user can use to interact with the Map. 5) Map Scale: Map scale bar and Northing/Easting Coordinates. Mouse and Keyboard Navigation • Click and drag to pan • SHIFT + Click to recenter • SHIFT + Drag to zoom in • SHIFT + CTRL + Drag to zoom out • Mouse Scroll Forward to zoom in • Mouse Scroll Backward to zoom out • Use Arrow keys to pan • + key to zoom in a level • - key to zoom out a level • Double Click to Center and Zoom in Touch Screen Navigation: (Only for Smartphones and Tablets) • Touch and drag to pan • Double tap or unpinch to zoom in • Pinch to zoom out. Click on the Town of Groton Seal (upper left corner) will bring you to the town’s website. Search Layers This widget can be searched By Shape or By Value. By Value The By Value view has a dropdown list of predefined search layers. Selecting a search layer updates the dropdown list of search aliases for that layer. In the search bar type in the Parcel ID, Address, Street or Owner that you're looking for. -

The Openxr Specification

The OpenXR Specification Copyright (c) 2017-2021, The Khronos Group Inc. Version 1.0.19, Tue, 24 Aug 2021 16:39:15 +0000: from git ref release-1.0.19 commit: 808fdcd5fbf02c9f4b801126489b73d902495ad9 Table of Contents 1. Introduction . 2 1.1. What is OpenXR?. 2 1.2. The Programmer’s View of OpenXR. 2 1.3. The Implementor’s View of OpenXR . 2 1.4. Our View of OpenXR. 3 1.5. Filing Bug Reports. 3 1.6. Document Conventions . 3 2. Fundamentals . 5 2.1. API Version Numbers and Semantics. 5 2.2. String Encoding . 7 2.3. Threading Behavior . 7 2.4. Multiprocessing Behavior . 8 2.5. Runtime . 8 2.6. Extensions. 9 2.7. API Layers. 9 2.8. Return Codes . 16 2.9. Handles . 23 2.10. Object Handle Types . 24 2.11. Buffer Size Parameters . 25 2.12. Time . 27 2.13. Duration . 28 2.14. Prediction Time Limits . 29 2.15. Colors. 29 2.16. Coordinate System . 30 2.17. Common Object Types. 33 2.18. Angles . 36 2.19. Boolean Values . 37 2.20. Events . 37 2.21. System resource lifetime. 42 3. API Initialization. 43 3.1. Exported Functions . 43 3.2. Function Pointers . 43 4. Instance. 47 4.1. API Layers and Extensions . 47 4.2. Instance Lifecycle . 53 4.3. Instance Information . 58 4.4. Platform-Specific Instance Creation. 60 4.5. Instance Enumerated Type String Functions. 61 5. System . 64 5.1. Form Factors . 64 5.2. Getting the XrSystemId . 65 5.3. System Properties . 68 6. Path Tree and Semantic Paths. -

CAP 6121: 3D User Interfaces for Games and Virtual Reality Spring 2011; MW 4:30Pm-5:45Pm HEC -0111

1 CAP 6121: 3D User Interfaces for Games and Virtual Reality Spring 2011; MW 4:30pm-5:45pm HEC -0111 Instructor: Dr. Joseph J. LaViola Jr. Website: www.eecs.ucf.edu/courses/cap6121/spr11/ Office Hours: T: 4:00pm-5:30pm Office: ENGRIII – 321, phone: x2285 W: 5:45pm-6:45pm [email protected] READINGS: Text: Bowman, D., Kruijff, E., LaViola, J., and Poupyrev, I. 3D User Interfaces: Theory and Practice, Addison Wesley, July 2004. Creighton, Ryan H. Unity 3D Game Development by Example, Packt Publishing, 2010. Papers: student/professor selected research papers Catalog Description: 3D user interaction, spatial user interfaces, selection and manipulation, 3D navigation, system control, evaluation methodologies, augmented and mixed reality, input and output hardware Course Objectives: 3D User Interfaces for Games and Virtual Reality is a course designed to give students a rigorous introduction to the design, implementation, and evaluation of the fundamental techniques in spatial 3D interaction. Student Requirements: 1. Star Wars Game -- Students will create a lightsaber game where they control the saber with and use the force using the Microsoft Kinect. 2. 3D Pac-Man -- Students will create a 3D Pac-Man game where they will travel through a maze using the using the Microsoft Kinect. 3. Game UI paper -- Students will examine existing 3D user interaction techniques and see how they can be applied in a video game setting. 4. Paper Presentations -- Students will have to present at least one paper on a topic in 3DUIs. 5. Final Project -- Students will do a final project of their choice that explores a particular concept in 3D user interfaces tailored to video games.