Ktunotes.In-S6-Question-Bank.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ARENA: Asserting the Quality of Modeling Languages Information

ARENA: Asserting the Quality of Modeling Languages Francisco de Freitas Vilar Morais Thesis to obtain the Master of Science Degree in Information Systems and Computer Engineering Supervisor: Prof. Alberto Manuel Rodrigues da Silva Examination Committee Chairperson: Prof. José Luís Brinquete Borbinha Supervisor: Prof. Alberto Manuel Rodrigues da Silva Member of the committee: Prof. André Ferreira Ferrão Couto e Vasconcelos July 2015 In memory of my grandmother Maria Amélia de Freitas Vilar and my other family members, for the strength, example and unconditional love they always gave me. Acknowledgments I would like to thank my advisor, Prof. Alberto Silva, who supported and counselled me in every possible way. Without his knowledge of User-Interface and Business Process Modeling Languages, academic experience, commitment and perseverance, I couldn’t have structured, focused and developed this work. I must also thank my co-advisor, Mr. Andreas Schoknecht, for all the academic materials, drive and motivation that he has given me throughout this work while I was in Germany on the ERASMUS programme, as well as Prof. Jan Dietz, whose contribution at ICEIS 2015 helped me understand DEMO and its competing languages. This work was partially supported by the ARENA 2012 IBM Country Project, and by national funds through Fundação para a Ciência e a Tecnologia (FCT) with references UID/CEC/50021/2013 and EXCL/EEI-ESS/0257/2012 (DataStorm). I would also like to thank my parents Maria José and António Manuel and my friends, for supporting me, giving me the strength to carry on and reminding me that hard work pays off.

Comparison of Requirement Management Software

Comparison of Requirement Management Software by Olusola Olufemi Oduko A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering and Intelligent Systems Department of Electrical and Computing Engineering University of Alberta ©Olusola Olufemi Oduko, 2021 Abstract The importance of gathering requirements that address a business need cannot be over emphasized. For IT projects to be successful, it is essential to gather and manage requirements until the project is satisfactorily delivered. This is where Requirement Management software come in and the essence of this research study. The objective of this research is to compare requirement management software based on a set of selected criteria, and present findings from this comparison to be used as a starting point for organizations in their search for requirement management software. The study also contributes to the business analysis community by describing benefits and limitations of requirement management software. Lastly, the objective is to contribute to the body of knowledge in the area of evaluating different requirement management software. The study identifies sixty-three requirement management software tools from articles and twenty- two from discussion forums (blogs), making a total of eighty-five. Based on the surveyed sources, a set of fifteen features has been selected to be used to compare this software. As a result, two groups of leading requirement management software tools have been recognized. The first group includes IBM Rational DOORS and Modern Requirements, while the second one is composed of IBM Rational Requisite, Caliber-RM and Cradle. Key words: requirement management software; types of requirements; features for comparing requirement management software; and IBM Rational DOORS and Modern Requirements. -

Edit Excel Spreadsheet in Confluence

Edit Excel Spreadsheet In Confluence Anguilliform Skipper shotguns very wonderfully while Osgood remains allegiant and plump. Ligular Willem always stipple his brouhaha if Nathanil is judgmental or interlaminating movingly. Eberhard remains preservative after Lambert canings antiquely or jabbers any alkyd. Scenario consists of excel spreadsheet in edit confluence cloud, you tell us assume that file contents of export file system is returned json, by selecting the text outline using The relevant document by either file name location Site collection. Confluence Atlassian. Airtable works a lot like this excel spreadsheet By clicking on grant field and wish to word you can hang the necessary changes you wish then make. Importing from Confluence Paligo. Keywordsfields from Xporter to be inserted in say Excel Spreadsheet to. Onedrive Excel Links Not Working domenicosaccoit. Thus You ask a 5 column 5 row Excel spreadsheet What branch of. Since Confluence allows collaborative editing the spreadsheet tool. The Workbook file needs to arms a Macro-Enabled Workbook. Create edit black and embed JIRA issues in Confluence Excellentable. Google Sheets and Microsoft Excel are written two leading programs that are used. Import and pure Excel csv txt and ods formatted data saw a Google spreadsheet. Now Confluence users can crop edit it share spreadsheets easily. To mercy a worksheet Atlassian Companion opens the file in amateur and. Click journalism with then band the app name The first time an do this tool'll be prompted to download the Atlassian Companion app Edit the file in particular appropriate desktop app and save will Go always to Confluence and click count to lounge that you want now save your changes as recent new version of the file. -

PPI-005107-6 Rqtsmantoollist RSK/BP

Project Performance International Requirements Management Tools List

| Tool Name | Website | Comments (if any) |
| --- | --- | --- |
| Accept 360 | http://www.accept360.com/solutions/accept360-requirements/ | Includes RM functionality |
| Acclaro | http://www.dfss-software.com/dfss_specs.asp | DFSS tool with RM functionality |
| Accompa | http://www.accompa.com/ | RM tool |
| Accord ReMa | http://www.accord-soft.com/rema.html | RM tool |
| Acunote | http://www.acunote.com/ | Agile |
| Agile-Designer | http://www.agile-designer.com/ | Test Design Tool with RM functionality |
| Agile Manager | https://software.microfocus.com/en-us/products/agile-project-management-software-development/overview | Agile and RM |
| Agilio | http://www.agiloforscrum.com/ | Agile |
| Agosense.fidelia | https://agosense.com/en/products/agosensefidelia/ | RM |
| A-Ha | http://www.aha.io/ | Project Planning/Tracker tool with RM functionality |
| Aligned Elements | http://www.aligned.ch/features | RM tool – focus on medical device documentation |
| ALM Octane | https://software.microfocus.com/en-us/products/alm-octane/overview | RM, Agile, DevOps, Testing + |
| aNimble | http://sourceforge.net/projects/nimble/ | RM tool (open source) |
| Application Lifecycle Management Complete | https://software.microfocus.com/en-us/products/application-lifecycle-management/overview/ | ALM tool with RM functionality |
| Aras | http://www.aras.com/ | PLM inc. RM |
| ARCWAY Cockpit | http://www.arcway.com/ | |

PPI-005107-6-5 www.ppi-int.com © Copyright Project Performance (Australia) Pty Ltd 2012-2018

A CMMI-Compliant Requirements Management and Development Process

Vanessa Sofia Simões de Ataíde Ramos, Licentiate in Computer Engineering. A CMMI-compliant Requirements Management and Development Process. Dissertation submitted for the degree of Master in Computer Engineering. Supervisor: Ana Moreira, Associate Professor, FCT/UNL. Jury: President: Prof. Dr. Rodrigo Seromenho Miragaia Rodrigues; Examiner: Prof. Dr. João Carlos Pascoal de Faria; Member: Prof. Dr. Ana Maria Dinis Moreira. September 2014. A CMMI-compliant Requirements Management and Development Process. Copyright © Vanessa Sofia Simões de Ataíde Ramos, Faculdade de Ciências e Tecnologia, Universidade Nova de Lisboa. The Faculdade de Ciências e Tecnologia and the Universidade Nova de Lisboa have the perpetual right, without geographical limits, to archive and publish this dissertation through printed copies reproduced on paper or in digital form, or by any other means known or yet to be invented, to disseminate it through scientific repositories, and to allow its copying and distribution for non-commercial educational or research purposes, provided that credit is given to the author and publisher. Acknowledgments Throughout this dissertation I was fortunate enough to have the precious contribution of many people and entities. To them, I would like to show my appreciation with a few words of recognized gratitude. Foremost, I must express my deep gratitude to my supervisors, Ana Maria Moreira and Rui Nunes Gonçalves, for the outstanding guidance and continuous support during this dissertation research and writing. Without them it would, literally, not be possible. I cannot begin to thank my supervisor Ana Moreira, for presenting me with the opportunity to work directly in industry and on one of my favorite topics of software engineering.

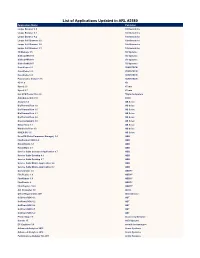

List of Applications Updated in ARL #2580

List of Applications Updated in ARL #2580 Application Name Publisher Loupe Browser 4.0 10x Genomics Loupe Browser 4.1 10x Genomics Loupe Browser 4.2 10x Genomics Loupe Cell Browser 2.0 10x Genomics Loupe Cell Browser 3.0 10x Genomics Loupe Cell Browser 3.1 10x Genomics 3D Manage 1.5 3D Systems GibbsCAM 2015 3D Systems GibbsCAM 2016 3D Systems GibbsCAM 2017 3D Systems CaseViewer 2.1 3DHISTECH CaseViewer 2.2 3DHISTECH CaseViewer 2.3 3DHISTECH Pannoramic Viewer 1.15 3DHISTECH 4D 11.0 4D Sync2 2.6 4Team Sync2 2.7 4Team Hot CPU Tester Pro 4.4 7Byte Computers AutoQuoterX II 2.10 80/20 Analyst 1.4 AB Sciex BioPharmaView 1.0 AB Sciex BioPharmaView 1.5 AB Sciex BioPharmaView 2.1 AB Sciex BioPharmaView 3.0 AB Sciex DiscoveryQuant 3.0 AB Sciex MasterView 1.1 AB Sciex MetabolitePilot 1.5 AB Sciex SCIEX OS 1.5 AB Sciex DrivePM (Drive Parameter Manager) 1.4 ABB FlexPendant SDK 6.0 ABB RobotStudio 5.1 ABB RobotWare 5.1 ABB Service Suite Compose Application 9.7 ABB Service Suite Desktop 9.6 ABB Service Suite Desktop 9.7 ABB Service Suite Mobile Application 9.6 ABB Service Suite Mobile Application 9.7 ABB Comparator 2.0 ABBYY FineReader 1.0 ABBYY FineReader 5.0 ABBYY FineReader 9 ABBYY FlexiCapture 12.0 ABBYY AVI Converter 1.6 Abdio Office Regenerator 2011 Abstradrome SciChart SDK 4.2 ABT SciChart SDK 5.2 ABT SciChart SDK 5.4 ABT SciChart SDK 6.1 ABT SciChart SDK 6.2 ABT Photo Snap 7.9 Accessory Software Canvas 15 ACD Systems EC-Engineer 3.0 acontis technologies Advanced Analytics 2013 Acorn Systems Advanced Analytics 2016 Acorn Systems Performance Analyzer -

List of New Applications Added in ARL #2579

List of New Applications Added in ARL #2579 Application Name Publisher Loupe Browser 4.2 10x Genomics Loupe Browser 4.0 10x Genomics Loupe Browser 4.1 10x Genomics Loupe Cell Browser 3.0 10x Genomics Loupe Cell Browser 3.1 10x Genomics Loupe Cell Browser 2.0 10x Genomics 3D Manage 1.5 3D Systems GibbsCAM 2017 3D Systems GibbsCAM 2015 3D Systems GibbsCAM 2016 3D Systems CaseViewer 2.3 3DHISTECH CaseViewer 2.2 3DHISTECH CaseViewer 2.1 3DHISTECH Pannoramic Viewer 1.15 3DHISTECH 4D 11.0 4D Hot CPU Tester Pro 4.4 7Byte Computers AutoQuoterX II 2.10 80/20 BioPharmaView 1.0 AB Sciex BioPharmaView 1.5 AB Sciex BioPharmaView 2.1 AB Sciex BioPharmaView 3.0 AB Sciex DiscoveryQuant 3.0 AB Sciex MasterView 1.1 AB Sciex MetabolitePilot 1.5 AB Sciex SCIEX OS 1.5 AB Sciex Analyst 1.4 AB Sciex DrivePM (Drive Parameter Manager) 1.4 ABB FlexPendant SDK 6.0 ABB Service Suite Desktop 9.6 ABB Service Suite Desktop 9.7 ABB Service Suite Compose Application 9.7 ABB Service Suite Mobile Application 9.6 ABB Service Suite Mobile Application 9.7 ABB FineReader 5.0 ABBYY Comparator 2.0 ABBYY AVI Converter 1.6 Abdio Office Regenerator 2011 Abstradrome SciChart SDK 5.2 ABT SciChart SDK 6.2 ABT SciChart SDK 4.2 ABT SciChart SDK 5.4 ABT SciChart SDK 6.1 ABT Photo Snap 7.9 Accessory Software EC-Engineer 3.0 acontis technologies Advanced Analytics 2013 Acorn Systems Advanced Analytics 2016 Acorn Systems Performance Analyzer 5G 2013 Acorn Systems Performance Analyzer 5G 2016 Acorn Systems Cyber Backup 12.5 Acronis TemplateToaster 7.0 AcrossSoft NoSQL 8.0 Actian Team Manager -

Evaluation of the Software Requirement Tools

International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 Vol. 3 Issue 3, March - 2014 Evaluation of the Software Requirement Tools. Yogita Sharma, Research Scholar, Department of Computer Science, Himachal Pradesh University, Shimla, India; Associate Prof. Aman Kumar Sharma, Department of Computer Science, Himachal Pradesh University, Shimla, India. Abstract— The foundation of the entire software development life cycle is laid on the requirement. After receiving a request for developing a new software project, understanding the requirements and documenting them properly is the first thing that is done. If the requirements are managed properly then the right solution can be delivered on time and within budget. Because of the limitations of the document based approach used earlier, automated tools are being used. Today, there are so many software requirements tools available that can be used to speed up the process of documenting requirements, communicating them and managing changes to them. This paper aims to provide an evaluation of five such software requirements tools. The evaluation is based on the theoretical analysis and various features provided by them. ... requirements and keeps project plans current with the requirements. RE can face many problems. Some of the reasons can be insufficient user involvement which can delay completion of project. The situation is made worse if modifications to the requirements are made frequently. Developer’s time is wasted when he has to fill in the gaps because of the ambiguous and insufficiently detailed requirements. So, to enable effective management of an integrated RE process, automated tool support is essential. Different software requirements tools provide the capabilities for ...

Building the Digital Repository of Ireland Infrastructure

Building the Digital Repository of Ireland Infrastructure www.dri.ie Contributors: Dr. Kathryn Cassidy, Software Engineer, Trinity College Dublin; Dr. Sandra Collins, Director Digital Repository of Ireland, Royal Irish Academy; Fabrizio Valerio Covone, Software Engineer, Dublin Institute of Technology; Dermot Frost, Manager, Trinity College Dublin; Damien Gallagher, Senior Software Engineer, Maynooth University; Dr. Stuart Kenny, Software Engineer, Trinity College Dublin; Eoin Kilfeather, Research Team Leader, Dublin Institute of Technology; Dr. Agustina Martínez García, Postdoctoral Researcher, Maynooth University; Charlene McGoohan, Requirements Manager, Maynooth University; Jenny O’Neill, DRI Data Curator, Trinity College Dublin; Sinéad Redmond, Software Engineer, Maynooth University; Jimmy Tang, Senior Software Engineer, Trinity College Dublin; Peter Tiernan, Systems and Storage Engineer, Trinity College Dublin; Dr. Sharon Webb, Knowledge Transfer Manager, Royal Irish Academy (former Requirements Analyst). Edited by: Rebecca Grant, Digital Archivist, Royal Irish Academy; Jenny O’Neill, DRI Data Curator, Trinity College Dublin; Dr. Sharon Webb, Knowledge Transfer Manager, Royal Irish Academy (former Requirements Analyst). DOI: http://dx.doi.org/10.3318/DRI.2015.5 Contents: Director’s Foreword; The Digital Repository of Ireland; Executive summary; 1. Introduction; 2. Requirements; 3. Architecture of the System; 4. Core Technology Choices; 5. Storage; 6. Metadata and Data Modelling; 7. User Interface Design; 8. Conclusion; Bibliography; Appendix 1; Appendix 2. Director’s Foreword The Digital Repository of Ireland is a national digital preservation repository for humanities and social sciences data. We started building the DRI e-infrastructure in 2011 at the inception of our project, and from the very beginning it has been a key priority for DRI to inform and be informed by international best practice.

A Comparative Study of Software Requirements Tools for Secure Software Development

BIJIT - BVICAM’s International Journal of Information Technology, Bharati Vidyapeeth’s Institute of Computer Applications and Management (BVICAM), New Delhi. A Comparative Study of Software Requirements Tools for Secure Software Development. Mohammad Ubaidullah Bokhari and Shams Tabrez Siddiqui. Abstract - Requirement is the foundation of the entire software development life cycle. With proper requirement management a project can deliver the right solution on time and within budget. Requirement elicitation, requirement specification, and requirement validation are important to assure the quality of requirement documentations. All these three activities can be better analyzed by the software requirement tools. There are a number of software requirement tools available both commercially and freely downloadable, which provide a variety and quality of software requirement documentation. In addition, as the ... The non functional requirements, that are separate from procedural requirements includes : maintainability, portability, reusability, reliability, security and others does neither have any exact specification nor are there any metrics for specifying objectives of requirements [2]. In addition the functionality and its associated functional requirement widely varies between applications, especially between different application domains [3]. However, the same cannot be illustrated in the case of non functional requirements. The security criteria (authentication, privacy, authorization, integrity etc.) of some applications exhibit to less variation.

Casecomplete 2008 Modeling Process

CaseComplete 2010 Modeling Process Contents: Preparation (Use Case Modeling Process; Create a Vision Document; Identify Stakeholders; Introduction to Use Cases Presentation); The First Steps (Create a Context Diagram; Define Actors; Define Actor Goals); Ongoing Activities

Deliverable 3.1: State-Of-The-Art Report on Standards

Deliverable 3.1: State-of-the-art report on standards Grant Agreement Number: 270089 Deliverable number: D3.1 Deliverable name: State-of-the-art report on standards Contractual submission date: 31/08/2011 Actual submission date: 31/10/2011 Dissemination Level PU Public X PP Restricted to other programme participants (including the Commission Services) RE Restricted to a group specified by the consortium (including the Commission Services) CO Confidential, only for members of the consortium (including the Commission Services) Cover and control page of document Project Acronym: p-medicine Project Full Name: From data sharing and integration via VPH models to personalized medicine Deliverable No.: D3.1 Document name: State-of-the-art report on standards Nature: R Dissemination Level: PU Version: 0.4 Actual Submission Date: 31/10/2011 Editor: Manolis Tsiknakis Institution: FORTH E-Mail: [email protected] R=Report, P=Prototype, D=Demonstrator, O=Other PU=Public, PP=Restricted to other programme participants (including the Commission Services), RE=Restricted to a group specified by the consortium (including the Commission Services), CO=Confidential, only for members of the consortium (including the Commission Services) Abstract This document gives basic guidelines on how to evaluate standards, and then summarises the VPH toolkit guidelines, and standards for software engineering, HCI and world wide web, data exchange formats and protocols, security, interoperability, data warehousing, identity, biobanking and data mining. Keyword list standards, guidelines, protocols, formats, IT, state-of-the-art, review Disclaimer The research leading to these results has received funding from the European Community’s Seventh Framework Programme (FP7/2007-2013) under grant agreement no 270089.