Chapter 1: Some Basic Definitions



Recommended publications


NQ-A4060, NQ-A4120, NQ-A4300 4 Channel Audio Power Amplifiers

4-Channel Audio Power Amplifiers Configuration Manual: NQ-A4060, NQ-A4120, NQ-A4300. 2019 Bogen Communications, Inc. All rights reserved. 740-00099D 191101

Contents
List of Figures
List of Tables
Configuring the Four-Channel Audio Power Amplifiers
1 Using the Dashboard
2 Updating Firmware
3 Setting Network Tab Parameters
4 Setting Configuration Tab Parameters
5 Accessing Log Files
6 Setting DSP Parameters
6.1 Setting the Channel Level
6.2 Signal LED, Clip LED, and VU Meter
6.3 Muting a Channel
6.4 Adjusting Volume Levels
6.5 Adjusting Compression Settings
6.6 Adjusting the Graphic Equalizer
6.7 Setting High/Low Pass Parameters
6.8 Adjusting

PRODUCT CATALOG: Home Control - Loudspeakers - General Products

PRODUCT CATALOG: Home Control - Loudspeakers - General Products

CATALOG NAVIGATION. Products are grouped by category of interest. Sections are differentiated by color coding on the bottom right of each page.

HOME CONTROL: Multi-room audio control, now with lighting and climate, plus remote access.
LOUDSPEAKERS: Architectural audio solutions where you live, work, and play.
GENERAL PRODUCTS: Complete the connected experience here.

CALL 1-800-BUY-HIFI – www.nilesaudio.com

HOME CONTROL. A Heritage of Recognition. The Niles name is synonymous with premier whole home audio solutions. For nearly four decades, Niles has delivered innovative products that enable simple and easy access to home entertainment, and we are now creating audio solutions that seamlessly integrate with lighting and climate control. Niles products enable custom integrators to design and install systems that deliver truly exceptional entertainment solutions for customers.

HOME CONTROL SOLUTIONS: Auriel - One Touch to Control; MRC-6430 Multi-Room Controller; nTP7 Touch Panel; nTP4 Touch Panel; nKP7 Keypad; nHR200 Remote Control.
SYSTEMS INTEGRATION AMPLIFIERS®: 16-Channel Amplifier; 12-Channel Amplifier; 2-Channel Amplifiers.

One Touch to Control. Niles Auriel now adds built-in streaming audio, plus climate and lighting control to the award-winning multi-room audio platform. The result is an exceptional home control experience. The wizard whisks you through simple decisions that quickly configure the system for lighting scenes and thermostat programming, audio sources, zone preferences, user interface customization and home theater control.

15W Stereo Class-D Audio Power Amplifier

TPA3121D2 www.ti.com SLOS537B – MAY 2008 – REVISED JANUARY 2014

15-W STEREO CLASS-D AUDIO POWER AMPLIFIER. Check for Samples: TPA3121D2

FEATURES
• 10-W/Ch Stereo Into an 8-Ω Load From a 24-V Supply
• 15-W/Ch Stereo Into a 4-Ω Load From a 22-V Supply
• 30-W/Ch Mono Into an 8-Ω Load From a 22-V Supply
• Operates From 10 V to 26 V
• Can Run From +24 V LCD Backlight Supply
• Efficient Class-D Operation Eliminates Need for Heat Sinks
• Four Selectable, Fixed-Gain Settings
• Internal Oscillator to Set Class D Frequency (No External Components Required)
• Single-Ended Analog Inputs
• Thermal and Short-Circuit Protection With Auto Recovery
• Space-Saving Surface Mount 24-Pin TSSOP Package
• Advanced Power-Off Pop Reduction

APPLICATIONS
• Flat Panel Display TVs
• DLP® TVs
• CRT TVs
• Powered Speakers

DESCRIPTION
The TPA3121D2 is a 15-W (per channel), efficient, class-D audio power amplifier for driving stereo speakers in a single-ended configuration or a mono speaker in a bridge-tied-load configuration. The TPA3121D2 can drive stereo speakers as low as 4 Ω. The efficiency of the TPA3121D2 eliminates the need for an external heat sink when playing music. The gain of the amplifier is controlled by two gain select pins. The gain selections are 20, 26, 32, and 36 dB. The patented start-up and shutdown sequences minimize pop noise in the speakers without additional circuitry.

[Figure: Simplified application circuit for the TPA3121D2, showing left and right channel inputs (LIN, RIN), outputs (LOUT, ROUT), a 10 V to 26 V supply, shutdown control (SD), mute control (MUTE), and 4-step gain control (GAIN0, GAIN1).]

Please be aware that an important notice concerning availability, standard warranty, and use in critical applications of Texas Instruments semiconductor products and disclaimers thereto appears at the end of this data sheet.
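The four gain settings quoted in the excerpt above (20, 26, 32, and 36 dB) are easier to compare as linear ratios. The following is a minimal sketch, assuming the figures are voltage gains so that the ratio is 10^(dB/20); the function name `db_to_voltage_gain` is hypothetical and is not taken from the data sheet.

```python
def db_to_voltage_gain(gain_db):
    """Convert a voltage gain in decibels to a linear ratio: 10^(dB/20)."""
    return 10.0 ** (gain_db / 20.0)


# Gain settings listed in the TPA3121D2 excerpt (assumed to be voltage gains).
GAIN_SETTINGS_DB = (20, 26, 32, 36)

for gain_db in GAIN_SETTINGS_DB:
    ratio = db_to_voltage_gain(gain_db)
    print(f"{gain_db} dB -> about {ratio:.1f} V/V")
```

Under that assumption, each 6 dB step roughly doubles the output voltage for the same input.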

Sound Waves Displacement

1.4 Sound: Producing sound waves; Speed of sound; Energy and Intensity; Spherical and Plane waves.

Producing sound waves
• Sound waves are longitudinal.
• Produced by compression and rarefaction of media (air) resulting in displacement in the direction of propagation.
• The displacements result in oscillations in density and pressure.

[Figure: displacement, density, and pressure in a sound wave.]

Frequencies of sound wave

[Figure: frequency scale from 10 Hz to 20,000 Hz, divided into infra-sonic, audible sound, and ultra-sonic, with the corresponding wavelengths in air from 30 m to 0.015 m.]

Speed of sound in a fluid

$$v = \sqrt{\frac{B}{\rho}}$$

where $B = -\dfrac{\Delta P}{\Delta V / V}$ is the bulk modulus and $\rho = \dfrac{m}{V}$ is the density. Note the similarity to the speed of a transverse wave on a string:

$$v = \sqrt{\frac{\text{elastic property}}{\text{inertial property}}}$$

Speed of sound in air

$$v = \sqrt{\frac{\gamma P}{\rho}}$$

where $P$ is the pressure, $\rho$ is the density, and $\gamma$ is a constant that depends on the nature of the gas ($\gamma = 7/5$ for air).

Density is higher in water than in air. Why is the speed of sound higher in water than in air?

Since $P$ is proportional to the absolute temperature $T$ by the ideal gas law, $PV = nRT$,

$$v = 331\sqrt{\frac{T}{273}} \ \text{(m/s)}$$

Find the speed of sound in air at 20 °C.

$$v = 331\sqrt{\frac{273 + 20}{273}} = 343 \ \text{m/s}$$

For calculations use $v = 340$ m/s.

Energy and Intensity of sound waves

$$\text{power } P = \frac{\text{energy}}{\text{time}}, \qquad \text{intensity } I = \frac{\text{power}}{\text{area}} = \frac{P}{A} \quad (\text{units W/m}^2)$$

Sound intensity level

The ear is capable of distinguishing a wide range of sound intensities. The decibel is a measure of the sound intensity level:

$$\beta = 10 \log\left(\frac{I}{I_0}\right) \ \text{decibels}$$

where $I_0 = 10^{-12}$ W/m² is the threshold of hearing. Note: the decibel is a logarithmic unit.

What is the intensity of sound at a rock concert? (W/m²)
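The temperature formula and the decibel definition in the excerpt above can be checked numerically. This is a minimal sketch: the function names are hypothetical, and the 1 W/m² input is an arbitrary illustrative value, not a figure from the slides.

```python
import math

I0 = 1e-12  # W/m^2, threshold of hearing as given in the excerpt


def speed_of_sound(temp_celsius):
    """Speed of sound in air, v = 331 * sqrt(T / 273), with T in kelvin."""
    absolute_temp = 273.0 + temp_celsius
    return 331.0 * math.sqrt(absolute_temp / 273.0)


def intensity_level_db(intensity):
    """Sound intensity level, beta = 10 * log10(I / I0), in decibels."""
    return 10.0 * math.log10(intensity / I0)


# Worked example from the excerpt: air at 20 degrees Celsius.
print(f"v at 20 C: {speed_of_sound(20.0):.0f} m/s")

# Illustrative input only (assumption): an intensity of 1 W/m^2.
print(f"level at 1 W/m^2: {intensity_level_db(1.0):.0f} dB")
```

Because the level is logarithmic, multiplying the intensity by 10 adds 10 dB to the level rather than multiplying it.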

Power Demystified Garth Powell

Power Demystified, Garth Powell. 2621 White Road, Irvine CA 92614 USA. Tel 949 585 0111, Fax 949 585 0333. www.audioquest.com

Contents: Introduction; AC Surge Suppression; AC Power Conditioners/LCR Filters; AC Regeneration; AC Isolation Transformers; DC Battery Isolation Devices with AC Inverters or AC Regeneration Amplifiers; AC UPS Battery Backup Devices; AC Voltage Regulators; DC Blocking Devices for AC Power; Harmonic Oscillators for AC Power; AC Resonance/Vibration Dampening; Power Correction for AC Power; Ground Noise Dissipation for AC Power; Appendix: Some Practical Matters to Bear in Mind (I. Source Component and Power Amplifier Current Draw; II. AC Polarity; III. Over-voltage and Under-voltage Conditions); Index.

Introduction. The source that supplies nearly all of our electronic components is alternating current (AC) power. For most, it is enough that they can rely on a service tap from their power utility to supply the voltage and current our audio-video (A/V) components require. In fact, in many parts of the world, the supplied voltage is quite stable, and if the area is free of catastrophic lightning strikes, there are seemingly no AC power problems at all. Obviously, there are areas where AC voltage can both sag and surge to levels well out of the optimum range, and others where electrical storms can potentially damage sensitive electrical equipment. There are many protection devices and AC power technologies that can address those dire circumstances, but too many fail to realize that there is no place on Earth that is supplied adequate AC power for today’s sensitive, high-resolution electronic components.

Exploding the Efficiency Myth of Class D Amplifiers Much Has Been Written About the Efficiency of Class D Amplifiers, with Figures of 90% Or Greater Routinely Quoted

Exploding the efficiency myth of Class D amplifiers (AS-100204-WP, Issue 1)

Much has been written about the efficiency of Class D amplifiers, with figures of 90% or greater routinely quoted. Such numbers might seem to suggest that the efficiency problem of audio amplifiers has been well solved by conventional Class D. However, a closer look shows that this is far from the truth, with these amplifiers frequently seeing only single-digit percentage efficiencies, or less, in real product usage conditions. To address this problem, a new generation of audio amplifier solutions has just emerged, heralding a massive reduction in average power consumption.

The problem. Figure 1 shows a plot of efficiency versus power output for a typical Class D amplifier, but plotted with a logarithmic power axis, rather than the linear scale invariably seen in data sheets. Sure enough, the top right of the graph, corresponding to maximum power output, shows the efficiency reaching almost 90%. However, in typical consumer usage, an audio amplifier hits its rated maximum power comparatively rarely, only when the volume is turned right up to the onset of clipping. Even then, maximum power is reached only on the loudest audio peaks, which make up a relatively small proportion of typical content. Across the operating life of an amplifier, average power output typically sits at around 20 to 50 dB below full scale, which is a factor of 100 to 100,000 lower in linear power terms, as we now explore. At this comparatively tiny output level, corresponding to the lower left region of Figure 1, the efficiency of the conventional Class D solution is seen to be disappointingly low.
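The conversion behind the "20 to 50 dB below full scale" figure is a power ratio of 10^(dB/10). The sketch below only illustrates that arithmetic; the function name `db_to_power_ratio` and the 10 W full-scale rating are hypothetical and do not come from the white paper.

```python
def db_to_power_ratio(level_db):
    """Convert a level difference in decibels to a linear power ratio: 10^(dB/10)."""
    return 10.0 ** (level_db / 10.0)


FULL_SCALE_WATTS = 10.0  # hypothetical amplifier rating, for illustration only

for db_below_full_scale in (20, 50):
    ratio = db_to_power_ratio(db_below_full_scale)
    average_watts = FULL_SCALE_WATTS / ratio
    print(f"{db_below_full_scale} dB below full scale: "
          f"1/{ratio:,.0f} of rated power = {average_watts:.4f} W")
```

For the assumed 10 W amplifier, the typical operating range would therefore span roughly 0.1 W down to 0.1 mW, which is the region at the lower left of a logarithmic efficiency plot.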

Daat Power Amplifier White Paper

ISP Technologies patented Dynamic Adaptive Amplifier Technology™

Audio power is the electrical power off the AC line transferred from an audio amplifier to a loudspeaker, measured in watts. The power delivered to the loudspeaker, based on its efficiency, determines the actual audio power. Some portion of the electrical power in ends up being converted to heat. Recent years have seen a proliferation in what is called specmanship at a minimum and outright fabrication of misleading specifications at worst. The bottom line is that power amplifier ratings are virtually meaningless today since there is no standard measurement system in use. This leads to confusion and serious misunderstanding in the audio community. ISP Technologies has for years rated the D-CAT power amplifiers in true RMS output power and as a result has shown modest performance specifications when compared with competitive amplifiers or self-powered speakers. Some manufacturers have gone so far as to claim they are offering 20,000 watt RMS power amplifiers with power consumption off the line on the order of 30 amps. I would like to see the patent on this amazing technology since there would be countless power companies beating a path to their door to license this technology. This white paper has been written to help shed some light on different types of power amplifier technologies and on realistic and actual power amplifier performance ratings, and also to explain the advantages of the new ISP Technologies DAA™ Dynamic Adaptive Amplifier™ Technology now in use by ISP Technologies. An audio power amplifier is theoretically designed to deliver an exact replica of an audio input signal with more voltage and current at the output.

Investigating Electromagnetic and Acoustic Properties of Loudspeakers Using Phase Sensitive Equipment

Investigating Electromagnetic and Acoustic Properties of Loudspeakers Using Phase Sensitive Equipment. Katherine Butler, Department of Physics, DePaul University

ABSTRACT. The goal of this project was to extract detailed information on the electromagnetic and acoustic properties of loudspeakers. Often when speakers are analyzed only the electrical components are considered without taking into account how this affects the mechanical operation of the loudspeaker, which in turn directly relates to the acoustic output. Examining the effect of mounting the speaker on a baffle or in an enclosure is also crucial to determining the speaker’s sound. All electrical and acoustic measurements are done using phase sensitive lock-in amplifiers. By analyzing the speaker in such a detailed manner, we can ultimately determine which properties really affect the overall tonal qualities of that speaker.

I. Background and Introduction. The loudspeaker is the most important link in any audio chain. It is the last piece of equipment the audio signal passes through before we hear anything. You may have the best amplifier money can buy, but that means nothing without quality speakers. In the audio chain speakers are composed of some of the simplest electric circuits; it is the quality of manufacturing and physical design that is most important in speaker quality.

… attracted to or repelled by the permanent magnetic field. The moving parts of the speaker, the driver, can then turn electrical energy into acoustic energy. The electrical components of the speaker have a certain resonance when the electrical impedance is greatest. The air surrounding the speaker and propagating the sound also has its own resistance to motion, radiation impedance.

Changes and Challenges in Environmental Noise Measurement * Philip J Dickinson Massey University Wellington, New Zealand

CHANGES AND CHALLENGES IN ENVIRONMENTAL NOISE MEASUREMENT. Philip J Dickinson, Massey University, Wellington, New Zealand

Many changes have occurred in the last seventy years, not least of which are the changes in our environment and interdependently our intellectual and technological development. Sound measurement had its origins in the 1920s at a time when people were still traveling by horse and cart or on steam trains and few people used electricity. The technology of electronics was in its infancy and our predecessors had limited tools at their disposal. Nevertheless, they provided the basis on which we rely for our present day sound measurements. Since then we have come far, but we still await a solution for the lack of accuracy we have come to accept.

THE BEGINNINGS. … independent of frequency and temperature, it was convenient to use a power (or logarithmic) series for its description based on the power developed by a one volt sinusoid across a mile of standard cable. This measure was called the Transmission Unit TU (Martin 1924). Harvey Fletcher (whom this author is very privileged to be able to have called a friend) studied the reactions of (it is believed) 23 of his colleagues to sound in a telephone earpiece generated by an a.c. voltage. He came up with the idea of a “sensation unit” SU, based on a power series compared to the voltage that produced the minimum sound audible. Harvey initially called this the “Loudness Unit” (Fletcher 1923) but later changed his mind following his work on loudness with Steinberg (Fletcher and Steinberg 1924).

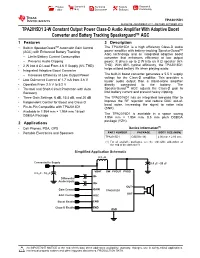

TPA2015D1 2-W Constant Output Power Class-D Audio Amplifier With

TPA2015D1 SLOS638B – NOVEMBER 2011 – REVISED OCTOBER 2015

TPA2015D1 2-W Constant Output Power Class-D Audio Amplifier With Adaptive Boost Converter and Battery Tracking SpeakerGuard™ AGC

1 Features
• Built-In SpeakerGuard™ Automatic Gain Control (AGC) with Enhanced Battery Tracking
  – Limits Battery Current Consumption
  – Prevents Audio Clipping
• 2 W into 8 Ω Load From 3.6 V Supply (6% THD)
• Integrated Adaptive Boost Converter
  – Increases Efficiency at Low Output Power
• Low Quiescent Current of 1.7 mA from 3.6 V
• Operates From 2.5 V to 5.2 V
• Thermal and Short-Circuit Protection with Auto Recovery
• Three Gain Settings: 6 dB, 15.5 dB, and 20 dB
• Independent Control for Boost and Class-D
• Pin-to-Pin Compatible with TPA2013D1

3 Description
The TPA2015D1 is a high efficiency Class-D audio power amplifier with battery-tracking SpeakerGuard™ AGC technology and an integrated adaptive boost converter that enhances efficiency at low output power. It drives up to 2 W into an 8 Ω speaker (6% THD). With 85% typical efficiency, the TPA2015D1 helps extend battery life when playing audio.

The built-in boost converter generates a 5.5 V supply voltage for the Class-D amplifier. This provides a louder audio output than a stand-alone amplifier directly connected to the battery. The SpeakerGuard™ AGC adjusts the Class-D gain to limit battery current and prevent heavy clipping.

The TPA2015D1 has an integrated low-pass filter to improve the RF rejection and reduce DAC out-of-band noise, increasing the signal to noise ratio (SNR).

Audio Impedance Testers and Sound Level Meters

Technical Catalogue 1904, Test & Measurement. Hoyt Audio Impedance Testers and Sound Level Meters

Contents: 1106 IM; 1107 IM; 1506 IM; 2706 IM; 2310 SL; 3310 SL

Hoyt Electrical Instrument Works, Inc www.hoytmeter.com 1-800-258-3652

Audio Impedance Testers

1106 IM Audio Impedance Tester

Features
● Large LCD: 68 × 34 mm (2.677" × 1.338").
● True measurement of speaker systems' actual impedance at 1 kHz.
● Three test ranges allow testing of home theater and commercial sound systems.
● Measures transformer impedances.
● Battery operation.
● Low battery indication.
● Data hold function.
● 0 Ω adjustment.

Specifications
Measuring ranges: 0-20 Ω / 0-200 Ω / 0-2 kΩ
Test frequency: 1 kHz
Accuracy (20 Ω range): ± 2% rdg ± 2 dgt or ± 0.1 Ω, whichever is greater
Accuracy (200 Ω / 2 kΩ ranges): ± 2% rdg ± 2 dgt
Low battery indication: symbol appears on the display
Data hold indication: HOLD symbol appears on the display

General
Dimensions: 175 (L) × 85 (W) × 75 (D) mm

DISTORTION MEASUREMENT in AUDIO AMPLIFIERS. by Michael Renardson

DISTORTION MEASUREMENT IN AUDIO AMPLIFIERS. By Michael Renardson. SUMMARY The requirements for the measurement of non-linear distortion in audio power amplifiers are examined. The limitations of conventional measurement methods are discussed and several improved test methods described in which complex test signals could be used. A detailed examination is made of one method in which the input and output signals of an amplifier are combined in such a way that the undistorted component of the output signal is cancelled by the input signal and the distortion component isolated. The existing literature concerning this method is surveyed. The sources of error when using this technique are examined. These include phase and gain errors at high and low frequencies, earth connection arrangements and the effects of complex loads. Methods of reducing the errors are explained and a practical measuring instrument circuit designed. The instrument has a differential input so that inverting, non-inverting or differential amplifiers can be tested and uses a simple adjustable second order high frequency phase and gain compensation network. The distortion and noise of the instrument are analysed. The practical performance of the instrument is evaluated and its distortion contribution shown to be of an extremely low value. The rejection of test signal distortion is calculated for a particular amplifier test and shown to be more than adequate even when measuring extremely low harmonic distortion. The effectiveness of the load effect compensation arrangement derived is demonstrated. Finally some of the uses of the instrument are illustrated in tests on a typical class-B power amplifier to detect crossover distortion, transient intermodulation distortion and phase modulation.