Applied and Computational Statistics • Sorana D

Recommended publications

Applications of Computational Statistics with Multiple Regressions

International Journal of Computational and Applied Mathematics. ISSN 1819-4966, Volume 12, Number 3 (2017), pp. 923-934. © Research India Publications, http://www.ripublication.com. Applications of Computational Statistics with Multiple Regressions. S MD Riyaz Ahmed, Research Scholar, Department of Mathematics, Rayalaseema University, A.P, India.

Abstract: Applications of computational statistics with multiple regression through the use of Excel, MATLAB and SPSS. It has been widely used for a long time in various fields, such as operations research and analysis, automatic control, probability and statistics, digital signal processing, dynamic system simulation, etc. The regress function in the MATLAB toolbox provides a helpful tool for multiple linear regression analysis and model checking. Multiple regression analysis is also extremely helpful in experimental settings where the experimenter can control the predictor variables.

Keywords: Multiple Linear Regression, Excel, MATLAB, SPSS, least squares, matrix notation

1. INTRODUCTION. Multiple linear regression is one of the most widely used of all statistical methods. Multiple regression analysis is also extremely helpful in experimental settings where the experimenter can control the predictor variables [1]. A single predictor variable in the model would provide an inadequate description, since a number of key variables affect the response variable in important and distinctive ways [2]. It attempts to model the relationship between two or more explanatory variables and a response variable by fitting an equation to observed data. Each value of the independent variable x is associated with a value of the dependent variable y. The population regression line for p explanatory variables x1, x2, x3, ...
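The least-squares fit described in this excerpt can be sketched in a few lines. This is a minimal illustration in Python with NumPy, not the paper's own workflow (which uses Excel, MATLAB and SPSS); the function name `fit_multiple_regression` and the synthetic data are assumptions made for the example.

```python
import numpy as np


def fit_multiple_regression(X, y):
    """Return least-squares coefficients for y ~ X, intercept first.

    Hypothetical helper for illustration; X has one row per observation
    and one column per predictor variable.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept.
    design = np.column_stack([np.ones(len(X)), X])
    # Solve min ||design @ beta - y||^2 without forming the normal equations.
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta


# Synthetic, noise-free data generated from y = 1 + 2*x1 - 3*x2.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(50, 2))
y_demo = 1.0 + 2.0 * X_demo[:, 0] - 3.0 * X_demo[:, 1]
beta_demo = fit_multiple_regression(X_demo, y_demo)
```

Because the synthetic response is an exact linear function of the two predictors, the recovered coefficients should match the generating values up to floating-point error. With real, noisy data the estimates would only approximate them.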

ISyE 6416: Computational Statistics Lecture 1: Introduction

ISyE 6416: Computational Statistics. Lecture 1: Introduction. Prof. Yao Xie, H. Milton Stewart School of Industrial and Systems Engineering, Georgia Institute of Technology.

What this course is about

- Interface between statistics and computer science
- Closely related to data mining, machine learning and data analytics
- Aims at the design of algorithms for implementing statistical methods on computers
- Computationally intensive statistical methods including resampling methods, Markov chain Monte Carlo methods, local regression, kernel density estimation, artificial neural networks and generalized additive models

Statistics

- Data: images, video, audio, text, etc.; sensor networks, social networks, internet, genome
- Statistics provides tools to model data, e.g. distributions, Gaussian mixture models, hidden Markov models
- Statistics provides tools to formulate problems or ask questions, e.g. maximum likelihood, Bayesian methods, point estimators, hypothesis tests, how to design experiments

Computing

- How do we solve these problems?
- Computing: find efficient algorithms to solve them, e.g. maximum likelihood requires finding the maximum of a cost function
- "Before there were computers, there were algorithms. But now that there are computers, there are even more algorithms, and algorithms lie at the heart of computing."
- An algorithm: a tool for solving a well-specified computational problem
- Examples:
  - The Internet enables people all around the world to quickly access and retrieve large amounts of information. With the aid of clever algorithms, sites on the Internet are able to manage and manipulate this large volume of data.
  - The Human Genome Project has made great progress toward the goals of identifying all the 100,000 genes in human DNA, determining the sequences of the 3 billion chemical base pairs that make up human DNA, storing this information in databases, and developing tools for data analysis.

Visualizing Research Collaboration in Statistical Science: a Scientometric

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. Library Philosophy and Practice (e-journal), Libraries at University of Nebraska-Lincoln, 9-13-2019. Visualizing Research Collaboration in Statistical Science: A Scientometric Perspective. Prabir Kumar Das, Scientific Assistant – A, Library, Documentation & Information Science Division, Indian Statistical Institute, 203 B. T. Road, Kolkata – 700108, India, Email: [email protected]

Follow this and additional works at: https://digitalcommons.unl.edu/libphilprac. Part of the Library and Information Science Commons. Das, Prabir Kumar, "Visualizing Research Collaboration in Statistical Science: A Scientometric Perspective" (2019). Library Philosophy and Practice (e-journal). 3039. https://digitalcommons.unl.edu/libphilprac/3039

Abstract: Using Sankhyā – The Indian Journal of Statistics as a case, the present study aims to identify the scholarly collaboration pattern of statistical science based on research articles that appeared during 2008 to 2017. This is an attempt to visualize and quantify statistical science research collaboration in multiple dimensions by exploring the co-authorship data. It investigates chronological variations of the collaboration pattern, and the nodes and links established among the affiliated institutions and countries of all contributing authors. The study also examines the impact of research collaboration on citation scores. Findings reveal a steady influx of statistical publications with a clear tendency towards collaborative ventures, of which double-authored publications dominate. Small teams of 2 to 3 authors are responsible for the majority of collaborative research, whereas mega-authored communications are quite rare.

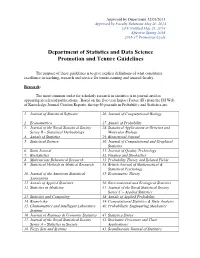

Department of Statistics and Data Science Promotion and Tenure Guidelines

Approved by Department 12/05/2013. Approved by Faculty Relations May 20, 2014. UFF Notified May 21, 2014. Effective Spring 2016, 2016-17 Promotion Cycle.

Department of Statistics and Data Science Promotion and Tenure Guidelines

The purpose of these guidelines is to give explicit definitions of what constitutes excellence in teaching, research and service for tenure-earning and tenured faculty.

Research: The most common outlet for scholarly research in statistics is in journal articles appearing in refereed publications. Based on the five-year Impact Factor (IF) from the ISI Web of Knowledge Journal Citation Reports, the top 50 journals in Probability and Statistics are:

| Rank | Journal | Rank | Journal |
|---|---|---|---|
| 1 | Journal of Statistical Software | 26 | Journal of Computational Biology |
| 2 | Econometrica | 27 | Annals of Probability |
| 3 | Journal of the Royal Statistical Society Series B – Statistical Methodology | 28 | Statistical Applications in Genetics and Molecular Biology |
| 4 | Annals of Statistics | 29 | Biometrical Journal |
| 5 | Statistical Science | 30 | Journal of Computational and Graphical Statistics |
| 6 | Stata Journal | 31 | Journal of Quality Technology |
| 7 | Biostatistics | 32 | Finance and Stochastics |
| 8 | Multivariate Behavioral Research | 33 | Probability Theory and Related Fields |
| 9 | Statistical Methods in Medical Research | 34 | British Journal of Mathematical & Statistical Psychology |
| 10 | Journal of the American Statistical Association | 35 | Econometric Theory |
| 11 | Annals of Applied Statistics | 36 | Environmental and Ecological Statistics |
| 12 | Statistics in Medicine | 37 | Journal of the Royal Statistical Society Series C – Applied Statistics |
| 13 | Statistics and Computing | 38 | Annals of Applied Probability |
| 14 | Biometrika | 39 | Computational Statistics & Data Analysis |
| 15 | Chemometrics and Intelligent Laboratory Systems | 40 | Probabilistic Engineering Mechanics |
| 16 | Journal of Business & Economic Statistics | 41 | ... |

Dynamic Factor Analysis with STATA

Dynamic Factor Analysis with STATA. Alessandro Federici, Department of Economic Sciences, University of Rome La Sapienza, [email protected]

Abstract: The aim of the paper is to develop a procedure able to implement Dynamic Factor Analysis (DFA henceforth) in STATA. DFA is a statistical multiway analysis technique (footnote 1), where quantitative "units × variables × times" arrays are considered: $X(I,J,T) = \{x_{ijt}\}$, $i = 1, \dots, I$, $j = 1, \dots, J$, $t = 1, \dots, T$, where $i$ is the unit, $j$ the variable and $t$ the time. Broadly speaking, this kind of methodology manages to combine, from a descriptive point of view (not probabilistic), the cross-section analysis through Principal Component Analysis (PCA henceforth) and the time series dimension of data through a linear regression model. The paper is organized as follows: firstly, a theoretical overview of the methodology will be provided in order to show the statistical and econometric framework that the STATA procedure will implement.

The DFA framework was introduced and developed by Coppi and Zannella (1978), and then re-examined by Coppi et al. (1986) and Corazziari (1997): in this paper the original approach will be followed. The goal of the methodology is to decompose the variance and covariance matrix $S$ relative to $X(IT,J)$, where the role of the units is played by the pair "units-times". The matrix $S$, concerning the J×T observations over the I units, may be decomposed into the sum of three distinct variance and covariance matrices:

$$S = {}^{*}S_I + {}^{*}S_T + S_{IT}, \qquad (1)$$

where:

Footnote 1: That is, a methodology where three or more indexes are simultaneously analysed.

The Stata Journal Publishes Reviewed Papers Together with Shorter Notes Or Comments, Regular Columns, Book Reviews, and Other Material of Interest to Stata Users

Editors

- H. Joseph Newton, Department of Statistics, Texas A&M University, College Station, Texas, [email protected]
- Nicholas J. Cox, Department of Geography, Durham University, Durham, UK, [email protected]

Associate Editors

- Christopher F. Baum, Boston College
- Nathaniel Beck, New York University
- Rino Bellocco, Karolinska Institutet, Sweden, and University of Milano-Bicocca, Italy
- Maarten L. Buis, University of Konstanz, Germany
- A. Colin Cameron, University of California–Davis
- Mario A. Cleves, University of Arkansas for Medical Sciences
- William D. Dupont, Vanderbilt University
- Philip Ender, University of California–Los Angeles
- David Epstein, Columbia University
- Allan Gregory, Queen's University
- James Hardin, University of South Carolina
- Ben Jann, University of Bern, Switzerland
- Stephen Jenkins, London School of Economics and Political Science
- Ulrich Kohler, University of Potsdam, Germany
- Frauke Kreuter, Univ. of Maryland–College Park
- Peter A. Lachenbruch, Oregon State University
- Jens Lauritsen, Odense University Hospital
- Stanley Lemeshow, Ohio State University
- J. Scott Long, Indiana University
- Roger Newson, Imperial College, London
- Austin Nichols, Urban Institute, Washington DC
- Marcello Pagano, Harvard School of Public Health
- Sophia Rabe-Hesketh, Univ. of California–Berkeley
- J. Patrick Royston, MRC Clinical Trials Unit, London
- Philip Ryan, University of Adelaide
- Mark E. Schaffer, Heriot-Watt Univ., Edinburgh
- Jeroen Weesie, Utrecht University
- Ian White, MRC Biostatistics Unit, Cambridge
- Nicholas J. G. Winter, University of Virginia
- Jeffrey Wooldridge, Michigan State University

Stata Press Editorial Manager: Lisa Gilmore

Stata Press Copy Editors: David Culwell, Shelbi Seiner, and Deirdre Skaggs

The Stata Journal publishes reviewed papers together with shorter notes or comments, regular columns, book reviews, and other material of interest to Stata users.

Rank Full Journal Title Journal Impact Factor 1 Journal of Statistical

Journal Data Filtered By: Selected JCR Year: 2019. Selected Editions: SCIE. Selected Categories: 'STATISTICS & PROBABILITY'. Selected Category Scheme: WoS.

| Rank | Full Journal Title | Total Cites | Journal Impact Factor | Eigenfactor Score |
|---|---|---|---|---|
| 1 | Journal of Statistical Software | 25,372 | 13.642 | 0.053040 |
| 2 | Annual Review of Statistics and Its Application | 515 | 5.095 | 0.004250 |
| 3 | ECONOMETRICA | 35,846 | 3.992 | 0.040750 |
| 4 | JOURNAL OF THE AMERICAN STATISTICAL ASSOCIATION | 36,843 | 3.989 | 0.032370 |
| 5 | JOURNAL OF THE ROYAL STATISTICAL SOCIETY SERIES B-STATISTICAL METHODOLOGY | 25,492 | 3.965 | 0.018040 |
| 6 | STATISTICAL SCIENCE | 6,545 | 3.583 | 0.007500 |
| 7 | R Journal | 1,811 | 3.312 | 0.007320 |
| 8 | FUZZY SETS AND SYSTEMS | 17,605 | 3.305 | 0.008740 |
| 9 | BIOSTATISTICS | 4,048 | 3.098 | 0.006780 |
| 10 | STATISTICS AND COMPUTING | 4,519 | 3.035 | 0.011050 |
| 11 | IEEE-ACM Transactions on Computational Biology and Bioinformatics | 3,542 | 3.015 | 0.006930 |
| 12 | JOURNAL OF BUSINESS & ECONOMIC STATISTICS | 5,921 | 2.935 | 0.008680 |
| 13 | CHEMOMETRICS AND INTELLIGENT LABORATORY SYSTEMS | 9,421 | 2.895 | 0.007790 |
| 14 | MULTIVARIATE BEHAVIORAL RESEARCH | 7,112 | 2.750 | 0.007880 |
| 15 | INTERNATIONAL STATISTICAL REVIEW | 1,807 | 2.740 | 0.002560 |
| 16 | Bayesian Analysis | 2,000 | 2.696 | 0.006600 |
| 17 | ANNALS OF STATISTICS | 21,466 | 2.650 | 0.027080 |
| 18 | PROBABILISTIC ENGINEERING MECHANICS | 2,689 | 2.411 | 0.002430 |
| 19 | BRITISH JOURNAL OF MATHEMATICAL & STATISTICAL PSYCHOLOGY | 1,965 | 2.388 | 0.003480 |
| 20 | ANNALS OF PROBABILITY | 5,892 | 2.377 | 0.017230 |
| 21 | STOCHASTIC ENVIRONMENTAL RESEARCH AND RISK ASSESSMENT | 4,272 | 2.351 | 0.006810 |
| 22 | JOURNAL OF COMPUTATIONAL AND GRAPHICAL STATISTICS | 4,369 | 2.319 | 0.008900 |
| | STATISTICAL METHODS IN ... | | | |

Abbreviations of Names of Serials

Abbreviations of Names of Serials

This list gives the form of references used in Mathematical Reviews (MR). The abbreviation is followed by the complete title, the place of publication and other pertinent information.

Markers: ∗ not previously listed; x journal indexed cover-to-cover; y monographic series.

Update date: January 30, 2018

- 4OR: 4OR. A Quarterly Journal of Operations Research. Springer, Berlin. ISSN 1619-4500.
- y 30o Colóq. Bras. Mat.: 30o Colóquio Brasileiro de Matemática. [30th Brazilian Mathematics Colloquium] Inst. Nac. Mat. Pura Apl. (IMPA), Rio de Janeiro.
- y Aastaraam. Eesti Mat. Selts: Aastaraamat. Eesti Matemaatika Selts. [Annual. Estonian Mathematical Society] Eesti Mat. Selts, Tartu. ISSN 1406-4316.
- y Abel Symp.: Abel Symposia. Springer, Heidelberg. ISSN 2193-2808.
- y Abh. Akad. Wiss. Göttingen Neue Folge: Abhandlungen der Akademie der Wissenschaften zu Göttingen. Neue Folge. [Papers of the Academy of Sciences in Göttingen. New Series] De Gruyter/Akademie Forschung, Berlin. ISSN 0930-4304.
- y Abh. Akad. Wiss. Hamburg: Abhandlungen der Akademie der Wissenschaften ...
- x Acta Math. Appl. Sin. Engl. Ser.: Acta Mathematicae Applicatae Sinica. English Series. Springer, Heidelberg. ISSN 0168-9673.
- x Acta Math. Hungar.: Acta Mathematica Hungarica. Akad. Kiadó, Budapest. ISSN 0236-5294.
- x Acta Math. Sci. Ser. A Chin. Ed.: Acta Mathematica Scientia. Series A. Shuxue Wuli Xuebao. Chinese Edition. Kexue Chubanshe (Science Press), Beijing. ISSN 1003-3998.
- x Acta Math. Sci. Ser. B Engl. Ed.: Acta Mathematica Scientia. Series B. English Edition. Sci. Press Beijing, Beijing. ISSN 0252-9602.
- x Acta Math. Sin. (Engl. Ser.): Acta Mathematica Sinica (English Series). Springer, Berlin. ISSN 1439-8516.
- x Acta Math. Sinica (Chin. Ser.): Acta Mathematica Sinica. ...

The Importance of Endogenous Peer Group Formation

Econometrica, Vol. 81, No. 3 (May, 2013), 855–882. FROM NATURAL VARIATION TO OPTIMAL POLICY? THE IMPORTANCE OF ENDOGENOUS PEER GROUP FORMATION. BY SCOTT E. CARRELL, BRUCE I. SACERDOTE, AND JAMES E. WEST

We take cohorts of entering freshmen at the United States Air Force Academy and assign half to peer groups designed to maximize the academic performance of the lowest ability students. Our assignment algorithm uses nonlinear peer effects estimates from the historical pre-treatment data, in which students were randomly assigned to peer groups. We find a negative and significant treatment effect for the students we intended to help. We provide evidence that within our "optimally" designed peer groups, students avoided the peers with whom we intended them to interact and instead formed more homogeneous subgroups. These results illustrate how policies that manipulate peer groups for a desired social outcome can be confounded by changes in the endogenous patterns of social interactions within the group.

KEYWORDS: Peer effects, social network formation, homophily.

0. INTRODUCTION

PEER EFFECTS HAVE BEEN widely studied in the economics literature due to the perceived importance peers play in workplace, educational, and behavioral outcomes. Previous studies in the economics literature have focused almost exclusively on the identification of peer effects and have only hinted at the potential policy implications of the results. Recent econometric studies on assortative matching by Graham, Imbens, and Ridder (2009) and Bhattacharya (2009) have theorized that individuals could be sorted into peer groups to maximize productivity. This study takes a first step in determining whether student academic performance can be improved through the systematic sorting of students into peer groups.

Computational Tools for Economics Using R

Student Workshop: Computational Tools for Economics Using R. Matteo Sostero, [email protected], International Doctoral Program in Economics, Sant'Anna School of Advanced Studies, Pisa. October 2013.

Outline

1. About the workshop
2. Getting to know R
3. Array Manipulation and Linear Algebra
4. Functions, Plotting and Optimisation
5. Computational Statistics
6. Data Analysis
7. Graphics with ggplot2
8. Econometrics
9. Procedural programming

About this Workshop

Computational Tools: Part of the 'toolkit' of computational and empirical research. Direct applications to many subjects (Maths, Stats, Econometrics...). Develop a problem-solving mindset.

The R language

- Free, open source, cross-platform, with a nice IDE.
- Very wide range of applications (Matlab + Stata + SPSS + ...).
- Easy coding and plenty of (free) packages suited to particular needs.
- Large (and growing) user base and online community.
- Steep learning curve (occasionally cryptic syntax).
- Scattered references.

Workshop material

The online resource for this workshop is: http://bit.ly/IDPEctw. It will contain the following material: The syllabus for the workshop.

Robust Statistics Part 1: Introduction and Univariate Data General References

Robust Statistics, Part 1: Introduction and univariate data. Peter Rousseeuw, LARS-IASC School, May 2019.

General references

- Hampel, F.R., Ronchetti, E.M., Rousseeuw, P.J., Stahel, W.A. Robust Statistics: the Approach based on Influence Functions. Wiley Series in Probability and Mathematical Statistics. John Wiley and Sons, New York, 1986.
- Rousseeuw, P.J., Leroy, A. Robust Regression and Outlier Detection. Wiley Series in Probability and Mathematical Statistics. John Wiley and Sons, New York, 1987.
- Maronna, R.A., Martin, R.D., Yohai, V.J. Robust Statistics: Theory and Methods. Wiley Series in Probability and Statistics. John Wiley and Sons, Chichester, 2006.
- Hubert, M., Rousseeuw, P.J., Van Aelst, S. (2008), High-breakdown robust multivariate methods, Statistical Science, 23, 92–119.
- wis.kuleuven.be/stat/robust

Outline of the course

- General notions of robustness
- Robustness for univariate data
- Multivariate location and scatter
- Linear regression
- Principal component analysis
- Advanced topics

General notions of robustness: Outline

1. Introduction: outliers and their effect on classical estimators
2. Measures of robustness: breakdown value, sensitivity curve, influence function, gross-error sensitivity, maxbias curve.

Introduction: What is robust statistics? Real data often contain outliers.
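The effect of outliers on classical estimators mentioned in this outline can be illustrated with a small sketch. It uses only Python's standard library; the measurements below and the helper `mad` are hypothetical and are not taken from the slides.

```python
import statistics


def mad(values):
    """Unscaled median absolute deviation from the median (illustrative helper)."""
    center = statistics.median(values)
    return statistics.median([abs(v - center) for v in values])


# Hypothetical measurements; in the second sample one value has a
# misplaced decimal point (6.28 recorded as 62.8).
clean = [6.27, 6.34, 6.25, 6.31, 6.28]
contaminated = [6.27, 6.34, 6.25, 6.31, 62.8]

for label, sample in (("clean", clean), ("contaminated", contaminated)):
    print(
        label,
        "mean:", round(statistics.mean(sample), 3),
        "median:", statistics.median(sample),
        "stdev:", round(statistics.stdev(sample), 3),
        "MAD:", round(mad(sample), 3),
    )
```

In this sketch a single bad value drags the mean and standard deviation far from the bulk of the data, while the median and MAD barely move. This is the kind of behaviour that robustness measures such as the breakdown value are meant to quantify.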

V1603419 Discriminant Analysis When the Initial

TECHNOMETRICS©, VOL. 16, NO. 3, AUGUST 1974. Discriminant Analysis When the Initial Samples are Misclassified II: Non-Random Misclassification Models. Peter A. Lachenbruch, Department of Biostatistics, University of North Carolina, Chapel Hill, North Carolina.

Two models of non-random initial misclassifications are studied. In these models, observations which are closer to the mean of the "wrong" population have a greater chance of being misclassified than others. Sampling studies show that (a) the actual error rates of the rules from samples with initial misclassification are only slightly affected; (b) the apparent error rates, obtained by resubstituting the observations into the calculated discriminant function, are drastically affected, and cannot be used; and (c) the Mahalanobis $D^2$ is greatly inflated.

KEYWORDS: Discriminant Analysis; Robustness; Non-Random Initial Misclassification

1. INTRODUCTION

Lachenbruch (1966) studied the effects of misclassification of the initial samples on the performance of the linear discriminant function (LDF). More recently, McLachlan (1972) developed the asymptotic results for this model and found generally good agreement with the earlier results. It was found that if the total proportion was not ... misclassified. This clearly is unrealistic. The present study attempts to present two other models which it is hoped are more realistic than the "equal chance" model. To do this, we sacrifice some mathematical rigor, as the equations involved are very difficult to set down. Thus, Monte Carlo experiments are used to evaluate the behavior of the LDF.

2. MODELS AND EXPERIMENTS

We assume that observations $x$ from the $i$th population, $\pi_i$, $i = 1, 2$, are normally distributed with mean $\mu_i$ and covariance matrix $I$.