Or. Just What Is a Tar File, Anyway?

Recommended publications

User Commands GZIP(1): gzip, gunzip, gzcat - compress or expand files

User Commands GZIP(1)

NAME

gzip, gunzip, gzcat - compress or expand files

SYNOPSIS

    gzip [-acdfhlLnNrtvV19] [-S suffix] [name ...]
    gunzip [-acfhlLnNrtvV] [-S suffix] [name ...]
    gzcat [-fhLV] [name ...]

DESCRIPTION

Gzip reduces the size of the named files using Lempel-Ziv coding (LZ77). Whenever possible, each file is replaced by one with the extension .gz, while keeping the same ownership modes, access and modification times. (The default extension is -gz for VMS, z for MSDOS, OS/2 FAT, Windows NT FAT and Atari.) If no files are specified, or if a file name is "-", the standard input is compressed to the standard output. Gzip will only attempt to compress regular files. In particular, it will ignore symbolic links.

If the compressed file name is too long for its file system, gzip truncates it. Gzip attempts to truncate only the parts of the file name longer than 3 characters. (A part is delimited by dots.) If the name consists of small parts only, the longest parts are truncated. For example, if file names are limited to 14 characters, gzip.msdos.exe is compressed to gzi.msd.exe.gz. Names are not truncated on systems which do not have a limit on file name length.

By default, gzip keeps the original file name and timestamp in the compressed file. These are used when decompressing the file with the -N option. This is useful when the compressed file name was truncated or when the time stamp was not preserved after a file transfer.

Compressed files can be restored to their original form using gzip -d or gunzip or gzcat.
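The replace-on-compress behaviour described in this excerpt can be sketched with Python's standard `gzip` module. This is only an illustration under stated assumptions: the helper names `gzip_file` and `gunzip_file` and the file `notes.txt` are hypothetical, and unlike the gzip command the sketch makes no attempt to carry ownership, modes or timestamps over to the new file.

```python
import gzip
import os
import shutil
import tempfile


def gzip_file(path):
    """Compress `path` to `path + '.gz'` and remove the original (hypothetical helper)."""
    gz_path = path + ".gz"
    with open(path, "rb") as src, gzip.open(gz_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(path)
    return gz_path


def gunzip_file(gz_path):
    """Restore a '.gz' file to its original name and remove the archive (hypothetical helper)."""
    if not gz_path.endswith(".gz"):
        raise ValueError("expected a file name ending in .gz")
    path = gz_path[: -len(".gz")]
    with gzip.open(gz_path, "rb") as src, open(path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    os.remove(gz_path)
    return path


# Round trip on a throwaway file in a temporary directory.
workdir = tempfile.mkdtemp()
original = os.path.join(workdir, "notes.txt")
payload = b"tar and gzip " * 1000
with open(original, "wb") as handle:
    handle.write(payload)

compressed = gzip_file(original)
compressed_size = os.path.getsize(compressed)
restored = gunzip_file(compressed)
print(compressed, compressed_size, restored)
```

The sketch streams the data with `shutil.copyfileobj` so that large files are not read into memory in one piece.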

Software to Extract Cab Files

Software to extract cab files. You can use WinZip to extract CAB files by following the steps listed below. file extension associated with WinZip program, just double-click on the file. PeaZip offers read-only support (open and extract cab files) for Microsoft Cabinet file format, providing a free alternative utility to open (list content) and www.doorway.ru packages, or disassemble single files from the container, under Windows and Linux operating systems. Moreover, the OS can create, extract, or rebuild cab files. This means you do not require any additional third-party software for this task. All CAB. For a number of years, Microsoft has www.doorway.ru files to compress software that was distributed on disks. Originally, these files were used to minimize the number . The InstallShield installer program makes files with the CAB However, you can also open or extract CAB files with a file decompression tool. Open, browse, extract, or view Microsoft CAB files with Altap Salamander File Manager. High quality software with emphasis on error states. Affordable cost: . Microsoft uses cab files to package software programs. You can view the contents of a cab file by unzipping it and extracting its contents to a. Hi, I need some help www.doorway.ru files. I have to extract a patch for one game, so i used universal extractor for to extract www.doorway.ru Now I have to. cab Extension - List of programs that can www.doorway.ru files. www.doorway.ru, Inventoria Stock Manager, NCH Software, Extract with Express Zip, Low.

The Ark Handbook

The Ark Handbook, by Matt Johnston, Henrique Pinto and Ragnar Thomsen

Contents: 1 Introduction; 2 Using Ark (2.1 Opening Archives: 2.1.1 Archive Operations, 2.1.2 Archive Comments; 2.2 Working with Files: 2.2.1 Editing Files; 2.3 Extracting Files: 2.3.1 The Extract dialog; 2.4 Creating Archives and Adding Files: 2.4.1 Compression, 2.4.2 Password Protection, 2.4.3 Multi-volume Archive); 3 Using Ark in the Filemanager; 4 Advanced Batch Mode; 5 Credits and License

Abstract: Ark is an archive manager by KDE.

Chapter 1, Introduction: Ark is a program for viewing, extracting, creating and modifying archives. Ark can handle various archive formats such as tar, gzip, bzip2, zip, rar, 7zip, xz, rpm, cab, deb, xar and AppImage (support for certain archive formats depends on the appropriate command-line programs being installed). In order to successfully use Ark, you need KDE Frameworks 5. The library libarchive version 3.1 or above is needed to handle most archive types, including tar, compressed tar, rpm, deb and cab archives. To handle other file formats, you need the appropriate command line programs, such as zipinfo, zip, unzip, rar, unrar, 7z, lsar, unar and lrzip.

Chapter 2, Using Ark. 2.1 Opening Archives: To open an archive in Ark, choose Open... (Ctrl+O) from the Archive menu. You can also open archive files by dragging and dropping from Dolphin.

File Management Tools

File Management Tools

● gzip and gunzip
● tar
● find
● df and du
● od
● nm and strip
● sftp and scp

Gzip and Gunzip

● The gzip utility compresses a specified list of files. After compressing each specified file, it renames it to have a ".gz" extension.
● General form:

    gzip [filename]*

● The gunzip utility uncompresses a specified list of files that had been previously compressed with gzip.
● General form:

    gunzip [filename]*

Tar (38.2)

● Tar is a utility for creating and extracting archives. It was originally set up for archives on tape, but it is now mostly used for archives on disk. It is very useful for sending a set of files to someone over the network. Tar is also useful for making backups.
● General form:

    tar options filenames

Commonly Used Tar Options

    c   # insert files into a tar file
    f   # use the name of the tar file that is specified
    v   # output the name of each file as it is inserted into or
        # extracted from a tar file
    x   # extract the files from a tar file

Creating an Archive with Tar

● Below is the typical tar command used to create an archive from a set of files. Note that each specified filename can also be a directory. Tar will insert all files in that directory and any subdirectories.

    tar cvf tarfilename filenames

● Examples:

    tar cvf proj.tar proj      # insert proj directory files into proj.tar
    tar cvf code.tar *.c *.h   # insert *.c and *.h files into code.tar

Extracting Files from a Tar Archive

● Below is the typical tar command used to extract the files from a tar archive.
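For readers who want to script the create, list and extract steps outlined in these slides, the following is a minimal sketch using Python's standard `tarfile` module rather than the tar command itself. The helper names and the `proj` directory with its two files are hypothetical and exist only for the illustration.

```python
import os
import tarfile
import tempfile


def create_tar(tar_path, source_dir):
    """Roughly `tar cf tar_path source_dir`: add a directory and everything below it."""
    with tarfile.open(tar_path, "w") as tar:
        tar.add(source_dir, arcname=os.path.basename(source_dir))


def list_tar(tar_path):
    """Return the member names stored in the archive."""
    with tarfile.open(tar_path, "r") as tar:
        return tar.getnames()


def extract_tar(tar_path, dest_dir):
    """Roughly `tar xf tar_path`, unpacking into dest_dir."""
    with tarfile.open(tar_path, "r") as tar:
        tar.extractall(dest_dir)


# Build a small hypothetical project directory to archive.
workdir = tempfile.mkdtemp()
proj = os.path.join(workdir, "proj")
os.mkdir(proj)
for name, text in (("main.c", "int main(void) { return 0; }\n"), ("util.h", "/* helpers */\n")):
    with open(os.path.join(proj, name), "w") as handle:
        handle.write(text)

archive = os.path.join(workdir, "proj.tar")
create_tar(archive, proj)
members = list_tar(archive)

unpacked = os.path.join(workdir, "unpacked")
os.mkdir(unpacked)
extract_tar(archive, unpacked)
print(members)
```

Only extract archives from sources you trust: member names in a tar file can point outside the destination directory, and recent Python versions offer extraction filters to guard against that.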

K.2 Firmware Updater Technical Note

K.2 Series Active Loudspeakers Technical Notes. K.2 Firmware Updater: Mac OS Installation. Revision A, 8 April 2020.

Purpose: This Technical Note is for users who get an error message from the Mac Operating System (Mac OS) when attempting to install the K2Updater-2.0.1-osx-installer app. The error message recommends deletion of the app. If the K2Updater-2.0.1-osx-installer app was successfully downloaded, extracted, and installed, this document is unnecessary. If the error message appears when you double-click on the K2Updater-2.0.1-osx-installer app, use the following procedure to work around it and successfully install the K.2 Firmware updater.

Software required:
• Internet browser (Safari, Firefox, Chrome, etc.)
• K2Updater-2.0.1-osx-installer.tar.gz

MacOS utilities required:
• Archive Utility
• Terminal Utility

Using Terminal to enable the K.2 Update Installer

NOTE: If you have not done so already, download and extract the file K2Updater-2.0.1-osx-installer.tar.gz from the K.2 firmware update page at www.qsc.com.

The Terminal app in the Mac OS is similar to the Command Prompt (CMD) app in Windows. By accessing the OS shell, it allows changes at the root level that allow the K2Updater-2.0.1-osx-installer.app to run.

1. Open the folder Applications/Go/Utilities/Terminal. Double-click Terminal to launch.
2. In the Terminal window at the prompt, type xattr<space>-cr<space> but do not press Return yet.

UNIX (Solaris/Linux) Quick Reference Card

UNIX (Solaris/Linux) QUICK REFERENCE CARD

Logging In

At the Login: prompt, enter your username. At the Password: prompt, enter your system password. Linux is case-sensitive, so enter upper and lower case letters as required for your username, password and commands.

Exiting or Logging Out

Enter logout and press <Enter> or type <Ctrl>-D.

Changing your Password

Type passwd at the command prompt. Type in your old password, then your new password, then re-enter your new password for verification. If the new password is verified, your password will be changed. Many systems age passwords; this forces users to change their passwords at predetermined intervals.

Changing your MS Network Password

Some servers maintain a second password exclusively for use with Microsoft Windows networking, allowing you to mount your home directory as a Network Drive. Type smbpasswd at the command prompt. Type in your old SMB password, then your new password, then re-enter your new password for verification.

Directory Commands

| Command | Description |
| --- | --- |
| ls | Lists files in current directory |
| ls -l | Long listing of files |
| ls -a | List all files, including hidden files |
| ls -lat | Long listing of all files sorted by last modification time |
| ls wcp | List all files matching the wildcard pattern |
| ls dn | List files in the directory dn |
| tree | List files in tree format |
| cd dn | Change current directory to dn |
| cd pub | Changes to subdirectory "pub" |
| cd .. | Changes to next higher level directory (previous directory) |
| cd / | Changes to the root directory |
| cd | Changes to the user's home directory |
| cd /usr/xx | Changes to the subdirectory "xx" in the directory "usr" |
| mkdir dn | Makes a new directory named dn |
| rmdir dn | Removes the directory dn (the ... |

Z/VM Version 7 Release 2

z/VM Version 7 Release 2 OpenExtensions User's Guide. IBM SC24-6299-01.

Note: Before you use this information and the product it supports, read the information in "Notices" on page 201.

This edition applies to Version 7.2 of IBM z/VM (product number 5741-A09) and to all subsequent releases and modifications until otherwise indicated in new editions. Last updated: 2020-09-08

© Copyright International Business Machines Corporation 1993, 2020. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents: Figures; Tables; About this Document (Intended Audience; Conventions Used in This Document: Escape Character Notation, Case-Sensitivity, Typography)

Gzip, Bzip2 and Tar EXPERT PACKING

LINUXUSER Command Line: gzip, bzip2, tar

gzip, bzip2 and tar: EXPERT PACKING

A short command is all it takes to pack your data or extract it from an archive. BY HEIKE JURZIK

Archiving provides many benefits: packed and compressed files occupy less space on your disk and require less bandwidth on the Internet. Linux has both GUI-based programs, such as File Roller or Ark, and command-line tools for creating and unpacking various archive types. This article examines some shell tools for archiving files and demonstrates the kind of expert packing that clever combinations of Linux commands offer the command line user.

Nicely Packed with "gzip"

The gzip (GNU Zip) program is the default packer on Linux. Gzip compresses simple files, but it does not create complete directory archives. In its simplest form, the gzip command looks like this:

    gzip file

... tained by the packing process. If you prefer to use a different extension, you can set the -S (suffix) flag to specify your own instead. For example, the command

    gzip -S .z image.bmp

creates a compressed file titled image.bmp.z.

The size of the compressed file depends on the distribution of identical strings in the original file.

A gzip file can be unpacked using either gunzip or gzip -d. If the tool discovers a file of the same name in the working directory, it prompts you to make sure that you know you are overwriting this file:

    $ gunzip screenie.jpg.gz
    gunzip: screenie.jpg ...

Listing 1: Compression Compared
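The remark that compressed size depends on how often identical strings recur in the input is easy to check. The sketch below uses Python's standard `gzip` module instead of the gzip command; the two sample inputs and the helper name `compressed_size` are made up for the illustration.

```python
import gzip
import os


def compressed_size(data):
    """Return the size in bytes of `data` after gzip compression (hypothetical helper)."""
    return len(gzip.compress(data))


# Two inputs of the same length: one highly repetitive, one with no repeated structure.
repetitive = b"ABCD" * 25_000
random_like = os.urandom(100_000)

for label, data in (("repetitive", repetitive), ("random", random_like)):
    print(label, len(data), "->", compressed_size(data))
```

The repetitive input shrinks to a small fraction of its size, while the random bytes stay at roughly their original length, which is why already-compressed formats such as JPEG gain little from gzip.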

Computational Tool for Calculation of Tissue Air Ratio and Tissue Maximum Ratio in Radiation Dosimetry

Research Article. Curr Trends Biomedical Eng & Biosci, Volume 17 Issue 4, January 2019. Copyright © All rights are reserved by Atia Atiq. DOI: 10.19080/CTBEB.2019.17.555966

Computational Tool for Calculation of Tissue Air Ratio and Tissue Maximum Ratio in Radiation Dosimetry

Atia Atiq*, Maria Atiq and Saeed Ahmad Buzdar, Department of Physics, The Islamia University of Bahawalpur, Pakistan

Submission: November 27, 2018; Published: January 03, 2019

*Corresponding author: Atia Atiq, Department of Physics, The Islamia University of Bahawalpur, Pakistan

Abstract: The radiation therapy field is advancing continuously to achieve higher degrees of accuracy and efficiency. The purpose of this work is to enhance the efficiency of radiotherapy machine commissioning by applying computational tools to interpolate dosimetric quantities Tissue Air Ratio (TAR) and Tissue Maximum Ratio (TMR). Newton Divided Difference interpolation method was used to interpolate data between tabulated values. In order to shorten the commissioning time, technique of interpolation was used; in which calculation of dosimetric quantities were made for certain depths and field sizes with reasonable step size and remaining values at different depths and field sizes were obtained by interpolation. It was found that interpolated results considerably agree with the measured data and so this method could be used efficiently for interpolation of above mentioned dosimetric quantities. The data obtained by this technique is highly reliable and can fill the discrete set of tabulated data to make it continuous. The results obtained in this technique were in agreement with measured data and hence could be used as input data in radiotherapy treatment planning process. The interpolated results were well within accuracy limits and this method can significantly improve the efficiency of machine commissioning and resultantly improve treatment planning process.

Keywords: Radiotherapy; Interpolation; Tissue maximum ratio; Tissue air ratio; Commissioning
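The abstract names Newton divided-difference interpolation as the technique used between tabulated values. A generic sketch of that method follows; it is not the authors' tool, the function names are hypothetical, and the depth and ratio values are invented placeholders rather than measured dosimetric data.

```python
def divided_differences(xs, ys):
    """Return the Newton coefficients f[x0], f[x0,x1], ..., f[x0..xn]."""
    coeffs = list(ys)
    n = len(xs)
    for level in range(1, n):
        # Work from the end so lower-order entries are still available.
        for i in range(n - 1, level - 1, -1):
            coeffs[i] = (coeffs[i] - coeffs[i - 1]) / (xs[i] - xs[i - level])
    return coeffs


def newton_interpolate(xs, coeffs, x):
    """Evaluate the Newton-form polynomial at x using nested multiplication."""
    result = coeffs[-1]
    for i in range(len(coeffs) - 2, -1, -1):
        result = result * (x - xs[i]) + coeffs[i]
    return result


# Invented placeholder table: depth in cm against a ratio value (not real measurements).
depths = [5.0, 10.0, 15.0, 20.0]
ratios = [0.90, 0.75, 0.62, 0.51]

coeffs = divided_differences(depths, ratios)
estimate = newton_interpolate(depths, coeffs, 12.5)
print(estimate)
```

With many tabulated points a single high-degree polynomial can oscillate between nodes, so in practice the interpolation is often restricted to a few neighbouring table entries around the requested value.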

Linux/Unix: the Command Line

Linux/Unix: The Command Line. Adapted from materials by Dan Hood.

Essentials of Linux

• Kernel: Allocates time and memory, handles file operations and system calls.
• Shell: An interface between the user and the kernel.
• File System: Groups all files together in a hierarchical tree structure.

Format of UNIX Commands

• UNIX commands can be very simple one-word commands, or they can take a number of additional arguments (parameters) as part of the command. In general, a UNIX command has the following form:

    command option(s) filename(s)

• The command is the name of the utility or program that we are going to execute.
• The options modify the way the command works. It is typical for these options to be a hyphen followed by a single character, such as -a. It is also a common convention under Linux to have options that are in the form of 2 hyphens followed by a word or hyphenated words, such as --color or --pretty-print.
• The filename is the last argument for a lot of UNIX commands. It is simply the file or files that you want the command to work on. Not all commands work on files, such as ssh, which takes the name of a host as its argument.

Common UNIX Conventions

• In UNIX, the command is almost always entered in all lowercase characters.
• Typically any options come before filenames.
• Many times, individual options may need a word after them to designate some additional meaning to the command.

Familiar Commands: scp (Secure CoPy)

• The scp command is a way to copy files back and forth between multiple computers.

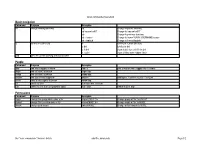

Linux Command Cheat Sheet

Linux commands cheat sheet

Basic navigation

| Command | Purpose | Examples |
| --- | --- | --- |
| cd | change working directory | cd .. (change to parent directory); cd /course/cs017 (change to /course/cs017); cd - (change to previous directory); cd ~/course (change to /home/YOUR_USERNAME/course); cd ~dap/pub (change to /home/dap/pub) |
| ls | list files in a directory | ls (list files in current directory); ls dir1 (list files in dir1); ls -l dir1 (show details for each file in dir1); ls -a dir1 (show all files, even hidden files) |
| pwd | print the current working directory's name | |

People

| Command | Purpose | Examples |
| --- | --- | --- |
| floor | see who's logged in where | floor ta (print a map of who's logged into TA areas) |
| finger | find out about a person | finger dap |
| snoop | find out about a person | snoop dap |
| anyone | find your friends logged in | anyone (add logins, 1 per line, to your ~/.anyone) |
| zwrite | send a message to a person | zwrite dap |
| su | switch to user | Don't use this. Use sux. |
| sux | switch to user (can use graphical apps) | sux - dap (switch to user 'dap') |

Permissions

| Command | Purpose | Examples |
| --- | --- | --- |
| chgrp | change the group which owns a file | chgrp GROUP file (change group of 'file' to GROUP) |
| chmod | change the mode (permissions) of a file | chmod MODE file (change mode of 'file' to MODE) |
| umask | change your umask | umask MODE (change default umask to MODE) |

Use "man <command>" for more details.

Specific file types

| Command | Purpose |
| --- | --- |
| acroread | Views pdf files |
| xpdf | Views pdf files |
| xdvi | Views dvi files |
| gv | Views postscript files (.ps) |
| gzip/gunzip | Gzip compression |
| zip/unzip | Windows-compatible compression |

Miscellaneous

Doing More in UNIX: Command-Line Tools HORT 59000 Lecture 3 Instructor: Kranthi Varala UNIX Features

Doing more in UNIX: Command-line tools. HORT 59000, Lecture 3. Instructor: Kranthi Varala

UNIX features

• Command-line based.
• Supports thousands of small programs running simultaneously.
• Easy to create pipelines from individual programs.
• Each command has 3 Input/Output streams: STDIN, STDOUT, STDERR.
• Be aware of your location in the file system and permissions.

Using a compute node interactively

1. ssh <yourID>@scholar.rcac.purdue.edu: Log in to the head node.
2. qsub -I -l nodes=1:ppn=2,walltime=02:00:00: Log in to the compute node to run jobs interactively.

Controlling processes

• Foreground: Default mode for running commands. The shell waits on the process to finish.
• Process retains control of the command line.
• Key input is directed to the active process.
• Background: Process is initiated and pushed to the background.
• Control of command-line is returned to the user.
• Key input and other interactions are no longer passed to the process.
• Processes can be pushed to background at initiation using &
• E.g., cat North_of_Boston.txt &

Stopping a process

• Active processes can be stopped or terminated (killed) using signals such as SIGSTOP and SIGKILL.
• In a UNIX shell, ctrl+z sends SIGTSTP, the terminal's counterpart of SIGSTOP.
• Once a process receives the stop signal it is suspended and the job number [j] is shown.
• Suspended processes can be pushed to the background using the command bg %j.

Killing a process

• In a UNIX shell, ctrl+c sends SIGINT, which by default terminates the foreground process and returns control to the user.
• SIGKILL kills the process immediately and cannot be caught or ignored; it is sent with the kill command (kill -KILL).
• Stopped processes can be killed using the kill command.
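The stop, resume and kill sequence from these slides can also be driven from a script. The following is a minimal POSIX-only sketch using Python's standard `subprocess` and `signal` modules; the function name `stop_resume_kill` and the sleeping child process are hypothetical stand-ins for a real long-running job.

```python
import signal
import subprocess
import sys


def stop_resume_kill():
    """Start a sleeping child, suspend it, resume it, then kill it; return its exit status."""
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    child.send_signal(signal.SIGSTOP)   # suspend; cannot be caught or ignored
    child.send_signal(signal.SIGCONT)   # resume, as `bg` or `fg` would in a shell
    child.send_signal(signal.SIGKILL)   # terminate immediately
    return child.wait()


exit_status = stop_resume_kill()
print(exit_status)
```

On POSIX systems `Popen.wait()` reports a child killed by a signal as the negative signal number, so the status here identifies SIGKILL as the cause of termination.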