Bureaucrat Time-Use and Productivity: Evidence from a Survey Experiment

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Districts of Ethiopia

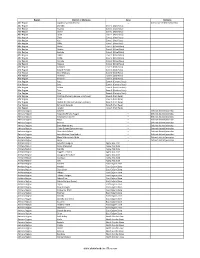

| Region | District or Woredas | Zone | Remarks |
|---|---|---|---|
| Afar Region | Argobba Special Woreda | -- | Independent district/woredas |
| Afar Region | Afambo | Zone 1 (Awsi Rasu) | |
| Afar Region | Asayita | Zone 1 (Awsi Rasu) | |
| Afar Region | Chifra | Zone 1 (Awsi Rasu) | |
| Afar Region | Dubti | Zone 1 (Awsi Rasu) | |
| Afar Region | Elidar | Zone 1 (Awsi Rasu) | |
| Afar Region | Kori | Zone 1 (Awsi Rasu) | |
| Afar Region | Mille | Zone 1 (Awsi Rasu) | |
| Afar Region | Abala | Zone 2 (Kilbet Rasu) | |
| Afar Region | Afdera | Zone 2 (Kilbet Rasu) | |
| Afar Region | Berhale | Zone 2 (Kilbet Rasu) | |
| Afar Region | Dallol | Zone 2 (Kilbet Rasu) | |
| Afar Region | Erebti | Zone 2 (Kilbet Rasu) | |
| Afar Region | Koneba | Zone 2 (Kilbet Rasu) | |
| Afar Region | Megale | Zone 2 (Kilbet Rasu) | |
| Afar Region | Amibara | Zone 3 (Gabi Rasu) | |
| Afar Region | Awash Fentale | Zone 3 (Gabi Rasu) | |
| Afar Region | Bure Mudaytu | Zone 3 (Gabi Rasu) | |
| Afar Region | Dulecha | Zone 3 (Gabi Rasu) | |
| Afar Region | Gewane | Zone 3 (Gabi Rasu) | |
| Afar Region | Aura | Zone 4 (Fantena Rasu) | |
| Afar Region | Ewa | Zone 4 (Fantena Rasu) | |
| Afar Region | Gulina | Zone 4 (Fantena Rasu) | |
| Afar Region | Teru | Zone 4 (Fantena Rasu) | |
| Afar Region | Yalo | Zone 4 (Fantena Rasu) | |
| Afar Region | Dalifage (formerly known as Artuma) | Zone 5 (Hari Rasu) | |
| Afar Region | Dewe | Zone 5 (Hari Rasu) | |
| Afar Region | Hadele Ele (formerly known as Fursi) | Zone 5 (Hari Rasu) | |
| Afar Region | Simurobi Gele'alo | Zone 5 (Hari Rasu) | |
| Afar Region | Telalak | Zone 5 (Hari Rasu) | |
| Amhara Region | Achefer | -- | Defunct district/woredas |
| Amhara Region | Angolalla Terana Asagirt | -- | Defunct district/woredas |
| Amhara Region | Artuma Fursina Jile | -- | Defunct district/woredas |
| Amhara Region | Banja | -- | Defunct district/woredas |
| Amhara Region | Belessa | -- | |

Ethiopia Early Grade Reading Assessment Regional Findings Annex

Ethiopia Early Grade Reading Assessment Regional Findings Annex. Ed Data II Task Number 7 and Ed Data II Task Number 9. October 15, 2010. This publication was produced for review by the United States Agency for International Development. It was prepared by RTI International and the Center for Development Consulting. Prepared for USAID/Ethiopia. Prepared by: Benjamin Piper, RTI International, 3040 Cornwallis Road, Post Office Box 12194, Research Triangle Park, NC 27709-2194. RTI International is a trade name of Research Triangle Institute. The authors’ views expressed in this publication do not necessarily reflect the views of the United States Agency for International Development or the United States Government. Table of Contents: List of Figures; List of Tables; Regional Analysis Annex; 1. Tigray Region EGRA Scores; 2. Amhara Region EGRA Scores; 3. Oromiya Region EGRA Scores -

African Journal of Plant Science

OPEN ACCESS. African Journal of Plant Science, May 2020. ISSN 1996-0824. DOI: 10.5897/AJPS. www.academicjournals.org. About AJPS: African Journal of Plant Science (AJPS) provides rapid publication (monthly) of articles in all areas of Plant Science and Botany. The Journal welcomes the submission of manuscripts that meet the general criteria of significance and scientific excellence. Papers will be published shortly after acceptance. All articles published in AJPS are peer-reviewed. Indexing: The African Journal of Plant Science is indexed in CAB Abstracts, CABI’s Global Health Database, Chemical Abstracts (CAS Source Index), China National Knowledge Infrastructure (CNKI), Dimensions Database, Google Scholar, Matrix of Information for The Analysis of Journals (MIAR), and Microsoft Academic. AJPS has an h5-index of 12 on Google Scholar Metrics. Open Access Policy: Open Access is a publication model that enables the dissemination of research articles to the global community without restriction through the internet. All articles published under open access can be accessed by anyone with an internet connection. The African Journal of Plant Science is an Open Access journal. Abstracts and full texts of all articles published in this journal are freely accessible to everyone immediately after publication without any form of restriction. Article License: All articles published by African Journal of Plant Science are licensed under the Creative Commons Attribution 4.0 International License. This permits anyone to copy, redistribute, remix, transmit and adapt the -

On-Farm Phenotypic Characterization And

ON-FARM PHENOTYPIC CHARACTERIZATION AND CONSUMER PREFERENCE TRAITS OF INDIGENOUS SHEEP TYPE AS AN INPUT FOR DESIGNING COMMUNITY BASED BREEDING PROGRAM IN BENSA DISTRICT, SOUTHERN ETHIOPIA. MSc THESIS. HIZKEL KENFO DANDO. FEBRUARY 2017. HARAMAYA UNIVERSITY, HARAMAYA. A Thesis Submitted to the School of Animal and Range Sciences, Directorate for Post Graduate Program, HARAMAYA UNIVERSITY, In Partial Fulfilment of the Requirements for the Degree of MASTER OF SCIENCE IN ANIMAL GENETICS AND BREEDING. HARAMAYA UNIVERSITY DIRECTORATE FOR POST GRADUATE PROGRAM: We hereby certify that we have read and evaluated this Thesis entitled “On-Farm Phenotypic Characterization and Consumer Preference Traits of Indigenous Sheep Type as an Input for Designing Community Based Breeding Program in Bensa District, Southern Ethiopia” prepared under our guidance by Hizkel Kenfo Dando. We recommend that it be submitted as fulfilling the Thesis requirement. Yoseph Mekasha (PhD), Major Advisor. Yosef Tadesse (PhD), Co-Advisor. As a member of the Board of Examiners of the MSc Thesis Open Defense Examination, we certify that we have read and evaluated the Thesis prepared by Hizkel Kenfo Dando and examined the candidate. We recommend -

WCBS III Supply Side Report 1

Federal Democratic Republic of Ethiopia, Ministry of Capacity Building in Collaboration with PSCAP Donors. “Woreda and City Administrations Benchmarking Survey III”, Supply Side Report. Survey of Service Delivery Satisfaction Status. Final. Addis Ababa, July 2010. ACKNOWLEDGEMENT: The survey work was led and coordinated by Berhanu Legesse (AFTPR, World Bank) and Ato Tesfaye Atire from the Ministry of Capacity Building. The supply side was designed and its analysis produced by Dr. Alexander Wagner, while the data was collected by the Selam Development Consultants firm with quality control from Mr. Sebastian Jilke. The survey was sponsored through PSCAP’s multi-donor trust fund facility financed by DFID and CIDA and managed by the World Bank. All stages of the survey work were evaluated and guided by a steering committee comprising representatives from the Ministry of Capacity Building, the Central Statistical Agency, the World Bank, DFID, and CIDA. Large thanks are due to the Regional Bureaus of Capacity Building and all PSCAP executing agencies, as well as the PSCAP Support Project team in the World Bank and in the participating donors, for their inputs in the production of this analysis. Without them, it would have been impossible to produce. Table of Contents: 1 Executive Summary; 1.1 Key results by thematic areas; 1.1.1 Local government finance -

Essays on Labour Economics in Developing Countries and the Role of the Public Sector

Essays on Labour Economics in Developing Countries and the Role of the Public Sector. Ravi Somani. December 2018. A dissertation submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy, University College London, Department of Economics. Declaration: I, Ravi Somani, confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been indicated in the thesis. Abstract: This thesis presents a study of the internal functions of public-sector organisations in developing countries and how public-sector hiring policies interact with the wider economy, using Ethiopia as a case study. The first chapter introduces the thesis. The second chapter provides empirical evidence on how public-sector hiring policies impact education choices and private-sector productivity. I study the expansion of universities in Ethiopia and find that it leads to an increase in public-sector productivity but a decrease in private-sector productivity. The findings are rationalised by the following labour-market conditions, consistent with most developing country contexts: (i) entry into public employment is favoured towards those with a tertiary education; (ii) the public sector provides a large wage premium; (iii) entry into public employment requires costly search effort. The third chapter uses novel survey instruments and administrative data to measure the individual-level information that bureaucrats have on local conditions and a field experiment to provide exogenous variation in information access. Civil servants make large errors and information acquisition is consistent with classical theoretical predictions. -

18 Policy Research Working Paper 8644

Policy Research Working Paper 8644. Hierarchy and Information. Daniel Rogger, Ravi Somani. Development Economics, Development Research Group, November 2018. Abstract: The information that public officials use to make decisions determines the distribution of public resources and the efficacy of public policy. This paper develops a measurement framework for assessing the accuracy of a set of fundamental bureaucratic beliefs and provides experimental evidence on the possibility of ‘evidence briefings’ improving that accuracy. The errors of public officials are large, with 49 percent of officials making errors that are at least 50 percent of objective benchmark data. The provision of briefings reduces these errors by a quarter of a standard deviation, but in line with theoretical predictions, organizational incentives mediate their effectiveness. This paper is a product of the Development Research Group, Development Economics. It is part of a larger effort by the World Bank to provide open access to its research and make a contribution to development policy discussions around the world. Policy Research Working Papers are also posted on the Web at http://www.worldbank.org/research. The authors may be contacted at [email protected]. The Policy Research Working Paper Series disseminates the findings of work in progress to encourage the exchange of ideas about development issues. An objective of the series is to get the findings out quickly, even if the presentations are less than fully polished. The papers carry the names of the authors and should be cited accordingly. -

On Farm Phenotypic Characterization of Indigenous Sheep Type in Bensa District, Southern Ethiopia

Journal of Biology, Agriculture and Healthcare, www.iiste.org, ISSN 2224-3208 (Paper), ISSN 2225-093X (Online), Vol.7, No.19, 2017. On Farm Phenotypic Characterization of Indigenous Sheep Type in Bensa District, Southern Ethiopia. Hizkel Kenfo (1), Yoseph Mekasha (2), Yosef Tadesse (3). 1. Southern Agricultural Research Institute (SARI), P.O. Box 6, Hawassa, Ethiopia. 2. International Livestock Research Institute (ILRI), P.O. Box 5689, Addis Ababa, Ethiopia. 3. Haramaya University (HU), School of Animal and Range Sciences, P.O. Box 138, Dire Dawa, Ethiopia. Abstract: The study was carried out in Bensa district of Sidama Zone, southern Ethiopia, with the objective of characterizing the indigenous sheep type with respect to morphological characteristics and physical linear traits. A total of 574 sheep were sampled randomly and stratified into sex and dentition groups (0PPI, 1PPI, 2PPI, 3PPI). Both qualitative and quantitative data were analyzed using SAS version 9.1.3 (2008). The most frequently observed coat color pattern of sampled male and female sheep populations was patchy (51.9%), while the most observed coat color type was red, followed by a mixture of red and brown. Sex of the sheep had a significant (p<0.05) effect on body weight and linear body measurements except ear length, pelvic width, tail length and rump length. Dentition classes of sheep contributed significant differences to body weight and the linear body measurements except ear length. The correlation coefficients between body weight and other linear body measurements were positive and significant (p<0.05) for both male and female sheep. The result of the multiple regression analysis showed that chest girth alone could accurately predict body weight in both females and males of the sampled population of indigenous sheep, with the equation y = -20 + 0.67x for females and y = -29 + 0.8x for males, where y and x are body weight and chest girth, respectively. -
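
The two regression equations quoted in this abstract can be applied directly, as in the short sketch below. It is illustrative only: the function name `predict_body_weight` and the dictionary layout are hypothetical, and the units (presumably kilograms for body weight and centimetres for chest girth) are an assumption, since the excerpt does not state them.

```python
# Coefficients (intercept, slope) as quoted in the abstract: y = a + b * x,
# where y is body weight and x is chest girth.
# Units are NOT stated in the excerpt; kg and cm are assumed here.
COEFFICIENTS = {
    "female": (-20.0, 0.67),
    "male": (-29.0, 0.8),
}


def predict_body_weight(chest_girth, sex):
    """Return the body weight predicted from chest girth for the given sex."""
    try:
        intercept, slope = COEFFICIENTS[sex]
    except KeyError:
        raise ValueError(f"sex must be one of {sorted(COEFFICIENTS)}, got {sex!r}")
    return intercept + slope * chest_girth


if __name__ == "__main__":
    # Hypothetical chest girth values, chosen only to exercise the function.
    for sex, girth in [("female", 70.0), ("male", 70.0)]:
        print(sex, girth, round(predict_body_weight(girth, sex), 2))
```

Keeping the coefficients in a lookup table rather than in two separate functions makes it easy to see that the equations differ only in intercept and slope.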

Ethiopia Census 2007

Table 1: POPULATION SIZE OF REGIONS BY SEX AND PLACE OF RESIDENCE: 2007

| Region | Sex | Urban + Rural No. | Urban + Rural % | Urban No. | Urban % | Rural No. | Rural % |
|---|---|---|---|---|---|---|---|
| COUNTRY TOTAL * | Both Sexes | 73,918,505 | 100.00 | 11,956,170 | 100.00 | 61,962,335 | 100.00 |
| COUNTRY TOTAL * | Male | 37,296,657 | 50.46 | 5,942,170 | 49.70 | 31,354,487 | 50.60 |
| COUNTRY TOTAL * | Female | 36,621,848 | 49.54 | 6,014,000 | 50.30 | 30,607,848 | 49.40 |
| TIGRAY Region | Both Sexes | 4,314,456 | 100.00 | 842,723 | 100.00 | 3,471,733 | 100.00 |
| TIGRAY Region | Male | 2,124,853 | 49.25 | 398,072 | 47.24 | 1,726,781 | 49.74 |
| TIGRAY Region | Female | 2,189,603 | 50.75 | 444,651 | 52.76 | 1,744,952 | 50.26 |
| AFFAR Region * | Both Sexes | 1,411,092 | 100.00 | 188,973 | 100.00 | 1,222,119 | 100.00 |
| AFFAR Region * | Male | 786,338 | 55.73 | 100,915 | 53.40 | 685,423 | 56.08 |
| AFFAR Region * | Female | 624,754 | 44.27 | 88,058 | 46.60 | 536,696 | 43.92 |
| AMHARA Region | Both Sexes | 17,214,056 | 100.00 | 2,112,220 | 100.00 | 15,101,836 | 100.00 |
| AMHARA Region | Male | 8,636,875 | 50.17 | 1,024,136 | 48.49 | 7,612,739 | 50.41 |
| AMHARA Region | Female | 8,577,181 | 49.83 | 1,088,084 | 51.51 | 7,489,097 | 49.59 |
| OROMIYA Region | Both Sexes | 27,158,471 | 100.00 | 3,370,040 | 100.00 | 23,788,431 | 100.00 |
| OROMIYA Region | Male | 13,676,159 | 50.36 | 1,705,316 | 50.60 | 11,970,843 | 50.32 |
| OROMIYA Region | Female | 13,482,312 | 49.64 | 1,664,724 | 49.40 | 11,817,588 | 49.68 |
| SOMALI Region * | Both Sexes | 4,439,147 | 100.00 | 621,210 | 100.00 | 3,817,937 | 100.00 |
| SOMALI Region * | Male | 2,468,784 | 55.61 | 339,343 | 54.63 | 2,129,441 | 55.77 |
| SOMALI Region * | Female | 1,970,363 | 44.39 | 281,867 | 45.37 | 1,688,496 | 44.23 |
| BENISHANGUL-GUMUZ Region | Both Sexes | 670,847 | 100.00 | 97,965 | 100.00 | 572,882 | 100.00 |
| BENISHANGUL-GUMUZ Region | Male | 340,378 | 50.74 | 49,784 | 50.82 | 290,594 | 50.72 |
| BENISHANGUL-GUMUZ Region | Female | 330,469 | 49.26 | 48,181 | 49.18 | 282,288 | 49.28 |
| SNNP Region | Both Sexes | 15,042,531 | 100.00 | 1,545,710 | 100.00 | 13,496,821 | 100.00 |
| SNNP Region | Male | 7,482,051 | 49.74 | 797,796 | 51.61 | 6,684,255 | 49.52 |
| SNNP Region | Female | 7,560,480 | 50.26 | 747,914 | 48.39 | 6,812,566 | |

Urban Water Supply Universal Access Plan.Pdf

Federal Democratic Republic of Ethiopia, Ministry of Water and Energy. PART III: Urban Water Supply Universal Access Plan (UWSPUAP) 2011-2015. December 2011, Addis Ababa. URBAN WATER SUPPLY UAP. Table of Contents: Executive Summary; 1. Introduction; 2. Background; 3. The Urban UAP model and Assumptions; 4. Sector policy and Strategy; 5. Cross cutting Issues; 6. Physical Plan; 7. Financial Plan; 7.1. Financial plan for Project Implementation -

Federal Democratic Republic of Ethiopia National Disaster Risk Management Commission, Early Warning and Emergency Response Directorate

Federal Democratic Republic of Ethiopia, National Disaster Risk Management Commission, Early Warning and Emergency Response Directorate. Flood Alert #3, June 2018. (Cover photo: Landslide incident in Oromia region, 29 May 2018.) FLOOD ALERT INTRODUCTION: In April 2018, the NDRMC-led multi-sector National Flood Task Force issued the first Flood Alert based on the National Meteorology Agency (NMA) Mid-Season Forecast for the belg/gu/ganna season (April to May 2018). Subsequently, on 20 May, the National Flood Task Force updated and issued a second Flood Alert based on the monthly NMA weather update for the month of May 2018, which indicated a geographic shift in rainfall from the southeastern parts of Ethiopia (Somali region) towards the western, central and some parts of northern Ethiopia, including southern Oromia, some parts of SNNPR, Amhara, Gambella, Afar and Tigray, during the month of May 2018. On 29 May, the NMA issued a new weather forecast for the 2018 kiremt season indicating that many areas of northern, northeastern, central, western, southwestern, eastern and adjoining rift valleys are expected to receive dominantly above normal rainfall. In addition, southern highlands and southern Ethiopia are likely to receive normal to above normal rainfall activity, while normal rainfall activity is expected over pocket areas of northwestern Ethiopia. It is also likely that occasional heavy rainfall may inundate low-lying areas in and around river basins. The Flood Alert has therefore been revised for the third time to provide updated information on the probable weather conditions for the 2018 kiremt season and to identify areas likely to be affected in the country, in order to prompt timely mitigation, preparedness and response measures. -

Ethiopia's Fifth National Health Accounts, 2010/2011

Federal Democratic Republic of Ethiopia, Ministry of Health. Ethiopian Health Accounts Household Health Service Utilization and Expenditure Survey 2015/16. August 2017, Addis Ababa. Ethiopia’s Fifth National Health Accounts, 2010/2011. April 2014, Addis Ababa. Additional information about the 2015/2016 Ethiopian Health Accounts Household Health Service Utilization and Expenditure Survey may be obtained from the Federal Democratic Republic of Ethiopia Ministry of Health, Resource Mobilization Directorate, Lideta Sub City, Addis Ababa, Ethiopia. P.O. Box: 1234; Telephone: +251115517011/535157; Fax: +251115527033; Email: [email protected]; website: http://www.moh.gov.et. Recommended Citation: Federal Democratic Republic of Ethiopia Ministry of Health. August 2017. Ethiopian Health Accounts Household Health Service Utilization and Expenditure Survey 2015/2016, Addis Ababa, Ethiopia. B.I.C. Breakthrough International Consultancy PLC. Acknowledgements: The FDRE Ministry of Health would like to express its gratitude to all institutions and individuals involved in data collection and analysis, and in the writing up of this household health service utilization and expenditure survey. The Ministry is grateful for the work done by Breakthrough International Consultancy PLC and Fenot Project (Harvard T.H. Chan School of Public Health) in conducting this household survey.
The Ministry also acknowledges the Central Statistics Agency (CSA) for selecting sample enumeration areas (EAs) and sharing the cartographic map and list of EAs, which were critical for the successful completion of the household survey, and the Ethiopian Public Health Institute (EPHI) for checking the quality of data while data was being submitted directly from the enumeration areas to the institute’s cloud server.