A Survey on the Challenges of Implementing Physical Memory Pools

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Novel Memory and I/O Virtualization Techniques for Next Generation Data-Centers Based on Disaggregated Hardware Maciej Bielski

Novel memory and I/O virtualization techniques for next generation data-centers based on disaggregated hardware. Maciej Bielski. To cite this version: Maciej Bielski. Novel memory and I/O virtualization techniques for next generation data-centers based on disaggregated hardware. Hardware Architecture [cs.AR]. Université Paris Saclay (COmUE), 2019. English. NNT: 2019SACLT022. tel-02464021. HAL Id: tel-02464021, https://pastel.archives-ouvertes.fr/tel-02464021. Submitted on 2 Feb 2020. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. French title: Nouvelles techniques de virtualisation de la mémoire et des entrées-sorties vers les périphériques pour les prochaines générations de centres de traitement de données basés sur des équipements répartis déstructurés. Doctoral thesis of Université Paris-Saclay, prepared at Télécom ParisTech. Doctoral school no. 580, Sciences et Technologies de l'Information et de la Communication (STIC). Doctoral specialty: Computer Science. Thesis presented and defended at Biot Sophia Antipolis on 18/03/2019, -

Analysis of Wasted Computing Resources in Data Centers in Terms of CPU, RAM And

Review Article. iMedPub Journals, http://www.imedpub.com. American Journal of Computer Science and Engineering Survey, 2020, Vol. 8 No. 2:8. DOI: 10.36648/computer-science-engineering-survey.08.02.08. Analysis of Wasted Computing Resources in Data Centers in terms of CPU, RAM and HDD. Robert Method Karamagi1* and Said Ally2. 1 Department of Information & Communication Technology, The Open University of Tanzania, Dar es salaam, Tanzania. 2 Department of Science, Technology and Environmental Studies, The Open University of Tanzania, Dar es salaam, Tanzania. *Corresponding author: Robert Method Karamagi, Department of Information & Communication Technology, The Open University of Tanzania, Dar es salaam, Tanzania, E-mail: robertokaramagi@gmail.com. Received: September 16, 2020; Accepted: September 30, 2020; Published: October 07, 2020. Citation: Karamagi RM, Ally S (2020) Analysis of Wasted Computing Resources in Data… Abstract: Despite the vast benefits offered by the data center computing systems, the efficient analysis of the wasted computing resources is vital. In this paper we shall analyze the amount of wasted computing resources in terms of CPU, RAM and HDD. Keywords: Virtualization; CPU: Central Processing Unit; RAM: Random Access Memory; HDD: Hard Disk Drive. Introduction: A data center contains tens of thousands of server machines located in a single warehouse to reduce operational and capital costs. Within a single data center, modern applications are typically deployed over multiple servers to cope with their resource requirements. The task of allocating resources to applications is referred to as resource management. In particular, resource management aims at allocating cloud resources in a way to align with application performance requirements and to reduce the operational cost of the hosted data center. -

Towards Virtual Infiniband Clusters with Network and Performance

Towards Virtual InfiniBand Clusters with Network and Performance Isolation. Diploma thesis by cand. inform. Marius Hillenbrand, Department of Informatics (Fakultät für Informatik). First reviewer: Prof. Dr. Frank Bellosa. Supervising staff: Dr.-Ing. Jan Stoess, Dipl.-Phys. Viktor Mauch. Working period: 17 December 2010 – 16 June 2011. KIT – University of the State of Baden-Württemberg and National Research Center of the Helmholtz Association, www.kit.edu. Abstract: Today’s high-performance computing clusters (HPC) are typically operated and used by a single organization. Demand is fluctuating, resulting in periods of underutilization or overload. In addition, the static OS installation on cluster nodes leaves hardly any room for customization. The concepts of cloud computing transferred to HPC clusters (that is, an Infrastructure-as-a-Service (IaaS) model for HPC computing) promise increased flexibility and cost savings. Elastic virtual clusters provide precisely the capacity that suits actual demand and workload. Elasticity and flexibility come at a price, however: virtualization overhead, jitter, and additional OS background activity can severely reduce parallel application performance. In addition, HPC workloads typically require distinct cluster interconnects, such as InfiniBand, because of the features they provide, mainly low latency. General-purpose clouds with virtualized Ethernet fail to fulfill these requirements. In this work, we present a novel architecture for HPC clouds. Our architecture comprises the facets node virtualization, network virtualization, and cloud management. We raise the question of whether a commodity hypervisor (the kernel-based virtual machine, KVM, on Linux) can be transformed to provide virtual cluster nodes, that is, virtual machines (VMs) intended for HPC workloads. We provide a concept for cluster network virtualization, using the example of InfiniBand, that provides each user with the impression of using a dedicated network. -

Cloud Computing Principles, Systems and Applications.Pdf

Computer Communications and Networks. Nick Antonopoulos, Lee Gillam (Editors). Cloud Computing Principles, Systems and Applications. Second Edition. Series editor: A.J. Sammes, Centre for Forensic Computing, Cranfield University, Shrivenham Campus, Swindon, UK. The Computer Communications and Networks series is a range of textbooks, monographs and handbooks. It sets out to provide students, researchers, and non-specialists alike with a sure grounding in current knowledge, together with comprehensible access to the latest developments in computer communications and networking. Emphasis is placed on clear and explanatory styles that support a tutorial approach, so that even the most complex of topics is presented in a lucid and intelligible manner. More information about this series at http://www.springer.com/series/4198. Editors: Nick Antonopoulos, University of Derby, Derby, Derbyshire, UK; Lee Gillam, University of Surrey, Guildford, Surrey, UK. ISSN 1617-7975, ISSN 2197-8433 (electronic). ISBN 978-3-319-54644-5, ISBN 978-3-319-54645-2 (eBook). DOI 10.1007/978-3-319-54645-2. Library of Congress Control Number: 2017942811. 1st edition: © Springer-Verlag London Limited 2010. © Springer International Publishing AG 2017. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. -

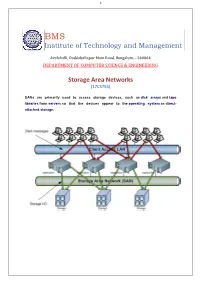

Institute of Technology and Management Storage Area Networks

BMS Institute of Technology and Management, Avalahalli, Doddaballapur Main Road, Bengaluru – 560064. DEPARTMENT OF COMPUTER SCIENCE & ENGINEERING. Storage Area Networks (17CS754). SANs are primarily used to access storage devices, such as disk arrays and tape libraries, from servers so that the devices appear to the operating system as direct-attached storage. STORAGE AREA NETWORKS [As per Choice Based Credit System (CBCS) scheme] (Effective from the academic year 2017 - 2018). SEMESTER – VII. Subject Code: 17CS754; IA Marks: 40; Number of Lecture Hours/Week: 3; Exam Marks: 60; Total Number of Lecture Hours: 40; Exam Hours: 03; CREDITS – 03. Module – 1 (Teaching Hours: 8): Storage System. Introduction to evolution of storage architecture, key data centre elements, virtualization, and cloud computing. Key data centre elements: host (or compute), connectivity, storage, and application in both classic and virtual environments. RAID implementations, techniques, and levels along with the impact of RAID on application performance. Components of intelligent storage systems and virtual storage provisioning and intelligent storage system implementations. Module – 2 (Teaching Hours: 8): Storage Networking Technologies and Virtualization. Fibre Channel SAN components, connectivity options, and topologies including access protection mechanism "zoning", FC protocol stack, addressing and operations, SAN-based virtualization and VSAN technology, iSCSI and FCIP (Fibre Channel over IP) protocols for storage access over IP network, converged protocol FCoE and its components, Network Attached Storage (NAS): components, protocol and operations, file level storage virtualization, object based storage and unified storage platform. Module – 3 (Teaching Hours: 8): Backup, Archive, and Replication. This unit focuses on information availability and business continuity solutions in both virtualized and non-virtualized environments. -

Younge Phd-Thesis.Pdf

ARCHITECTURAL PRINCIPLES AND EXPERIMENTATION OF DISTRIBUTED HIGH PERFORMANCE VIRTUAL CLUSTERS, by Andrew J. Younge. Submitted to the faculty of Indiana University in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Computer Science, Indiana University, October 2016. Copyright © 2016 Andrew J. Younge. All Rights Reserved. INDIANA UNIVERSITY GRADUATE COMMITTEE APPROVAL of a dissertation submitted by Andrew J. Younge. This dissertation has been read by each member of the following graduate committee and by majority vote has been found to be satisfactory: Geoffrey C. Fox, Ph.D, Chair; Judy Qiu, Ph.D; Thomas Sterling, Ph.D; D. Martin Swany, Ph.D. ABSTRACT: With the advent of virtualization and Infrastructure-as-a-Service (IaaS), the broader scientific computing community is considering the use of clouds for their scientific computing needs. This is due to the relative scalability, ease of use, advanced user environment customization abilities, and the many novel computing paradigms available for data-intensive applications. However, a notable performance gap exists between IaaS and typical high performance computing (HPC) resources. This has limited the applicability of IaaS for many potential users, not only for those who look to leverage the benefits of virtualization with traditional scientific computing applications, but also for the growing number of big data scientists whose platforms are unable to build on HPC's advanced hardware resources. Concurrently, we are at the forefront of a convergence in infrastructure between Big Data and HPC, the implications of which suggest that a unified distributed computing architecture could provide computing and storage capabilities for both differing distributed systems use cases. -

Grid and Cloud Computing

CS6703 – GRID AND CLOUD COMPUTING. M.I.E.T. ENGINEERING COLLEGE (Approved by AICTE and Affiliated to Anna University Chennai), TRICHY – PUDUKKOTTAI ROAD, TIRUCHIRAPPALLI – 620 007. DEPARTMENT OF COMPUTER SCIENCE AND ENGINEERING. COURSE MATERIAL. M.I.E.T./CSE/IV YR/GRID AND CLOUD COMPUTING. SYLLABUS (THEORY). Sub. Code: CS6703; Branch/Year/Sem: CSE/IV/VII; Sub Name: GRID AND CLOUD COMPUTING; Batch: 2015-2019; Staff Name: A.BARVEEN; Academic Year: 2018-2019. L T P C: 3 0 0 3. UNIT I INTRODUCTION (9): Evolution of Distributed computing: Scalable computing over the Internet – Technologies for network based systems – clusters of cooperative computers – Grid computing Infrastructures – cloud computing – service oriented architecture – Introduction to Grid Architecture and standards – Elements of Grid – Overview of Grid Architecture. UNIT II GRID SERVICE (9): Introduction to Open Grid Services Architecture (OGSA) – Motivation – Functionality Requirements – Practical & Detailed view of OGSA/OGSI – Data intensive grid service models – OGSA services. UNIT III VIRTUALIZATION (9): Cloud deployment models: public, private, hybrid, community – Categories of cloud computing: Everything as a service: Infrastructure, platform, software – Pros and Cons of cloud computing – Implementation levels of virtualization – virtualization -

International Journal of Advance Research in Computer Science And

ISSN: 2321-7782 (Online). Volume 2, Issue 4, April 2014. International Journal of Advance Research in Computer Science and Management Studies. Research Article / Paper / Case Study. Available online at: www.ijarcsms.com. Role of virtualization in cloud computing. Kamyab Khajehei, Islamic Azad University, Dashtestan Branch, Borazjan, Iran. Abstract: Cloud computing is currently one of the hottest areas of research because of major issues such as reducing costs, as well as its scalability and flexibility in computer services. When we talk about cloud computing technology and its characteristics, such as high availability or resource pooling, or about different instances in cloud computing, we should not forget how these are achieved in cloud technology. Virtualization technology (server, network and storage virtualization) is not a new concept in hardware and software development; it is used for developing new concepts like cloud computing technology in an IT global application. Keywords: Cloud Computing; Virtualization technology; Server Virtualization; Network Virtualization; Storage Virtualization. I. INTRODUCTION: These days software developers are creating packages based on cloud computing technology, and for cloud providers it is a good opportunity to use strobe bond systems with low cost [1]. Cloud computing is internet-based computing with the ability to share resources (hardware, software, storage, network) on demand and dynamically, but most IT people overlook one point: the evolution of cloud computing would not have been possible without the existence of other technologies such as virtualization. We can see the footprint of virtualization technology in most of the major abilities provided by cloud computing technology. -

Lightweight and Generic RDMA Engine Para-Virtualization for the KVM Hypervisor

Lightweight and Generic RDMA Engine Para-Virtualization for the KVM Hypervisor. Angelos Mouzakitis, Christian Pinto, Nikolay Nikolaev, Alvise Rigo, Daniel Raho (Virtual Open Systems, 17, Rue Lakanal, Grenoble, France); Babis Aronis, Manolis Marazakis (Foundation for Research and Technology – Hellas (FORTH), Nikolaou Plastira 100, Heraklion, Crete, Greece). Abstract: Remote DMA (RDMA) engines are widely used in clusters/data-centres to improve the performance of data transfers between applications running on different nodes of a computing system. RDMAs are today supported by most network architectures and distributed programming models. However, with the massive usage of virtualization most applications will use RDMAs from virtual machines, and the virtualization of such I/O devices poses several challenges. This paper describes a generic para-virtualization framework based on API Remoting, providing at the same time the flexibility of software based virtualization, and the low overhead of hardware-assisted solutions. The solution presented in this paper is targeting the KVM hypervisor, but is not bound to any target network… Introduction: …application instances. In parallel, large clusters/data-centres are extensively relying on virtualization as a tool for improved utilization of system resources (e.g., memory, disk, CPU) and hardware consolidation. This is achieved by running multiple virtual instances of a system on the same hardware machine. In addition virtualization is used for resilience thanks to facilities like virtual machines live migration and snapshots. The virtualization of an I/O peripheral such as an RDMA can be implemented mainly in the following ways: direct device pass-through, exploiting hardware support from the hardware with PCI Single-Root I/O Virtualization (SR-IOV) [10,4] or by para-virtualization [14]. -

Fast Local Page-Tables for Virtualized NUMA Servers with vMitosis

Fast Local Page-Tables for Virtualized NUMA Servers with vMitosis. Ashish Panwar (Indian Institute of Science), Reto Achermann (University of British Columbia), Arkaprava Basu (Indian Institute of Science), Abhishek Bhattacharjee (Yale University), K. Gopinath (Indian Institute of Science), Jayneel Gandhi (VMware Research). ABSTRACT: Increasing heterogeneity in the memory system mandates careful data placement to hide the non-uniform memory access (NUMA) effects on applications. However, NUMA optimizations have predominantly focused on application data in the past decades, largely ignoring the placement of kernel data structures due to their small memory footprint; this is evident in typical OS designs that pin kernel objects in memory. In this paper, we show that careful placement of kernel data structures is gaining importance in the context of page-tables: sub-optimal placement of page-tables causes severe slowdown (up to 3.1×) on virtualized NUMA servers. In response, we present vMitosis, a system for explicit management of two-level page-tables, i.e., the guest and extended page-tables, on virtualized NUMA servers. 1 INTRODUCTION: Applications suffer non-uniform memory access (NUMA) latencies on modern multi-tier memory systems. As computer systems embrace even more heterogeneity in the memory subsystem, with innovations in die-stacked DRAM, high-bandwidth HBM, more socket counts and multi-chip module-based designs, the speed differences between local and remote memory continue to grow and become more complex to reason about [52, 77]. Carefully placing, replicating, and migrating data among memory devices with variable latency and bandwidth is of paramount importance to the success of these technologies, and much work remains to be done on these topics. However, while there is at least prior work on data placement -

The Case for Physical Memory Pools: A Vision Paper

The Case for Physical Memory Pools: A Vision Paper. Heather Craddock, Lakshmi Prasanna Konudula, Kun Cheng, and Gökhan Kul. Division of Physical and Computational Sciences, Delaware State University, 1200 N DuPont Hwy, Dover, DE 19901, USA. {hcraddock,lkonudula,kcheng14,gkul}@desu.edu, http://delawaresec.com. Abstract: The cloud is a rapidly expanding and increasingly prominent component of modern computing. Monolithic servers limit the flexibility of cloud-based systems, however, due to static memory limitations. Developments in OS design, distributed memory systems, and address translation have been crucial in aiding the progress of the cloud. In this paper, we discuss recent developments in virtualization, OS design and distributed memory structures with regards to their current impact and relevance to future work on eliminating memory limits in cloud computing. We argue that creating physical memory pools is essential for cheaper and more efficient cloud computing infrastructures, and we identify research challenges to implement these structures. Keywords: Cloud computing · Memory · Operating systems · Virtualization. 1 Introduction: The growth of cloud computing is fuelled by the rise of big data and the global requirements for the inter-connectivity of computing resources. The cloud allows for data and resources to be shared on demand to connected hardware devices, reducing the need for reliance on purely local resources. According to the Data Never Sleeps report, 1.7 MB of data is expected to be created every second for every single person on earth by 2020 [6]. With such a vast amount of data being generated, it is imperative that hardware and software architectures are modified or created to manage modern requirements. -

QUESTION BANK Subject Name: INFORMATION MANAGEMENT Year/Sem: IV/VII

V.S.B ENGINEERING COLLEGE, KARUR – 639 111. DEPARTMENT OF INFORMATION TECHNOLOGY. CLASS / SEMESTER: IV YEAR/VII SEMESTER. QUESTION BANK. Subject Name: INFORMATION MANAGEMENT. Year/Sem: IV/VII. UNIT I, PART-A. 1. What is a data model? List the types of data model used. A database model is the theoretical foundation of a database and fundamentally determines in which manner data can be stored, organized, and manipulated in a database system. It thereby defines the infrastructure offered by a particular database system. The most popular example of a database model is the relational model. Types of data model used: hierarchical model, network model, relational model, entity-relationship, object-relational model, object model. 2. Give the levels of data abstraction. Physical level, logical level, view level. 3. Distinguish between Hadoop 1.x and Hadoop 2.x. In Hadoop 1.x, MapReduce is responsible for both processing and cluster management, whereas in Hadoop 2.x processing is taken care of by other processing models and YARN is responsible for cluster management. Hadoop 2.x scales better than Hadoop 1.x, with close to 10000 nodes per cluster. Hadoop 1.x has a single point of failure: whenever the NameNode fails, it has to be recovered manually. In Hadoop 2.x, the Standby NameNode overcomes this problem, and whenever the NameNode fails it is configured for automatic recovery. 4. Define data model. A data model is a collection of conceptual tools for describing data, data relationships, data semantics and consistency constraints. 5.