Operating Systems and Middleware: Supporting Controlled Interaction

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Lecture 1: Introduction to UNIX

The Operating System Course Overview Getting Started Lecture 1: Introduction to UNIX CS2042 - UNIX Tools September 29, 2008 Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages Lecture Outline 1 The Operating System Description and History UNIX Flavors Advantages and Disadvantages 2 Course Overview Class Specifics 3 Getting Started Login Information Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages What is UNIX? One of the first widely-used operating systems Basis for many modern OSes Helped set the standard for multi-tasking, multi-user systems Strictly a teaching tool (in its original form) Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages A Brief History of UNIX Origins The first version of UNIX was created in 1969 by a group of guys working for AT&T's Bell Labs. It was one of the first big projects written in the emerging C language. It gained popularity throughout the '70s and '80s, although non-AT&T versions eventually took the lion's share of the market. Predates Microsoft's DOS by 12 years! Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages Lecture Outline 1 The Operating System Description and History UNIX Flavors Advantages and Disadvantages 2 Course Overview Class Specifics 3 -

The Strange Birth and Long Life of Unix - IEEE Spectrum Page 1 of 6

The Strange Birth and Long Life of Unix - IEEE Spectrum Page 1 of 6 COMPUTING / SOFTWARE FEATURE The Strange Birth and Long Life of Unix The classic operating system turns 40, and its progeny abound By WARREN TOOMEY / DECEMBER 2011 They say that when one door closes on you, another opens. People generally offer this bit of wisdom just to lend some solace after a misfortune. But sometimes it's actually true. It certainly was for Ken Thompson and the late Dennis Ritchie, two of the greats of 20th-century information technology, when they created the Unix operating system, now considered one of the most inspiring and influential pieces of software ever written. A door had slammed shut for Thompson and Ritchie in March of 1969, when their employer, the American Telephone & Telegraph Co., withdrew from a collaborative project with the Photo: Alcatel-Lucent Massachusetts Institute of KEY FIGURES: Ken Thompson [seated] types as Dennis Ritchie looks on in 1972, shortly Technology and General Electric after they and their Bell Labs colleagues invented Unix. to create an interactive time- sharing system called Multics, which stood for "Multiplexed Information and Computing Service." Time-sharing, a technique that lets multiple people use a single computer simultaneously, had been invented only a decade earlier. Multics was to combine time-sharing with other technological advances of the era, allowing users to phone a computer from remote terminals and then read e -mail, edit documents, run calculations, and so forth. It was to be a great leap forward from the way computers were mostly being used, with people tediously preparing and submitting batch jobs on punch cards to be run one by one. -

The Strange Birth and Long Life of Unix - IEEE Spectrum

The Strange Birth and Long Life of Unix - IEEE Spectrum http://spectrum.ieee.org/computing/software/the-strange-birth-and-long-li... COMPUTING / SOFTWARE FEATURE The Strange Birth and Long Life of Unix The classic operating system turns 40, and its progeny abound By WARREN TOOMEY / DECEMBER 2011 They say that when one door closes on you, another opens. People generally offer this bit of wisdom just to lend some solace after a misfortune. But sometimes it's actually true. It certainly was for Ken Thompson and the late Dennis Ritchie, two of the greats of 20th-century information technology, when they created the Unix operating system, now considered one of the most inspiring and influential pieces of software ever written. A door had slammed shut for Thompson and Ritchie in March of 1969, when their employer, the American Telephone & Telegraph Co., withdrew from a collaborative project with the Photo: Alcatel-Lucent Massachusetts Institute of KEY FIGURES: Ken Thompson [seated] types as Dennis Ritchie looks on in 1972, shortly Technology and General Electric after they and their Bell Labs colleagues invented Unix. to create an interactive time-sharing system called Multics, which stood for "Multiplexed Information and Computing Service." Time-sharing, a technique that lets multiple people use a single computer simultaneously, had been invented only a decade earlier. Multics was to combine time-sharing with other technological advances of the era, allowing users to phone a computer from remote terminals and then read e-mail, edit documents, run calculations, and so forth. It was to be a great leap forward from the way computers were mostly being used, with people tediously preparing and submitting batch jobs on punch cards to be run one by one. -

Introduction to UNIX What Is UNIX? Why UNIX? Brief History of UNIX Early UNIX History UNIX Variants

What is UNIX? A modern computer operating system Introduction to UNIX Operating system: “a program that acts as an intermediary between a user of the computer and the computer hardware” CS 2204 Software that manages your computer’s resources (files, programs, disks, network, …) Class meeting 1 e.g. Windows, MacOS Modern: features for stability, flexibility, multiple users and programs, configurability, etc. *Notes by Doug Bowman and other members of the CS faculty at Virginia Tech. Copyright 2001-2003. (C) Doug Bowman, Virginia Tech, 2001- 2 Why UNIX? Brief history of UNIX Used in many scientific and industrial settings Ken Thompson & Dennis Richie Huge number of free and well-written originally developed the earliest software programs versions of UNIX at Bell Labs for Open-source OS internal use in 1970s Internet servers and services run on UNIX Borrowed best ideas from other Oss Largely hardware-independent Meant for programmers and computer Based on standards experts Meant to run on “mini computers” (C) Doug Bowman, Virginia Tech, 2001- 3 (C) Doug Bowman, Virginia Tech, 2001- 4 Early UNIX History UNIX variants Thompson also rewrote the operating system Two main threads of development: in high level language of his own design Berkeley software distribution (BSD) which he called B. Unix System Laboratories System V Sun: SunOS, Solaris The B language lacked many features and Ritchie decided to design a successor to B GNU: Linux (many flavors) which he called C. SGI: Irix They then rewrote UNIX in the C FreeBSD programming language to aid in portability. Hewlett-Packard: HP-UX Apple: OS X (Darwin) … (C) Doug Bowman, Virginia Tech, 2001- 5 (C) Doug Bowman, Virginia Tech, 2001- 6 1 Layers in the UNIX System UNIX Structure User Interface The kernel is the core of the UNIX Library Interface Users system, controlling the system Standard Utility Programs hardware and performing various low- (shell, editors, compilers, etc.) System Interface calls User Mode level functions. -

A Brief History of Unix

A Brief History of Unix Tom Ryder [email protected] https://sanctum.geek.nz/ I Love Unix ∴ I Love Linux ● When I started using Linux, I was impressed because of the ethics behind it. ● I loved the idea that an operating system could be both free to customise, and free of charge. – Being a cash-strapped student helped a lot, too. ● As my experience grew, I came to appreciate the design behind it. ● And the design is UNIX. ● Linux isn’t a perfect Unix, but it has all the really important bits. What do we actually mean? ● We’re referring to the Unix family of operating systems. – Unix from Bell Labs (Research Unix) – GNU/Linux – Berkeley Software Distribution (BSD) Unix – Mac OS X – Minix (Intel loves it) – ...and many more Warning signs: 1/2 If your operating system shows many of the following symptoms, it may be a Unix: – Multi-user, multi-tasking – Hierarchical filesystem, with a single root – Devices represented as files – Streams of text everywhere as a user interface – “Formatless” files ● Data is just data: streams of bytes saved in sequence ● There isn’t a “text file” attribute, for example Warning signs: 2/2 – Bourne-style shell with a “pipe”: ● $ program1 | program2 – “Shebangs” specifying file interpreters: ● #!/bin/sh – C programming language baked in everywhere – Classic programs: sh(1), awk(1), grep(1), sed(1) – Users with beards, long hair, glasses, and very strong opinions... Nobody saw it coming! “The number of Unix installations has grown to 10, with more expected.” — Ken Thompson and Dennis Ritchie (1972) ● Unix in some flavour is in servers, desktops, embedded software (including Intel’s management engine), mobile phones, network equipment, single-board computers.. -

History of Unix.Pdf

History of Unix In order to define UNIX, it helps to look at its history. In 1969, Ken Thompson, Dennis Ritchie and others started work on what was to become UNIX on a "little-used PDP-7 in a corner" at AT&T Bell Labs. For ten years, the development of UNIX proceeded at AT&T in numbered versions. V4 (1974) was re-written in C -- a major milestone for the operating system's portability among different systems. V6 (1975) was the first to become available outside Bell Labs -- it became the basis of the first version of UNIX developed at the University of California Berkeley. Bell Labs continued work on UNIX into the 1980s, culminating in the release of System V (as in "five," not the letter) in 1983 and System V, Release 4 (abbreviated SVR4) in 1989. Meanwhile, programmers at the University of California hacked mightily on the source code AT&T had released, leading to many a master thesis. The Berkeley Standard Distribution (BSD) became a second major variant of "UNIX." It was widely deployed in both university and corporate computing environments starting with the release of BSD 4.2 in 1984. Some of its features were incorporated into SVR4. As the 1990s opened, AT&T's source code licensing had created a flourishing market for hundreds of UNIX variants by different manufacturers. AT&T sold its UNIX business to Novell in 1993, and Novell sold it to the Santa Cruz Operation two years later. In the meantime, the UNIX trademark had been passed to the X/Open consortium, which eventually merged to form The Open Group.1 While the stewardship of UNIX was passing from entity to entity, several long- running development efforts started bearing fruit. -

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM

UNIX History Page 1 Tuesday, December 10, 2002 7:02 PM CHAPTER 1 UNIX Evolution and Standardization This chapter introduces UNIX from a historical perspective, showing how the various UNIX versions have evolved over the years since the very first implementation in 1969 to the present day. The chapter also traces the history of the different attempts at standardization that have produced widely adopted standards such as POSIX and the Single UNIX Specification. The material presented here is not intended to document all of the UNIX variants, but rather describes the early UNIX implementations along with those companies and bodies that have had a major impact on the direction and evolution of UNIX. A Brief Walk through Time There are numerous events in the computer industry that have occurred since UNIX started life as a small project in Bell Labs in 1969. UNIX history has been largely influenced by Bell Labs’ Research Editions of UNIX, AT&T’s System V UNIX, Berkeley’s Software Distribution (BSD), and Sun Microsystems’ SunOS and Solaris operating systems. The following list shows the major events that have happened throughout the history of UNIX. Later sections describe some of these events in more detail. 1 UNIX History Page 2 Tuesday, December 10, 2002 7:02 PM 2 UNIX Filesystems—Evolution, Design, and Implementation 1969. Development on UNIX starts in AT&T’s Bell Labs. 1971. 1st Edition UNIX is released. 1973. 4th Edition UNIX is released. This is the first version of UNIX that had the kernel written in C. 1974. Ken Thompson and Dennis Ritchie publish their classic paper, “The UNIX Timesharing System” [RITC74]. -

The History of Unix in the History of Software Haigh Thomas

The History of Unix in the History of Software Haigh Thomas To cite this version: Haigh Thomas. The History of Unix in the History of Software. Cahiers d’histoire du Cnam, Cnam, 2017, La recherche sur les systèmes : des pivots dans l’histoire de l’informatique – II/II, 7-8 (7-8), pp77-90. hal-03027081 HAL Id: hal-03027081 https://hal.archives-ouvertes.fr/hal-03027081 Submitted on 9 Dec 2020 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. 77 The History of Unix in the History of Software Thomas Haigh University of Wisconsin - Milwaukee & Siegen University. You might wonder what I am doing The “software crisis” and here, at an event on this history of Unix. the 1968 NATO Conference As I have not researched or written about on Software Engineering the history of Unix I had the same question myself. But I have looked at many other The topic of the “software crisis” things around the history of software and has been written about a lot by profes- this morning will be talking about how sional historians, more than anything some of those topics, including the 1968 else in the entire history of software2. -

Chapter 4 Introduction to UNIX Systems Programming

Chapter 4 Introduction to UNIX Systems Programming 4.1 Introduction Last chapter covered how to use UNIX from from a shell program using UNIX commands. These commands are programs that are written in C that interact with the UNIX environment using functions called Systems Calls. This chapter covers this Systems Calls and how to use them inside a program. 4.2 What is an Operating System An Operating System is a program that sits between the hardware and the application programs. Like any other program it has a main() function and it is built like any other program with a compiler and a linker. However it is built with some special parameters so the starting address is the boot address where the CPU will jump to start the operating system when the system boots. Draft An operating system typically offers the following functionality: ● Multitasking The Operating System will allow multiple programs to run simultaneously in the same computer. The Operating System will schedule the programs in the multiple processors of the computer even when the number of running programs exceeds the number of processors or cores. ● Multiuser The Operating System will allow multiple users to use simultaneously in the same computer. ● File system © 2014 Gustavo Rodriguez-Rivera and Justin Ennen,Introduction to Systems Programming: a Hands-on Approach (V2014-10-27) (systemsprogrammingbook.com) It allows to store files in disk or other media. ● Networking It gives access to the local network and internet ● Window System It provides a Graphical User Interface ● Standard Programs It also includes programs such as file utilities, task manager, editors, compilers, web browser, etc. -

CBCS) for M.Sc

Scheme of teaching and examination under semester pattern Choice Based Credit System (CBCS) for M.Sc. Information Technology Semester I for M.Sc. Information Technology Code Teaching Examination Scheme scheme (Hours / Week) Max. Marks Minimum Passing Marks Theory / Practical Theory Th Pract Total Credits in hrs. Duration External Marks Internal Ass Marks Total Th Pract Core 1 Paper 1 4 - 4 4 3 80 20 100 40 Computer Architecture and Organization Core 2 Paper 2 4 - 4 4 3 80 20 100 40 Internet Computing With ASP.NET Core 3 Paper 3 4 - 4 4 3 80 20 100 40 Distributed Operating System Core 4 Paper 4 4 - 4 4 3 80 20 100 40 Advanced DBMS and Administration Pract. Practical 1 - 8 8 4 3-8* 100 - 100 40 Core 1 & based on theory ** 2 paper-1 and 2 Pract. Practical 2 - 8 8 4 3-8* 100 - 100 40 Core 3 & based on theory ** 4 paper-3 and 4 Seminar 1 Seminar 1 2 - 2 1 25 25 10 TOTAL 18 16 34 25 520 105 625 170 80 1 Semester II for M.Sc. Information Technology Code Teaching Examination Scheme scheme (Hours / Week) Max. Marks Minimum Passing Marks Theory / Practical Theory Th Pract Total Credits in hrs. Duration External Marks Internal Ass Marks Total Th Pract Core 5 Paper 5 4 - 4 4 3 80 20 100 40 Windows Programming using VC++ Core 6 Paper 6 4 - 4 4 3 80 20 100 40 Theory of Computation and Compiler Construction Core 7 Paper 7 4 - 4 4 3 80 20 100 40 Network Programming Core 8 Paper 8 4 - 4 4 3 80 20 100 40 Open source Web Programming using PHP Pract. -

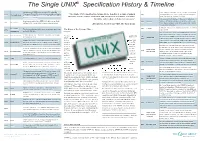

The Single UNIX® Ingle UNIX Specification History & Timeline

The Single UNIX® Specifi cationcation HistoryHistory && TTimelineimeline The history of UNIX starts back in 1969, when Ken UNIX System Laboratories (USL) becomes a company Thompson, Dennis Ritchie and others started working on 1969 The Beginning “The Single UNIX Specifi cation brings all the benefi ts of a single standard 1991 - majority-owned by AT&T. Linus Torvalds commences the “little-used PDP-7 in a corner” at Bell Labs and what operating system, namely application and information portability, scalability, Linux development. Solaris 1.0 debuts. was to become UNIX. fl exibility and freedom of choice for customers” USL releases UNIX System V Release 4.2 (Destiny). It had an assembler for a PDP-11/20, fi le system, fork(), October - XPG4 Brand launched by X/Open. December 1992 SVR4.2 1971 First Edition roff and ed. It was used for text processing of patent Allen Brown, President and CEO, The Open Group 22nd - Novell announces intent to acquire USL. Solaris documents. 2.0 and HP-UX 9.0 ship. 4.4BSD the fi nal release from Berkeley. June 16 - Novell First UNIX The fi rst installations had 3 users, no memory protection, 1993 4.4BSD 1972 The Story of the License Plate... acquires USL Installations and a 500 KB disk. Novell decides to get out of the UNIX business. Rather It was rewritten in C. This made it portable and changed than sell the business as a single entity, Novell transfers 1973 Fourth Edition In 1983 Digital Equipment Corporation the middle of it, Late the rights to the UNIX trademark and the specifi cation the history of OS’s. -

A Brief History of Unix This Article Will Give People with No Previous UNIX Experience Some Sense of What UNIX Is

A Brief History of Unix This article will give people with no previous UNIX experience some sense of what UNIX is. This article will cover the history of UNIX and an introduction to UNIX. HISTORY OF UNIX AND CAUSES FOR ITS POPULARITY Most discussions of UNIX begin with the history of UNIX without explaining why the history of UNIX is important to understanding UNIX. The remainder of this document will describe some strengths and weaknesses of UNIX and attempt to explain why UNIX is becoming popular. All of UNIX's strengths and weaknesses can be directly related to the history of its development, hence a discussion of history is very useful. UNIX was originally developed at Bell Laboratories as a private research project by a small group of people starting in 1969. This group had experience with a number of different operating systems research efforts in the 1970's. The goals of the group were to design an operating system to satisfy the following objectives: • Simple and elegant • Written in a high level language rather than assembly language • Allow re-use of code Typical vendor operating systems of the time were extremely large and all written in assembly language. UNIX had a relatively small amount of code written in assembly language (this is called the kernel) and the remaining code for the operating system was written in a high level language called C. The group worked primarily in the high level language in developing the operating system. As this development continued, small changes were necessary in the kernel and the language to allow the operating system to be completed.