Smart Home Personal Assistants: a Security and Privacy Review

Recommended publications

Listen Only When Spoken To: Interpersonal Communication Cues As Smart Speaker Privacy Controls

Proceedings on Privacy Enhancing Technologies; 2020 (2):251–270

Abraham Mhaidli*, Manikandan Kandadai Venkatesh, Yixin Zou, and Florian Schaub

Abstract: Internet of Things and smart home technologies pose challenges for providing effective privacy controls to users, as smart devices lack both traditional screens and input interfaces. We investigate the potential for leveraging interpersonal communication cues as privacy controls in the IoT context, in particular for smart speakers. We propose privacy controls based on two kinds of interpersonal communication cues – gaze direction and voice volume level – that only selectively activate a smart speaker’s microphone or voice recognition when the device is being addressed, in order to avoid constant listening and speech recognition by the smart speaker microphones and reduce false device activation. We implement these privacy controls in a smart speaker prototype and assess their feasibility, usability [...]

1 Introduction

Internet of Things (IoT) and smart home devices have gained considerable traction in the consumer market [4]. Technologies such as smart door locks, smart thermostats, and smart bulbs offer convenience and utility to users [4]. IoT devices often incorporate numerous sensors from microphones to cameras. Though these sensors are essential for the functionality of these devices, they may cause privacy concerns over what data such devices and their sensors collect, how the data is processed, and for what purposes the data is used [35, 41, 46, 47, 70]. Additionally, these sensors are often in the background or hidden from sight, continuously collecting information.

Personification and Ontological Categorization of Smart Speaker-Based Voice Assistants by Older Adults

“Phantom Friend” or “Just a Box with Information”: Personification and Ontological Categorization of Smart Speaker-based Voice Assistants by Older Adults

Alisha Pradhan, University of Maryland, College Park, USA; Leah Findlater, University of Washington, USA; Amanda Lazar, University of Maryland, College Park, USA

As voice-based conversational agents such as Amazon Alexa and Google Assistant move into our homes, researchers have studied the corresponding privacy implications, embeddedness in these complex social environments, and use by specific user groups. Yet it is unknown how users categorize these devices: are they thought of as just another object, like a toaster? As a social companion? Though past work hints to human-like attributes that are ported onto these devices, the anthropomorphization of voice assistants has not been studied in depth. Through a study deploying Amazon Echo Dot Devices in the homes of older adults, we provide a preliminary assessment of how individuals 1) perceive having social interactions with the voice agent, and 2) ontologically categorize the voice assistants. Our discussion contributes to an understanding of how well-developed theories of anthropomorphism apply to voice assistants, such as how the socioemotional context of the user (e.g., loneliness) drives increased anthropomorphism. We conclude with recommendations for designing voice assistants with the ontological category in mind, as well as implications for the design of technologies for social companionship for older adults.

CCS Concepts: • Human-centered computing → Ubiquitous and mobile devices; • Human-centered computing → Personal digital assistants

Keywords: Personification; anthropomorphism; ontology; voice assistants; smart speakers; older adults.

ACM Reference format: Alisha Pradhan, Leah Findlater and Amanda Lazar.

Smart Speakers: Growth at a Discount

Technology, Media, and Telecommunications Predictions 2019

Deloitte’s Technology, Media, and Telecommunications (TMT) group brings together one of the world’s largest pools of industry experts, respected for helping companies of all shapes and sizes thrive in a digital world. Deloitte’s TMT specialists can help companies take advantage of the ever-changing industry through a broad array of services designed to meet companies wherever they are, across the value chain and around the globe. Contact the authors for more information or read more on Deloitte.com.

Contents: Foreword; Smart speakers: Growth at a discount

Foreword

Dear reader,

Welcome to Deloitte Global’s Technology, Media, and Telecommunications Predictions for 2019. The theme this year is one of continuity, as evolution rather than stasis. Predictions has been published since 2001. Back in 2009 and 2010, we wrote about the launch of exciting new fourth-generation wireless networks called 4G (aka LTE). A decade later, we’re now making predictions about 5G networks that will be launching this year. Not surprisingly, our forecast for the first year of 5G is that it will look a lot like the first year of 4G in terms of units, revenues, and rollout. But while the forecast may look familiar, the high data speeds and low latency 5G provides could spur the evolution of mobility, health care, manufacturing, and nearly every industry that relies on connectivity.

In previous reports, we also wrote about 3D printing (aka additive manufacturing). Our tone was positive but cautious, since 3D printing was growing but also a bit overhyped.

Your Voice Assistant Is Mine: How to Abuse Speakers to Steal Information and Control Your Phone

Wenrui Diao, Xiangyu Liu, Zhe Zhou, and Kehuan Zhang
Department of Information Engineering, The Chinese University of Hong Kong
{dw013, lx012, zz113, khzhang}@ie.cuhk.edu.hk

ABSTRACT

Previous research about sensor based attacks on Android platform focused mainly on accessing or controlling over sensitive components, such as camera, microphone and GPS. These approaches obtain data from sensors directly and need corresponding sensor invoking permissions.

This paper presents a novel approach (GVS-Attack) to launch permission bypassing attacks from a zero-permission Android application (VoicEmployer) through the phone speaker. The idea of GVS-Attack is to utilize an Android system built-in voice assistant module – Google Voice Search. With Android Intent mechanism, VoicEmployer can bring Google Voice Search to foreground, and then plays prepared audio files (like “call number 1234 5678”) in the background. Google Voice Search can recognize this voice command and perform corresponding operations. With ingenious design, our GVS-Attack can forge SMS/Email, access privacy information, transmit sensitive data and achieve remote control [...]

General Terms: Security

Keywords: Android Security; Speaker; Voice Assistant; Permission Bypassing; Zero Permission Attack

1. INTRODUCTION

In recent years, smartphones are becoming more and more popular, among which Android OS pushed past 80% market share [32]. One attraction of smartphones is that users can install applications (apps for short) as their wishes conveniently. But this convenience also brings serious problems of malicious application, which have been noticed by both academic and industry fields. According to Kaspersky’s annual security report [34], Android platform attracted a whopping 98.05% of known malware in 2013.

Smart Speakers & Their Impact on Music Consumption

Everybody’s Talkin’: Smart Speakers & their impact on music consumption

A special report by Music Ally for the BPI and the Entertainment Retailers Association

Contents: Forewords; Executive Summary; Devices Guide; Market Data; The Impact on Music; What Comes Next?

Forewords

Geoff Taylor, chief executive of the BPI, and Kim Bayley, chief executive of ERA, on the potential of smart speakers for artists and the music industry.

Kim Bayley, CEO, Entertainment Retailers Association

Music began with the human voice. It is the instrument which virtually all are born with. So how appropriate that the voice is fast emerging as the future of entertainment technology.

The iTunes Store decoupled music buying from the disc; Spotify decoupled music access from ownership: now voice control frees music from the keyboard. In the process it promises music fans a more fluid and personal relationship with the music they love. It also offers a real solution to optimising streaming for the automobile.

Naturally there are challenges too. The music industry has struggled to deliver the metadata required in a digital music environment.

Geoff Taylor, CEO, BPI and BRIT Awards

Smart speakers are poised to kickstart the next stage of the music streaming revolution. With fans consuming more than 100 billion streams of music in 2017 (audio and video), streaming has overtaken CD to become the dominant format in the music mix.

Smart speakers will undoubtedly give streaming a further boost, attracting more casual listeners into subscription music services, as music is the killer app for these devices.

Playlists curated by streaming services are already an essential marketing channel for music, and their influence will only increase as [...]

Microsoft Surface Duo Teardown Guide ID: 136576 - Draft: 2021-04-30

Microsoft Surface Duo Teardown

An exploratory teardown of the Microsoft Surface Duo, a brand-new take on foldables with a surprisingly simple hinge but precious few concessions to repair.

Written By: Taylor Dixon

This document was generated on 2021-05-02 03:27:09 PM (MST). © iFixit, CC BY-NC-SA, www.iFixit.com

INTRODUCTION

Microsoft has reportedly been working on the Surface Duo for six years. We can probably tear it down in less time than that, but with any brand-new form factor, there are no guarantees. Here’s hoping the Duo boasts the repairability of recent Microsoft sequels like the Surface Laptop 3 or the Surface Pro X; otherwise, we could be in for a long haul. Let’s get this teardown started!

For more teardowns, we’ve got a trio of social media options for you: for quick text we’ve got Twitter, for sweet pics there’s Instagram, and for the phablet of the media world there’s Facebook. If you’d rather get the full scoop on what we’re up to, sign up for our newsletter!

TOOLS: T2 Torx Screwdriver (1); T3 Torx Screwdriver (1); T5 Torx Screwdriver (1); Tri-point Y000 Screwdriver (1); Spudger (1); Tweezers (1); Heat Gun (1); iFixit Opening Picks set of 6 (1); Plastic Cards (1)

Step 1: Microsoft Surface Duo Teardown

The long-awaited Surface Duo is here! For $1,400 you get two impossibly thin slices of hardware that you can fold up and put in your pocket.

Smartphone User Guide / Guía del Usuario (GH68-43542A)

SMARTPHONE User Manual

Please read this manual before operating your device and keep it for future reference.

Legal Notices

Warning: This product contains chemicals known to the State of California to cause cancer and reproductive toxicity. For more information, please call 1-800-SAMSUNG (726-7864).

Intellectual Property

All Intellectual Property, as defined below, owned by or which is otherwise the property of Samsung or its respective suppliers relating to the SAMSUNG Phone, including but not limited to, accessories, parts, or software relating there to (the “Phone System”), is proprietary to Samsung and protected under federal laws, state laws, and international treaty provisions. Intellectual Property includes, but is not limited to, inventions (patentable or unpatentable), patents, trade secrets, copyrights, software, computer programs, and related documentation and other works of authorship.

[...] create source code from the software. No title to or ownership in the Intellectual Property is transferred to you. All applicable rights of the Intellectual Property shall remain with SAMSUNG and its suppliers.

Open Source Software

Some software components of this product incorporate source code covered under GNU General Public License (GPL), GNU Lesser General Public License (LGPL), OpenSSL License, BSD License and other open source licenses. To obtain the source code covered under the open source licenses, please visit: http://opensource.samsung.com.

Disclaimer of Warranties; [...]

Understanding and Mitigating Security Risks of Voice-Controlled Third-Party Functions on Virtual Personal Assistant Systems

Dangerous Skills: Understanding and Mitigating Security Risks of Voice-Controlled Third-Party Functions on Virtual Personal Assistant Systems

Nan Zhang, Xianghang Mi, Xuan Feng, XiaoFeng Wang, and Feng Qian (Indiana University, Bloomington); Xuan Feng (also Beijing Key Laboratory of IOT Information Security Technology, Institute of Information Engineering, CAS, China); Yuan Tian (University of Virginia)

Abstract: Virtual personal assistants (VPA) (e.g., Amazon Alexa and Google Assistant) today mostly rely on the voice channel to communicate with their users, which however is known to be vulnerable, lacking proper authentication (from the user to the VPA). A new authentication challenge, from the VPA service to the user, has emerged with the rapid growth of the VPA ecosystem, which allows a third party to publish a function (called skill) for the service and therefore can be exploited to spread malicious skills to a large audience during their interactions with smart speakers like Amazon Echo and Google Home. In this paper, we report a study that concludes such remote, large- [...]

[...] skills by Amazon and actions by Google) to offer further helps to the end users, for example, order food, manage bank accounts and text friends. In the past year, these ecosystems are expanding at a breathtaking pace: Amazon claims that already 25,000 skills have been uploaded to its skill market to support its VPA (including the Alexa service running through Amazon Echo) [1] and Google also has more than one thousand actions available on its market for its Google Home system (powered by Google Assistant).

SAMSUNG GALAXY S6 USER GUIDE Table of Contents

SAMSUNG GALAXY S6 USER GUIDE

Table of Contents

Basics: Read me first; Package contents; Device layout; SIM or USIM card; Battery; Turning the device on and off; Touchscreen; Home screen; Lock screen; Notification panel; Entering text; Screen capture; Opening apps; Multi window; Device and data management; Connecting to a TV; Sharing files with contacts; Emergency mode

Applications: Installing or uninstalling apps; Phone; Contacts; Messages; Internet; Email; Camera; Gallery; Smart Manager; S Planner; S Health; S Voice; Music; Video; Voice Recorder; My Files; Memo; Clock; Calculator; Google apps

Settings: Introduction; Wi-Fi; Bluetooth; Flight mode; Mobile hotspot and tethering; Data usage; Mobile networks; NFC and payment; More connection settings; Sounds and notifications; Display; Motions and gestures; Applications; Wallpaper; Themes; Lock screen and security; Privacy and safety; Easy mode; Accessibility; Accounts; Backup and reset; Language and input; Battery; Storage; Date and time; User manual; About device

Appendix: Accessibility; Troubleshooting

Basics: Read me first

Please read this manual before using the device to ensure safe and proper use.

• Descriptions are based on the device’s default settings.
• Some content may differ from your device depending on the region, service provider, model specifications, or device’s software.

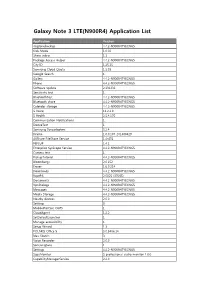

Galaxy Note 3 LTE(N900R4) Application List

| Application | Version |
| --- | --- |
| ringtonebackup | 4.4.2-N900R4TYECNG5 |
| Kids Mode | 1.0.02 |
| Share video | 1.1 |
| Package Access Helper | 4.4.2-N900R4TYECNG5 |
| City ID | 1.25.15 |
| Samsung Cloud Quota | 1.5.03 |
| Google Search | 1 |
| Gallery | 4.4.2-N900R4TYECNG5 |
| Phone | 4.4.2-N900R4TYECNG5 |
| Software update | 2.131231 |
| Sensitivity test | 1 |
| BluetoothTest | 4.4.2-N900R4TYECNG5 |
| Bluetooth share | 4.4.2-N900R4TYECNG5 |
| Calendar storage | 4.4.2-N900R4TYECNG5 |
| S Voice | 11.2.2.0 |
| S Health | 2.5.4.170 |
| Communication Notifications | 1 |
| DeviceTest | 1 |
| Samsung Syncadapters | 5.2.4 |
| Drama | 1.0.0.107_201400429 |
| AllShare FileShare Service | 1.4r476 |
| PEN.UP | 1.4.1 |
| Enterprise SysScope Service | 4.4.2-N900R4TYECNG5 |
| Camera test | 1 |
| PickupTutorial | 4.4.2-N900R4TYECNG5 |
| Bloomberg+ | 2.0.152 |
| Eraser | 1.6.0.214 |
| Downloads | 4.4.2-N900R4TYECNG5 |
| RootPA | 2.0025 (37085) |
| Documents | 4.4.2-N900R4TYECNG5 |
| VpnDialogs | 4.4.2-N900R4TYECNG5 |
| Messages | 4.4.2-N900R4TYECNG5 |
| Media Storage | 4.4.2-N900R4TYECNG5 |
| Nearby devices | 2.0.0 |
| Settings | 3 |
| MobilePrintSvc_CUPS | 1 |
| CloudAgent | 1.2.2 |
| SetDefaultLauncher | 1 |
| Manage accessibility | 1 |
| Setup Wizard | 1.3 |
| POLARIS Office 5 | 5.0.3406.14 |
| Idea Sketch | 3 |
| Voice Recorder | 2.0.0 |
| SamsungSans | 1 |
| Settings | 4.4.2-N900R4TYECNG5 |
| SapaMonitor S professional audio monitor | 1.0.0 |
| CapabilityManagerService | 2.4.0 |
| S Note | 3.1.0 |
| Samsung Link | 1.8.1904 |
| Samsung WatchON Video | 14062601.1.21.78 |
| Street View | 1.8.1.2 |
| Alarm | 1 |
| PageBuddyNotiSvc | 1 |
| Favorite Contacts | 4.4.2-N900R4TYECNG5 |
| Google Search | 3.4.16.1149292.arm |
| KNOX | 2.0.0 |
| Exchange services | 4.2 |
| GestureService | 1 |
| Weather | 140211.01 |
| Samsung Print Service Plugin | 1.4.140410 |
| Tasks provider | 4.4.2-N900R4TYECNG5 |

Samsung Galaxy A8

SM-A530F, SM-A530F/DS, SM-A730F, SM-A730F/DS

User Manual

English (LTN). 12/2017. Rev.1.0
www.samsung.com

Table of Contents

Basics: Read me first; Device overheating situations and solutions; Device layout and functions; Battery; SIM or USIM card (nano-SIM card); Memory card (microSD card); Turning the device on and off; Initial setup; Samsung account; Transferring data from your previous device; Understanding the screen; Notification panel; Entering text

Apps and features: Installing or uninstalling apps; Bixby; Phone; Contacts; Messages; Internet; Email; Camera; Gallery; Always On Display; Multi window; Samsung Pay; Samsung Members; Samsung Notes; Calendar; Samsung Health; S Voice; Voice Recorder; My Files; Clock; Calculator; Radio; Game Launcher; Dual Messenger; Samsung Connect; Sharing content; Google apps

Settings: Introduction; Connections; Wi-Fi; Bluetooth; Data saver; NFC and payment; Mobile Hotspot and Tethering; SIM card manager (dual SIM models); More connection settings; Sounds and vibration; Notifications; Display; Blue light filter; Changing the screen mode or adjusting the display color; Screensaver; Wallpapers and themes; Advanced features; Device maintenance; Apps; Lock screen and security; Face recognition; Fingerprint recognition; Smart Lock; Samsung Pass; Secure Folder; Cloud and accounts; Backup and restore; Google; Accessibility; General management; Software update; User manual; About phone

Appendix: Troubleshooting

Basics: Read me first

Please read this manual before using the device to ensure safe and proper use.

No Recharging Or Power Stealing. It's the Smart Thermostat Pros Trust

No recharging or power stealing. It’s the smart thermostat Pros trust.

5-YEAR PRO INSTALL WARRANTY: Get our 3-year standard warranty plus 2 years for pro purchase and install.

Here’s why pros love us.

RELIABLY POWERED: No common wire? No problem. Your ecobee comes with a Power Extender Kit so it doesn’t rely on other equipment to charge itself.

COMPATIBLE WITH MOST: ecobee3 lite works with most HVAC systems including radiant heating systems, multistage, and dual fuel heat pumps. ecobee.com/compatibility

EASY INSTALLATION: Installation takes 30 minutes or less in most cases. We also offer easy access to HVAC tech support, wiring diagrams, and manuals if you ever need assistance.

SUPPORT HOURS: Available 8am–10pm (Mon–Fri) and 9am–9pm (Sat–Sun). 1.866.518.6740

Here’s why consumers love us.

CONTROL FROM ANYWHERE, ANYTIME: Customers can easily control temperature and settings from anywhere with an Apple Watch, Android, or iOS device.

AVERAGE 23% SAVINGS*: When customers bring home the ecobee3 lite, they save an average of 23% annually on heating and cooling costs. *Learn more at ecobee.com/savings

UPDATES YOU AS NEEDED: ecobee3 lite monitors heating and cooling systems, and alerts customers if it senses something is wrong.

Apple, iPhone, iPad, and iPod touch are trademarks of Apple Inc., registered in the U.S. and other countries. HomeKit is a trademark of Apple Inc. Use of the HomeKit logo means that an electronic accessory has been designed to connect specifically to iPod, iPhone, or iPad, respectively, and has been certified by the developer to meet Apple performance standards.