Scalable Trust Establishment with Software Reputation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Gnome-Panel Gnome-About 0. Gnome-Panel-Data 0. Gnome-Orca

libnet-daemon-perl libkst2math2 lazarus-ide-gtk2 0. libevent-core-1.4-2 libclass-c3-perl 0. 0. erlang-public-key libphp-swiftmailer 0. python-pygrace 0. 0. 0. libdbi-perl libkst2core2 lazarus-ide 0. 0. 0. sugar-session-0.86 apache2.2-common 0. libslang2-modules 3.33333333333 0. 0. libhugs-base-bundled 0. libevent-dev 2.97619047619 libalgorithm-c3-perl 0. gnuift-perl libgtkhtml-4.0-common erlang-inets 0. 0. typo3-dummy 0. grace 3.22580645161 libmodule-runtime-perl 0. 0. libxau6 cl-alexandria libkst2widgets2 lazarus-src libplrpc-perl 0. 0. libkonq5-templates ttf-unifont 0. 0. 0. 0. 0. 4.16666666667 libts-0.0-0 0. sugar-emulator-0.86 0. 0. libeet-dev 0. apache2.2-bin 0.983534092165 0. jed-common hplip-cups 0.636942675159 cl-trivial-gray-streams libkadm5srv-mit8 dzedit 0.0985761226725 0. python-expeyes libevent-extra-1.4-2 erlang-ssl libmro-compat-perl typo3-src-4.5 0. 0. 0. 1.14942528736 0. 0. hugs python-epsilon acpi-support-base 0.444938820912 0. libgtkhtml-4.0-0 0. 0. 0. 1.88679245283 libclass-load-perl gnuift libecore-input1 0. 5. libxcb1 libx11-6 libx11-data 0. 0. 0.884075588463 0. 0. libkonq-common 0. libdirectfb-1.2-9 libsysfs2 0. 0. 1.66666666667 1.89098998888 0. 0. 0. 2.54777070064 libgs9 libijs-0.35 cl-babel cl-cffi 0. slsh 0. libaprutil1-ldap 0. sugar-artwork-0.86 0.906177478174 0. 4.30107526882 0. libsmokekhtml3 unifont jfbterm 0.444938820912 0. freespacenotifier 0. libecore-dev 0. libeina-dev 5. libdapclient3 0.0219058050383 0. -

FastBuild: Accelerating Docker Image Building for Efficient Development and Deployment of Container

FastBuild: Accelerating Docker Image Building for Efficient Development and Deployment of Container

Zhuo Huang1, Song Wu1∗, Song Jiang2 and Hai Jin1
1National Engineering Research Center for Big Data Technology and System, Services Computing Technology and System Lab, Cluster and Grid Computing Lab, School of Computer Science and Technology, Huazhong University of Science and Technology, Wuhan, 430074, China
2Department of Computer Science and Engineering, University of Texas at Arlington, Texas 76019, U.S
1 fhuangzhuo, wusong, [email protected] [email protected]

Abstract: Docker containers have been increasingly adopted on various computing platforms to provide a lightweight virtualized execution environment. Compared to virtual machines, this technology can often reduce the launch time from a few minutes to less than 10 seconds, assuming the Docker image has been locally available. However, Docker images are highly customizable, and are mostly built at runtime from a remote base image by running instructions in a script (the Dockerfile). During the instruction execution, a large number of input files may have to be retrieved via the Internet. The image building may be an iterative process as one may need to repeatedly modify the Dockerfile until a desired image composition is received.

[...] is especially desired in a DevOps environment where frequent deployments occur. In the meantime, DevOps also requires repeated adjustments and testing of container images. An image is often iteratively re-built before and after a container is launched from it. To support flexible customization of images, Docker adopts a mechanism to build images at the client side. An image can be built by running a Dockerfile, which is a script containing instructions for building an image, from a base image that provides basic and highly customizable [...]

-

Zorp Python-Kzorp 0. Python-Radix 0. Libwind0-Heimdal Libroken18

libhugs-base-bundled liboce-modeling-dev 0. linphone-common libcogl-dev libsyncevolution0 libxmlsec1-gcrypt libisc83 libswitch-perl libesd0-dev 0. python-async libgmp-dev liblua50-dev libbsd-dev 0. 0. 0. command-not-found jing libbind9-80 0. 0. 0. libsquizz gir1.2-cogl-1.0 libblacs-mpi-dev 0. 0. 0.943396226415 0.0305436774588 0.2 hugs 0. 0. 0. 0. 0. 3.05 0. liboce-foundation-dev 0. 0. 0. 0. linphone 0. 0. libclutter-1.0-dev libaudiofile-dev 0. libgdbussyncevo0 0. libxmlsec1-dev 0.9433962264150. 0. 0. 1.5518913676 2.15053763441 0. libsane-hpaio hplip hplip-data libjutils-java libjinput-java libjinput-jni ghc node-fstream libqrupdate1 libgtkhtml-4.0-common 0. 0. 0. 0. 0. 0. mobyle kget 0. 0. libisccc80 libclass-isa-perl 0. perl gnustep-gui-common 0. 0. python-gitdb python-git 0. 0.03054367745880. 0. 0. 0. 0. liblua50 0. liblualib50 0. python-syfi 0. 0. 0. apt-file 0. 0. 4.65 1.5 0. 1.05263157895 gir1.2-clutter-1.0 0. libmumps-dev 4.4776119403 root-plugin-graf2d-asimage libfarstream-0.1-dev mobyle-utils 0. 0. linphone-nogtk libcogl-pango-dev 0. libestools2.1-dev squizz xdotool libsikuli-script-java libsikuli-script-jni gcc-avr libgmpxx4ldbl libffi-dev node-tar node-block-stream syncevolution-libs libxmlsec1-gnutls libhugs-haskell98-bundled liboce-ocaf-lite-dev octave octave-common 0. 0. 0. 0. 1.42857142857 0. python-poker-network 0. 0. eucalyptus-common 0.03054367745880. 0. 0. libpcp-gui2 0. reportbug libdns81 libxau6 0. 0. 0. 0. 1.0752688172 0. -

Introduction: What Is mTCP?

Good news, everyone!
User Documentation
Version: 2020-03-07
M. Brutman ([email protected])
http://www.brutman.com/mTCP/

Table of Contents

Introduction and Setup
Introduction
What is mTCP?
Features
Tested machines/environments
Licensing
Packaging
Binaries
Documentation
Support and contact information

-

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

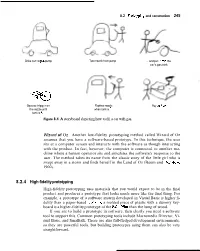

8.2.4 High-Fidelity Prototyping

8.2 Prototyping and construction

Figure 8.4 A storyboard depicting how to fill a car with gas. The panels read: Drive car to gas pump; Take nozzle from pump ... and put it into the car's gas tank; Squeeze trigger on the nozzle until tank is full; Replace nozzle when tank is full; Pay cashier.

Wizard of Oz

Another low-fidelity prototyping method called Wizard of Oz assumes that you have a software-based prototype. In this technique, the user sits at a computer screen and interacts with the software as though interacting with the product. In fact, however, the computer is connected to another machine where a human operator sits and simulates the software's response to the user. The method takes its name from the classic story of the little girl who is swept away in a storm and finds herself in the Land of Oz (Baum and Denslow, 1900).

8.2.4 High-fidelity prototyping

High-fidelity prototyping uses materials that you would expect to be in the final product and produces a prototype that looks much more like the final thing. For example, a prototype of a software system developed in Visual Basic is higher fidelity than a paper-based mockup; a molded piece of plastic with a dummy keyboard is a higher-fidelity prototype of the PalmPilot than the lump of wood.

If you are to build a prototype in software, then clearly you need a software tool to support this. Common prototyping tools include Macromedia Director, Visual Basic, and Smalltalk.

-

Jabber & IRC & SIP: ICQ, Chat, and Skype Replacements for Real Men

Jabber & IRC & SIP: ICQ, chat, and Skype replacements for real men (and women)
Schwabacher Linux Tage 09

Overview: the "ugly side" of proprietary communication; the legal gray area; EULAs; IRC & Jabber: slaying ICQ; SIP telephony: slaying Skype; summary; discussion & questions.

What are we actually talking about? ICQ, MSN, Yahoo! & Co.: "free" chat and short-message services; they support "offline messages"; their infrastructure is in America, so American terms apply! They offer proprietary client software for Windoos and Mac; alternative client software is not permitted.

What are we actually talking about? (2) Skype: a "free" service for Internet telephony; Ebay offers proprietary client software for Windoos and Mac.

The catch: the services are "free", but the real price is stated in the EULA! The EULA is long (is it not meant to be read?) and full of incomprehensible legal wording; for the typical user today it comes down to "click 'Accept'". It is a binding contract!

The catch (2): [...] You agree that [...] you surrender your copyright and any other proprietary right in the posted material or information. You further agree that ICQ LLC. is entitled to use at its own discretion any of the posted material or information in any manner it deems fit, including, but not limited to, publishing the material or distributing it. [...]

Problems users are unaware of: mass collection of personal data; data leaks and data trading; harvesting

-

DMK BO2K8.Pdf

Black Ops 2008: It’s The End Of The Cache As We Know It
Or: “64K Should Be Good Enough For Anyone”
Dan Kaminsky, Director of Penetration Testing, IOActive, Inc.
copyright IOActive, Inc. 2006, all rights reserved.

Introduction
• Hi! I’m Dan Kaminsky
– This is my 9th talk here at Black Hat
– I look for interesting design elements – new ways to manipulate old systems, old ways to manipulate new systems
– Career thus far spent in Fortune 500
• Consulting now
– I found a really bad bug a while ago.
• You might have heard about it.
• There was a rather coordinated patching effort.
• I went out on a very shaky limb, to try to keep the details quiet
– Asked people not to publicly speculate
» Totally unreasonable request
» Had to try.
– Said they’d be congratulated here

Thanks to the community
• First finder: Pieter de Boer
– 51 hours later
• Best Paper
– Bernard Mueller, sec-consult.com
– Five days later, but had full info/repro
• Interesting thinking (got close, kept off lists)
– Andre Ludwig
– Nicholas Weaver
– “Max”/@skst (got really really close)
– Zeev Rabinovich
– Michael Gersten
– Mike Christian
• Left the lists
– Paul Schmehl
– Troy XYZ
– Others
• Thanks
– Jen Grannick (she contacted me)
– DNSStuff (they taught me LDNS, and reimplemented my code better)
– Everyone else (people know who they are, and know I owe them a beer).

Obviously thanks to the Summit Members
• Paul Vixie
• David Dagon
– Georgia Tech – thanks for the net/compute nodes
• Florian Weimer
• Wouter Wijngaards
• Andreas Gustaffon
• Microsoft
• Nominum
• OpenDNS
• ISC
• Neustar
• CERT
• People have really been incredible with this.
• What did we accomplish?

There are numbers and there are numbers
• 120,000,000
– The number of users protected by Nominum’s carrier patching operation
– They’re not the Internet’s most popular server!
• That’s BIND, and we saw LOTS of BIND patching
– They’re not the only server that got lots of updates
• Microsoft’s Automatic Updates swept through lots and lots of users
• Do not underestimate MSDNS behind the firewall.

-

IRC Internet Relay Chat

IRC Internet Relay Chat

Finland, 1988: the idea of IRC drew inspiration from earlier software such as “MUT” and “Talk”. Starting from a Finnish student's idea, this innovation gradually grew into a university IRC network that let students at all of the country's universities communicate through their Unix terminals. When Internet connectivity between countries became available, the Finnish IRC network expanded until it reached some servers in the United States, giving rise shortly afterwards to the first international IRC network, which still exists today: Eris Free Net (EfNet). Before long there was a boom in national and international IRC networks, with a consequent exponential growth in the number of users worldwide, to the point that IRC became the most widespread chat system. It first appeared in Italy around 1995.

IRC was designed as a "raw" chat system, with no control over what users do. This lack of moderation was the main cause of the birth and subsequent growth of the IRCWar phenomenon, which consists of using more or less legitimate techniques, ranging from attacks on a user's machine to disrupting or attacking the chat room itself, with the aim of taking it over.

The main address for connecting to a network is usually irc.xxx.xxx, which points in rotation to all of the main servers in order to balance the load among them, for the sake of connection stability and reliability.

DISCLAIMER: This guide was written without any profit motive. This document was written drawing on ideas and information from various websites.

-

Application Identification and Control

APPLICATION IDENTIFICATION & CONTROL

While traditional firewalls block protocols, and ports, the Network Box Application [...]

Arcisdms Networking 3 1
BITS Download Manager File Transfer 4 1
Citrix IMA Remote Access 5 1
Cyworld Social Networking 3 2
Ares File Transfer 1 2
BitTorrent File Transfer 1 5
Citrix Licensing Remote Access 5 1
Daily Mail Web Services 1 2
Ariel Networking 3 3
bizo.com Web Services 3 1
Citrix Online Collaboration 4 3
Dailymotion Streaming Media 1 4
Army Attack Games 2 5
BJNP Networking 4 1
Citrix RTMP Remote Access 5 2
Datagram Authenticated Session Protocol Networking 3 1
Army Proxy Proxy 1 5
Blaze File Server File Transfer 3 2
Citrix WANScaler Remote Access 5 1
Datalogix Web Services 4 1
arns Networking 3 1
BLIDM Database 3 1
Citrix XenDesktop Remote Access 5 1
Datei.to File Transfer 2 5
ARP Networking 3 1
Blogger Web Services 3 1
CitrixSLG Remote Access 5 1
DATEX-ASN Networking 3 1
$100,000 Pyramid Games 2 5
Adobe Updates Web Services 3 1
asa Networking 3 1
Blokus Games 1 2
CityVille Games 1 1
Daum.net Web Services 1 1
050Plus Messaging 2 2
Adometry Web Services 4 1
Ask.com Web Services 2 1
Bloomberg Web Services 4 1
CL1 Networking 3 1
Daytime Network Monitoring 2 5
12306.cn Web Services 4 1
Adready Web Services 4 1
Astaro SSL VPN Client VPN and Tunneling 3 1
Bluekai Web Services 1 1
Clarizen Collaboration 5 1
dBASE Unix Database 3 1
126.com Mail 4 2
Adrive.com Web Services 3 3
Astraweb File Transfer 3 5
BlueLithium Web Services 3 1
Classmates Social Networking 1 3
DC Storm Web Services 4 1
17173.com Social Networking

-

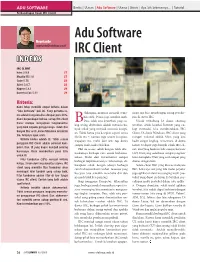

Adu Software (Software Showdown): IRC Clients

ADU SOFTWARE
A Comparison of Six IRC Clients
Noprianto [email protected]

INDEX, IRC CLIENT: kvirc 2.9.9 (p. 27); Mozilla IRC 1.6 (p. 27); Gaim 0.75 (p. 28); Xchat 2.0.7 (p. 28); Kopete 0.8.1 (p. 29); konversation 0.13 (p. 29)

Lately, people have been getting stranger. The world is getting stranger too. And one oddity that turns up fairly often is that a great many people have become lonely. Not only lonely men, as in the Sheila On 7 story, but lonely women too. It can be anyone, from the world's top artists to schoolchildren. This is deeply ironic given that so many ways to communicate have been found [...]

[...] Anyone can set up a chat room on an IRC server. To connect to this chat world, besides a reasonably adequate Internet connection, we need an IRC client. In the Windows world, the (very) well-known IRC client is Mirc, which is now very full-featured. Meanwhile, in the Linux world there are also a great many IRC clients, from text-based to GUI-based, and from the simple to the [...]

Criteria: We again have four criteria in this installment of "Adu Software". The first is functionality, weighted at 40%. We believe that every IRC client must be able to offer its users good functionality. A client that is pretty and packed with exotic features but cannot send files would certainly be rather odd. The second criterion is the UI. Not every IRC client user is a computer enthusiast, so a good UI matters. We give the UI a weight of 30%.

-

Upgrade Issues

Upgrade issues

Graph of new conflicts: libboost1.46-dev libboost-random-dev (x 18) libboost-mpi-python1.46.1 libboost-mpi-python-dev libwoodstox-java (x 7) liboss4-salsa-asound2 (x 2) libboost1.46-doc libboost-doc libgnutls28-dev libepc-dev libabiword-2.9-dev libcurl4-openssl-dev (x 5) python-cjson (x 2) nova-compute-kvm (x 4) printer-driver-all-enforce lprng (x 2) mdbtools-dev libiodbc2 tesseract-ocr-deu (x 8) tesseract-ocr libjpeg62-dev libcvaux-dev (x 2) ldtp python-pyatspi

Explanations of conflicts

ldtp python-pyatspi2 python-pyatspi
Similar to ldtp: python-ldtp
Weight: 29
Problematic packages: ldtp

libboost-mpi-python-dev libboost-mpi-python1.48-dev libboost-mpi-python1.48.0 libboost-mpi-python1.46.1
Similar to libboost-mpi-python1.46.1: libboost1.46-all-dev libboost-mpi-python1.46-dev
Similar to libboost-mpi-python-dev: libboost-all-dev
Weight: 149
Problematic packages: libboost-mpi-python-dev

tesseract-ocr-vie tesseract-ocr
Similar to tesseract-ocr: slimrat tesseract-ocr-dev slimrat-nox
Weight: 61
Problematic packages: tesseract-ocr-vie

tesseract-ocr-spa tesseract-ocr
Similar to tesseract-ocr: slimrat tesseract-ocr-dev slimrat-nox
Weight: 295
Problematic packages: tesseract-ocr-spa

tesseract-ocr-por tesseract-ocr
Similar to tesseract-ocr: slimrat tesseract-ocr-dev slimrat-nox
Weight: 133
Problematic packages: tesseract-ocr-por

tesseract-ocr-nld tesseract-ocr
Similar to tesseract-ocr: slimrat tesseract-ocr-dev slimrat-nox
Weight: 112
Problematic packages: tesseract-ocr-nld

tesseract-ocr-ita tesseract-ocr
Similar to tesseract-ocr: