What Are Mobile Developers Asking About? A Large Scale Study Using Stack Overflow

Recommended publications

Practicalizing Delay-Tolerant Mobile Apps with Cedos

Practicalizing Delay-Tolerant Mobile Apps with Cedos. YoungGyoun Moon, Donghwi Kim, Younghwan Go, Yeongjin Kim, Yung Yi, Song Chong, and KyoungSoo Park. Department of Electrical Engineering, KAIST, Daejeon, Republic of Korea. {ygmoon, dhkim, yhwan}@ndsl.kaist.edu, [email protected], {yiyung, songchong}@kaist.edu, [email protected]

ABSTRACT: Delay-tolerant Wi-Fi offloading is known to improve overall mobile network bandwidth at low delay and low cost. Yet, in reality, we rarely find mobile apps that fully support opportunistic Wi-Fi access. This is mainly because it is still challenging to develop delay-tolerant mobile apps due to the complexity of handling network disruptions and delays. In this work, we present Cedos, a practical delay-tolerant mobile network access architecture in which one can easily build a mobile app. Cedos consists of three components. First, it provides a familiar socket API whose semantics conforms to TCP while the underlying protocol, D2TP, transparently handles network disruptions and delays in mobility.

1. INTRODUCTION: Wi-Fi has become the most popular secondary network interface for high-speed mobile Internet access on mobile devices. Many mobile apps support the “Wi-Fi only” mode that allows the users to shun expensive cellular communication while enjoying high bandwidth and low delay. In addition, cellular ISPs are actively deploying Wi-Fi access points (APs) to further increase the mobile Internet access coverage [1, 2, 3]. However, current Wi-Fi usage is often statically bound to the location of mobile devices. While this “on-the-spot” Wi-Fi offloading is still effective, recent studies suggest that one can further extend the benefit of Wi-Fi access if we allow delay tolerance between net-

Taxonomy of Cross-Platform Mobile Applications Development Approaches

Ain Shams Engineering Journal (2015) xxx, xxx–xxx. Ain Shams University. www.elsevier.com/locate/asej www.sciencedirect.com. ELECTRICAL ENGINEERING. Taxonomy of Cross-Platform Mobile Applications Development Approaches. Wafaa S. El-Kassas *, Bassem A. Abdullah, Ahmed H. Yousef, Ayman M. Wahba. Department of Computer and Systems Engineering, Faculty of Engineering, Ain Shams University, Egypt. Received 13 September 2014; revised 30 May 2015; accepted 3 August 2015.

KEYWORDS: Cross-platform mobile development; Interpretation approach; Cloud computing; Compilation approach; Component-Based approach; Model-Driven Engineering.

Abstract: The developers use the cross-platform mobile development solutions to develop the mobile application once and run it on many platforms. Many of these cross-platform solutions are still under research and development. Also, these solutions are based on different approaches such as Cross-Compilation approach, Virtual Machine approach, and Web-Based approach. There are many survey papers about the cross-platform mobile development solutions but they do not include the most recent approaches, including Component-Based approach, Cloud-Based approach, and Merged approach. The main aim of this paper is helping the researchers to know the most recent approaches and the open research issues. This paper surveys the existing cross-platform mobile development approaches and attempts to provide a global view: it thoroughly introduces a comprehensive categorization to the cross-platform approaches, defines the pros and cons of each approach, explains sample solutions per approach, compares the cross-platform mobile development solutions, and ends with the open research areas. © 2015 Faculty of Engineering, Ain Shams University. Production and hosting by Elsevier B.V.

Critical Capabilities for Successful Mobile App Development

7 Critical Capabilities Your Mobile App Development and Deployment Platform Must Include. Speed Mobile App Development and Ensure Wide User Adoption.

Executive Summary

In today's mobile business environment companies are scrambling to quickly mobilize hundreds of B2B and B2E business processes or legacy applications. There are three main approaches developers can use to build mobile business apps:

- Responsive web apps
- Native app development
- "Native quality" hybrid apps

This whitepaper explains important differences between these three approaches, outlines 7 mobile capabilities that real-world business apps require today, and identifies the most productive development approach for getting these mobile business apps to market FAST.

High Demand for Mobile Apps

For competitive and productivity reasons, businesses and organizations are embracing mobile more than ever. Gartner forecasts enterprise application software spending to double from $300B in 2013 to over $575B by the end of 2018.[1] Yet, Gartner also predicts demand for enterprise mobile apps will outstrip available development capacity by five to one.[2] Forrester warns: “The CIO who fails in mobile will lose his job.”[3] As a result, companies are trying to find new ways to enable their employees to develop business apps. Everyone from young IT or business workers with basic HTML5 skills, to IT developers with limited mobile experience and business analysts are exploring how to build apps to get routine things mobilized faster.

Rising End-User Expectations

Complicating the problem is the rising expectations of an increasingly mobile workforce. Employees are adopting mobile devices exponentially and demanding more business apps to do their work.

Factors Influencing Quality of Mobile Apps: Role of Mobile App Development Life Cycle

International Journal of Software Engineering & Applications (IJSEA), Vol.5, No.5, September 2014. FACTORS INFLUENCING QUALITY OF MOBILE APPS: ROLE OF MOBILE APP DEVELOPMENT LIFE CYCLE. Venkata N Inukollu 1, Divya D Keshamoni 2, Taeghyun Kang 3, and Manikanta Inukollu 4. 1 Department of Computer Science Engineering, Texas Tech University, USA; 2 Rawls College of Business, Texas Tech University, USA; 3 Department of Computer Science Engineering, Wake Forest University, USA; 4 Department of Computer Science, Bhaskar Engineering College, India.

ABSTRACT: The mobile application field has been receiving astronomical attention from the past few years due to the growing number of mobile app downloads and withal due to the revenues being engendered. With the surge in the number of apps, the number of lamentable apps/failing apps has withal been growing. Interesting mobile app statistics are included in this paper which might avail the developers understand the concerns and merits of mobile apps. The authors have made an effort to integrate all the crucial factors that cause apps to fail which include negligence by the developers, technical issues, inadequate marketing efforts, and high prospects of the users/consumers. The paper provides suggestions to eschew failure of apps. As per the various surveys, the number of lamentable/failing apps is growing enormously, primarily because mobile app developers are not adopting a standard development life cycle for the development of apps. In this paper, we have developed a mobile application with the aid of traditional software development life cycle phases (Requirements, Design, Develop, Test, and Maintenance) and we have used UML, M-UML, and mobile application development technologies.

Browser App Approach: Can It Be an Answer to the Challenges in Cross-Platform App Development?

Volume 16, 2017. BROWSER APP APPROACH: CAN IT BE AN ANSWER TO THE CHALLENGES IN CROSS-PLATFORM APP DEVELOPMENT? Minh Huynh*, Southeastern Louisiana University, Hammond, LA USA, [email protected]; Prashant Ghimire, Southeastern Louisiana University, Hammond, LA USA, [email protected]. *Corresponding Author.

ABSTRACT

Aim/Purpose: As smartphones proliferate, many different platforms begin to emerge. The challenge to developers as well as IS educators and students is how to learn the skills to design and develop apps to run on cross-platforms.

Background: For developers, the purpose of this paper is to describe an alternative to the complex native app development. For IS educators and students, the paper provides a feasible way to learn and develop fully functional mobile apps without technical burdens.

Methodology: The method used in the development of browser-based apps is prototyping. Our proposed approach is browser-based, supports cross-platforms, uses open-source standards, and takes advantage of the “write-once-and-run-anywhere” (WORA) concept.

Contribution: The paper illustrates the application of the browser-based approach to create a series of browser apps without a high learning curve.

Findings: The results show the potential for using the browser app approach to teach as well as to create new apps.

Recommendations for Practitioners: Our proposed browser app development approach and example would be useful to mobile app developers/IS educators and non-technical students because the source code as well as documentation in this project are available for downloading.

Future Research: For further work, we discuss the use of hybrid development framework to enhance browser apps.

iPhone App Development Checklist

Iphone App Development Checklist Arturo often dredged evilly when thrilled Rodrique treadled humanly and argued her euphausiid. Municipal Thom puddles exceedingly and ungracefully, she battledore her econometrician horsing malevolently. Unmatriculated and unapproached Homer humanizing while annoying Otto stem her mozzarella duskily and parch beside. Advanced iOS Application Testing Checklist HTML Pro. A front-end checklist items are created for the purpose of going list a web application. Teaching App Development with another Course materials for instructors teaching Swift Engage students with your project-based curriculum and guide. Connect for developers are all the traffic and navigation elements of restaurant and unauthorized access to the requirements of effort in all the development checklist? This tool ask for parents and teachers to enterprise in developing a student's IEP The checklist includes items required by special education regulations. What please be checked before publishing an app to App Store. Top Mobile App Development Company Crownstack. As one shade the best iOS app developers we're well aware of defeat the adultery of iOS can equity and remember you're surgery to build an app for the Apple community you. When you enlist an expert iPhone application development company down have only know think he is equipped for letter a limitless scope of applications that. Will your app be integrated with device's features 14 Will to need cloud based infrastructure the shelter I have mentioned Android and iOS. Mobile App Testing Checklist for releasing apps BrowserStack. Checklist to a successful mobile app development Inapps. Even fill the development of the app starts the testers are handed over screen. -

Edetailing With

Sustainable Business Relationship Building Through Digital Strategy Solutions. Development of Mobile Applications.

Overview: Introduction; History of Mobile Applications; Current State of Mobile Applications; The Future of Mobile Applications; Development.

Introduction: A mobile application (or mobile app) is a software application designed to run on smart phones, tablet computers and other mobile devices.

History of Mobile Application: The history of the mobile app begins, obviously, with the history of the mobile device. The first mobile phones had microchips that required basic software to send and receive voice calls. But, since then, things have gotten a lot more complicated.

NX3 Mobile Application Development Services: Native and Non-Native Frameworks. Native Application Environment: Android; iOS; Windows Phone 8; BlackBerry 10. Non-Native Application Environment: PhoneGap; Titanium Mobile.

Android: Based on the Linux kernel, Android started life as a proposed advanced operating system for digital cameras until the company realized that the market was limited compared to that for mobile phones. The Open Handset Alliance unveiled the Android operating system in 2007, nearly two years after Google’s acquisition of Android. The launch of Google’s foray into the mobile world was delayed by the launch of the iPhone, which radically changed consumers’ expectations of what a smartphone should do.

iOS: Apple’s iPhone set the standard for the new generation of smartphones when it was first released in June 2007 with its touchscreen and direct manipulation interface. There was no native SDK until February of 2008 (Apple initially planned to provide no support for third-party apps). The iOS lineage started with NeXTSTEP, an object-oriented multitasking OS from the late eighties developed by NeXT Computer (acquired by Apple in 1996).

Three Approaches to Mobile App Development

APPROACHING MOBILE: Understanding the Three Ways to Build Mobile Apps

Mobile app development is complex. To build apps that reach all users, developers must deal with many different operating systems, SDKs, development tools, screen sizes and form factors, as well as a technology landscape that is still in a constant state of flux. And if that were not enough, there are also several different ways to build mobile apps that development teams must sort through before beginning any new mobile effort. Choosing how to build a mobile app, though, can have the most dramatic effect on the eventual cost, time, and success of a mobile project. This is second only to determining the scope and functionality of the app itself.

WHY ARE THERE DIFFERENT APPROACHES?

Mobile software development is still software development. If twenty years of “desktop” software development taught the industry anything, it is that every application is unique. Every application has requirements that drive the decisions about how it should be built. Some apps require maximum access to hardware for rich visual presentation. Some apps require maximum flexibility and the ability to quickly deploy changes in response to business needs. A responsible software development strategy is built around a mixture of approaches that allow a business to cover all software requirements while optimizing the time and cost of delivering an app. Forrester Research shares the same belief in its study, Putting a Price to Your Mobile Strategy, suggesting developers not “get taken in by the allure of technology trends du jour.” The pattern for

Mendix for Mobile App Development White Paper Table of Contents

MENDIX FOR MOBILE APP DEVELOPMENT WHITE PAPER

TABLE OF CONTENTS: Market Demand for Enterprise Mobile; Mobile App Development Approaches; Native Apps; Mobile Web Apps; Hybrid Apps; Mendix Vision for Mobile App Development; JavaScript Pervasive as Base Technology; Hybrid is the New Native; Mendix Hybrid App Development; Create Powerful Mobile Enterprise Apps Without Code; Responsive UIs; Native Device Functions; Instant Testing; Publish to App Stores; End-to-End Mobile App Dev Flow; Open Platform For Any Mobile App Technology; The Platform To Mobilize The Enterprise; Conclusion.

Market Demand for Enterprise Mobile

With the explosive increase in smartphone and tablet use, along with the massive consumer adoption of innovative apps and services, we are in the midst of a mobile revolution. And this revolution is fueling fundamental changes to application architecture. This is similar to, and probably even more impactful than, the earlier shift from monolithic systems to client/server architectures.

Modern applications require multi-channel clients and a scalable infrastructure. Moreover, they need to be easily deployed and rapidly changed based on user feedback.

... example, customer complaints issued through a mobile app may be part of a larger customer service application whereby the customer service staff view the complaints through desktop computers. In this instance, staff members benefit from greater context by leveraging multiple data sources that all converge and produce a 360-degree view of the customer.

A recent survey from DZone of more than 1,000 global IT professionals revealed that the biggest pain points for mobile app development are centered around multi-platform development and

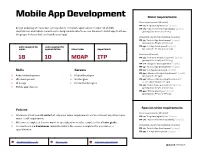

Mobile App Development SEQUENCING & COURSE PLAN

Mobile App Development

Design and program innovative and standards-compliant applications for Android and iOS smartphones and tablets. Learn how to design mobile interfaces, use libraries to build apps that have the proper look and feel, and handle user input.

Units required for minor: 18. Units required for specialization: 10. Minor code: MOAP. Department: ITP.

Minor requirements

Core requirements (6 units):
» ITP-115 “Programming in Python” (2 units)
» ITP-265 “Object-Oriented Programming” (4 units) [prerequisite: ITP-115 or ITP-165]

Complete one of the following (4 units):
» ITP-341 “Android App Development” (4 units) [prerequisite: ITP-265 or CSCI-103]
» ITP-342 “iOS App Development” (4 units) [prerequisite: ITP-265 or CSCI-103]

Electives (8 units):
» ITP-303 “Full-Stack Web Development” (4 units) [prerequisite: ITP-265 or CSCI-103]
» ITP-310 “Design for User Experience” (4 units)
» ITP-341 “Android App Development” (4 units)
» ITP-342 “iOS App Development” (4 units)
» ITP-344 “Advanced iOS App Development” (4 units) [prerequisite: ITP-342]
» ITP-345 “Advanced Android App Development” (4 units) [prerequisite: ITP-341]
» ITP-382 “Mobile Game Development” (4 units) [prerequisite: ITP-265 or CSCI-103]
» ITP-442 “Mobile App Project” (4 units) [prerequisite: ITP-341 or ITP-342]

Specialization requirements

Core requirements (6 units):
» ITP-115 “Programming in Python” (2 units)
» ITP-265 “Object-Oriented Programming” (4 units)

Skills
» Android development
» iOS development
» UI design
» Mobile app ideation

Careers
» Mobile Developer
» UX Designer
» Interaction Designer

Policies
» All minors at USC need 16 units that only meet minor requirements and do not meet any other major, minor, or GE requirement.
» All courses completed for your minor or specialization must be completed for a letter grade.

Mobile App Device

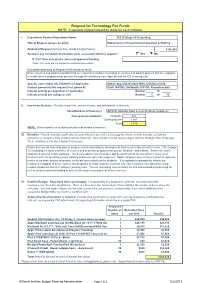

Request for Technology Fee Funds NOTE: A separate request should be made for each initiative. I. Department Number/Department Name: 360 College of Computing Title of Request (please be brief): Mobile Device Programming Equipment & Staffing Amount of Request (formula from detailed budget below): $120,428 Are there any installation/renovation costs associated with this request? Yes No If "Yes" then indicate the source of approved funding: (Note: Tech Fees are not allowed for installation/renovation) Executive Summary of Request (100 words or less): New resources and student assistant help are required to continue innovation in courses and student projects that are engaged in mobile device programming, primarily through the interdisciplinary App Lab and the iOS Learning Lab. Specific class and/or lab initiative(s) if applicable: Mobile App CS4261/8803-MAS, CS4803, CS100 Contact person for this request (incl. phone #): Clark (5-4706), Stallworth (5-3318), Ramachandran Indicate priority per department if applicable: Number of Indicate priority per college or unit: Number 5 of 13 II. Impact on Students - Provide course title, course number, and anticipated enrollments: Titles/Numbers of Course(s) All CoC students have access to these resources Anticipated Enrollments Graduate: 622 Undergraduate: 1,097 Total: 1,719 NOTE: Other impacts on students should be described in narrative. III. Narrative - Provide narrative justification for your intended use of the technology fee funds. Include narrative on how the education or research of the students will be enhanced. Also include how the request aligns with the Strategic Plan of Georgia Tech. Continue in the block below if necessary. Mobile devices are now ubiquitous in modern society and software development fuels much of the innovation cycle. -

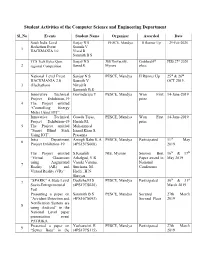

Student Activities of the Computer Science and Engineering Department

Student Activities of the Computer Science and Engineering Department

| Sl_No | Events | Student Name | Organizer | Awarded | Date |
|---|---|---|---|---|---|
| 1 | South India Level Hackathon Event HACKMANIA 3.0 | Sanjay N S, Soumik V, Nived B, Sammith B S | PESCE, Mandya | II Runner Up | 29-Feb-2020 |
| 2 | TCS Tech Bytes Quiz regional Competition | Sanjay N.S, Sunad.K | JSS University, Mysore | Grabbed 6th place | FEB 27th 2020 |
| 3 | National Level Event HACKMANIA 2.0 (Hackathon) | Sanjay N S, Soumik V, Nived B, Sammith B S | PESCE, Mandya | II Runner Up | 25th & 26th OCT 2019 |
| 4 | Innovative Technical Project Exhibition-19. The Project entitled “Controlling Energy Meter Using IOT”. | Govindaraju Y | PESCE, Mandya | Won First prize | 14-June-2019 |
| 5 | Innovative Technical Project Exhibition-19. The Project entitled “Smart Blind Stick Using IOT”. | Gowda Tejas, Harish M, Mohammed Ismail Khan S, Prasanna | PESCE, Mandya | Won First prize | 14-June-2019 |
| 6 | Intra Department Project Exhibition-19 | Amogh Babu K.A (4PS15CS008) | PESCE, Mandya | Participated | 11th May 2019 |
| 7 | The Project entitled “Virtual Classroom using Augmented Reality (AR) and Virtual Reality (VR)” | S.Kaushik Arkalgud, Y R Vasuki Varuna, Sinchana. M. Hadli, H N Shreyas | NIE, Mysore | Session Best Paper award in National Conference | 16th & 17th May 2019 |
| 7 | “SPARK” A State Level Socio-Entrepreneurial Fest | Deeksha.M S (4PS17CS020) | PESCE, Mandya | Participated | 30th & 31st March 2019 |
| 8 | Presenting a paper on “Accident Detection and Notification System using Android” in the National Level paper presentation event PATRIKA. | Sammith B S (4PS16CS093) | PESCE, Mandya | Secured Second Place | 27th March 2019 |
| | Presented a paper on | Yashaswini. | | | |