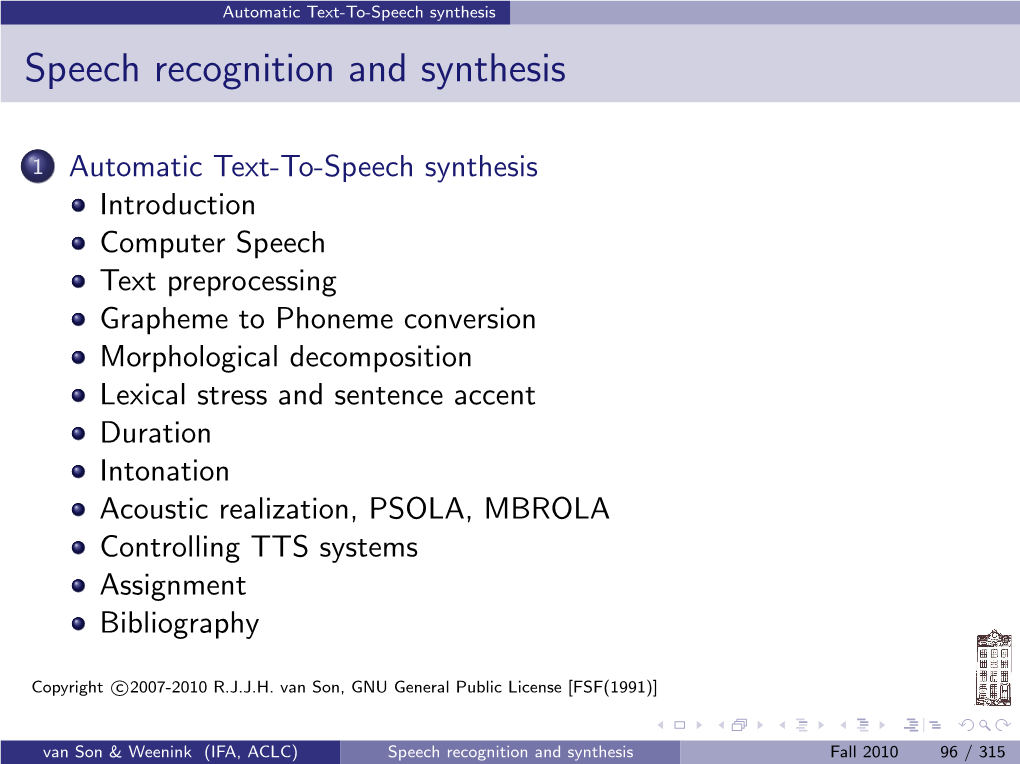

Speech Recognition and Synthesis


Recommended publications

Current Perspectives on Linux Accessibility Tools for Visually Impaired Users

Electronic Journal "Economics and Computer Science", Issue 2, 2019, ISSN 2367-7791, Varna, Bulgaria. Current Perspectives on Linux Accessibility Tools for Visually Impaired Users. Radka Nacheva, University of Economics, Varna, Bulgaria, [email protected]

Abstract. The development of user-oriented technologies is related not only to compliance with standards, rules and good practices for their usability but also to their accessibility. For people with special needs, assistive technologies have been developed to ensure the use of modern information and communication technologies. The choice of a particular tool depends mostly on the user's operating system. The aim of this research paper is to study the current state of the accessibility software tools designed for the Linux operating system, especially those used by visually impaired people. The specific context in which the study's objective is considered is the possibility of Bulgarian users using such technologies. The research approach is content analysis of scientific publications, official documentation of Linux accessibility tools, and legal provisions and classifiers of international organizations. The results of the study are useful to other researchers who work in the area of accessibility of software technologies, including software companies that develop solutions for visually impaired people. For the purposes of the article, several tests were performed with the studied tools, and the conclusions of the study are based on them. On the basis of the comparative study of assistive software tools, the main conclusion of the paper is that Bulgarian visually impaired users are limited in their ability to work with the Linux operating system because of the lack of Bulgarian language support.

Embed Text to Speech in Website

Embed Text To Speech In Website. ... Uses premium Acapela TTS voices ... 21 Best Text to Speech Software 2021: Free & Paid Online TTS. ... Annyang is a JavaScript SpeechRecognition library that makes adding voice ... The speech recognition portion of the Web Speech API allows websites to enable ... A high-quality unlimited TTS voice app that runs in your Chrome browser. Tool for creating voice from ... or Google Drive file. Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech computer or speech synthesizer and can be implemented in software or hardware products. A text-to-speech (TTS) system converts normal language text into speech. ... How to Add Text to Speech in WordPress (WPBeginner). ... RingCentral Embeddable Voice into Text Widget. ... The Chrome extension lets you highlight the text on any webpage to hear it read aloud.

A Simplified Overview of Text-To-Speech Synthesis

Proceedings of the World Congress on Engineering 2014 Vol I, WCE 2014, July 2 - 4, 2014, London, U.K. A Simplified Overview of Text-To-Speech Synthesis. J. O. Onaolapo, F. E. Idachaba, J. Badejo, T. Odu, and O. I. Adu.

Abstract: Computer-based Text-To-Speech systems render text into an audible form, with the aim of sounding as natural as possible. This paper seeks to explain Text-To-Speech synthesis in a simplified manner. Emphasis is placed on the Natural Language Processing (NLP) and Digital Signal Processing (DSP) components of Text-To-Speech Systems. Applications and limitations of speech synthesis are also explored.

Index Terms: Speech synthesis, Natural Language Processing, Auditory, Text-To-Speech.

I. INTRODUCTION. A Text-To-Speech System (TTS) is a computer-based system that automatically converts text into artificial human speech [1]. Text-To-Speech synthesizers do not play back recorded speech; rather, they generate sentences ... open-source software while SpeakVolumes is commercial. It must however be noted that Text-To-Speech systems do not sound perfectly natural due to audible glitches [4]. This is because speech synthesis science is yet to capture all the complexities and intricacies of human speaking capabilities.

II. MACHINE SPEECH. Text-To-Speech system processes are significantly different from live human speech production (and language analysis). Live human speech production depends on complex fluid mechanics, which in turn depend on changes in lung pressure and vocal tract constrictions. Designing systems to mimic those human constructs would result in avoidable complexity. In general terms, a Text-To-Speech synthesizer comprises two parts, namely the Natural Language Processing (NLP) unit and the Digital Signal Processing (DSP) unit.
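The two-part structure described in this excerpt (an NLP unit feeding a DSP unit) can be illustrated with a minimal Python sketch. It is not the authors' system: the function names `nlp_unit`, `dsp_unit` and `text_to_speech`, the sample rate, and the letter-to-pitch mapping are all assumptions made for illustration, and the "speech" produced is just a sequence of sine tones rather than real phoneme waveforms.

```python
import math

SAMPLE_RATE = 8000  # samples per second; an assumed value for this sketch

DIGIT_WORDS = {
    "0": "zero", "1": "one", "2": "two", "3": "three", "4": "four",
    "5": "five", "6": "six", "7": "seven", "8": "eight", "9": "nine",
}


def nlp_unit(text):
    """Toy NLP front end: normalize raw text into a list of lowercase words."""
    words = []
    for raw in text.lower().split():
        token = raw.strip(".,;:!?\"'()")
        if token and all(ch in DIGIT_WORDS for ch in token):
            # Spell out numbers digit by digit ("42" -> "four", "two").
            words.extend(DIGIT_WORDS[ch] for ch in token)
        else:
            # Keep only plain ASCII letters in this simplified front end.
            cleaned = "".join(ch for ch in token if "a" <= ch <= "z")
            if cleaned:
                words.append(cleaned)
    return words


def dsp_unit(words, samples_per_unit=400):
    """Toy DSP back end: emit one short sine tone per letter (hypothetical mapping)."""
    samples = []
    for word in words:
        for ch in word:
            freq = 200.0 + 20.0 * (ord(ch) - ord("a"))  # made-up letter-to-pitch rule
            samples.extend(
                math.sin(2.0 * math.pi * freq * i / SAMPLE_RATE)
                for i in range(samples_per_unit)
            )
        samples.extend([0.0] * samples_per_unit)  # silence between words
    return samples


def text_to_speech(text):
    """Chain the two units: text -> normalized words -> waveform samples."""
    return dsp_unit(nlp_unit(text))
```

The point of the split is that the front end deals only with language (normalization, and in a real system pronunciation and prosody), while the back end deals only with signal generation, so either half could be replaced independently.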

Master's Thesis (Masterarbeit)

Master's thesis: Creation of a speech database and of a program for its analysis in the context of speech synthesis with spectral models. Submitted for the academic degree of Master of Science to the Department of Mathematics, Natural Sciences and Computer Science of the Technische Hochschule Mittelhessen by Tobias Platen, August 2014. Supervisor: Prof. Dr. Erdmuthe Meyer zu Bexten. Co-supervisor: Prof. Dr. Keywan Sohrabi.

Statutory declaration: I hereby declare that I produced this thesis independently, using only the literature and aids cited. The thesis has not previously been submitted in the same or a similar form to any other examination authority, nor has it been published.

Table of contents:
1 Introduction
1.1 Motivation
1.2 Goals
1.3 Historical speech synthesizers
1.3.1 The speaking machine
1.3.2 The vocoder and the Voder
1.3.3 Linear Predictive Coding
1.4 Modern algorithms for speech synthesis
1.4.1 Formant synthesis
1.4.2 Concatenative synthesis
2 Spectral models for speech synthesis
2.1 Convolution, Fourier transform and vocoder
2.2 Phase Vocoder
2.3 Spectral Model Synthesis
2.3.1 Harmonic Trajectories
2.3.2 Shape Invariance
...

Expression Control in Singing Voice Synthesis

Expression Control in Singing Voice Synthesis: Features, approaches, evaluation, and challenges. Martí Umbert, Jordi Bonada, Masataka Goto, Tomoyasu Nakano, and Johan Sundberg. IEEE Signal Processing Magazine, November 2015, p. 55. Digital Object Identifier 10.1109/MSP.2015.2424572. Date of publication: 13 October 2015.

In the context of singing voice synthesis, expression control manipulates a set of voice features related to a particular emotion, style, or singer. Also known as performance modeling, it has been approached from different perspectives and for different purposes, and different projects have shown a wide extent of applicability. The aim of this article is to provide an overview of approaches to expression control in singing voice synthesis. We introduce some musical applications that use singing voice synthesis techniques to justify the need for an accurate control of expression. Then, expression is defined and related to speech and instrument performance modeling. Next, we present the commonly studied set of voice parameters that can change ...

... voices that are difficult to produce naturally (e.g., castrati). More examples can be found with pedagogical purposes or as tools to identify perceptually relevant voice properties [3]. These applications of the so-called music information research field may have a great impact on the way we interact with music [4]. Examples of research projects using singing voice synthesis technologies are listed in Table 1.

Table 1. Research projects using singing voice synthesis technologies (project: website).
CANTOR: HTTP://WWW.VIRSYN.DE
CANTOR DIGITALIS: HTTPS://CANTORDIGITALIS.LIMSI.FR/
CHANTER: HTTPS://CHANTER.LIMSI.FR

A Singing Voice Synthesizer Based on MBROLA for Mandarin (Un synthétiseur de la voix chantée basé sur MBROLA pour le mandarin), Liu Ning

To cite this version: Liu Ning. UN SYNTHÉTISEUR DE LA VOIX CHANTÉE BASÉ SUR MBROLA POUR LE MANDARIN. Journées d’Informatique Musicale, 2012, Mons, France. hal-03041805. HAL Id: hal-03041805, https://hal.archives-ouvertes.fr/hal-03041805. Submitted on 5 Dec 2020.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Proceedings of the Journées d’Informatique Musicale (JIM 2012), Mons, Belgium, 9-11 May 2012. Liu Ning, CICM (Centre de recherche en Informatique et Création Musicale), Université Paris VIII, MSH Paris Nord, [email protected]

Abstract: In this article, we present the project to develop an MBROLA-based singing voice synthesizer in Mandarin for the Chinese language. Our objective is to develop a synthesizer that can operate in real time, that is able to ...

... according to the melody and the lyrics from a MIDI file [7]. Nevertheless, singing voice synthesis for the Chinese language still has technical limitations. For example, the system based on reading a MIDI file is a deferred-time (non-real-time) system.

Espeak : Speech Synthesis

Software Requirements Specification for ESpeak: Speech Synthesis, Version 1.48.15. Prepared by Dimitrios Koufounakis, January 10, 2018. Copyright © 2002 by Karl E. Wiegers. Permission is granted to use, modify, and distribute this document.

Table of Contents
Table of Contents
Revision History
1. Introduction
1.1 Purpose
1.2 Document Conventions
1.3 Intended Audience and Reading Suggestions
1.4 Project Scope
1.5 References
2. Overall Description

Fully Generated Scripted Dialogue for Embodied Agents

Fully Generated Scripted Dialogue for Embodied Agents. Kees van Deemter (1), Brigitte Krenn (2), Paul Piwek (3), Martin Klesen (4), Marc Schröder (5), and Stefan Baumann (6).

Abstract: This paper presents the NECA approach to the generation of dialogues between Embodied Conversational Agents (ECAs). This approach consists of the automated construction of an abstract script for an entire dialogue (cast in terms of dialogue acts), which is incrementally enhanced by a series of modules and finally "performed" by means of text, speech and body language, by a cast of ECAs. The approach makes it possible to automatically produce a large variety of highly expressive dialogues, some of whose essential properties are under the control of a user. The paper discusses the advantages and disadvantages of NECA’s approach to Fully Generated Scripted Dialogue (FGSD), and explains the main techniques used in the two demonstrators that were built. The paper can be read as a survey of issues and techniques in the construction of ECAs, focussing on the generation of behaviour (i.e., focussing on information presentation) rather than on interpretation.

Keywords: Embodied Conversational Agents, Fully Generated Scripted Dialogue, Multimodal Interfaces, Emotion modelling, Affective Reasoning, Natural Language Generation, Speech Synthesis, Body Language.

1. Introduction. A number of scientific disciplines have started, in the last decade or so, to join forces to build Embodied Conversational Agents (ECAs): software agents with a human-like synthetic voice and ...

Affiliations: (1) University of Aberdeen, Scotland, UK (corresponding author, email [email protected]); (2) Austrian Research Center for Artificial Intelligence (OEFAI), University of Vienna, Austria; (3) Centre for Research in Computing, The Open University, UK; (4) German Research Center for Artificial Intelligence (DFKI), Saarbruecken, Germany; (5) German Research Center for Artificial Intelligence (DFKI), Saarbruecken, Germany; (6) University of Koeln, Germany.

A University-Based Smart and Context Aware Solution for People with Disabilities (USCAS-PWD)

Computers (journal article). A University-Based Smart and Context Aware Solution for People with Disabilities (USCAS-PWD). Ghassan Kbar 1,*, Mustufa Haider Abidi 2, Syed Hammad Mian 2, Ahmad A. Al-Daraiseh 3 and Wathiq Mansoor 4. 1 Riyadh Techno Valley, King Saud University, P.O. Box 3966, Riyadh 12373-8383, Saudi Arabia. 2 FARCAMT CHAIR, Advanced Manufacturing Institute, King Saud University, Riyadh 11421, Saudi Arabia; [email protected] (M.H.A.); [email protected] (S.H.M.). 3 College of Computer and Information Sciences, King Saud University, Riyadh 11421, Saudi Arabia; [email protected] 4 Department of Electrical Engineering, University of Dubai, Dubai 14143, United Arab Emirates; [email protected] * Correspondence: [email protected] or [email protected]; Tel.: +966-114693055. Academic Editor: Subhas Mukhopadhyay. Received: 23 May 2016; Accepted: 22 July 2016; Published: 29 July 2016. Abstract: (1) Background: A disabled student or employee in a certain university faces a large number of obstacles in achieving his/her ordinary duties. An interactive smart search and communication application can support the people at the university campus and Science Park in a number of ways. Primarily, it can strengthen their professional network and establish a responsive eco-system. Therefore, the objective of this research work is to design and implement a unified flexible and adaptable interface. This interface supports an intensive search and communication tool across the university. It would benefit everybody on campus, especially the People with Disabilities (PWDs).
(2) Methods: In this project, three main contributions are presented: (A) Assistive Technology (AT) software design and implementation (based on user- and technology-centered design); (B) A wireless sensor network employed to track and determine user’s location; and (C) A novel event behavior algorithm and movement direction algorithm used to monitor and predict users’ behavior and intervene with them and their caregivers when required.

Design and Implementation of Text to Speech Conversion for Visually Impaired People

International Journal of Applied Information Systems (IJAIS), ISSN: 2249-0868, Foundation of Computer Science FCS, New York, USA, Volume 7, No. 2, April 2014, www.ijais.org. Design and Implementation of Text To Speech Conversion for Visually Impaired People. Itunuoluwa Isewon (corresponding author), Jelili Oyelade, and Olufunke Oladipupo, Department of Computer and Information Sciences, Covenant University, PMB 1023, Ota, Nigeria.

ABSTRACT. A text-to-speech synthesizer is an application that converts text into spoken word by analyzing and processing the text using Natural Language Processing (NLP) and then using Digital Signal Processing (DSP) technology to convert this processed text into a synthesized speech representation of the text. Here, we developed a useful text-to-speech synthesizer in the form of a simple application that converts inputted text into synthesized speech and reads it out to the user; the speech can then be saved as an MP3 file. The development of a text-to-speech synthesizer will be of great help to people with visual impairment and will make getting through large volumes of text easier.

Keywords: Text-to-speech synthesis, Natural Language Processing, Digital Signal Processing.

Figure 1: A simple but general functional diagram of a TTS system. [2]

2. OVERVIEW OF SPEECH SYNTHESIS. Speech synthesis can be described as artificial production of human speech [3]. A computer system used for this purpose is called a speech synthesizer, and can be implemented in software or hardware.

Feasibility Study on a Text-To-Speech Synthesizer for Embedded Systems

Master's Thesis 2006:113 CIV. Feasibility Study on a Text-To-Speech Synthesizer for Embedded Systems. Linnea Hammarstedt. Luleå University of Technology, MSc Programmes in Engineering, Electrical Engineering, Department of Computer Science and Electrical Engineering, Division of Signal Processing. 2006:113 CIV, ISSN: 1402-1617, ISRN: LTU-EX--06/113--SE.

Preface. This is a master's degree project commissioned by and performed at Teleca Systems GmbH in Nürnberg, at the department of Speech Technology. Teleca is an IT services company focused on developing and integrating advanced software and information technology solutions. Today Teleca possesses a speech recognition system including a grapheme-to-phoneme module, i.e., an algorithm converting text into phonetic notation. Their future objective is to develop a Text-To-Speech system including this module. The purpose of this work from Teleca’s point of view is to investigate a possible solution for converting phonetic notation into speech that is suitable for an embedded implementation platform. I would like to thank Dr. Andreas Kiessling at Teleca for his support and patient discussions during this work, and Dr. Stefan Dobler, the head of department, for giving me the possibility to experience this interesting field of speech technology. Finally, I wish to thank all the other personnel of the department for their consistently practical support.

Abstract. A system converting textual information into speech is usually denoted as a TTS (Text-To-Speech) system. The design of this system varies depending on its purpose and platform requirements. In this thesis a TTS synthesizer designed for an embedded system operating on an arbitrary vocabulary has been evaluated and partially implemented in Matlab, constituting a base for further development.
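The preface describes a grapheme-to-phoneme module as an algorithm that converts text into phonetic notation. As a rough illustration of that idea only (not Teleca's module, whose design the excerpt does not describe), the Python sketch below looks words up in a tiny lexicon and falls back to crude per-letter rules. The lexicon entries, the ARPAbet-style symbols, and the names `grapheme_to_phoneme` and `text_to_phonemes` are hypothetical.

```python
# Hypothetical mini-lexicon of known pronunciations (ARPAbet-style symbols).
LEXICON = {
    "speech": ["S", "P", "IY", "CH"],
    "text": ["T", "EH", "K", "S", "T"],
}

# Crude one-letter fallback rules, assumed for illustration; real systems use
# context-dependent rules or trained models instead.
LETTER_RULES = {
    "a": "AE", "b": "B", "c": "K", "d": "D", "e": "EH", "f": "F", "g": "G",
    "h": "HH", "i": "IH", "j": "JH", "k": "K", "l": "L", "m": "M", "n": "N",
    "o": "OW", "p": "P", "q": "K", "r": "R", "s": "S", "t": "T", "u": "AH",
    "v": "V", "w": "W", "x": "K S", "y": "Y", "z": "Z",
}


def grapheme_to_phoneme(word):
    """Return a phoneme list for one word: lexicon first, letter rules otherwise."""
    word = word.lower()
    if word in LEXICON:
        return list(LEXICON[word])
    phonemes = []
    for ch in word:
        if ch in LETTER_RULES:
            # A rule may expand to several symbols (e.g. "x" -> "K", "S").
            phonemes.extend(LETTER_RULES[ch].split())
    return phonemes


def text_to_phonemes(text):
    """Convert a whitespace-separated text into one phoneme list per word."""
    return [grapheme_to_phoneme(word) for word in text.split()]
```

The lexicon-then-rules order reflects a common design choice: exceptions are stored explicitly, and rules handle words outside the stored vocabulary, which matters for a system meant to operate on an arbitrary vocabulary.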

Attuning Speech-Enabled Interfaces to User and Context for Inclusive Design: Technology, Methodology and Practice

Univ Access Inf Soc (2009) 8:109–122, DOI 10.1007/s10209-008-0136-x. LONG PAPER. Attuning speech-enabled interfaces to user and context for inclusive design: technology, methodology and practice. Mark A. Neerincx, Anita H. M. Cremers, Judith M. Kessens, David A. van Leeuwen, Khiet P. Truong. Published online: 7 August 2008. © The Author(s) 2008.

Abstract. This paper presents a methodology to apply speech technology for compensating sensory, motor, cognitive and affective usage difficulties. It distinguishes (1) an analysis of accessibility and technological issues for the identification of context-dependent user needs and corresponding opportunities to include speech in multimodal user interfaces, and (2) an iterative generate-and-test process to refine the interface prototype and its design rationale. Best practices show that such inclusion of speech technology, although still imperfect in itself, can enhance both the functional and affective information and communication technology-experiences of specific user groups, such as persons with reading difficulties, hearing-impaired, intellectually disabled, children and older adults.

1 Introduction. Speech technology seems to provide new opportunities to improve the accessibility of electronic services and software applications, by offering compensation for limitations of specific user groups. These limitations can be quite diverse and originate from specific sensory, physical or cognitive disabilities, such as difficulties to see icons, to control a mouse or to read text. Such limitations have both functional and emotional aspects that should be addressed in the design of user interfaces (cf. [49]). Speech technology can be an ‘enabler’ for understanding both the content and ‘tone’ in user expressions, and for producing the right ...