Dense Community Detection in Multi-Valued Attributed Networks, Inform

Recommended publications

COMP11 Lab 1: What the Diff?

In this lab you will learn how to write programs that will pass our grading software with flying colors. Navigate into your COMP11 directory and download the starter code for this lab with the following command:

    pull-code11 lab01

(and then to see what you've got)

    cd lab01
    ls

You Do Like We Do (15 mins)

There is nothing magical about how we test and grade your programs. We run your program, give it a preselected input, and check to see if its output matches our expectation. This process is repeated until we're convinced that your solution is fully functional. In this lab we will walk you through that process so that you can test your programs in the same way.

Surely you recall running our encrypt demo program in Lab 0. Run this program again and specify the word "tufts" as the word to encrypt (recall that you must first enter "use comp11" if you have not added this command to your .cshrc profile). Nothing new here. But what if you wanted to do that again? What if you wanted to do it 100 more times? Having to type "tufts" during every single execution would get old fast. It would be great if you could automate the process of entering that input. Well, good news: you can. It's called "redirecting". The basic concept here is simple: instead of manually entering input, you can save that input into a separate file and tell your program to read that file as if the user were entering its contents.
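The run, feed input, compare output cycle described above can be sketched in Python. This is only an illustration under stated assumptions: the lab's own encrypt program and grading scripts are not shown in the excerpt, so `STAND_IN` is a hypothetical replacement program that upper-cases one line of input, and the function names are invented for this sketch.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical stand-in for the program under test: it reads one line
# from standard input and prints it upper-cased.
STAND_IN = [sys.executable, "-c", "print(input().upper())"]


def run_with_redirected_input(command, input_path):
    """Run `command` with standard input read from `input_path`; return its stdout."""
    with open(input_path, "r") as infile:
        result = subprocess.run(
            command, stdin=infile, capture_output=True, text=True, check=True
        )
    return result.stdout


def output_matches(command, input_path, expected_output):
    """Return True when the program's output equals the expected text exactly."""
    return run_with_redirected_input(command, input_path) == expected_output


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        input_file = Path(tmp) / "input.txt"
        input_file.write_text("tufts\n")  # saved once, reused on every run
        print(output_matches(STAND_IN, input_file, "TUFTS\n"))
```

Saving the input in a file means the same test can be repeated any number of times without retyping it, which is the point of redirection.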

Andy's Favorite Milk Quality Dairy Comp Commands Bulk Tank SCC

Bulk Tank SCC Contribution
Command: ECON Select 6
This gives you the contribution of each cow to the bulk tank SCC. I find in most herds, the top 1% high SCC cows usually contribute less than 20% of the total SCC. When you see the number go to 25% or higher, you know that high producing cows are the main contributors. You can see the effect of removing cows from the herd on the bulk tank SCC.

General Herd Information
Command: Sum milk dim scc lgscc prvlg logl drylg for milk>0 lact>0 by lctgp
This gives you a quick summary of each lactation to review. It puts all factors on one page so you can determine areas of concern.

Herd SCC Status
Command: Sum lgscc=4 lgscc=6 for lgscc>0 by lctgp
This gives you the status of the SCC of all cows on test by lactation group and by herd. It will show you the number and percentage of cows with SCC of 200,000 and less, the number and percentage of cows with SCC of 201,000 to 800,000, and the number and percentage of cows with SCC over 800,000. My goal is to have at least 80% of all animals in the herd under 200,000. I want at least 85% of first lactation animals, 80% of second lactation animals, and 75% of third/older lactation animals with SCC under 200,000.

New Infection Rate
Command: Sum lgscc=4 prvlg=4 for lgscc>0 prvlg>0 by lctgp
This command only compares cows that were tested last month to the same cows tested this month.
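The three SCC bands and the 80% herd goal from the Herd SCC Status summary can be worked through in a few lines of Python. This is a sketch, not Dairy Comp output: the function names and the sample herd values are hypothetical, and only the band limits (200,000 and 800,000) and the 80% goal come from the text.

```python
def scc_status(scc_values, low_limit=200_000, high_limit=800_000):
    """Return {band: (count, percent)} for the three SCC bands in the text."""
    total = len(scc_values)
    if total == 0:
        raise ValueError("need at least one cow on test")
    low = sum(1 for scc in scc_values if scc <= low_limit)
    high = sum(1 for scc in scc_values if scc > high_limit)
    middle = total - low - high  # everything between the two limits
    return {
        "low": (low, 100.0 * low / total),
        "middle": (middle, 100.0 * middle / total),
        "high": (high, 100.0 * high / total),
    }


def meets_goal(scc_values, goal_percent=80.0):
    """Check the stated goal: at least `goal_percent` of cows at or under 200,000."""
    return scc_status(scc_values)["low"][1] >= goal_percent


if __name__ == "__main__":
    herd = [90_000, 150_000, 200_000, 180_000, 450_000]  # hypothetical test-day values
    print(scc_status(herd))
    print(meets_goal(herd))
```

The same check could be run per lactation group by passing each group's values separately with its own goal (85, 80, or 75 percent).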

Layer Comps

Video 32: Layer Comps

A layer comp allows us to save the current state of a file so that we can switch between various versions of the document. Layer comps can keep track of three things: the position of layers, the visibility of layers and the layer styles (layer effects). Note that layer comps will not keep track of the stacking order of layers.

When working with layer comps, you’ll need to open the Layer Comps panel, which can be accessed via the Window menu at the top of the interface. To create a layer comp, first set up your document so that it’s in a state you would like Photoshop to remember. Then, click on the New icon at the bottom of the Layer Comps panel. A dialog will appear where you can give the layer comp a name and specify what you’d like it to keep track of. There are check boxes for Visibility, Position and Appearance (Layer Style). When you’re done, click the OK button and you will see the name of the comp appear in the Layer Comps panel.

Figure: The Layer Comps Panel.

To the right of the layer comp name are three icons which indicate what information the comp is keeping track of. From left to right, the icons represent layer visibility, position and styles. When one of the icons is grayed out, it means that the layer comp is not keeping track of that information. You can update the settings for a layer comp at any time by double-clicking to the right of the layer comp name.

UNC COMP 590-145 Midterm Exam 3 Solutions Monday, April 6, 2020

General comments

Haven't quite finished grading E3 (sorry), but will by Wednesday. But let's walk through the solutions. Random question order and answer order.

Question 3: Write a protocol

Write a Clojure protocol for git objects using defprotocol, including methods and parameters. Assume that the implementation will provide a way to access the type and data of a git object. (Hint: what does your program need to do with every git object, regardless of what type it is?)

    (defprotocol GitObject
      (address [_])
      (write-object [_]))

Question 4: Testing

Write a test for the following code using Speclj. Use a different namespace.

    (ns math)

    (defn product
      "Compute the product of a function mapped over a sequence of numbers.
      For example, (product inc [1 2 3]) is 24, and (product inc []) is 1."
      [f xs]
      (reduce * 1 (map f xs)))

Question 4: Testing (solution)

    (ns math-spec
      (:require [speclj.core :refer [describe it should=]]
                [math :as sut]))

    (describe "product"
      (it "returns 1 when the sequence is empty"
        (should= 1 (sut/product inc [])))
      (it "returns the correct answer for a non-empty sequence"
        (should= 24 (sut/product inc [1 2 3]))))

Question 5: Implement a protocol

Implement the following protocol for lists (which have type clojure.lang.PersistentList).
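For readers who do not use Clojure, the `product` function and its two test cases from Question 4 can be mirrored in Python. This is an analogue written for illustration, not part of the exam solutions; `inc` is defined here because Python has no built-in equivalent of Clojure's `inc`.

```python
from functools import reduce
from operator import mul


def inc(x):
    """Python stand-in for Clojure's inc."""
    return x + 1


def product(f, xs):
    """Multiply together f(x) for every x in xs; the empty product is 1."""
    return reduce(mul, map(f, xs), 1)


if __name__ == "__main__":
    # The same two cases the Speclj spec checks.
    assert product(inc, []) == 1
    assert product(inc, [1, 2, 3]) == 24
    print("both cases pass")
```

As in the Clojure version, the initial value 1 is what makes the empty-sequence case well defined.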

V-Comp User Guide

Updated 16 December 2015
Copyright © 2015-2016 by Verity Software House. All Rights Reserved.

Table of Contents

V-Comp™ User Guide
License and Warranty
Latest Versions
New Features in V-Comp™
    Version 1.0
System Requirements
    Minimum System Configurations
    Compliance Mode Requirements
Installation and Setup of V-Comp™
Registering your new software
Frequently Asked Questions
    V-Comp™ Setups

[D:]Path[...] Data Files

Command: APPEND
Syntax: APPEND ;
        APPEND [d:]path[;][d:]path[...]
        APPEND [/X:on|off][/path:on|off] [/E]
Comments: Displays or sets the search path for data files. DOS will search the specified path(s) if the file is not found in the current path.

Command: ASSIGN
Syntax: ASSIGN x=y [...] /sta
Comments: Redirects disk drive requests to a different drive.

Command: ATTRIB
Syntax: ATTRIB [d:][path]filename [/S]
        ATTRIB [+R|-R] [+A|-A] [+S|-S] [+H|-H] [d:][path]filename [/S]
Comments: Sets or displays the read-only, archive, system, and hidden attributes of a file or directory.

Command: BACKUP
Syntax: BACKUP d:[path][filename] d:[/S][/M][/A][/F:(size)] [/P][/D:date] [/T:time] [/L:[path]filename]
Comments: Makes a backup copy of one or more files. (In DOS Version 6, this program is stored on the DOS supplemental disk.)

Command: BREAK
Syntax: BREAK =on|off
Comments: Used from the DOS prompt or in a batch file or in the CONFIG.SYS file to set (or display) whether or not DOS should check for a Ctrl + Break key combination.

Command: BUFFERS
Syntax: BUFFERS=(number),(read-ahead number)
Comments: Used in the CONFIG.SYS file to set the number of disk buffers (number) that will be available for use during data input. Also used to set a value for the number of sectors to be read in advance (read-ahead) during data input operations.

Command: CALL
Syntax: CALL [d:][path]batchfilename [options]
Comments: Calls another batch file and then returns to current batch file to continue.

Command: CHCP
Syntax: CHCP (codepage)
Comments: Displays the current code page or changes the code page that DOS will use.

Command: CHDIR
Syntax: CHDIR (CD) [d:]path
        CHDIR (CD)[..]
Comments: Displays working (current) directory and/or changes to a different directory.
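The lookup order that the APPEND entry describes (current path first, then each appended path) can be illustrated with a short Python sketch. This models only the behaviour stated in the Comments field; it is not how DOS implements APPEND, and the function name and directory layout are hypothetical.

```python
import tempfile
from pathlib import Path


def find_data_file(filename, current_dir, append_paths):
    """Look in the current directory first, then in each appended path in order.

    Returns the first matching Path, or None when the file is nowhere to be found.
    """
    for directory in [Path(current_dir), *map(Path, append_paths)]:
        candidate = directory / filename
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        current = Path(tmp) / "work"
        data = Path(tmp) / "data"
        current.mkdir()
        data.mkdir()
        (data / "report.dat").write_text("sample")  # exists only in the appended path
        print(find_data_file("report.dat", current, [data]))
        print(find_data_file("missing.dat", current, [data]))
```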

Instructions for Adept Utility Programs

Version 12.1

Adept Utility Disk
For use with V+ 12.1 (Edit D & Later)
FLIST README.TXT for program information

General-Purpose Utility Programs
Robot/Motion Utility Programs
AdeptVision Utility Programs
Network Utility Programs
AdeptModules SPEC Data

1984-1997 by Adept Technology, Inc.
Part # 00962-01000, Rev. A
September, 1997

Adept Technology, Inc.
150 Rose Orchard Way • San Jose, CA 95134 • USA • Phone (408) 432-0888 • Fax (408) 432-8707
Otto-Hahn-Strasse 23 • 44227 Dortmund • Germany • Phone (49) 231.75.89.40 • Fax (49) 231.75.89.450
41, rue du Saule Trapu • 91300 • Massy • France • Phone (33) 1.69.19.16.16 • Fax (33) 1.69.32.04.62
1-2, Aza Nakahara Mitsuya-Cho • Toyohashi, Aichi-Ken • 441-31 • Japan • (81) 532.65.2391 • Fax (81) 532.65.2390

The information contained herein is the property of Adept Technology, Inc., and shall not be reproduced in whole or in part without prior written approval of Adept Technology, Inc. The information herein is subject to change without notice and should not be construed as a commitment by Adept Technology, Inc. This manual is periodically reviewed and revised. Adept Technology, Inc., assumes no responsibility for any errors or omissions in this document.

Word Processing)

Government of the People's Republic of Bangladesh
Affiliated University of International Computer Administration Foundation Bangladesh

BASIC TRADE COURSE: COMPUTER OPERATOR (WORD PROCESSING)

Maintenance by Department of Exam Control & Certificate Distribution. House: ICA Administrative Building. Road: 6 Haricharan Roy Road, Faridabad, Sutrapur, Dhaka. Registrar Call Center: 01742364899. E-mail: [email protected]; [email protected]

Name of trades: Computer Applications
Total contact hours: 75×4 = 300 hours

a. Computer Application Fundamentals

Theory:
1. Basic computer hardware: system unit, monitor, keyboard, disk drives, disks and printer.
2. Computer keyboard and its functions.
3. Computer software and software packages.
4. Systematic approaches to start up a computer.
5. DOS and DOS commands CLS, DATE, TIME, Changing drive, DIR, TYPE, VER, VOL, LEVEL, DELETE, ERASE, COPY, RENAME.
6. General rules of typing.
7. Features of a typing CAL package.

Practical:
8. Identify the different functional units of a computer.
9. Apply systematic approaches to start up a computer.
10. Recognize the different areas of the keyboard.
11. Apply the basic DOS commands CLS, DATE, TIME, Changing drive, DIR, TYPE, VER, VOL, LEVEL, DELETE, ERASE, COPY, RENAME.
12. Develop keyboard skills by following standard touch typing rules by using a simple Computer Assisted Learning (CAL) KEYBOARD package:
    12.1 Drill on home keys (a s d f j k l ; spacebar).
    12.2 Drill on (e i enter-key) cumulatively with the above keys.
    12.3 Drill on (r u) cumulatively with the above keys.
    12.4 Drill on (g h) cumulatively with the above keys.
    12.5 Drill on (t y) cumulatively with the above keys.

Finding All Differences in Two SAS® Libraries Using Proc Compare By

By: Bharat Kumar Janapala, Company: Individual Contributor

Abstract: In the clinical industry, validating datasets by parallel programming and comparing the derived datasets is a routine practice, but constant updates in raw data make it increasingly difficult to figure out the differences between two datasets. The COMPARE procedure is a valuable tool in these situations, and for a variety of other purposes including data validation, data cleaning, and pointing out the differences between datasets in an optimized way. The purpose of this paper is, first, to demonstrate the ability of PROC COMPARE and the SASHELP directories to identify the datasets present in various SAS® libraries by listing the uncommon datasets. Secondly, to provide details about the total number of observations and variables present in both datasets by listing the uncommon variables and the datasets with differences in observation number. Thirdly, assuming both datasets are identical, the COMPARE procedure compares the datasets with like names and captures the differences, which can be monitored by assigning the maximum number of differences by variable for optimization. Finally, the COMPARE procedure reads all the differences and provides a consolidated report followed by a detailed description by dataset.

Introduction: In most companies, the most common practice to compare multiple datasets is to use the standard code: “PROC COMPARE BASE = BASE_LIB.DATASET COMPARE = COMPARE_LIB.DATASET.” But comparing all the datasets this way would be a time-consuming process. Hence, I made an attempt to point out all the differences between libraries in the most optimized way with the help of PROC COMPARE and the SASHELP directories.
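The first two checks in the abstract (uncommon datasets, then uncommon variables and differing observation counts for datasets present in both libraries) can be illustrated outside SAS. The Python sketch below is not PROC COMPARE and does not reproduce the paper's code; it assumes each library is a plain dictionary mapping a dataset name to a list of row dictionaries, and every name in it is hypothetical.

```python
def compare_libraries(base, compare):
    """Summarise structural differences between two libraries.

    Each library is a dict: dataset name -> list of rows (dicts of variable -> value).
    """
    report = {
        "only_in_base": sorted(set(base) - set(compare)),
        "only_in_compare": sorted(set(compare) - set(base)),
        "variable_differences": {},
        "observation_count_differences": {},
    }
    for name in sorted(set(base) & set(compare)):
        base_vars = {var for row in base[name] for var in row}
        comp_vars = {var for row in compare[name] for var in row}
        if base_vars != comp_vars:
            # Variables that appear in one dataset but not the other.
            report["variable_differences"][name] = sorted(base_vars ^ comp_vars)
        if len(base[name]) != len(compare[name]):
            report["observation_count_differences"][name] = (
                len(base[name]),
                len(compare[name]),
            )
    return report


if __name__ == "__main__":
    base_lib = {
        "dm": [{"id": 1, "age": 40}, {"id": 2, "age": 52}],
        "ae": [{"id": 1, "term": "headache"}],
    }
    compare_lib = {
        "dm": [{"id": 1, "age": 40, "sex": "F"}],
        "vs": [{"id": 1, "pulse": 70}],
    }
    print(compare_libraries(base_lib, compare_lib))
```

Running the structural checks first narrows the value-level comparison to datasets that exist on both sides, which is the optimization the paper argues for.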

How to Download and Install Microsoft Project Or Other Software Available from CCRI

Microsoft Project and other software are available for free download from CCRI. Go to the following link to begin: https://www.ccri.edu/comp/helpfullinksforstudents.html

Next, click on: Microsoft Azure Enrollment for Computer Science Students

Follow the instructions below to download and install Microsoft Project on your computer:

1. Click the Sign In button, using your CCRI account credentials (just like you would when signing into Blackboard or your MyCCRI account).
2. After signing in, fill in the necessary information on the Azure Dev Tools form.
3. Use your mobile phone number to receive a text message containing a verification code.
4. After you receive the text message with the verification code, type it into the form and select the Verify code button to proceed, as shown in the screenshot below.
5. On the next screen, agree to the terms by clicking the 'I agree...' checkbox and then click the Accept terms button.
6. Once you reach the Microsoft Azure home page, click on the Software link under the Learning Resources section on the left-hand side of the page, as shown in the screenshot below.
7. At the top of the page, in the search bar, type project professional (or the name of another software product) to list the versions available, as shown below. You should see two items listed, for example: Project Professional 2019 and Project Professional 2016. You can download Project 2019, since it's the latest version available.
8. After you click the software link, in the dialog window, click the View Key button to show the product license key, as shown in the screenshot below.

Object Compare

Using Object Compare, you can compare the contents of two different Natural sources. Supported object types are: program, dialog, class, subprogram, subroutine, text, local data area, parameter data area, global data area, helproutine, copycode, function, and adapter.

Note: There is no extra Natural Security support for enabling/disabling the Object Compare plug-in. However, Object Compare uses the system command LIST to read a Natural source. The same Natural Security support that is provided for the LIST command also applies to Object Compare.

This chapter covers the following topics:
    Before You Start
    Elements of Object Compare
    Setting the Options
    Selecting the Objects to be Compared
    Starting Object Compare

Before You Start

Object Compare is an optional plug-in for Natural Studio. The installation of this plug-in is part of the Natural installation. You can either install this plug-in during a first-time installation or install it later in a separate setup process. For further information on the installation procedure, see the Installation documentation.

Before Object Compare can be used, you have to proceed as described in the following topics:
    Prerequisites
    Activating Object Compare

Prerequisites

Work file 30 is used. Therefore, the profile parameter WORK must be set to 30 or higher.

An external tool with a graphical user interface for comparing files must have been installed on the PC. An example of such a tool is ExamDiff. A line command compare tool such as diff.exe does not work.

Activating Object Compare

When Object Compare has been installed, it has to be activated in the Plug-In Manager.

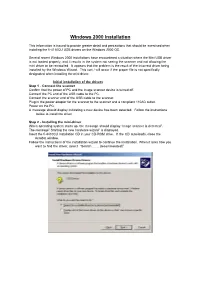

Windows 2000 Installation

This information is issued to provide greater detail and precautions that should be exercised when installing the fi-4110CU USB drivers on the Windows 2000 OS. Several recent Windows 2000 installations have encountered a situation where the Mini USB driver is not loaded properly, with the result that the system does not see the scanner and does not allow the mini driver to be reinstalled. It appears that the problem is the result of the incorrect driver being installed by the Windows Wizard. This can / will occur if the proper file is not specifically designated when installing the mini driver.

Initial installation of the drivers

Step 1 - Connect the scanner
Confirm that the power of the PC and the image scanner device is turned off. Connect the PC end of the USB cable to the PC. Connect the scanner end of the USB cable to the scanner. Plug the power adapter for the scanner into the scanner and a compliant 110AC outlet. Power on the PC. A message should display indicating a new device has been detected. Follow the instructions below to install the driver.

Step 2 - Installing the mini-driver
When the operating system starts up, the message “image scanner is detected” should display. The message “Starting the new hardware wizard” is displayed. Insert the fi-4410CU Installation CD in your CD-ROM drive. If the CD auto-loads, close the Acrobat window. Follow the instructions of the installation wizard to continue the installation. When it asks how you want to find the driver, select “Search……. (recommended)”. When asked to “Locate the Driver File”, select the option “Specify Location” - do not let the Wizard look for files on the CD Drive.