Lab 6: Loop-Level Parallelism
Instructor: Mackale Joyner, Co-Instructor: Zoran Budimlić

Recommended publications

-

Copy — Copy file from Disk Or URL

Title stata.com copy — Copy file from disk or URL Syntax Description Options Remarks and examples Also see Syntax copy filename1 filename2 , options filename1 may be a filename or a URL. filename2 may be the name of a file or a directory. If filename2 is a directory name, filename1 will be copied to that directory. filename2 may not be a URL. Note: Double quotes may be used to enclose the filenames, and the quotes must be used if the filename contains embedded blanks. options Description public make filename2 readable by all text interpret filename1 as text file and translate to native text format replace may overwrite filename2 replace does not appear in the dialog box. Description copy copies filename1 to filename2. Options public specifies that filename2 be readable by everyone; otherwise, the file will be created according to the default permissions of your operating system. text specifies that filename1 be interpreted as a text file and be translated to the native form of text files on your computer. Computers differ on how end-of-line is recorded: Unix systems record one line-feed character, Windows computers record a carriage-return/line-feed combination, and Mac computers record just a carriage return. text specifies that filename1 be examined to determine how it has end-of-line recorded and that the line-end characters be switched to whatever is appropriate for your computer when the copy is made. There is no reason to specify text when copying a file already on your computer to a different location because the file would already be in your computer’s format. -

COMP11 Lab 1: What the Diff?

COMP11 Lab 1: What the Diff? In this lab you will learn how to write programs that will pass our grading software with flying colors. Navigate into your COMP11 directory and download the starter code for this lab with the following command: pull-code11 lab01 (and then to see what you've got) cd lab01 ls You Do Like We Do (15 mins) There is nothing magical about how we test and grade your programs. We run your program, give it a preselected input, and check to see if its output matches our expectation. This process is repeated until we're convinced that your solution is fully functional. In this lab we will walk you through that process so that you can test your programs in the same way. Surely you recall running our encrypt demo program in Lab 0. Run this program again and specify the word \tufts" as the word to encrypt (recall that you must first enter \use comp11" if you have not added this command to your .cshrc profile). Nothing new here. But what if you wanted to do that again? What if you wanted to do it 100 more times? Having to type \tufts" during every single execution would get old fast. It would be great if you could automate the process of entering that input. Well good news: you can. It's called \redirecting". The basic concept here is simple: instead of manually entering input, you can save that input into a separate file and tell your program to read that file as if the user were entering its contents. -

Windows Command Prompt Cheatsheet

Windows Command Prompt Cheatsheet - Command line interface (as opposed to a GUI - graphical user interface) - Used to execute programs - Commands are small programs that do something useful - There are many commands already included with Windows, but we will use a few. - A filepath is where you are in the filesystem • C: is the C drive • C:\user\Documents is the Documents folder • C:\user\Documents\hello.c is a file in the Documents folder Command What it Does Usage dir Displays a list of a folder’s files dir (shows current folder) and subfolders dir myfolder cd Displays the name of the current cd filepath chdir directory or changes the current chdir filepath folder. cd .. (goes one directory up) md Creates a folder (directory) md folder-name mkdir mkdir folder-name rm Deletes a folder (directory) rm folder-name rmdir rmdir folder-name rm /s folder-name rmdir /s folder-name Note: if the folder isn’t empty, you must add the /s. copy Copies a file from one location to copy filepath-from filepath-to another move Moves file from one folder to move folder1\file.txt folder2\ another ren Changes the name of a file ren file1 file2 rename del Deletes one or more files del filename exit Exits batch script or current exit command control echo Used to display a message or to echo message turn off/on messages in batch scripts type Displays contents of a text file type myfile.txt fc Compares two files and displays fc file1 file2 the difference between them cls Clears the screen cls help Provides more details about help (lists all commands) DOS/Command Prompt help command commands Source: https://technet.microsoft.com/en-us/library/cc754340.aspx. -

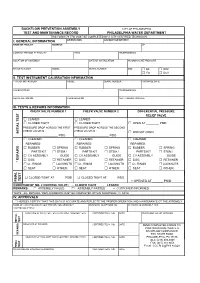

BFP Test Form

BACKFLOW PREVENTION ASSEMBLY CITY OF PHILADELPHIA TEST AND MAINTENANCE RECORD PHILADELPHIA WATER DEPARTMENT THIS FORM (79-770) MUST BE COMPLETED BY A CITY CERTIFIED TECHNICIAN I. GENERAL INFORMATION ORIENTATION ACCOUNT OR METER # NAME OF FACILITY ADDRESS ZIP CONTACT PERSON AT FACILITY TITLE TELEPHONE NO. LOCATION OF ASSEMBLY DATE OF INSTALLATION INCOMING LINE PRESSURE MANUFACTURER MODEL SERIAL NUMBER SIZE □ DS □ RPZ □ FS □ DCV II. TEST INSTRUMENT CALIBRATION INFORMATION TYPE OF INSTRUMENT MODEL SERIAL NUMBER PURCHASE DATE CALIBRATED BY TELEPHONE NO. REGISTRATION NO. CALIBRATED ON NEXT CALIBRATION DUE III. TESTS & REPAIRS INFORMATION CHECK VALVE NUMBER 1 CHECK VALVE NUMBER 2 DIFFERENTIAL PRESSURE RELIEF VALVE □ LEAKED □ LEAKED □ CLOSED TIGHT □ CLOSED TIGHT □ OPEN AT ________ PSID PRESSURE DROP ACROSS THE FIRST PRESSURE DROP ACROSS THE SECOND CHECK VALVE IS : CHECK VALVE IS : INITIAL TEST INITIAL □ DID NOT OPEN ______________________ PSID ______________________ PSID □ CLEANED □ CLEANED □ CLEANED REPAIRED: REPAIRED: REPAIRED: □ RUBBER □ SPRING □ RUBBER □ SPRING □ RUBBER □ SPRING PARTS KIT □ STEM / PARTS KIT □ STEM / PARTS KIT □ STEM / □ CV ASSEMBLY GUIDE □ CV ASSEMBLY GUIDE □ CV ASSEMBLY GUIDE □ DISC □ RETAINER □ DISC □ RETAINER □ DISC □ RETAINER * REPAIRS □ O - RINGS □ LOCKNUTS □ O - RINGS □ LOCKNUTS □ O - RINGS □ LOCKNUTS □ SEAT □ OTHER: □ SEAT □ OTHER: □ SEAT □ OTHER: □ CLOSED TIGHT AT ______ PSID □ CLOSED TIGHT AT ______ PSID TEST FINAL FINAL □ OPENED AT ______ PSID CONDITION OF NO. 2 CONTROL VALVE : □ CLOSED TIGHT □ LEAKED REMARKS : □ ASSEMBLY FAILED □ ASSEMBLY PASSED □ CUSTOMER INFORMED *NOTE : ALL REPAIRS / REPLACEMENTS MUST BE COMPLETED WITHIN FOURTEEN (14) DAYS IV. APPROVALS * I HEREBY CERTIFY THAT THIS DATA IS ACCURATE AND REFLECTS THE PROPER OPERATION AND MAINTENANCE OT THE ASSEMBLY NAME OF CERTIFIED BACKFLOW PREVENTION ASSEMBLY BUSINESS TELEPHONE NO. -

Mac Keyboard Shortcuts Cut, Copy, Paste, and Other Common Shortcuts

Mac keyboard shortcuts By pressing a combination of keys, you can do things that normally need a mouse, trackpad, or other input device. To use a keyboard shortcut, hold down one or more modifier keys while pressing the last key of the shortcut. For example, to use the shortcut Command-C (copy), hold down Command, press C, then release both keys. Mac menus and keyboards often use symbols for certain keys, including the modifier keys: Command ⌘ Option ⌥ Caps Lock ⇪ Shift ⇧ Control ⌃ Fn If you're using a keyboard made for Windows PCs, use the Alt key instead of Option, and the Windows logo key instead of Command. Some Mac keyboards and shortcuts use special keys in the top row, which include icons for volume, display brightness, and other functions. Press the icon key to perform that function, or combine it with the Fn key to use it as an F1, F2, F3, or other standard function key. To learn more shortcuts, check the menus of the app you're using. Every app can have its own shortcuts, and shortcuts that work in one app may not work in another. Cut, copy, paste, and other common shortcuts Shortcut Description Command-X Cut: Remove the selected item and copy it to the Clipboard. Command-C Copy the selected item to the Clipboard. This also works for files in the Finder. Command-V Paste the contents of the Clipboard into the current document or app. This also works for files in the Finder. Command-Z Undo the previous command. You can then press Command-Shift-Z to Redo, reversing the undo command. -

Andv's Favorite Milk Oualitv Dairv Comp Commands Bulk Tank SCC

Andv's Favorite Milk Oualitv Dairv Comp Commands Bulk Tank SCC Contribution: Command: ECON Select 6 This gives you the contribution of each cow to the bulk tank SCC. I find in most herds, the top 1% high SCC cows usually contribute less than 20o/o of the total SCC. When you see the number go to 25o/o or higher. you know that high producing cows are the main contributors. You can see the effect of removins cows from the herd on the bulk tank SCC. General Herd Information Command: Sum milk dim scc lgscc prvlg logl drylg for milk>o lact>O by lctgp This gives you a quick summary of each lactation to review. It puts all factors on one page so you can determine areas of concern. Herd SCC Status: Command: Sum lgscc=4 lgscc=6 for lgscc>O by lctgp This gives you the status of the SCC of all cows on test by lactation group and by herd. It will show you the number and percentage of cows with SCC of 200,000 and less, the number and percentage of cows with SCC of 201,000 to 800,000 and the number and percentage of cows with SCC over 800,000. My goal is to have at least 80% of all animals in the herd under 200,000. I want at least 85% of first lactation animals, 80% of second lactation animals, andl5o/o of third/older lactation animals with SCC under 200,000. New Infection Rate: Command: Sum lgscc:4 prvlg:4 for lgscc>O prvlg>O by lctgp This command only compares cows that were tested last month to the same cows tested this month. -

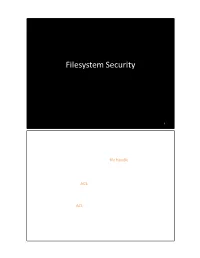

File Permissions Do Not Restrict Root

Filesystem Security 1 General Principles • Files and folders are managed • A file handle provides an by the operating system opaque identifier for a • Applications, including shells, file/folder access files through an API • File operations • Access control entry (ACE) – Open file: returns file handle – Allow/deny a certain type of – Read/write/execute file access to a file/folder by – Close file: invalidates file user/group handle • Access control list (ACL) • Hierarchical file organization – Collection of ACEs for a – Tree (Windows) file/folder – DAG (Linux) 2 Discretionary Access Control (DAC) • Users can protect what they own – The owner may grant access to others – The owner may define the type of access (read/write/execute) given to others • DAC is the standard model used in operating systems • Mandatory Access Control (MAC) – Alternative model not covered in this lecture – Multiple levels of security for users and documents – Read down and write up principles 3 Closed vs. Open Policy Closed policy Open Policy – Also called “default secure” • Deny Tom read access to “foo” • Give Tom read access to “foo” • Deny Bob r/w access to “bar” • Give Bob r/w access to “bar • Tom: I would like to read “foo” • Tom: I would like to read “foo” – Access denied – Access allowed • Tom: I would like to read “bar” • Tom: I would like to read “bar” – Access allowed – Access denied 4 Closed Policy with Negative Authorizations and Deny Priority • Give Tom r/w access to “bar” • Deny Tom write access to “bar” • Tom: I would like to read “bar” – Access -

Instructions for Pwd Candidates Regarding Typing Skill Test

INSTRUCTIONS FOR PWD CANDIDATES REGARDING TYPING SKILL TEST (ON COMPUTER) AND STENOGRAPHY SKILL TEST The following guidelines are applicable to PwD Candidates shortlisted for Typing test or stenography test. 1. Typing test will be conducted on computer. 2. Persons with benchmark disability in the category of Visually impaired, orthopedically handicapped (afflicted by cerebral palsy), orthopedically handicapped (both arm affected), orthopedically handicapped candidates who has a locomotor disability wherein the dominant writing extremity is affected to the extent of slowing down the performance of the candidate (such deficiency to be indicated in the certificate, issued by competent authority, submitted by the candidate) will be allowed compensatory time of 05 minutes. 3. VI Candidates are eligible for scribe/passage dictator. 4. The Scribe/ Passage dictator is identified by the candidate at own cost and as per own choice. 5. The scribe/Passage dictator will read out the passage to VI candidates only within the allotted time. 6. Passage will not be provided in Brail for the VI candidates. 7. A person acting as a Scribe/Passage dictator for one candidate cannot be a Scribe /Passage dictator for another candidate. 8. The scribe/Passage dictator arranged by the candidate should not be a candidate for the same examination. 9. The candidate shall be responsible for any misconduct on the part of the scribe/Passage dictator brought by him during typing test. 10.Persons with Disabilities candidates who claim to be permanently unfit to take the Typing test because of a physical disability may, with the prior approval of the CEPTAM, DRDO be exempted from the requirement of appearing and qualifying at such test, provided such a candidate submits in the attached format (Appendix-I) to the CEPTAM, DRDO from the competent Medical Authority, i.e. -

KEYBOARD SHORTCUTS (Windows)

KEYBOARD SHORTCUTS (Windows) Note: For Mac users, please substitute the Command key for the Ctrl key. This substitution with work for the majority of commands _______________________________________________________________________ General Commands Navigation Windows key + D Desktop to foreground Context menu Right click Alt + underlined letter Menu drop down, Action selection Alt + Tab Toggle between open applications Alt, F + X or Alt + F4 Exit application Alt, Spacebar + X Maximize window Alt, Spacebar + N Minimize window Ctrl + W Closes window F2 Renames a selected file or folder Open Programs To open programs from START menu: Create a program shortcut and drop it into START menu To open programs/files on Desktop: Select first letter, and then press Enter to open Dialog Boxes Enter Selects highlighted button Tab Selects next button Arrow keys Selects next (>) or previous button (<) Shift + Tab Selects previous button _______________________________________________________________________ Microsoft Word Formatting Ctrl + P Print Ctrl + S Save Ctrl + Z Undo Ctrl + Y Redo CTRL+B Make text bold CTRL+I Italicize CTRL+U Underline Ctrl + C Copy Ctrl + V Paste Ctrl + X Copy + delete Shift + F3 Change case of letters Ctrl+Shift+> Increase font size Ctrl+Shift+< Decrease font size Highlight Text Shift + Arrow Keys Selects one letter at a time Shift + Ctrl + Arrow keys Selects one word at a time Shift + End or Home Selects lines of text Change or resize the font CTRL+SHIFT+ > Increase the font size 1 KEYBOARD SHORTCUTS (Windows) CTRL+SHIFT+ < -

Layer Comps Layer Comps a Layer Comp Allows Us to Save the Current State of a File So That We Can Switch Between Various Versions of the Document

video 32 Layer Comps Layer Comps A layer comp allows us to save the current state of a file so that we can switch between various versions of the document. Layer comps can keep track of three things: the position of layers, the visibility of layers and the layer styles (layer ef- fects). Note that layer comps will not keep track of the stacking order of layers. When working with layer comps, you’ll need to open the Layer Comps panel, which can be accessed via the Window menu at the top of the interface. To create a layer comp, first set up your document so that it’s in a state you would like Photoshop to remem- ber. Then, click on the New icon at the bot- tom of the Layer Comps panel. A dialog will appear where you can give the layer comp a name and specify what you’d like it to keep track of. There are check boxes for Visibil- The Layer Comps Panel. ity, Position and Appearance (Layer Style). When you’re done, click the OK button and you will see the name of the comp appear in the Layer Comps panel. To the right of the layer comp name are three icons which indicate what information the comp is keeping track of. From left to right, the icons represent layer visibility, position and styles. When one of the icons is grayed out, it means that the layer comp is not keeping track of that information. You can update the settings for a layer comp at any time by double-clicking to The icons to the right of the name indicate the right of the layer comp name. -

Regression Testing and Conformance Testing Interactive Programs

RegressionTesting and Conformance TestingInteractive Programs Don Libes- NationalInstitute of Standardsand Technology ABSTRACT _ Testinginteractive programs, by its nature,requires interaction - usuallyby real people. Such testing is an expensiveprocess and hencerarely done. Some interactivetools can ¡e used non-interactivelyto a limited extent, and are often tested only this way. Purely interactiveprograms are rarely testedin any systematicway. _ This paper describestesting of interactive line and character-orientedprograms via Expect. An immediate use of this is to build a test suite for automatingstandards conformanceof all of the interactiveprograms in POSH t003.2a (interactiveshells and tools),something which hasnot yet beenaccomplished by any means. Introduction does not produceoutput that is immediatelycon- sumed by another process, Dennis Ritchie said that "A program but may for example, tl] write designedfor inputs from people is usually stressed to a disk or display.) This is the environment beyond the breaking point by computer-generated in which Ritchie'sremark arose. inputs." I would add the following: Any program In practice,there are forms of input that pro- useful to people- interactively- is likely to be use- duction programsdo not generate.For example, ful to programs- non-interactively.A corollary of programsdo not make typing errorsand thereforedo Ritchie's statementis that conect softwarefunction not (press the backspaceor delete key to) delete during normalhuman use is not a very goodtest of a charactersjust produced.Similarly, programs do not program'stotal correctness. enter control characters,such as might be used to process. I claim that even when humansare explicitly interrupt a This suggeststhat Ritchie was too - teqtinginteractive software, the resultsare still quite optimistic even computergenerated inputs still unreliable. -

Timestamp Changes in Case of Copy Command (Win7, Win10)

Timestamp changes in case of copy command (Win7, Win10) Investigating timestamp differences between Windows 7 and Windows 10. I intended to figure out how MACB timestamps of the original and the newly created files are changing during a file copy in Windows. I also checked the differences between the results of the GUI based copy and paste method and the command line based copy command. I compared the changes in case of an in-volume copy and in case of copying to a different volume as well. Tools: These are the tools that were used during my investigation. • Microsoft Windows 10 64-bit v10.0.17134.345 • Microsoft Windows 7 Enterprise SP1 • FTK Imager 4.2 - for creating images about the drives and to save the MFT file • analyzeMFT.py - for MFT parsing (https://github.com/dkovar/analyzeMFT) MACB An NTFS volume stores 8 different timestamps for a single file. These timestamps are the followings: • Modified • Accessed • Changed (Info Entry date change) • Birth (file creation time) All of these 4 information snippets are stored in the $STANDARD_INFO and in the $FILE_NAME as well. The difference between the two attributes: • $STANDARD_INFO: can be modified by user level processes. Therefore it can be altered by anti-forensics utilities. • $FILE_NAME: can only be modified by the system kernel. No known anti-forensics tools can modify it. Method of investigation 1) I generated two files in an NTFS volume. 2) Copied one of the files with copy paste and the other one with copy command from command line into a different directory. 3) Generated two files in an NTFS volume to test out-of-volume copy.