YU GUO 3D GRAPHICS PLATFORMS and TOOLS for MOBILE APPLICATIONS Master of Science Thesis

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Select Smartphones and Tablets with Qualcomm® Quick Charge™ 2.0 Technology

Select smartphones and tablets with Qualcomm® Quick Charge™ 2.0 technology + Asus Transformer T100 + Samsung Galaxy S6 + Asus Zenfone 2 + Samsung Galaxy S6 Edge + Droid Turbo by Motorola + Samsung Note 4 + Fujitsu Arrows NX + Samsung Note Edge + Fujitsu F-02G + Sharp Aquos Pad + Fujitsu F-03G + Sharp Aquos Zeta + Fujitsu F-05F + Sharp SH01G/02G + Google Nexus 6 + Sony Xperia Z2 (Japan) + HTC Butterfly 2 + Sony Xperia Z2 Tablet (Japan) + HTC One (M8) + Sony Xperia Z3 + HTC One (M9) + Sony Xperia Z3 Tablet + Kyocera Urbano L03 + Sony Xperia Z4 + LeTV One Max + Sony Xperia Z4 Tablet + LeTV One Pro + Xiaomi Mi 3 + LG G Flex 2 + Xiaomi Mi 4 + LG G4 + Xiaomi Mi Note + New Moto X by Motorola + Xiaomi Mi Note Pro + Panasonic CM-1 + Yota Phone 2 + Samsung Galaxy S5 (Japan) These devices contain the hardware necessary to achieve Quick Charge 2.0. It is at the device manufacturer’s discretion to fully enable this feature. A Quick Charge 2.0 certified power adapter is required. Different Quick Charge 2.0 implementations may result in different charging times. www.qualcomm.com/quickcharge Qualcomm Quick Charge is a product of Qualcomm Technologies, Inc. Updated 6/2015 Certified Accessories + Air-J Multi Voltage AC Charger + Motorola TurboPower 15 Wall Charger + APE Technology AC/DC Adapter + Naztech N210 Travel Charger + APE Technology Car Charger + Naztech Phantom Vehicle Charger + APE Technology Power Bank + NTT DOCOMO AC Adapter + Aukey PA-U28 Turbo USB Universal Wall Charger + Power Partners AC Adapter + CellTrend Car Charger + Powermod Car Charger -

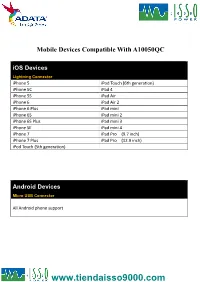

Android Devices

Mobile Devices Compatible With A10050QC iOS Devices Lightning Connector iPhone 5 iPod Touch (6th generation) iPhone 5C iPad 4 iPhone 5S iPad Air iPhone 6 iPad Air 2 iPhone 6 Plus iPad mini iPhone 6S iPad mini 2 iPhone 6S Plus iPad mini 3 iPhone SE iPad mini 4 iPhone 7 iPad Pro (9.7 inch) iPhone 7 Plus iPad Pro (12.9 inch) iPod Touch (5th generation) Android Devices Micro USB Connector All Android phone support Smartphone With Quick Charge 3.0 Technology Type-C Connector Asus ZenFone 3 LG V20 TCL Idol 4S Asus ZenFone 3 Deluxe NuAns NEO VIVO Xplay6 Asus ZenFone 3 Ultra Nubia Z11 Max Wiley Fox Swift 2 Alcatel Idol 4 Nubia Z11miniS Xiaomi Mi 5 Alcatel Idol 4S Nubia Z11 Xiaomi Mi 5s General Mobile GM5+ Qiku Q5 Xiaomi Mi 5s Plus HP Elite x3 Qiku Q5 Plus Xiaomi Mi Note 2 LeEco Le MAX 2 Smartisan M1 Xiaomi MIX LeEco (LeTV) Le MAX Pro Smartisan M1L ZTE Axon 7 Max LeEco Le Pro 3 Sony Xperia XZ ZTE Axon 7 Lenovo ZUK Z2 Pro TCL Idol 4-Pro Smartphone With Quick Charge 3.0 Technology Micro USB Connector HTC One A9 Vodafone Smart platinum 7 Qiku N45 Wiley Fox Swift Sugar F7 Xiaomi Mi Max Compatible With Quick Charge 3.0 Technology Micro USB Connector Asus Zenfone 2 New Moto X by Motorola Sony Xperia Z4 BlackBerry Priv Nextbit Robin Sony Xperia Z4 Tablet Disney Mobile on docomo Panasonic CM-1 Sony Xperia Z5 Droid Turbo by Motorola Ramos Mos1 Sony Xperia Z5 Compact Eben 8848 Samsung Galaxy A8 Sony Xperia Z5 Premium (KDDI Japan) EE 4GEE WiFi (MiFi) Samsung Galaxy Note 4 Vertu Signature Touch Fujitsu Arrows Samsung Galaxy Note 5 Vestel Venus V3 5070 Fujitsu -

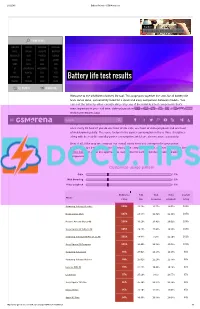

Battery Life Test Results

2/12/2015 Battery life tests, GSMArena.com. Welcome to the GSMArena battery life tool. This page puts together the stats for all battery life tests we've done, conveniently listed for a quick and easy comparison between models. You can sort the table by either overall rating or by any of the individual test components that's most important to you: call time, video playback or web browsing. You can find all about our testing procedures here. Our overall rating gives you an idea of how much battery backup you can get on a single charge. An overall rating of 40h means that you'll need to fully charge the device in question once every 40 hours if you do one hour of 3G calls, one hour of video playback and one hour of web browsing daily. The score factors in the power consumption in these three disciplines along with the real-life standby power consumption, which we also measure separately. Best of all, if the way we compute our overall rating does not correspond to your usage pattern, you are free to adjust the different usage components to get a closer match. Use the sliders below to adjust the approximate usage time for each of the three battery draining components. -
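One way to read the overall rating described in this excerpt is as a drain-rate calculation. The sketch below is only an illustration under stated assumptions and is not GSMArena's published formula: the function name `endurance_rating`, its parameters, and the linear battery-drain model are all hypothetical. It assumes each activity drains the battery at a constant rate, and that every hour of the day not spent on calls, video or browsing is spent in standby.

```python
def endurance_rating(call_h, video_h, web_h, standby_h,
                     daily_call=1.0, daily_video=1.0, daily_web=1.0):
    """Estimate hours between full charges for a fixed daily usage pattern.

    call_h, video_h, web_h, standby_h: hours a full battery lasts when the
    device does only that one activity (hypothetical measured inputs).
    daily_*: hours per day spent on each activity; the rest is standby.
    """
    active = daily_call + daily_video + daily_web
    if active > 24.0:
        raise ValueError("daily usage cannot exceed 24 hours")
    daily_standby = 24.0 - active

    # Fraction of a full battery consumed in one 24-hour day,
    # assuming each activity drains the battery linearly.
    drained_per_day = (daily_call / call_h
                       + daily_video / video_h
                       + daily_web / web_h
                       + daily_standby / standby_h)

    # Hours until one full battery has been consumed.
    return 24.0 / drained_per_day


if __name__ == "__main__":
    # Hypothetical device: 20 h calls, 10 h video, 10 h web, 210 h standby.
    print(round(endurance_rating(20, 10, 10, 210), 1))
```

Changing the `daily_*` arguments plays the role of the usage sliders mentioned in the excerpt: heavier daily use of a component that drains the battery quickly lowers the estimated rating.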

Compatibility Sheet

COMPATIBILITY SHEET SanDisk Ultra Dual USB Drive Transfer Files Easily from Your Smartphone or Tablet Using the SanDisk Ultra Dual USB Drive, you can easily move files from your Android™ smartphone or tablet to your computer, freeing up space for music, photos, or HD videos. Please check for your phone/tablet or mobile device compatibility below. If your device is not listed, please check with your device manufacturer for OTG compatibility. Acer Acer A3-A10 Acer EE6 Acer W510 tab Alcatel Alcatel_7049D Flash 2 Pop4S(5095K) Archos Diamond S ASUS ASUS FonePad Note 6 ASUS FonePad 7 LTE ASUS Infinity 2 ASUS MeMo Pad (ME172V) * ASUS MeMo Pad 8 ASUS MeMo Pad 10 ASUS ZenFone 2 ASUS ZenFone 3 Laser ASUS ZenFone 5 (LTE/A500KL) ASUS ZenFone 6 BlackBerry Passport Prevro Z30 Blu Vivo 5R Celkon Celkon Q455 Celkon Q500 Celkon Millenia Epic Q550 CoolPad (酷派) CoolPad 8730 * CoolPad 9190L * CoolPad Note 5 CoolPad X7 大神 * Datawind Ubislate 7Ci Dell Venue 8 Venue 10 Pro Gionee (金立) Gionee E7 * Gionee Elife S5.5 Gionee Elife S7 Gionee Elife E8 Gionee Marathon M3 Gionee S5.5 * Gionee P7 Max HTC HTC Butterfly HTC Butterfly 3 HTC Butterfly S HTC Droid DNA (6435LVW) HTC Droid (htc 6435luw) HTC Desire 10 Pro HTC Desire 500 Dual HTC Desire 601 HTC Desire 620h HTC Desire 700 Dual HTC Desire 816 HTC Desire 816W HTC Desire 828 Dual HTC Desire X * HTC J Butterfly (HTL23) HTC J Butterfly (HTV31) HTC Nexus 9 Tab HTC One (6500LVW) HTC One A9 HTC One E8 HTC One M8 HTC One M9 HTC One M9 Plus HTC One M9 (0PJA1) -

Keep Your Nice Friends Close, but Your Rich Friends Closer – Computation Offloading Using

Keep Your Nice Friends Close, but Your Rich Friends Closer – Computation Offloading Using NFC Kathleen Sucipto∗, Dimitris Chatzopoulos∗, Sokol Kosta±†, and Pan Hui∗ ∗HKUST-DT System and Media Lab, The Hong Kong University of Science and Technology ±CMI, Aalborg University Copenhagen, Denmark †Sapienza University of Rome, Italy [email protected], [email protected], [email protected], [email protected] Abstract: The increasing complexity of smartphone applications and services necessitates high battery consumption, but the growth of smartphones’ battery capacity is not keeping pace with these increasing power demands. To overcome this problem, researchers gave birth to the Mobile Cloud Computing (MCC) research area. In this paper we advance on previous ideas by proposing and implementing the first known Near Field Communication (NFC)-based computation offloading framework. This research is motivated by the advantages of NFC’s short distance communication, with its better security, and its low battery consumption. We design a new NFC communication protocol that overcomes the limitations of the default protocol; removing the need for constant user interaction, the one-way

surrogates. Not only is this considered to be a potential way to conserve battery power, it can also reduce the total execution time [5], [6], [7]. So far, many computation offloading frameworks using Bluetooth or Wi-Fi have been developed. However, despite having reasonable bandwidth or data rate, they are still facing some limitations, such as: the interference of other WiFi or Bluetooth devices, the Internet connection requirement in the case of MCC, which implies higher energy needs and higher delay [5], and the difficulty in detecting available nearby devices for computation offloading through WiFi-direct or Bluetooth [8]. -

How to Customize Your Xiaomi Mi 3 Phone ? BASIC

How to customize your Xiaomi Mi 3 phone ? BASIC Customize or personalize your Mi 3 to make it look different from others. Here’s a list of suggestions to help you get started. a. Change the display language 1. On your phone, tap Settings > General settings > Language & input > Language. 2. Select the language you want. b. Add additional input languages You can add additional languages on both the Google keyboard and SwiftKey. Here’s how : i. Google keyboard 1. On your phone, tap Settings > General settings > Language & input. 2. Tap next to Google keyboard > Input languages. 3. Slide the Use system language switch to the left to turn it off. With this feature being turned off, you can turn on as many languages as you want below it. 4. On the virtual keyboard, you can tap the key to switch between the languages. ii. SwiftKey 1. On your phone, tap Settings > General settings > Language & input. 2. Tap next to SwiftKey > Languages 3. On the Languages screen, download and select the languages you want. c. Turn on/off the keyboard clicks The keyboard click sound on your Mi 3 is turned off by default. If you wish to turn it on, check out this post for more info. d. Change the ringtone 1. On your phone, tap Settings > General settings > Sound > Phone ringtone 2. Select a ringtone. Related tutorial: How to set a song as your ringtone on Xiaomi Mi 3 ? e. Change the notification tone 1. On your phone, tap Settings > General settings > Sound > Default notification. 2. Select a tone. -

Qualcomm® Quick Charge™ Technology Device List

Qualcomm® Quick Charge™ Technology Device List. Updated 09/2021. One charging solution is all you need. Waiting for your phone to charge is a thing of the past. Quick Charge technology is designed to deliver lightning-fast charging in phones and smart devices featuring Qualcomm® Snapdragon™ mobile platforms and processors, giving you the power, and the time, to do more. TABLE OF CONTENTS Quick Charge 5 Quick Charge 4/4+ Quick Charge 3.0/3+ Quick Charge 2.0 Other Quick Charge Devices Qualcomm Quick Charge and Qualcomm Snapdragon are products of Qualcomm Technologies, Inc. and/or its subsidiaries. Devices • RedMagic 6 • RedMagic 6Pro Chargers • Baseus wall charger (CCGAN100) Controllers* Cypress • CCG3PA-NFET Injoinic-Technology Co Ltd • IP2726S Ismartware • SW2303 Leadtrend • LD6612 Sonix Technology • SNPD1683FJG To learn more visit www.qualcomm.com/quickcharge *Manufacturers may configure power controllers to support Quick Charge 5 with backwards compatibility. Power controllers have been certified by UL and/or Granite River Labs (GRL) to meet compatibility and interoperability requirements. These devices contain the hardware necessary to achieve Quick Charge 5. It is at the device manufacturer’s discretion to fully enable this feature. A Quick Charge 5 certified power adapter is required. Different Quick Charge 5 implementations may result in different charging times. Devices • AGM X3 • Redmi K20 Pro • ASUS ZenFone 6* • Redmi Note 7* • Black Shark 2 • Redmi Note 7 Pro* • BQ Aquaris X2 • Redmi Note 9 Pro • BQ Aquaris X2 Pro • Samsung Galaxy -

Mobile Phones with OTG - USB Host: Current List of Mobile Phones Known to Us as of 2.2.2016

Mobile phones with OTG - USB Host. Current list of mobile phones known to us as of 2.2.2016. Mobile phone list Note Acer Iconia Tab A200 Acer Iconia Tab A210 Acer Iconia Tab A3 Acer Liquid Z630 16GB LTE Alcatel One Touch Evo 8HD Allview C6 Allview P4 LIFE Dual SIM Allview P6 Energy Dual SIM Allview V1 VIPER S4G Dual SIM Allview W1 S Dual SIM Allview X2 SOUL Extreme Dual SIM Allview X2 SOUL Pro Dual SIM Asus Transformer Pad TF103C ASUS ZenFone 2 ZE551ML 32GB Glamor Dual SIM ASUS ZenFone 2 ZE551ML 32GB Osmium Dual SIM EVOLVEO StrongPhone Q6 LTE EVOLVEO StrongPhone Q8 LTE black Gionee Elife E7 Gionee Elife S5.5 HTC Butterfly HTC Butterfly S HTC Desire 500 HTC Desire 600 HTC Desire 601 HTC Desire 700 HTC Desire 700 Dual Sim HTC Desire U HTC Desire X HTC HTC J HTC One HTC One (M8) CDMA HTC One (M8) Dual Sim HTC One M8 HTC One M8 Prima HTC One Max HTC One Mini HTC One X HTC One X+ Huawei Ascend D quad Huawei Ascend D1 Huawei Ascend D1 XL U9500E Huawei Ascend G7 Huawei Ascend Honor 3 Huawei Ascend Mate2 4G Huawei Ascend Mate7 Huawei Ascend P1 Huawei Ascend P6 Huawei Ascend P6 S Huawei Ascend P7 mini Huawei Ascend P7 Sapphire Edition 145 Huawei Ascend P7 Huawei MediaPad Huawei MediaPad 7 Lite Huawei MediaPad S7-301w HUAWEI P8 iGET Blackview Alife P1 G Dual SIM iGET Blackview Alife P1 Pro Dual SIM iGET Blackview BV5000 Dual SIM BlackBerry Z30 Lava Iris Pro 30+ Lenovo A6010 Lenovo IdeaTab A1000 Lenovo IdeaTab A3000 Lenovo IdeaTab S5000 Lenovo IdeaTab S6000 Lenovo IdeaTab S6000F Lenovo IdeaTab S6000H Lenovo IdeaTab S6000L Lenovo Lenovo -

Data Quality Issues in Environmental Sensing with Smartphones

Data Quality Issues in Environmental Sensing with Smartphones Tiago C. de Araújo1,2, Lígia T. Silva2 and Adriano J. C. Moreira1 1Centro de Investigação Algoritmi, Universidade do Minho, Azurém, Guimarães, Portugal 2Centro de Investigação CTAC, Universidade do Minho, Azurém, Guimarães, Portugal [email protected], [email protected], [email protected] Keywords: Smartphones, Mobile Sensing, Participatory Sensing. Abstract: This paper presents the results of a study about the performance and, consequently, the challenges of using smartphones as data gatherers in mobile sensing campaigns for environmental monitoring. It is shown that there are currently a very large number of devices technologically enabled for tech-sensing with minimal interference of the users. On the other hand, the newest devices seem to break the sensor diversity trend, therefore making the approach of environmental sensing in the ubiquitous computing scope using smartphone sensors a more difficult task. This paper also reports on an experiment, emulating different common scenarios, to evaluate whether the readings obtained in daily situations from smartphones rich in environmental sensors are reliable enough to enable useful collaborative sensing. The results obtained are promising for temperature measurements only when the smartphone is not being handled, because the typical use of the device pollutes the measurements due to heat transfer and other hardware aspects. Also, we have found indicators of data quality issues on humidity sensors embedded in smartphones. The reported study can be useful as initial information about the behaviour of smartphones' inner sensors for future crowdsensing application developers. 1 INTRODUCTION According to an industry track report from a specialized website (eMarketer Inc.

the authors could compare their results with official levels measured by proper devices, and they found a high correlation between the results leading to the -

Mobile Mcode 3D Cover Mold Apple Iphone 4-EM02 EM01 Yes 0 Apple Iphone 4S-EM01 EM02 Yes 0 Apple Iphone 5/5S/SE-EM04 EM03 Yes

ExclusiveBay Solutions More than 500 models of 3D Sublimation Mobile Covers, Molds, Silicon Whatsapp or Call +91-8199993691, +91-8587095427 Mobile Mcode 3D Cover Mold Apple Iphone 4-EM02 EM01 Yes 0 Apple Iphone 4S-EM01 EM02 Yes 0 Apple Iphone 5/5s/SE-EM04 EM03 Yes 0 Apple Iphone 5S/5/SE-EM03 EM04 Yes 0 Apple Iphone 6-EM06 EM05 Yes 0 Apple Iphone 6S-EM05 EM06 Yes 0 Samsung Galaxy J7 (2015) EM08 Yes 0 Samsung Galaxy J1 (2015) EM09 Yes Yes Samsung Galaxy J5 (2016) EM10 Yes Yes Samsung Galaxy J5 (2015) EM11 Yes 0 Samsung Galaxy J2 (2016)-EM96 EM12 Yes 0 Samsung Galaxy J2 (2015) EM13 Yes 0 Xiaomi Redmi Note 3 EM14 Yes 0 Samsung Galaxy J7 (2016) EM15 Yes 0 Xiaomi Mi 5 EM16 Yes 0 Xiaomi Mi 4 EM17 Yes Yes Xiaomi Mi 4i EM18 Yes 0 Samsung Galaxy E5(E500) (2016) EM19 Yes 0 Samsung Galaxy E7(E700) (2016) EM20 Yes 0 Motorola Moto X Play EM21 Yes 0 Motorola Moto G Turbo-EM24-EM29 EM22 Yes 0 Motorola Moto X Style EM23 Yes 0 Motorola Moto Turbo-EM22-EM29 EM24 Yes 0 Motorola Moto X Force-EM563 EM25 Yes 0 Motorola Moto X 2nd Gen EM26 Yes 0 Motorola Moto G 1st Gen EM27 Yes 0 Motorola Moto G 2nd Gen EM28 Yes Yes Motorola Moto G 3rd Gen-EM22 EM29 Yes 0 Motorola Moto E 1st Gen EM30 Yes Yes Lenovo Vibe K5-EM45 EM31 Yes Yes Lenovo Vibe K4 Note/A7010-EM272 EM32 Yes 0 Lenovo A2010 4G-EM606/441 EM34 Yes 0 Lenovo A7000/K3 Note-EM342 EM35 Yes 0 Huawei P9 EM36 Yes Yes LeEco (LeTV) Le 2 Pro-EM600 EM41 Yes Yes Lenovo K5 Note EM42 Yes 0 Lenovo Vibe K5 Plus-EM31 EM45 Yes 0 Lenovo Vibe P1 Turbo-EM343 EM46 Yes 0 LG G5 EM47 Yes 0 LG Stylus 2 Plus-EM577 EM48 Yes 0 Micromax -

Select Smartphones with Qualcomm® Quick Charge™ 3.0 Technology

Select Smartphones with Qualcomm® Quick Charge™ 3.0 Technology + Asus ZenFone 3 Deluxe + HTC U11 + Asus ZenFone AR + LeEco Le MAX 2 + Baofeng Matrix (VR) + LeEco (LeTV) Le MAX Pro + BlackBerry KEYone + LeEco Le Pro 3 + BQ Aquaris X + Lenovo ZUK Z2 Pro + BQ Aquaris X Pro + LG G5 + Coolpad Cool Changer S1 + LG G6 + DJI FPV goggles (VR) + LG isai Beat LGV34 + General Mobile GM5+ + LG V20 + GeniusIDEA Follow (drone) + LG V30 + Gionee M2017 + Nokia 8 + Gionee M6S Plus + NuAns NEO + HP Elite x3 + Nubia Z11 + HTC 10 + Nubia Z11 Max + HTC One A9 + Nubia Z11miniS + HTC U Ultra + Philips EverPlay (portable speaker) These devices contain the hardware necessary to achieve Quick Charge 3.0. It is at the device manufacturer’s discretion to fully enable this feature. A Quick Charge 3.0 certified power adapter is required. Different Quick Charge 3.0 implementations may result in different charging times. www.qualcomm.com/quickcharge Qualcomm Quick Charge is a product of Qualcomm Technologies, Inc. Updated 10/2017 Select Smartphones with Quick Charge 3.0 Technology + Qiku N45 + Wileyfox Swift 2 + Qiku N5S + Wileyfox Swift 2 Plus + Qiku Q5 + Wileyfox Swift 2 X + Qiku Q5 Plus + Xiaomi Mi 5 + Sharp Aquos R + Xiaomi Mi 5s + Smartisan M1 + Xiaomi Mi 5s Plus + Smartisan M1L + Xiaomi Mi 6 + Smartisan U1 Pro + Xiaomi Mi Max + Sony Xperia XZ + Xiaomi Mi Max 2 + Sony Xperia XZ Premium + Xiaomi Mi Note 2 + Sugar F7 + Xiaomi MIX + TCL Idol 4-Pro + ZTE Axon 7 + TCL Idol4S + ZTE Axon 7 Max + Vodafone Smart platinum 7 + ZTE Blade Z Max These devices contain the hardware necessary to achieve Quick Charge 3.0. -

Release Notice

WOPE 5.3.49 Release Notice Copyright Backelite 2012 1. Introduction We are happy to announce the release of the HTML5-based framework WOPE 5.3. Note that this release is no longer compatible with the old BKML markup. HTML5 is now the only supported markup. Read the release notes below to find out about all enhancements. 2. Delivery description This delivery includes: • This document Release_Notice-5.3.49-en-US.pdf • The complete WOPE Web application wope-5.3.49.war • Resources used to customize UI widgets ResourcesClient-5.3.49.zip • Examples of customizable error pages wope-errors-5.3.49.zip • The English version of the Developer Handbook Developer_Handbook-5.3.49-en-US.pdf • The English version of the Operating Handbook Operating_Handbook-5.3.49-en-US.pdf 3. New features 3.1. New UI components with mind-blowing effects • 3D coverflow widget. • Animated charts - pie, line, bars - using vector graphics. • Popup widget with overlay effects. • Custom skins with native iOS / Android / Windows Phone look and feel. • Advanced carousel widget with rich content. 3.2. iOS 6 specific features • No letterbox black bars on iPhone 5 when viewing the website in the full-screen webapp mode. • New blur and greyscale CSS3 filters available for calendar and popup widgets. • Fully compatible with Smart App Banners, Splash screens and Retina shortcut icons. • File upload is now available. 3.3. Advanced CSS features • You can now use variables within CSS files for easier customization. • Smoother CSS animations using hardware acceleration if available. 3.4. Enhanced offline adaptation • Automatic rewriting of Manifest file contents with device-tuned resource entries.