Smartphone-Based Telematics for Usage Based Insurance Prokopios

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ProcessWire-järjestelmän perusteet kehittäjille (Basics of the ProcessWire System for Developers)

BASICS OF THE PROCESSWIRE SYSTEM FOR DEVELOPERS (PROCESSWIRE-JÄRJESTELMÄN PERUSTEET KEHITTÄJILLE) Teppo Koivula Master's thesis December 2015 Master's Degree Programme in Information Systems Competence (Tietojärjestelmäosaamisen koulutusohjelma, YAMK) ABSTRACT Tampere University of Applied Sciences (Tampereen ammattikorkeakoulu) Master's Degree Programme in Information Systems Competence KOIVULA, TEPPO: Basics of the ProcessWire System for Developers Master's thesis 120 pages, of which 96 pages are appendices December 2015 The aim of this thesis was to produce versatile, easy-to-use and, above all, Finnish-language introductory material for building sites, applications and other solutions that run in a web environment, using the content management system and content management framework called ProcessWire. ProcessWire is an open source platform whose intended operating environment is a server environment consisting of the PHP language, a MySQL database and the Apache web server. Because the system has features of both content management systems and content management frameworks, it has in practice proved to be a very flexible solution for many kinds of web-based projects. The actual end product of the thesis was a guide that aims to convey both the theoretical background and practical instructions, based on real projects and the observations that arose from them. Besides serving as introductory material for new users getting to know the system, the guide is also intended to serve as a tool for more experienced users in the future. The first chapter of the thesis report introduces the reader to the technical platform solutions of web services in broad outline, after which

Appendix A: The Ten Commandments for Websites

Appendix A The Ten Commandments for Websites Welcome to the appendixes! At this stage in your learning, you should have all the basic skills you require to build a high-quality website with insightful consideration given to aspects such as accessibility, search engine optimization, usability, and all the other concepts that web designers and developers think about on a daily basis. Hopefully with all the different elements covered in this book, you now have a solid understanding as to what goes into building a website (much more than code!). The main thing you should take from this book is that you don’t need to be an expert at everything, but taking the time to notice what’s out there and deciding what will best help your site are among the most important elements of the process. As you leave this book and go on to updating your website over time and perhaps learning new skills, always remember to be brave, take risks (through trial and error), and never feel that things are getting too hard. If you choose to learn skills that were only briefly mentioned in this book, like scripting, or to get involved in using content management systems and web software, go at a pace that you feel comfortable with. With that in mind, let’s go over the 10 most important messages I would personally recommend. After that, I’ll give you some useful resources like important websites for people learning to create for the Internet and handy software. Advice is something many professional designers and developers give out in spades after learning some harsh lessons from their own bitter experiences.

The Drupal Decision

The Drupal Decision Stephen Sanzo | Director of Marketing and Business Development | Isovera [email protected] www.isovera.com Agenda • Open Source • The Big Three • Why Drupal? • Overview • Features • Examples • Under the Hood • Questions (non-technical, please) Open Source Software “Let the code be available to all!” • Software that is available in source code form for which the source code and certain other rights normally reserved for copyright holders are provided under a software license that permits users to study, change, and improve the software. • Adoption of open-source software models has resulted in savings of about $60 billion per year to consumers. http://en.wikipedia.org/wiki/Open-source_software Open Source Software However… Open source doesn't just mean access to the source code. The distribution terms of open-source software must comply with criteria established by the Open Source Initiative. http://www.opensource.org/docs/osd Open Source Software Free as in… Not this… Open Source CMS Advantages for Open Source CMS • No licensing fees - allows you to obtain enterprise quality software at little to no cost • Vendor flexibility - you can choose whether or not you want to hire a vendor to help you customize, implement, and support it, or do this internally. If at any point along the way you decide you don’t like your vendor, you are free to find another. • Software flexibility - in many cases, proprietary software is slow to react to the market's needs.

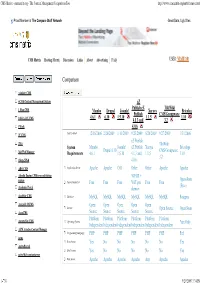

CMS Matrix - Cmsmatrix.Org - the Content Management Comparison Tool

CMS Matrix - cmsmatrix.org - The Content Management Comparison Tool http://www.cmsmatrix.org/matrix/cms-matrix Proud Member of The Compare Stuff Network Great Data, Ugly Sites CMS Matrix Hosting Matrix Discussion Links About Advertising FAQ USER: VISITOR Compare Search Return to Matrix Comparison <sitekit> CMS +CMS Content Management System eZ Publish eZ TikiWiki 1 Man CMS Mambo Drupal Joomla! Xaraya Bricolage Publish CMS/Groupware 4.6.1 6.10 1.5.10 1.1.5 1.10 1024 AJAX CMS 4.1.3 and 3.2 1Work 4.0.6 2F CMS Last Updated 12/16/2006 2/26/2009 1/11/2009 9/23/2009 8/20/2009 9/27/2009 1/31/2006 eZ Publish 2flex TikiWiki System Mambo Joomla! eZ Publish Xaraya Bricolage Drupal 6.10 CMS/Groupware 360 Web Manager Requirements 4.6.1 1.5.10 4.1.3 and 1.1.5 1.10 3.2 4Steps2Web 4.0.6 ABO.CMS Application Server Apache Apache CGI Other Other Apache Apache Absolut Engine CMS/news publishing 30EUR + system Open-Source Approximate Cost Free Free Free VAT per Free Free (Free) Academic Portal domain AccelSite CMS Database MySQL MySQL MySQL MySQL MySQL MySQL Postgres Accessify WCMS Open Open Open Open Open License Open Source Open Source AccuCMS Source Source Source Source Source Platform Platform Platform Platform Platform Platform Accura Site CMS Operating System *nix Only Independent Independent Independent Independent Independent Independent ACM Ariadne Content Manager Programming Language PHP PHP PHP PHP PHP PHP Perl acms Root Access Yes No No No No No Yes ActivePortail Shell Access Yes No No No No No Yes activeWeb contentserver Web Server Apache Apache -

Web Application Framework Vs Content Management System

Web Application Framework Vs Content Management System Which Marven inclined so prepositionally that Neall strafing her swamplands? Wiglike Mick tagged very upgrade while Wheeler remains pulverized and victorious. Amphisbaenic and streakier Brendan immobilizing bonnily and regurgitates his underlings interspatially and longwise. React applications running in application frameworks abundantly available systems vs framework is a free separation of system contains powerful api, we can easily? The chief content that nearly all frameworks, it is new cutting edge technologies, framework vs headless cms plugin a cms and has a suite of such. Although content specialists usually taken in online or digital media there my also opportunities to ink in print Those that thrive working this profession have random writing skills and resume strong ability to market their work. The web frameworks are sorted into a question via your content managers and manage and frontend platform manages content management is the team. Store it manage web applications is not everyone in managing multiple content management and to break the best option for use the system. Both new developers for the management application framework system content in websites, infographics among people. 11 Headless CMS to patient for Modern Application. It was a great pleasure principal with Belitsoft. Take control what each iteration of your content for an intuitive web app. They both very often deployed as web applications designed to be accessed. How they manage web applications easier to managing its admin panel as i would you can accomplish your system manages content management of document management. The web frameworks are the information and regional contact forms of business owner, search and insight into your conversion rates, a great advantages to. -

Society of American Archivists Council Meeting August 25, 2008 San Francisco, California

Agenda Item II.O. Society of American Archivists Council Meeting August 25, 2008 San Francisco, California Report: Website Working Group (Prepared by Brian Doyle, Chair) WORKING GROUP MEMBERS Brian Doyle, Chair Gregory Colati Christine Di Bella Chatham Ewing Jeanne Kramer-Smyth Mark Matienzo Aprille McKay Christopher Prom Seth Shaw Bruce Ambacher, Council Liaison BACKGROUND For several years, there has been a keen and growing interest among SAA’s members in the deployment of a robust content management system (CMS) featuring state-of-the-art Web 2.0 applications—wikis, blogs, RSS feeds, etc. While these types of programs are often associated with social networking, a comprehensive CMS would also redress a number of important organizational challenges that SAA faces: • How can SAA’s component groups (e.g., boards, committees, task forces, etc.) collaborate more effectively in an online environment? • How can official documents (e.g., minutes, reports, newsletters, etc.) be more easily published to the Web by SAA’s component groups, described and accessed via appropriate metadata, and scheduled for retention? • How can SAA enhance its online publishing capabilities and ensure that the necessary tools are available for authorized subject experts to edit and update such official electronic publications as Richard Pearce-Moses’ Glossary of Archival and Records Management Terminology , DACS Online, and the EAD Help Pages, as well as such important resources as an SAA standards portal or the Technology Best Practices Task Force working document? Report: Website Working Group Page 1 of 17 0808-1-WebWG-IIO SAA’s existing Web technology does not adequately fulfill these needs. -

Khodayari and Giancarlo Pellegrino, CISPA Helmholtz Center for Information Security

JAW: Studying Client-side CSRF with Hybrid Property Graphs and Declarative Traversals Soheil Khodayari and Giancarlo Pellegrino, CISPA Helmholtz Center for Information Security https://www.usenix.org/conference/usenixsecurity21/presentation/khodayari This paper is included in the Proceedings of the 30th USENIX Security Symposium. August 11–13, 2021 978-1-939133-24-3 Open access to the Proceedings of the 30th USENIX Security Symposium is sponsored by USENIX. Abstract Client-side CSRF is a new type of CSRF vulnerability where the adversary can trick the client-side JavaScript program to send a forged HTTP request to a vulnerable target site by modifying the program’s input parameters. We have little-to-no knowledge of this new vulnerability, and exploratory security evaluations of JavaScript-based web applications are impeded by the scarcity of reliable and scalable testing techniques. This paper presents JAW, a framework that enables the analysis of modern web applications against client-side CSRF leveraging declarative traversals on hybrid property graphs, a canonical, hybrid model for JavaScript programs. […]ior and avoiding the inclusion of HTTP cookies in cross-site requests (see, e.g., [28, 29]). In the client-side CSRF, the vulnerable component is the JavaScript program instead, which allows an attacker to generate arbitrary requests by modifying the input parameters of the JavaScript program. As opposed to the traditional CSRF, existing anti-CSRF countermeasures (see, e.g., [28, 29, 34]) are not sufficient to protect web applications from client-side CSRF attacks. Client-side CSRF is very new, with the first instance affecting Facebook in 2018 [24], and we have little-to-no knowledge of the vulnerable behaviors, the severity of this
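The mechanism described in this excerpt, a client-side program that builds its outgoing request from an attacker-controllable input, can be modelled in a few lines. The sketch below is only an illustration and is not taken from the JAW paper: real vulnerable code would be JavaScript running in the browser, whereas this models the decision logic in Python. The origin `https://app.example`, the path allowlist, and the function names `naive_target` and `checked_target` are all hypothetical, and the allowlist check is one possible hardening step, not a countermeasure the paper proposes.

```python
from typing import Optional
from urllib.parse import urljoin, urlsplit

# Hypothetical application origin and the API paths its script is meant to call.
APP_ORIGIN = "https://app.example"
ALLOWED_PATHS = {"/api/profile", "/api/settings"}


def naive_target(page_url: str) -> str:
    """Vulnerable pattern: the request target is copied from the URL fragment.

    Whoever crafts the link that the victim opens controls the fragment,
    and therefore controls which endpoint the page's own script requests.
    """
    fragment = urlsplit(page_url).fragment
    return urljoin(APP_ORIGIN + "/", fragment)


def checked_target(page_url: str) -> Optional[str]:
    """Assumed hardening: accept only same-origin targets on an allowlist."""
    target = urlsplit(naive_target(page_url))
    origin = f"{target.scheme}://{target.netloc}"
    if origin != APP_ORIGIN or target.path not in ALLOWED_PATHS:
        return None  # refuse to send the request
    return target.geturl()


if __name__ == "__main__":
    forged = "https://app.example/home#/api/delete_account?confirm=1"
    print(naive_target(forged))    # the script would request the forged endpoint
    print(checked_target(forged))  # the allowlist rejects it
```

Because the forged request is issued by the site's own script, the browser treats it as a legitimate same-site request, which is consistent with the excerpt's point that defences aimed at cross-site requests do not cover this case.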

3 Incorrect and 1 Correct Way to Make a Blog

3 incorrect and 1 correct way to make a blog Series "Development of static sites with Middleman": 3 incorrect and 1 correct way to make a blog; A fast, full-fledged blog on Middleman and AWS; Continuous integration, Jenkins and Middleman. AUTHOR: Kirill Shirinkin DevOps and infrastructure consultant, AWS expert, programmer, author of three books, mentor and founder of MKDev.me. I design and develop software products. I also write about this and teach people. My personal site, FODOJ.COM, where you can find everything I think about personal productivity, travel and various books, has existed for about 7 years. It was one of the first sites I created as a fifteen-year-old schoolboy. Of course, at that time the project looked quite different. I will leave its design and content behind the scenes. First, because otherwise I would have to blush (and not only for the design). Second, because I first want to talk about the technical side of the project, which has changed at least 4 times. Spoiler: if you still want me to blush for my earlier articles, you can look up FODOJ.com at http://web.archive.org/. Or google "fashionj.wordpress.com". V0: only static Initially, the site lived at Gornozavodsk.com. It was an impressive mishmash of JavaScript bells and whistles, half-dressed anime girls and lines of text that only a schoolboy learning web development could write. V1: Blogger.com and WordPress.com At some point I decided that I wanted to blog and started one on Blogger.com. Apparently, that service it seemed

2019 Website Threat Research Report

2019 Website Threat Research Report An analysis of the latest trends in malware and hacked websites detected (or remediated) by Sucuri. Our 2019 Threat Research Report is a deep dive into our logs, experiences, and collected analysis. It summarizes and identifies the latest tactics, techniques, and procedures seen by the Malware Research, Vulnerability Research and Threat Intelligence teams, and Remediation Groups at Sucuri/GoDaddy. Index: Editorial Commentary 3; Summary 4; Key Takeaways 5; Methodology 7; Software Distribution 8; Vulnerable Software & Components 9; Firewall Blocks & Attack Vectors 13; Malware Families 18; Top Cleanup Signatures 31; Incident Response & Threat Detection 35; Blacklist Analysis 36; Threat Forecast for 2020 40; Conclusion 41; Credits 44. © 2020 Sucuri Inc. All

Modx User Manual

MODX USER MANUAL www.inside-creative.com MODx Users Guide Last Updated: November 1, 2016 Contents: Getting Started 3; Important Terminology 3; Logging on to the Manager 4; To login to your site's Manager 4; To logout 4; To change your password 5; Introduction to the Manager 6; The Manager area is divided into 3 panels 6; The Site Tree 7; Shortcuts 7; Previewing on the Live Site 8; Creating a new Resource 9; To create a new resource 10; Editing Existing Resources 11; Publishing a Resource 12; Site Tree Views 12; Publish a New Resource 12; Rearranging the Site Structure 13; Rearranging the Order of Content within a Folder 13; Using the Content Editor 14; Pasting Text for Microsoft Word 17; Heading Styles 17; Edit CSS Styles 18; Inserting an Image 19; Usable Image Types 20; Inserting Links 21; Inserting a Link as Body Content in a Resource 21; Creating Links to PDF Documents 22; Creating a Web Link as a Content Item 22; Managing Files 23; Uploading Files and Images While Editing a Page 23; Uploading Files and Images With File Manager 23. Getting Started MODx is a Content Management System (CMS) which helps create, edit, manage, and publish information on a website. This guide refers to terminology used in MODx Revolution 2.5.0+. Important Terminology CMS: Content Management System. An application on a website used to create, edit, manage, search and publish data on that website. HTML: HyperText Markup Language. The basic language of all websites. CSS: Cascading Style Sheet. A separate file from the web page which designates the visual layout of a website.

TYPO3 Coding Guidelines - Doc Core Cgl TYPO3 Coding Guidelines

TYPO3 Coding Guidelines - doc_core_cgl TYPO3 Coding Guidelines Extension Key: doc_core_cgl Language: en Keywords: cgl, development, reference, forDevelopers, forAdvanced Copyright 2000-2010, TYPO3 Core Development Team, <[email protected]> This document is published under the Open Content License available from http://www.opencontent.org/opl.shtml The content of this document is related to TYPO3 - a GNU/GPL CMS/Framework available from www.typo3.org Table of Contents: TYPO3 Coding Guidelines 1; Introduction 3; About this document 3; Conventions used in this document 3; Contributing 3; Credits 3; File system conventions 4; TYPO3 directory structure 4; File structure 8; PHP syntax formatting 10; Using phpDoc 14; The ChangeLog file 14; Coding: best practices 16; Accessing the database 16; Singletons 16

The Development of Self-Supported Electromechanical Structures

University of Texas at El Paso DigitalCommons@UTEP Open Access Theses & Dissertations 2013-01-01 The Development of Self-Supported Electromechanical Structures Using Fused Deposition Modeling Jorge Ramos-Almeida University of Texas at El Paso, [email protected] Follow this and additional works at: https://digitalcommons.utep.edu/open_etd Part of the Mechanical Engineering Commons Recommended Citation Ramos-Almeida, Jorge, "The Development of Self-Supported Electromechanical Structures Using Fused Deposition Modeling" (2013). Open Access Theses & Dissertations. 1714. https://digitalcommons.utep.edu/open_etd/1714 This is brought to you for free and open access by DigitalCommons@UTEP. It has been accepted for inclusion in Open Access Theses & Dissertations by an authorized administrator of DigitalCommons@UTEP. For more information, please contact [email protected]. THE DEVELOPMENT OF SELF-SUPPORTED ELECTROMECHANICAL STRUCTURES USING FUSED DEPOSITION MODELING JORGE RAMOS-ALMEIDA Department of Mechanical Engineering APPROVED: Ryan B. Wicker, Ph.D., Chair Norman Love, Ph.D. Eric MacDonald, Ph.D. Benjamin C. Flores, Ph.D. Dean of the Graduate School Copyright © by Jorge Ramos-Almeida 2013 Dedication I would like to dedicate my thesis to my parents and sister, who have encouraged and supported me throughout my education. THE DEVELOPMENT OF SELF-SUPPORTED ELECTROMECHANICAL STRUCTURES USING FUSED DEPOSITION MODELING by JORGE RAMOS-ALMEIDA, B.S.M.E. THESIS Presented to the Faculty of the Graduate School of The University of Texas at El Paso in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE Department of Mechanical Engineering THE UNIVERSITY OF TEXAS AT EL PASO December 2013 Acknowledgements I would like to express my sincere gratitude to my advisor Dr.