On Quality Aware Adaptation of Internet Video

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ONE150 Multi-Service Access Router

• Integrated voice and data managed services • For 10 to 40 desk offices; 8 voice channels • Analogue, TDM and IP Voice • Fast Ethernet Switching • Fibre (Ethernet), VDSL, ADSL access • Industry leading price performance • Simple deployment, provisioning and management • Industry standard CLI

MANAGED VOICE AND DATA SERVICES FOR THE SMALLER OFFICE

Targeting the smaller enterprise and enterprise branch offices, the OneAccess ONE150 Multi-Service Access Router is a high performance, one box solution for unified voice and data managed services. The One150 integrates analogue voice, IP voice and enterprise-class data services over Fibre (Ethernet), VDSL and ADSL access networks. With Dial Tone Continuity®, sophisticated IP Quality of Service and flexible IP VPN as standard, the ONE150 offers a highly cost effective and customisable gateway to new managed service revenues. The ONE150 is scaled to provide 10 to 40 desks with analogue, legacy or IP voice, sophisticated data services, embedded security and high availability.

Up to 8 analogue handsets can connect via FSX interfaces. Traditional PBXs can connect via a maximum of 4 ISDN BRI interfaces. Provisioning is simple, and supported by an industry standard CLI.

ENTERPRISE-CLASS DATA SERVICES

IP PBXs, server rooms and local area networks are connected to each other and the wide area network via the built in 4 port 100Mbps Ethernet switch. The ONE150 can be optionally specified with WiFi, supporting 802.11b/g with n as a future option. The powerful ONE150 platform supports symmetrical, high speed Layer 3 switching at next generation broadband throughputs, with …

[Diagram labels: VDSL2, ADSL2+, Optical Ethernet 100 base-FX]

JDSU HST-3000C VDSL-CX/WB2 Specs Provided by HST-3000 Handheld Services Tester Infineon ADSL2+/VDSL2 SIM

COMMUNICATIONS TEST & MEASUREMENT SOLUTIONS. Specs provided by www.AAATesters.com.

Benefits • Saves money and reduces repeat faults with Triple-Play services testing that supports ADSL1, ADSL2, ADSL2+, VDSL2 (VDSL2 up to 30a profiles) with one module • Provides BPT, Hlog, and QLN graphing, simplifying isolation of bridged taps, noise, and pair balance problems • Emulates both modems (ATU-R/VTU-R) and DSLAMs (ATU-C/VTU-C) to test both directions of the span • Interoperates with the widest range of chipset manufacturers, such as Broadcom, Infineon, and Ikanos, reducing the costs of carrying multiple test modules • Enables data and services layer testing via PPPoE, PPPoA, IPoE, FTP throughput, web browser, VoIP, and IP Video, making it the right tool for Triple-Play testing • Choose an optional wideband copper pair module that tests up to 30 MHz for VDSL2

Qualifying a very high speed Digital Subscriber Line (VDSL) service that can transport high definition television (HDTV) and triple-play services requires more than a simple Go/No-Go tester. One lightweight, robust, battery-operated JDSU HST-3000 tester equipped with the Infineon Technologies ADSL/VDSL2 module offers more capability than any other handheld tester on the market. This tester gives both technicians and telco engineers the confidence and the necessary power to complete the job, and get it done right. With one tool, they can test and troubleshoot asymmetric DSL (ADSL)/VDSL2 circuits by emulating either the customer modem (ATU-R/VTU-R mode) or the DSL access multiplexer (DSLAM) (ATU-C/VTU-C mode).

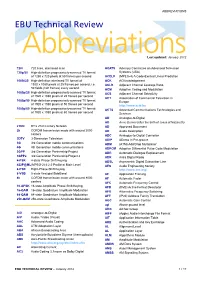

ABBREVIATIONS EBU Technical Review

Abbreviations. Last updated: January 2012

720i: 720 lines, interlaced scan; 720p/50: High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second; 1080i/25: High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second; 1080p/25: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second; 1080p/50: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second; 1080p/60: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second; 21CN: BT’s 21st Century Network; 2k: COFDM transmission mode with around 2000 carriers; 3DTV: 3-Dimension Television; 3G: 3rd Generation mobile communications; 4G: 4th Generation mobile communications; 3GPP: 3rd Generation Partnership Project; 3GPP2: 3rd Generation Partnership …

ACATS: Advisory Committee on Advanced Television Systems (USA); ACELP: (MPEG-4) A Code-Excited Linear Prediction; ACK: ACKnowledgement; ACLR: Adjacent Channel Leakage Ratio; ACM: Adaptive Coding and Modulation; ACS: Adjacent Channel Selectivity; ACT: Association of Commercial Television in Europe, http://www.acte.be; ACTS: Advanced Communications Technologies and Services; AD: Analogue-to-Digital; AD: Anno Domini (after the birth of Jesus of Nazareth); AD: Approved Document; AD: Audio Description; ADC: Analogue-to-Digital Converter; ADIP: ADress In Pre-groove; ADM: (ATM) Add/Drop Multiplexer; ADPCM: Adaptive Differential Pulse Code Modulation; ADR: Automatic Dialogue Replacement

Local-Loop and DSL REFERENCE GUIDE Table of Contents

Table of Contents: Prologue; 1. Introduction; 2. What is DSL?; 2.1 Pre-DSL Delivery of Data; 2.1.1 Dial-Up; 2.1.2 ISDN; 2.2 xDSL Overview; 2.3 DSL In-Depth; 2.3.1 ISDN; …; 2.3.9.3 REIN; 2.3.9.4 SHINE; 2.3.9.5 PEIN; 2.3.10 Bonding; 2.3.11 Vectoring; 2.3.12 G.Fast; 3. DSL Deployment Issues; 3.1 Determining the Nature of the Problem; 3.2 Performing a Visual Inspection; …

Accelerate High- Performance Real-Time Video & Imaging

Accelerate High-Performance Real-Time Video & Imaging Applications with FPGA & Programmable DSP. Copyright 2004. All rights reserved.

Agenda • Overview • FPGA/PDSP HW/SW Development System Platform: TI EVM642 + Xilinx XEVM642-2VP20 • FPGA for Algorithm Acceleration: H.264/AVC SD Video Encoder • Xilinx MPEG-4 Codec Reference Design • Xilinx SysGen Co-Sim Design and C/C++

Analysts See Explosive Growth in Digital Media Market. [Chart: Advanced Codec Unit Shipments (in millions), 2003 to 2008. Advanced codecs include MPEG-4, H.264 and WMV9. Source: In-Stat/MDR, 6/04]

Using FPGAs and DSPs Together for Video Processing. [Figure: codecs (H.264, MPEG4, H.263, MPEG2, JPEG) plotted against resolution (QCIF, CIF, D1, SD, HD) and number of channels, with "DSP only" and "DSP + FPGA" application regions]

Targeted Video Applications. Features (first target): 30fps CIF resolution encode & decode; integrated audio, video & streaming DSP controller; headroom available for feature enhancements & codec extensions. Features (second and third targets): real-time 30fps TV/VGA resolution encode & decode; integrated audio, video & streaming DSP controller; headroom available for High-Def feature enhancements & codec extensions.

DSPs and FPGAs: Complementary Solutions • FPGAs Suitable for Parallel Data-Path Bound Functions/Problems • SW/HW Co-Design Inner-Loop Rule: “Any C/C++ that requires tight inner-loop assembly codes probably should be in hardware” • FPGAs typically complement programmable DSPs in high-performance real-time systems in one or more of the following ways: system logic muxing and consolidation; new peripheral or bus interface implementation; performance acceleration in the signal processing chain

ES 202 667 V1.1.1 (2009-04) ETSI Standard

ETSI ES 202 667 V1.1.1 (2009-04), ETSI Standard. Speech and multimedia Transmission Quality (STQ); Audiovisual QoS for communication over IP networks

Reference: DES/STQ-00097. Keywords: multimedia, QoS

ETSI, 650 Route des Lucioles, F-06921 Sophia Antipolis Cedex, FRANCE. Tel.: +33 4 92 94 42 00 Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Non-profit association registered at the Sous-Préfecture de Grasse (06) N° 7803/88

Important notice: Individual copies of the present document can be downloaded from: http://www.etsi.org The present document may be made available in more than one electronic version or in print. In any case of existing or perceived difference in contents between such versions, the reference version is the Portable Document Format (PDF). In case of dispute, the reference shall be the printing on ETSI printers of the PDF version kept on a specific network drive within ETSI Secretariat. Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp If you find errors in the present document, please send your comment to one of the following services: http://portal.etsi.org/chaircor/ETSI_support.asp

Copyright Notification: No part may be reproduced except as authorized by written permission. The copyright and the foregoing restriction extend to reproduction in all media.

Vigor160 Series 35B/G.Fast Modem

• Support VDSL2 Vectoring • Support G.Fast (Vigor166)/35b/VDSL2/ADSL2+ • Multi-PVC for triple-play applications

The Vigor160 series is a compact VDSL2 modem/router for SOHO and residential use. It has an RJ-11 WAN interface with a built-in modem for VDSL or ADSL Internet services, reducing the need for an additional modem. The Vigor160 series supports all the VDSL2 profiles, including G.fast (Vigor166) and VDSL Super Vectoring, delivering speeds of up to 300 Mbps downstream and 100 Mbps upstream. The support of multiple-PVC/VLAN (up to 5) and two Gigabit Ethernet LAN ports make it a perfect solution for triple-play applications.

As a router, the Vigor160 series includes an SPI firewall to add a layer of security to your local network. The Vigor160 series allows custom port forwarding rules, offers Layer 3 packet filtering as well as HTTP content filtering by URL keyword and web features, and also provides DoS attack defense to protect the network against traffic flooding.

[Diagram: Triple play on DSL (Video, VoIP, Data)]

ADSL/VDSL: Compliant with ITU-T VDSL2 G.993.2; Compliant with ITU-T G.993.1, G.997.1; Capability Fall Back to ADSL2/2+ Standards; ANSI T1.413 Issue2; ITU-T G.992.1 G.dmt; ITU-T G.992.3 ADSL2 G.dmt.bis; ITU-T G.992.5 ADSL2+; VDSL Band Plan: 997, 998; VDSL2 Profile: 8a, 8b, 8c, 8d, 12a, 12b, 17a, 30a, 35b; VDSL2 G.Fast Profile: 106Mhz, 212Mhz

Network Feature: Router / Bridge Configurable; IGMP Proxy V2 / V3; DHCP Client/Relay/Server; Dynamic DNS; NTP Client; Static Route; DNS Cache / Proxy; RIP v1/v2; UPnP 100 Sessions

Visual Quality of Current Coding Technologies at High Definition

Visual Quality of Current Coding Technologies at High Definition IPTV Bitrates. Christian Keimel, Julian Habigt, Tim Habigt, Martin Rothbucher and Klaus Diepold. Institute for Data Processing, Technische Universität München, Arcisstr. 21, 80333 München, Germany. christian.keimel, jh, tim, martin.rothbucher, [email protected]

Abstract: High definition video over IP based networks (IPTV) has become a mainstay in today’s consumer environment. In most applications, encoders conforming to the H.264/AVC standard are used. But even within one standard, often a wide range of coding tools are available that can deliver a vastly different visual quality. Therefore we evaluate in this contribution different coding technologies, using different encoder settings of H.264/AVC, but also a completely different encoder like Dirac. We cover a wide range of different bitrates from ADSL to VDSL and different content, with low and high demand on the encoders. As PSNR is not well suited to describe the perceived visual quality, we conducted extensive subject tests to determine the visual quality. Our results show that for currently common bitrates, the visual quality can be more than doubled, if the same coding technology, but different coding tools are used.

Besides the target bitrate, the visual quality is also determined by the selection of different profiles and levels as described in Annex A of H.264/AVC. Moreover, the chosen coding tools in the encoder can have a significant influence on the visual quality with respect to a fixed bitrate. Hence, we consider two H.264/AVC settings in this contribution representing different levels of encoder complexity. Also, we take alternative coding technologies into account by including the wavelet based Dirac [2], [3] encoder into our evaluation. As the peak signal to noise ratio (PSNR) describes the visual quality only poorly [4], we decided to conduct extensive subjective testing to determine the visual quality of the different …

Monitoring ADSL2+ and VDSL2 Technologies

CHAPTER 30: Monitoring ADSL2+ and VDSL2 Technologies

This chapter discusses the following technology enhancements in Prime Network: • ADSL2+ • VDSL2 • Bonding Group

These topics describe how to use the Vision client to manage these technologies. If you cannot perform an operation that is described in these topics, you may not have sufficient permissions; see Permissions for Managing DSL2+ and VDSL2, page B-28. • Viewing the ADSL2+/VDSL2 Configuration Details, page 30-1 • Viewing the DSL Bonding Group Configuration Details, page 30-4

Viewing the ADSL2+/VDSL2 Configuration Details

Asymmetric digital subscriber line (ADSL) is a type of digital subscriber line (DSL) technology, a data communications technology that enables faster data transmission over copper telephone lines than a conventional voiceband modem can provide. It does this by utilizing frequencies that are not used by a voice telephone call. ADSL2+ extends the capability of basic ADSL by doubling the number of downstream channels. The data rates can be as high as 24 Mbit/s downstream and up to 1.4 Mbit/s upstream depending on the distance from the DSLAM to the customer's premises. It is capable of doubling the frequency band of typical ADSL connections from 1.1 MHz to 2.2 MHz. This doubles the downstream data rates of the previous ADSL2 standard (which was up to 12 Mbit/s), and like the previous standards will degrade from its peak bitrate after a certain distance.

Very-high-bit-rate digital subscriber line (VDSL or VHDSL) is a digital subscriber line (DSL) technology providing data transmission faster than ADSL over a single flat untwisted or twisted pair of copper wires (up to 52 Mbit/s downstream and 16 Mbit/s upstream), and on coaxial cable (up to 85 Mbit/s down- and upstream), using the frequency band from 25 kHz to 12 MHz.

G.Fast : Recent/Ongoing/Future Enhancements • G.Mgfast : Emerging New Project About ITU-T SG15 Q4

Overview of ITU-T SG15 Q4 xDSL and G.(mg)fast. Hubert Mariotte, ITU-T SG 15 Vice Chairman, [email protected]. Slides (Version May 2017) prepared by Frank Van der Putten, Nokia, [email protected], Rapporteur ITU-T Q4/SG15

Overview • About ITU-T SG15 Q4 • xDSL and G.(mg)fast access solutions • VDSL2: recent/ongoing enhancements • G.fast: recent/ongoing/future enhancements • G.mgfast: emerging new project

About ITU-T SG15 Q4 • SG15: Networks, Technologies and Infrastructures for Transport, Access and Home • Q4: Broadband access over metallic conductors • Covers all aspects of transceivers operating over metallic conductors in the access part of the network • Projects: xDSL, G.(mg)fast, testing, management • Main liaisons: ITU-R, ETSI and BBF • Meets face to face about 6 weeks per year

Overview Access Network Solutions. [Figure: access technologies between CO and CPE. ADSL2plus: long loops (<6000m), ≤25 Mbit/s. VDSL2: short loops (<2500m), ≤150 Mbit/s (17a), ≤400 Mbit/s (35b). G.fast: very short loops (<400m), TP or coax, ≤ 1..2 Gbit/s. G.mgfast: (<100m? TP or coax), ≤ 5..10 Gbit/s. Fiber and copper segments; no drilling, no digging.]

G.fast fills an access technology gap • Huge gap: 100 Mbit/s to multi Gbit/s • Fiber may not always be possible into the home/apartment • G.fast supports FTTdp and FTTB architectures

VDSL2 • What is in the Recommendations (G.993.2/5, G.998.4): aggregate data rates up to 150 Mbit/s (17a), 250 Mbit/s (30a), 400 Mbit/s (35b); operates over loops up to 2500m of 0.4mm copper; PHY layer retransmission and crosstalk cancellation (vectoring); down/up asymmetry ratio …

Fiber Network Topologies Connecting VDSL Networks

HELSINKI UNIVERSITY OF TECHNOLOGY, Department of Electrical and Communications Engineering, Laboratory of Telecommunications Technology

Fiber Network Topologies Connecting VDSL Networks. S-38.128 Special Assignment (2 cr). Written by: Harri Mäntylä, 36491N. Supervisor: Vesa Kosonen. Returned: 27.8.1999

Table of Contents: Abbreviations; 1. Introduction; 2. Digital Subscriber Line (DSL) technologies; 2.1 ISDN; 2.2 HDSL; 2.3 HDSL2; 2.4 ADSL; 2.5 ADSL Lite; 2.6 VDSL; 2.6.1 Standardization; …

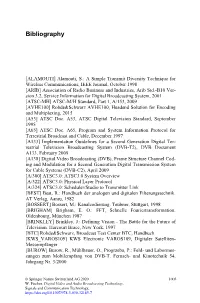

Bibliography

[ALAMOUTI] Alamouti, S.: A Simple Transmit Diversity Technique for Wireless Communications, IEEE Journal, October 1998
[ARIB] Association of Radio Business and Industries, Arib Std.-B10 Version 3.2, Service Information for Digital Broadcasting System, 2001
[ATSC-MH] ATSC-M/H Standard, Part 1, A/153, 2009
[AVHE100] Rohde&Schwarz AVHE100, Headend Solution for Encoding and Multiplexing, 2015
[A53] ATSC Doc. A53, ATSC Digital Television Standard, September 1995
[A65] ATSC Doc. A65, Program and System Information Protocol for Terrestrial Broadcast and Cable, December 1997
[A133] Implementation Guidelines for a Second Generation Digital Terrestrial Television Broadcasting System (DVB-T2), DVB Document A133, February 2009
[A138] Digital Video Broadcasting (DVB), Frame Structure Channel Coding and Modulation for a Second Generation Digital Transmission System for Cable Systems (DVB-C2), April 2009
[A/300] ATSC3.0: ATSC3.0 System Overview
[A/322] ATSC3.0: Physical Layer Protocol
[A/324] ATSC3.0: Scheduler/Studio to Transmitter Link
[BEST] Best, R.: Handbuch der analogen und digitalen Filterungstechnik. AT Verlag, Aarau, 1982
[BOSSERT] Bossert, M.: Kanalcodierung. Teubner, Stuttgart, 1998
[BRIGHAM] Brigham, E. O.: FFT, Schnelle Fouriertransformation. Oldenbourg, München 1987
[BRINKLEY] Brinkley, J.: Defining Vision - The Battle for the Future of Television. Harcourt Brace, New York, 1997
[BTC] Rohde&Schwarz, Broadcast Test Center BTC, Handbuch
[KWS_VAROS109] KWS Electronic VAROS109, Digitaler Satelliten-Messempfänger
[BUROW] Burow, R., Mühlbauer, O., Progrzeba, P.: Feld- und Labormessungen zum Mobilempfang von DVB-T. Fernseh- und Kinotechnik 54, Jahrgang Nr. 3/2000
[CHANG] Robert W.

© Springer Nature Switzerland AG 2020. W. Fischer, Digital Video and Audio Broadcasting Technology, Signals and Communication Technology, https://doi.org/10.1007/978-3-030-32185-7