Comparing the Influence of Ecology Journals Using Citation-Based Indices: Making Sense Of

Recommended publications

Where to Publish?

Where to publish? A Handbook of Scientific Journals for Contributors to Marine Conservation. Joshua Cinner and Anya Jaeckli, ARC Centre of Excellence for Coral Reef Studies, James Cook University, Townsville, Australia. Version: September 2018.

Foreword. Deciding where to publish can be important, even career changing. A paper in the right journal can make a career, sometimes even define a field, while the same type of research may fade to oblivion in the wrong venue. Knowing which journals are well suited to your research is an important part of being a scientist. For many scientists, there is a wide range of potential venues; so many, in fact, that we often forget what the available options are. Here, we compiled a guide to what we consider key journals in the interdisciplinary field of marine resource management. We excluded journals that focus on pure, rather than applied, ecology, geomorphology, or social science.

A lot goes into deciding where to publish. Popular metrics such as the impact factor are often used, but it is our feeling that this should be done with great caution. For example, a low impact factor does not mean that a journal is not highly influential. Here, we present a number of journal metrics, including impact factor, Scimago Journal Rank (SJR), H-index, and others (which we describe in detail below) for 63 journals that publish interdisciplinary papers on marine resource management. We also provide a description of the aims and scope of each journal (from the journal's website), and in a few cases, we provide some personal notes about the journal.
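The H-index named among the metrics above has a simple standard definition: the largest number h such that h papers each have at least h citations. The following is a minimal Python sketch of that definition only. The function name `h_index` and the citation counts are hypothetical, invented for illustration, and are not taken from the handbook or from any journal's data.

```python
def h_index(citations):
    """Return the largest h such that h items have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical per-paper citation counts, made up for this example.
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))  # prints 3
```

Because one heavily cited paper raises the value by at most one, the H-index behaves differently from averages such as the impact factor, which is one reason the two can rank journals differently.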

A Comparative Analysis of Ecology Literature Databases

A Comparative Analysis of Ecology Literature Databases. Barry N. Brown, Mansfield Library, The University of Montana, Missoula, MT 59812. April 2007.

Abstract: There is a bewildering array of databases currently available for literature searches. Major, traditional indexes to the primary literature of ecology include Biological Abstracts, Biological and Agricultural Index, CAB Direct, CSA Biological Sciences, Web of Science, Wildlife and Ecology Studies Worldwide, and Zoological Record. New indexes and search engines have recently appeared, notably Google Scholar, Scirus, and Scopus. Multidisciplinary, full-text, undergraduate-oriented databases such as Academic Search Premier from EBSCO and Academic Index ASAP from Gale are widely available and frequently used at academic libraries. All of these electronic databases were compared and ranked using quantitative criteria and search results. Database content was benchmarked against randomly selected bibliographies from articles in well-known ecology literature review journals. A list of the top 20 most important journals for ecology was compiled based on a citation analysis of the bibliographies; this analysis revealed that 84% of the literature cited was journal articles and 11% was books or book chapters. Criteria for evaluating databases included coverage of the most important ecology journals, freshness of indexing, completeness of indexing, inclusion of citations from the bibliographies examined, and size as indicated by keyword searching of title fields. Somewhat surprisingly, based on these analyses, the best databases overall for finding the primary literature of ecology are Web of Science, Scopus, Google Scholar, Academic Search Premier, and Scirus. An additional analysis of ecology books and book chapters showed OCLC's WorldCat to clearly have the most content.

UC Merced Frontiers of Biogeography

Title: Game of Tenure: the role of "hidden" citations on researchers' ranking in Ecology
Authors: Ana Benítez-López (1,2) and Luca Santini (1,3), corresponding authors
Journal: Frontiers of Biogeography, 12(1), e45195 (2020), the scientific journal of the International Biogeography Society. Research article, peer reviewed.
DOI: 10.21425/F5FBG45195
Permalink: https://escholarship.org/uc/item/8m99t8z2
Supplemental Material: https://escholarship.org/uc/item/8m99t8z2#supplemental
License: https://creativecommons.org/licenses/by/4.0/

Affiliations: (1) Department of Environmental Science, Institute for Wetland and Water Research, Radboud University, P.O. Box 9010, NL-6500 GL, Nijmegen, the Netherlands; (2) Integrative Ecology Group, Estación Biológica de Doñana (EBD-CSIC), Avda. Américo Vespucio 26, 41092, Sevilla, Spain; (3) National Research Council, Institute of Research on Terrestrial Ecosystems (CNR-IRET), Via Salaria km 29.300, 00015, Monterotondo (Rome), Italy.

Abstract: Field ecologists and macroecologists often compete for the same grants and academic positions, with the former producing primary data that the latter generally use for model parameterization. Primary data are usually cited only in the supplementary materials, thereby not counting formally as citations, creating a system where field ecologists are routinely under-acknowledged and [...]

Highlights:
• Primary data are often cited in the supplementary materials of papers relying on large quantities of secondary data, thus creating an imbalanced system for researchers that compete for the same grants or positions should they be assessed under the same bibliometric indicators.

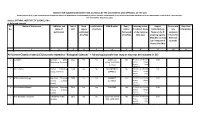

Science Citation Index (SCI) Journals Related to "Biological Sciences" + Following Journals That May Or May Not Be Included in SCI

FORMAT FOR SUBJECTWISE IDENTIFYING JOURNALS BY THE UNIVERSITIES AND APPROVAL OF THE UGC {Under Clause 6.05 (1) of the University Grants Commission (Minimum Qualifications for appointment of Teacher and Other Academic Staff in Universities and Colleges and Measures for the Maintenance of Standards in Higher Education) (4th Amendment), Regulations, 2016}

Subject: BOTANY, INSTITUTE OF SCIENCE, BHU

A. Refereed Journals

All Science Citation Index (SCI) journals related to "Biological Sciences" + following journals that may or may not be included in SCI

| Sl. No. (1) | Name of the Journal (2) | Publisher and place of publication (3) | Year of Start (4) | Hard copies published (Yes/No) (5) | e-publication (Yes/No) (6) | ISSN Number (7) | Peer / Referee Reviewed (Yes/No) (8) | Indexing status. If indexed, name of the indexing database (9) | Impact Factor / Rating. Name of the IF assigning agency. Whether covered by Thomson Reuters (Yes/No) (10) | Do you use any exclusion criteria for Research Journals (11) | Any other Information |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3 Biotech | Springer Berlin Heidelberg, Germany | 2011 | Yes | Yes | 2190-572X (Print), 2190-5738 (Online) | Yes | Thomson Reuters, Scopus, Google Scholar, Cross reference | 0.99 | | |
| 2 | AACL Bioflux | Bioflux Publishing House, Romania | 2009 | Yes | Yes | 18448143, 18449166 | Yes | Thomson Reuters, Scopus, Google Scholar, Cross reference | 0.5 | | |
| 3 | ACS Chemical biology | American Chemical Society (United States) | 2006 | Yes | Yes | 15548937, 15548929 | Yes | Thomson Reuters, Scopus, Google Scholar, Cross reference | 5.02 | | |
| 4 | ACS Synthetic Biology | American Chemical Society (United States) | 2012 | Yes | Yes | 21615063 | Yes | Thomson Reuters, Scopus, Google Scholar, Cross reference | 4.56 | | |
| 5 | Acta Biologica Hungarica | Akadémiai Kiadó, Hungary | 1873 | Yes | Yes | 0236-6495 (Online ISSN: 1588-2578) | Yes | Thomson Reuters, Scopus, Google Scholar, Cross reference | 0.97 | | |