Patch2self: Denoising Diffusion MRI with Self-Supervised Learning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Increased Power by Harmonizing Structural MRI Site Differences with the Combat Batch Adjustment Method in ENIGMA

UC Irvine UC Irvine Previously Published Works Title Increased power by harmonizing structural MRI site differences with the ComBat batch adjustment method in ENIGMA. Permalink https://escholarship.org/uc/item/2bc1b6zp Authors Radua, Joaquim Vieta, Eduard Shinohara, Russell et al. Publication Date 2020-09-01 DOI 10.1016/j.neuroimage.2020.116956 Peer reviewed eScholarship.org Powered by the California Digital Library University of California HHS Public Access Author manuscript Author ManuscriptAuthor Manuscript Author Neuroimage Manuscript Author . Author manuscript; Manuscript Author available in PMC 2020 September 29. Published in final edited form as: Neuroimage. 2020 September ; 218: 116956. doi:10.1016/j.neuroimage.2020.116956. Increased power by harmonizing structural MRI site differences with the ComBat batch adjustment method in ENIGMA A full list of authors and affiliations appears at the end of the article. Abstract A common limitation of neuroimaging studies is their small sample sizes. To overcome this hurdle, the Enhancing Neuro Imaging Genetics through Meta-Analysis (ENIGMA) Consortium combines neuroimaging data from many institutions worldwide. However, this introduces heterogeneity due to different scanning devices and sequences. ENIGMA projects commonly address this heterogeneity with random-effects meta-analysis or mixed-effects mega-analysis. Here we tested whether the batch adjustment method, ComBat, can further reduce site-related heterogeneity and thus increase statistical power. We conducted random-effects meta-analyses, mixed-effects mega-analyses and ComBat mega-analyses to compare cortical thickness, surface area and subcortical volumes between 2897 individuals with a diagnosis of schizophrenia and 3141 healthy controls from 33 sites. Specifically, we compared the imaging data between individuals with schizophrenia and healthy controls, covarying for age and sex. -

Weighted MRI As an Unenhanced Breast Cancer Screening Tool

BREAST IMAGING The potential of Diffusion- Weighted MRI as an unenhanced breast cancer screening tool by Dr. N Amornsiripanitch and Dr. SC Partridge This article provides an overview of the potential publication [8]. Summary of evidence role of diffusion-weighted magnetic resonance to date of DW-MRI in cancer detection, imaging (DW-MRI) as a breast scancer screening optimal approaches, and future consid- tool independent of dynamic contrast-enhance- erations are presented. ment. The article aims to summarize evidence to CURRENT EVIDENCE FOR DW-MRI IN date of DW-MRI in cancer detection and present BREAST CANCER The following equation describes optimal approaches and future considerations. DW-MRI signal intensity in rela- tion to water mobility within a voxel: Due to well-documented limita- Considering the constraints of SD=S0e-b*ADC, where SD is defined tions of mammography in the settings contrast-enhanced breast MRI, there as diffusion weighted signal intensity, of women with dense breasts and high- is great clinical value in identifying an S0 the signal intensity without diffusion risk women [1], there has been great unenhanced MRI modality. Diffusion- weighting, b or ‘b-value’ the diffusion interest in identifying imaging tech- weighted (DW) MRI is a technique sensitization factor, which is dependent niques to supplement mammography that does not require external contrast on applied gradient’s strength and tim- in breast cancer screening. Dynamic administration—instead, image con- ing (s/mm2), and the apparent diffusion contrast-enhanced (DCE) MRI is trast is generated from endogenous coefficient (ADC) the rate of diffusion endorsed by multinational organiza- water movement, reflecting multiple or average area occupied by a water tions as a supplemental screening tool tissue factors such as cellular mem- molecule per unit time (mm2/s) [9]. -

Brain Imaging Technologies

Updated July 2019 By Carolyn H. Asbury, Ph.D., Dana Foundation Senior Consultant, and John A. Detre, M.D., Professor of Neurology and Radiology, University of Pennsylvania With appreciation to Ulrich von Andrian, M.D., Ph.D., and Michael L. Dustin, Ph.D., for their expert guidance on cellular and molecular imaging in the initial version; to Dana Grantee Investigators for their contributions to this update, and to Celina Sooksatan for monograph preparation. Cover image by Tamily Weissman; Livet et al., Nature 2017 . Table of Contents Section I: Introduction to Clinical and Research Uses..............................................................................................1 • Imaging’s Evolution Using Early Structural Imaging Techniques: X-ray, Angiography, Computer Assisted Tomography and Ultrasound..............................................2 • Magnetic Resonance Imaging.............................................................................................................4 • Physiological and Molecular Imaging: Positron Emission Tomography and Single Photon Emission Computed Tomography...................6 • Functional MRI.....................................................................................................................................7 • Resting-State Functional Connectivity MRI.........................................................................................8 • Arterial Spin Labeled Perfusion MRI...................................................................................................8 -

Diffusion and Perfusion-Weighted Imaging in the Structural and Functional Characterization of Diffuse Gliomas for Differential Diagnosis and Treatment Planning

Diffusion and perfusion-weighted imaging in the structural and functional characterization of diffuse gliomas for differential diagnosis and treatment planning Anna Latysheva Doctoral Thesis Faculty of Medicine, University of Oslo 2020 Department of Radiology and Nuclear Medicine, Oslo University Hospital - Rikshospitalet Oslo, Norway © Anna Latysheva, 2020 Series of dissertations submitted to the Faculty of Medicine, University of Oslo ISBN 978-82-8377-727-7 All rights reserved. No part of this publication may be reproduced or transmitted, in any form or by any means, without permission. Cover: Hanne Baadsgaard Utigard. Print production: Reprosentralen, University of Oslo. Fields of knowledge exist and advance because we find beauty and joy within them. Thomas P. Naidich The idea must be very high, much higher than the ways to realize it. Fyodor M. Dostoevsky 1 TABLE OF CONTENTS ACKNOWLEDGEMENTS .................................................................................................................4 ABBREVIATIONS .............................................................................................................................6 SUMMARY IN ENGLISH...................................................................................................................8 SAMMENDRAG PÅ NORSK...........................................................................................................10 LIST OF PUBLICATIONS ...............................................................................................................12 -

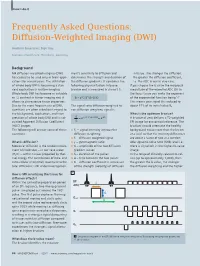

Diffusion-Weighted Imaging (DWI)

ClinicalHow-I-do-it Cardiovascular Frequently Asked Questions: Diffusion-Weighted Imaging (DWI) Joachim Graessner, Dipl. Ing. Siemens Healthcare, Hamburg, Germany Background MR diffusion-weighted imaging (DWI) ment's sensitivity to diffusion and in tissue. The stronger the diffusion, has ceased to be used only in brain appli- determines the strength and duration of the greater the diffusion coefficient, cations for several years. The utilization the diffusion gradients. It combines the i.e. the ADC in our in vivo case. of whole body DWI is becoming a stan- following physical factors into one If you choose the b-value the reciprocal dard application in routine imaging. b-value and is measured in s/mm² [1]. magnitude of the expected ADC (D) in Whole body DWI has become as valuable the focus tissue you make the exponent as T2 contrast in tumor imaging and it b = γ2G2δ2(Δ–δ/3) of the exponential function being ‘-1’. allows to characterize tissue properties. This means your signal S is reduced to Due to the more frequent use of DWI, The signal ratio diffusion-weighted to about 37% of its initial value S0. questions are often asked with regard to non diffusion-weighted signal is: the background, application, and inter- What is the optimum b-value? S 2 2 2 pretation of whole body DWI and its cal- = e-γ G δ (Δ-δ/3)D = e-bD A b-value of zero delivers a T2-weighted culated Apparent Diffusion Coefficient S0 EPI image for anatomical reference. The (ADC) images. b-values should attenuate the healthy ■ The following will answer some of these S0 – signal intensity without the background tissue more than the lesion questions. -

Estimation of Spatiotemporal Isotropic and Anisotropic Myocardial Stiffness Using

Estimation of Spatiotemporal Isotropic and Anisotropic Myocardial Stiffness using Magnetic Resonance Elastography: A Study in Heart Failure DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Ria Mazumder, M.S. Graduate Program in Electrical and Computer Engineering The Ohio State University 2016 Dissertation Committee: Dr. Bradley Dean Clymer, Advisor Dr. Arunark Kolipaka, Co-Advisor Dr. Patrick Roblin Dr. Richard D. White © Copyright by Ria Mazumder 2016 Abstract Heart failure (HF), a complex clinical syndrome that is characterized by abnormal cardiac structure and function; and has been identified as the new epidemic of the 21st century [1]. Based on the left ventricular (LV) ejection fraction (EF), HF can be classified into two broad categories: HF with reduced EF (HFrEF) and HF with preserved EF (HFpEF). Both HFrEF and HFpEF are associated with alteration in myocardial stiffness (MS), and there is an extensively rich literature to support this relation. However, t0 date, MS is not widely used in the clinics for the diagnosis of HF precisely because of the absence of a clinically efficient tool to estimate MS. Current clinical techniques used to measure MS are invasive in nature, provide global stiffness measurements and cannot assess the true intrinsic properties of the myocardium. Therefore, there is a need to non-invasively quantify MS for accurate diagnosis and prognosis of HF. In recent years, a non-invasive technique known as cardiac magnetic resonance elastography (cMRE) has been developed to estimate MS. However, most of the reported studies using cMRE have been performed on phantoms, animals and healthy volunteers and minimal literature recognizing the importance of cMRE in diagnosing disease conditions, especially with respect to HF is available. -

Diffusion-Weighted MR Imaging of the Brain ⅐ 333 DW MR Imaging Characteristics of Various Disease Entities

State of the Art Pamela W. Schaefer, MD Diffusion-weighted MR P. Ellen Grant, MD 1 R. Gilberto Gonzalez,MD, Imaging of the Brain PhD Diffusion-weighted magnetic resonance (MR) imaging provides image contrast that Index terms: is different from that provided by conventional MR techniques. It is particularly Brain, diffusion sensitive for detection of acute ischemic stroke and differentiation of acute stroke Brain, infarction, 10.781 from other processes that manifest with sudden neurologic deficits. Diffusion- Brain, infection, 10.20 Brain, injuries, 10.41, 10.42 weighted MR imaging also provides adjunctive information for other cerebral dis- Brain, ischemia, 10.781 eases including neoplasms, intracranial infections, traumatic brain injury, and de- Brain, MR, 10.12141, 10.12144 myelinating processes. Because stroke is common and in the differential diagnosis of Brain neoplasms, 10.31, 10.32 most acute neurologic events, diffusion-weighted MR imaging should be considered Sclerosis, multiple, 10.871 an essential sequence, and its use in most brain MR studies is recommended. State of the Art Radiology 2000; 217:331–345 Abbreviations: ADC ϭ apparent diffusion coefficient CSF ϭ cerebrospinal fluid Diffusion-weighted (DW) magnetic resonance (MR) imaging provides potentially unique DW ϭ diffusion weighted information on the viability of brain tissue. It provides image contrast that is dependent on the molecular motion of water, which may be substantially altered by disease. The 1 From the Neuroradiology Division, method was introduced into clinical practice in the middle 1990s, but because of its Massachusetts General Hospital, GRB demanding MR engineering requirements—primarily high-performance magnetic field 285, Fruit St, Boston, MA 02114-2696. -

Technical Advancements and Protocol Optimization of Diffusion-Weighted Imaging (DWI) in Liver

Technical advancements and protocol optimization of diffusion-weighted imaging (DWI) in liver Ping Ni, Yuning Lin, Qun Zhong, Ziqian Chen, Kumar Sandrasegaran, Chen Lin Abstract An area of rapid advancement in abdominal MRI is diffusion-weighted imaging (DWI). By measuring diffusion properties of water molecules, DWI is capable of non-invasively probing tissue properties and physiology at cellular and macromolecular level. The integration of DWI as part of abdominal MRI exam allows better lesion characterization and therefore more accurate initial diagnosis and treatment monitoring. One of the most technical challenging, but also most useful abdominal DWI applications is in liver and therefore requires special attention and careful optimization. In this article, the latest technical developments of DWI and its liver applications are reviewed with the explanations of the technical principles, recommendations of the imaging parameters, and examples of clinical applications. More advanced DWI techniques, including Intra-Voxel Incoherent Motion (IVIM) diffusion imaging, anomalous diffusion imaging, and Diffusion Kurtosis Imaging (DKI) are discussed. Keywords Liver diffusion weighted imaging, Intra-voxel incoherent motion diffusion, Anomalous diffusion, Diffusion kurtosis Introduction Diffusion-weighted imaging (DWI) is a noninvasive imaging technique sensitive to the mobility of water molecule at cellular and macromolecular level, much smaller than the scale of the spatial resolution or voxel size of the images [1, 2]. At such level, the motion of water is strongly influenced by the inter-cellular and intra-cellular environment which changes with tissue type and pathology. For example, for tissue or tumor with high cellularity, the movement of water molecule is more restricted because of narrower inter-cellular space. -

Diffusion Magnetic Resonance Imaging: Its Principle and Applications

102 THE ANATOMICAL RECORD (NEW ANAT.) 257:102–109, 1999 FEATURE ARTICLE Diffusion Magnetic Resonance Imaging: Its Principle and Applications SUSUMU MORI* AND PETER B. BARKER Diffusion magnetic resonance imaging (MRI) is one of the most rapidly evolving techniques in the MRI field. This method exploits the random diffusional motion of water molecules, which has intriguing properties depending on the physiological and anatomical environment of the organisms studied. We explain the principles of this emerging technique and subsequently introduce some of its present applications to neuroimaging, namely detection of ischemic stroke and reconstruction of axonal bundles and myelin fibers. Anat Rec (New Anat) 257:102–109, 1999. 1999 Wiley-Liss, Inc. KEY WORDS: brain imaging; magnetic resonance imaging; diffusion MRI; diffusion tensor imaging; stroke; fiber reconstruction It is truly amazing to realize that more in its acute phase.14 Around the same ous types of MRI techniques have been than two decades after the invention time, scientists had also noticed that designed to use the difference in such of magnetic resonance imaging there is a peculiar property of water MRI properties of water in different (MRI),8 this technology is still evolv- diffusion in highly ordered organs such tissues to differentiate regions of inter- ing with considerable speed. The tech- as brains.13,11,5,6,19 In these organs, wa- est. nique of diffusion-weighted imaging ter does not diffuse equally in all direc- In order to explain the concept of (DWI) is one of the most recent prod- tions, a property called anisotropic the conventional MRI, we use the anal- ucts of this evolution. -

PET/MRI for Neurologic Applications

Journal of Nuclear Medicine, published on November 9, 2012 as doi:10.2967/jnumed.112.105346 CONTINUING EDUCATION PET/MRI for Neurologic Applications Ciprian Catana1, Alexander Drzezga2, Wolf-Dieter Heiss3, and Bruce R. Rosen1 1Athinoula A. Martinos Center for Biomedical Imaging, Department of Radiology, Massachusetts General Hospital and Harvard Medical School, Charlestown, Massachusetts; 2Nuklearmedizinische Klinik und Poliklinik der Technischen UniversitätMünchen, Klinikum rechts der Isar, Munich, Germany; and 3Max Planck Institute for Neurological Research, Cologne, Germany Learning Objectives: On successful completion of this activity, participants should be able to (1) understand the methodologic opportunities created by simultaneous PET/MRI, (2) explain how this novel imaging modality may benefit existing neurologic applications, and (3) design novel investigations for using simultaneous PET/MRI to address unmet scientific and medical needs. Financial Disclosure: Dr. Catana has received honoraria from Siemens Healthcare for lectures. The authors of this article have indicated no other relevant relationships that could be perceived as a real or apparent conflict of interest. CME Credit: SNMMI is accredited by the Accreditation Council for Continuing Medical Education (ACCME) to sponsor continuing education for physicians. SNMMI designates each JNM continuing education article for a maximum of 2.0 AMA PRA Category 1 Credits. Physicians should claim only credit commensurate with the extent of their participation in the activity. For CE credit, participants can access this activity through the SNMMI Web site (http:// www.snmmi.org/ce_online) through December 2015. sumption for some studies, this may not be the case more PET and MRI provide complementary information in the study generally. For example, a subject’s mental state may change of the human brain. -

Advanced Analysis of Diffusion MRI Data

Linköping Studies in Science and Technology Dissertation No. 2017 Advanced analysis of diffusion MRI data Xuan Gu Linköping Studies in Science and Technology Dissertaons, No. 2017 Advanced analysis of diffusion MRI data Xuan Gu Linköping University Department of Biomedical Engineering Division of Biomedical Engineering SE-581 83 Linköping, Sweden Linköping 2019 Edition 1:1 © Xuan Gu, 2019 ISBN 978-91-7519-003-7 ISSN 0345-7524 URL http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-161288 Published articles have been reprinted with permission from the respective copyright holder. Typeset using XƎTEX Printed by LiU-Tryck, Linköping 2019 ii POPULÄRVETENSKAPLIG SAMMANFATTNING Diffusions-viktad magnetresonansavbildning (dMRI) är en icke-invasiv metod för att stude- ra hur vattenmolekyler rör sig på grund av diffusion. För att uppnå detta används speciella sekvenser i en magnetkamera, som sätter fart på molekylerna med hjälp av starka magnet- fält. dMRI kan användas som ett verktyg för att studera hjärnkonnektivitet, det vill säga hur olika delar av hjärnan är ihopkopplade via nervfibrer. Denna avhandling presenterar nya metoder för att analysera data från dMRI experiment. En första utmaning är att dMRI data innehåller flera typer av artefakter. Geometriska distortioner skapas via skillnader i hur olika vävnader reagerar på starka magnetfält. Det är även vanligt att försökspersonen rör på huvudet under experimentet. Efter att data har korrigerats för distortioner skattas en diffusions-modell för varje område i hjärnan. Denna modell säger hur stark diffusionen är och om den är starkare i en riktning jämfört med andra riktningar, vilket kan representeras med en tensor. Från tensorn kan man sen räkna ut olika skalära mått, som medeldiffusionen i alla riktningar och hur riktad diffusionen är. -

Diffusion Tensor Imaging 5 Inga Katharina Koerte and Marc Muehlmann

Diffusion Tensor Imaging 5 Inga Katharina Koerte and Marc Muehlmann Abbreviations variety of scientific and clinical settings, espe- cially, but not limited to, the investigation of AD Axial diffusivity brain pathology in schizophrenia (Shenton et al. ADC Apparent diffusion coefficient 2001; Qiu et al. 2009, 2010), Alzheimer’s dis- ADHD Attention deficit hyperactivity disorder ease (Damoiseaux et al. 2009; Mielke et al. BET Brain extraction tool 2009; Avants et al. 2010; Gold et al. 2010; CSF Cerebrospinal fluid Jahng et al. 2011), and autism (Pugliese et al. DKI Diffusion kurtosis imaging 2009; Cheng et al. 2010; Fletcher et al. 2010). DTI Diffusion tensor imaging This chapter describes the basics of the tech- DWI Diffusion-weighted imaging nique, outlines resources needed for acquisi- FA Fractional anisotropy tion, and focuses on post-processing techniques MD Trace, mean diffusivity and statistical analyses with and without a pri- MRI Magnetic resonance imaging ori hypotheses. RD Radial diffusivity ROI Region of interest TBSS Tract-based spatial statistics 5.2 Basic Principles of Diffusion 5.2.1 Brownian Motion 5.1 Introduction Water molecules are constantly moving due to Diffusion tensor imaging (DTI) is an advanced their thermal energy at temperatures above zero. magnetic resonance imaging (MRI) technique In 1827, Robert Brown described the phenomena that provides detailed information about tissue of particles suspended in fluid that move microstructure such as fiber orientation, axonal randomly (Brown 1827). Starting from the same density, and degree of myelination. DTI is position, the movement paths of single water based on the measurement of the diffusion of molecules are unpredictable and end up in water molecules.