Introduction to Compilers and Language Design

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

COM 113 INTRO to COMPUTER PROGRAMMING Theory Book

1 [Type the document title] UNESCO -NIGERIA TECHNICAL & VOCATIONAL EDUCATION REVITALISATION PROJECT -PHASE II NATIONAL DIPLOMA IN COMPUTER TECHNOLOGY Computer Programming COURSE CODE: COM113 YEAR I - SE MESTER I THEORY Version 1: December 2008 2 [Type the document title] Table of Contents WEEK 1 Concept of programming ................................................................................................................ 6 Features of a good computer program ............................................................................................ 7 System Development Cycle ............................................................................................................ 9 WEEK 2 Concept of Algorithm ................................................................................................................... 11 Features of an Algorithm .............................................................................................................. 11 Methods of Representing Algorithm ............................................................................................ 11 Pseudo code .................................................................................................................................. 12 WEEK 3 English-like form .......................................................................................................................... 15 Flowchart ..................................................................................................................................... -

Layout-Sensitive Generalized Parsing

Layout-sensitive Generalized Parsing Sebastian Erdweg, Tillmann Rendel, Christian K¨astner,and Klaus Ostermann University of Marburg, Germany Abstract. The theory of context-free languages is well-understood and context-free parsers can be used as off-the-shelf tools in practice. In par- ticular, to use a context-free parser framework, a user does not need to understand its internals but can specify a language declaratively as a grammar. However, many languages in practice are not context-free. One particularly important class of such languages is layout-sensitive languages, in which the structure of code depends on indentation and whitespace. For example, Python, Haskell, F#, and Markdown use in- dentation instead of curly braces to determine the block structure of code. Their parsers (and lexers) are not declaratively specified but hand-tuned to account for layout-sensitivity. To support declarative specifications of layout-sensitive languages, we propose a parsing framework in which a user can annotate layout in a grammar. Annotations take the form of constraints on the relative posi- tioning of tokens in the parsed subtrees. For example, a user can declare that a block consists of statements that all start on the same column. We have integrated layout constraints into SDF and implemented a layout- sensitive generalized parser as an extension of generalized LR parsing. We evaluate the correctness and performance of our parser by parsing 33 290 open-source Haskell files. Layout-sensitive generalized parsing is easy to use, and its performance overhead compared to layout-insensitive parsing is small enough for practical application. 1 Introduction Most computer languages prescribe a textual syntax. -

Memory Layout and Access Chapter Four

Memory Layout and Access Chapter Four Chapter One discussed the basic format for data in memory. Chapter Three covered how a computer system physically organizes that data. This chapter discusses how the 80x86 CPUs access data in memory. 4.0 Chapter Overview This chapter forms an important bridge between sections one and two (Machine Organization and Basic Assembly Language, respectively). From the point of view of machine organization, this chapter discusses memory addressing, memory organization, CPU addressing modes, and data representation in memory. From the assembly language programming point of view, this chapter discusses the 80x86 register sets, the 80x86 mem- ory addressing modes, and composite data types. This is a pivotal chapter. If you do not understand the material in this chapter, you will have difficulty understanding the chap- ters that follow. Therefore, you should study this chapter carefully before proceeding. This chapter begins by discussing the registers on the 80x86 processors. These proces- sors provide a set of general purpose registers, segment registers, and some special pur- pose registers. Certain members of the family provide additional registers, although typical application do not use them. After presenting the registers, this chapter describes memory organization and seg- mentation on the 80x86. Segmentation is a difficult concept to many beginning 80x86 assembly language programmers. Indeed, this text tends to avoid using segmented addressing throughout the introductory chapters. Nevertheless, segmentation is a power- ful concept that you must become comfortable with if you intend to write non-trivial 80x86 programs. 80x86 memory addressing modes are, perhaps, the most important topic in this chap- ter. -

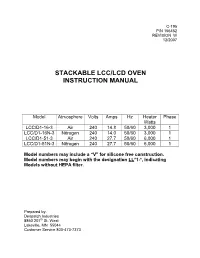

Stackable Lcc/Lcd Oven Instruction Manual

C-195 P/N 156452 REVISION W 12/2007 STACKABLE LCC/LCD OVEN INSTRUCTION MANUAL Model Atmosphere Volts Amps Hz Heater Phase Watts LCC/D1-16-3 Air 240 14.8 50/60 3,000 1 LCC/D1-16N-3 Nitrogen 240 14.0 50/60 3,000 1 LCC/D1-51-3 Air 240 27.7 50/60 6,000 1 LCC/D1-51N-3 Nitrogen 240 27.7 50/60 6,000 1 Model numbers may include a “V” for silicone free construction. Model numbers may begin with the designation LL *1-*, indicating Models without HEPA filter. Prepared by: Despatch Industries 8860 207 th St. West Lakeville, MN 55044 Customer Service 800-473-7373 NOTICE Users of this equipment must comply with operating procedures and training of operation personnel as required by the Occupational Safety and Health Act (OSHA) of 1970, Section 6 and relevant safety standards, as well as other safety rules and regulations of state and local governments. Refer to the relevant safety standards in OSHA and National Fire Protection Association (NFPA), section 86 of 1990. CAUTION Setup and maintenance of the equipment should be performed by qualified personnel who are experienced in handling all facets of this type of system. Improper setup and operation of this equipment could cause an explosion that may result in equipment damage, personal injury or possible death. Dear Customer, Thank you for choosing Despatch Industries. We appreciate the opportunity to work with you and to meet your heat processing needs. We believe that you have selected the finest equipment available in the heat processing industry. -

Introduction to Computer Systems 15-213/18-243, Spring 2009 1St

M4-L3: Executables CSE351, Winter 2021 Executables CSE 351 Winter 2021 Instructor: Teaching Assistants: Mark Wyse Kyrie Dowling Catherine Guevara Ian Hsiao Jim Limprasert Armin Magness Allie Pfleger Cosmo Wang Ronald Widjaja http://xkcd.com/1790/ M4-L3: Executables CSE351, Winter 2021 Administrivia ❖ Lab 2 due Monday (2/8) ❖ hw12 due Friday ❖ hw13 due next Wednesday (2/10) ▪ Based on the next two lectures, longer than normal ❖ Remember: HW and readings due before lecture, at 11am PST on due date 2 M4-L3: Executables CSE351, Winter 2021 Roadmap C: Java: Memory & data car *c = malloc(sizeof(car)); Car c = new Car(); Integers & floats c->miles = 100; c.setMiles(100); x86 assembly c->gals = 17; c.setGals(17); Procedures & stacks float mpg = get_mpg(c); float mpg = Executables free(c); c.getMPG(); Arrays & structs Memory & caches Assembly get_mpg: Processes pushq %rbp language: movq %rsp, %rbp Virtual memory ... Memory allocation popq %rbp Java vs. C ret OS: Machine 0111010000011000 100011010000010000000010 code: 1000100111000010 110000011111101000011111 Computer system: 3 M4-L3: Executables CSE351, Winter 2021 Reading Review ❖ Terminology: ▪ CALL: compiler, assembler, linker, loader ▪ Object file: symbol table, relocation table ▪ Disassembly ▪ Multidimensional arrays, row-major ordering ▪ Multilevel arrays ❖ Questions from the Reading? ▪ also post to Ed post! 4 M4-L3: Executables CSE351, Winter 2021 Building an Executable from a C File ❖ Code in files p1.c p2.c ❖ Compile with command: gcc -Og p1.c p2.c -o p ▪ Put resulting machine code in -

CERES Software Bulletin 95-12

CERES Software Bulletin 95-12 Fortran 90 Linking Experiences, September 5, 1995 1.0 Purpose: To disseminate experience gained in the process of linking Fortran 90 software with library routines compiled under Fortran 77 compiler. 2.0 Originator: Lyle Ziegelmiller ([email protected]) 3.0 Description: One of the summer students, Julia Barsie, was working with a plot program which was written in f77. This program called routines from a graphics package known as NCAR, which is also written in f77. Everything was fine. The plot program was converted to f90, and a new version of the NCAR graphical package was released, which was written in f77. A problem arose when trying to link the new f90 version of the plot program with the new f77 release of NCAR; many undefined references were reported by the linker. This bulletin is intended to convey what was learned in the effort to accomplish this linking. The first step I took was to issue the "-dryrun" directive to the f77 compiler when using it to compile the original f77 plot program and the original NCAR graphics library. "- dryrun" causes the linker to produce an output detailing all the various libraries that it links with. Note that these libaries are in addition to the libaries you would select on the command line. For example, you might compile a program with erbelib, but the linker would have to link with librarie(s) that contain the definitions of sine or cosine. Anyway, it was my hypothesis that if everything compiled and linked with f77, then all the libraries must be contained in the output from the f77's "-dryrun" command. -

The Lcc 4.X Code-Generation Interface

The lcc 4.x Code-Generation Interface Christopher W. Fraser and David R. Hanson Microsoft Research [email protected] [email protected] July 2001 Technical Report MSR-TR-2001-64 Abstract Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler: Design and Implementation. This report details the lcc 4.x code- generation interface, which defines the interaction between the target- independent front end and the target-dependent back ends. This interface differs from the interface described in Chap. 5 of A Retargetable C Com- piler. Additional infomation about lcc is available at http://www.cs.princ- eton.edu/software/lcc/. Microsoft Research Microsoft Corporation One Microsoft Way Redmond, WA 98052 http://www.research.microsoft.com/ The lcc 4.x Code-Generation Interface 1. Introduction Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler [1]. Version 4.x is the current release of lcc, and it uses a different code-generation interface than the inter- face described in Chap. 5 of Reference 1. This report details the 4.x interface. Lcc distributions are available at http://www.cs.princeton.edu/software/lcc/ along with installation instruc- tions [2]. The code generation interface specifies the interaction between lcc’s target-independent front end and target-dependent back ends. The interface consists of a few shared data structures, a 33-operator language, which encodes the executable code from a source program in directed acyclic graphs, or dags, and 18 functions, that manipulate or modify dags and other shared data structures. On most targets, implementations of many of these functions are very simple. -

About ILE C/C++ Compiler Reference

IBM i 7.3 Programming IBM Rational Development Studio for i ILE C/C++ Compiler Reference IBM SC09-4816-07 Note Before using this information and the product it supports, read the information in “Notices” on page 121. This edition applies to IBM® Rational® Development Studio for i (product number 5770-WDS) and to all subsequent releases and modifications until otherwise indicated in new editions. This version does not run on all reduced instruction set computer (RISC) models nor does it run on CISC models. This document may contain references to Licensed Internal Code. Licensed Internal Code is Machine Code and is licensed to you under the terms of the IBM License Agreement for Machine Code. © Copyright International Business Machines Corporation 1993, 2015. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents ILE C/C++ Compiler Reference............................................................................... 1 What is new for IBM i 7.3.............................................................................................................................3 PDF file for ILE C/C++ Compiler Reference.................................................................................................5 About ILE C/C++ Compiler Reference......................................................................................................... 7 Prerequisite and Related Information.................................................................................................. -

Compiler Error Messages Considered Unhelpful: the Landscape of Text-Based Programming Error Message Research

Working Group Report ITiCSE-WGR ’19, July 15–17, 2019, Aberdeen, Scotland Uk Compiler Error Messages Considered Unhelpful: The Landscape of Text-Based Programming Error Message Research Brett A. Becker∗ Paul Denny∗ Raymond Pettit∗ University College Dublin University of Auckland University of Virginia Dublin, Ireland Auckland, New Zealand Charlottesville, Virginia, USA [email protected] [email protected] [email protected] Durell Bouchard Dennis J. Bouvier Brian Harrington Roanoke College Southern Illinois University Edwardsville University of Toronto Scarborough Roanoke, Virgina, USA Edwardsville, Illinois, USA Scarborough, Ontario, Canada [email protected] [email protected] [email protected] Amir Kamil Amey Karkare Chris McDonald University of Michigan Indian Institute of Technology Kanpur University of Western Australia Ann Arbor, Michigan, USA Kanpur, India Perth, Australia [email protected] [email protected] [email protected] Peter-Michael Osera Janice L. Pearce James Prather Grinnell College Berea College Abilene Christian University Grinnell, Iowa, USA Berea, Kentucky, USA Abilene, Texas, USA [email protected] [email protected] [email protected] ABSTRACT of evidence supporting each one (historical, anecdotal, and empiri- Diagnostic messages generated by compilers and interpreters such cal). This work can serve as a starting point for those who wish to as syntax error messages have been researched for over half of a conduct research on compiler error messages, runtime errors, and century. Unfortunately, these messages which include error, warn- warnings. We also make the bibtex file of our 300+ reference corpus ing, and run-time messages, present substantial difficulty and could publicly available. -

Unix/Linux Command Reference

Unix/Linux Command Reference .com File Commands System Info ls – directory listing date – show the current date and time ls -al – formatted listing with hidden files cal – show this month's calendar cd dir - change directory to dir uptime – show current uptime cd – change to home w – display who is online pwd – show current directory whoami – who you are logged in as mkdir dir – create a directory dir finger user – display information about user rm file – delete file uname -a – show kernel information rm -r dir – delete directory dir cat /proc/cpuinfo – cpu information rm -f file – force remove file cat /proc/meminfo – memory information rm -rf dir – force remove directory dir * man command – show the manual for command cp file1 file2 – copy file1 to file2 df – show disk usage cp -r dir1 dir2 – copy dir1 to dir2; create dir2 if it du – show directory space usage doesn't exist free – show memory and swap usage mv file1 file2 – rename or move file1 to file2 whereis app – show possible locations of app if file2 is an existing directory, moves file1 into which app – show which app will be run by default directory file2 ln -s file link – create symbolic link link to file Compression touch file – create or update file tar cf file.tar files – create a tar named cat > file – places standard input into file file.tar containing files more file – output the contents of file tar xf file.tar – extract the files from file.tar head file – output the first 10 lines of file tar czf file.tar.gz files – create a tar with tail file – output the last 10 lines -

Parse Forest Diagnostics with Dr. Ambiguity

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by CWI's Institutional Repository Parse Forest Diagnostics with Dr. Ambiguity H. J. S. Basten and J. J. Vinju Centrum Wiskunde & Informatica (CWI) Science Park 123, 1098 XG Amsterdam, The Netherlands {Jurgen.Vinju,Bas.Basten}@cwi.nl Abstract In this paper we propose and evaluate a method for locating causes of ambiguity in context-free grammars by automatic analysis of parse forests. A parse forest is the set of parse trees of an ambiguous sentence. Deducing causes of ambiguity from observing parse forests is hard for grammar engineers because of (a) the size of the parse forests, (b) the complex shape of parse forests, and (c) the diversity of causes of ambiguity. We first analyze the diversity of ambiguities in grammars for programming lan- guages and the diversity of solutions to these ambiguities. Then we introduce DR.AMBIGUITY: a parse forest diagnostics tools that explains the causes of ambiguity by analyzing differences between parse trees and proposes solutions. We demonstrate its effectiveness using a small experiment with a grammar for Java 5. 1 Introduction This work is motivated by the use of parsers generated from general context-free gram- mars (CFGs). General parsing algorithms such as GLR and derivates [35,9,3,6,17], GLL [34,22], and Earley [16,32] support parser generation for highly non-deterministic context-free grammars. The advantages of constructing parsers using such technology are that grammars may be modular and that real programming languages (often requiring parser non-determinism) can be dealt with efficiently1. -

ROSE Tutorial: a Tool for Building Source-To-Source Translators Draft Tutorial (Version 0.9.11.115)

ROSE Tutorial: A Tool for Building Source-to-Source Translators Draft Tutorial (version 0.9.11.115) Daniel Quinlan, Markus Schordan, Richard Vuduc, Qing Yi Thomas Panas, Chunhua Liao, and Jeremiah J. Willcock Lawrence Livermore National Laboratory Livermore, CA 94550 925-423-2668 (office) 925-422-6278 (fax) fdquinlan,panas2,[email protected] [email protected] [email protected] [email protected] [email protected] Project Web Page: www.rosecompiler.org UCRL Number for ROSE User Manual: UCRL-SM-210137-DRAFT UCRL Number for ROSE Tutorial: UCRL-SM-210032-DRAFT UCRL Number for ROSE Source Code: UCRL-CODE-155962 ROSE User Manual (pdf) ROSE Tutorial (pdf) ROSE HTML Reference (html only) September 12, 2019 ii September 12, 2019 Contents 1 Introduction 1 1.1 What is ROSE.....................................1 1.2 Why you should be interested in ROSE.......................2 1.3 Problems that ROSE can address...........................2 1.4 Examples in this ROSE Tutorial...........................3 1.5 ROSE Documentation and Where To Find It.................... 10 1.6 Using the Tutorial................................... 11 1.7 Required Makefile for Tutorial Examples....................... 11 I Working with the ROSE AST 13 2 Identity Translator 15 3 Simple AST Graph Generator 19 4 AST Whole Graph Generator 23 5 Advanced AST Graph Generation 29 6 AST PDF Generator 31 7 Introduction to AST Traversals 35 7.1 Input For Example Traversals............................. 35 7.2 Traversals of the AST Structure............................ 36 7.2.1 Classic Object-Oriented Visitor Pattern for the AST............ 37 7.2.2 Simple Traversal (no attributes)....................... 37 7.2.3 Simple Pre- and Postorder Traversal....................