Cryptanalysis of Stream Ciphers with Linear Masking

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


State of the Art in Lightweight Symmetric Cryptography

Alex Biryukov (SnT, CSC, University of Luxembourg) and Léo Perrin (SnT, University of Luxembourg)

Abstract. Lightweight cryptography has been one of the “hot topics” in symmetric cryptography in the recent years. A huge number of lightweight algorithms have been published, standardized and/or used in commercial products. In this paper, we discuss the different implementation constraints that a “lightweight” algorithm is usually designed to satisfy. We also present an extensive survey of all lightweight symmetric primitives we are aware of. It covers designs from the academic community, from government agencies and proprietary algorithms which were reverse-engineered or leaked. Relevant national (NIST...) and international (ISO/IEC...) standards are listed. We then discuss some trends we identified in the design of lightweight algorithms, namely the designers’ preference for ARX-based and bitsliced-S-Box-based designs and simple key schedules. Finally, we argue that lightweight cryptography is too large a field and that it should be split into two related but distinct areas: ultra-lightweight and IoT cryptography. The former deals only with the smallest of devices for which a lower security level may be justified by the very harsh design constraints. The latter corresponds to low-power embedded processors for which the AES and modern hash functions are costly but which have to provide a high level of security due to their greater connectivity.

Keywords: Lightweight cryptography · Ultra-Lightweight · IoT · Internet of Things · SoK · Survey · Standards · Industry

1 Introduction

The Internet of Things (IoT) is one of the foremost buzzwords in computer science and information technology at the time of writing.

On the Security of IV Dependent Stream Ciphers

Côme Berbain and Henri Gilbert, France Telecom R&D (research & development, Orange Group). Slide footer: IV setup – H. Gilbert.

Stream ciphers

- IV-less: a key K feeds a number generator that outputs the keystream; ciphertext = plaintext ⊕ keystream. E.g. RC4, Shrinking Generator. Well-founded theory [S81, Y82, BM84]. Practical limitations: no reuse of K; synchronisation.
- IV-dependent: a key K and an IV (initial value) feed the generator that outputs the keystream; ciphertext = plaintext ⊕ keystream. E.g. SNOW, Scream, eSTREAM ciphers. Less unanimously agreed theory. Prior work [RC94, HN01, Z06]; numerous chosen IV attacks; key and IV setup not well understood.

Outline

- Security requirements on IV-dependent stream ciphers: whole cipher; key and IV setup
- Key and IV setup constructions satisfying these requirements: blockcipher based; tree based
- Application example: QUAD (incorporate key and IV setup in QUAD's provable security argument)

Security in IV-less case: PRNG notion

The adversary A receives either the output of generator g, $g(K) \in \{0,1\}^L$ with $K \in_R \{0,1\}^m$, or a truly random $Z \in_R \{0,1\}^L$, and outputs 0 or 1. A tests number distributions:

$\mathrm{Adv}^{\mathrm{PRNG}}_{g}(A) = \Pr_K[A(g(K)) = 1] - \Pr_Z[A(Z) = 1]$

$\mathrm{Adv}^{\mathrm{PRNG}}_{g}(t) = \max_{A,\ T(A) \le t} \mathrm{Adv}^{\mathrm{PRNG}}_{g}(A)$

g is a secure cipher ⇔ g is a PRNG ⇔ $\mathrm{Adv}^{\mathrm{PRNG}}_{g}(t < 2^{80}) \ll 1$

Security in IV-dependent case: PRF notion

The adversary A makes q oracle queries $IV \in \{0,1\}^n$ to either the stream cipher $g_K$, drawn from the function generator $G = \{g_K\}$ and returning $g_K(IV)$, or a perfect random function $g^*$, and outputs 0 or 1. A tests function distributions:

$\mathrm{Adv}^{\mathrm{PRF}}_{G}(A) = \Pr[A^{g_K} = 1] - \Pr[A^{g^*} = 1]$

$\mathrm{Adv}^{\mathrm{PRF}}_{G}(t, q) = \max_{A} \mathrm{Adv}^{\mathrm{PRF}}_{G}(A)$

G is a secure cipher ⇔ G is a PRF ⇔ $\mathrm{Adv}^{\mathrm{PRF}}_{G}(t < 2^{80}, 2^{40}) \ll 1$
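The PRNG advantage defined in the slides can be estimated empirically for a toy case. The sketch below is only an illustration under stated assumptions: `toy_generator` is a hypothetical, deliberately weak generator (it clears the top bit of every output byte), `distinguisher` is a hypothetical adversary A that checks for exactly that bias, and the advantage is approximated by sampling rather than computed exactly.

```python
import hashlib
import secrets

L_BYTES = 16  # output length L of the toy generator, in bytes (assumption)


def toy_generator(key: bytes) -> bytes:
    """Hypothetical weak generator g: expand the key, then clear each top bit."""
    stream = hashlib.shake_256(key).digest(L_BYTES)
    return bytes(b & 0x7F for b in stream)


def distinguisher(sample: bytes) -> int:
    """Hypothetical adversary A: output 1 if every byte has its top bit clear."""
    return 1 if all(b < 0x80 for b in sample) else 0


def estimate_advantage(trials: int = 2000) -> float:
    """Monte Carlo estimate of Pr_K[A(g(K)) = 1] - Pr_Z[A(Z) = 1]."""
    hits_g = sum(
        distinguisher(toy_generator(secrets.token_bytes(16))) for _ in range(trials)
    )
    hits_z = sum(distinguisher(secrets.token_bytes(L_BYTES)) for _ in range(trials))
    return hits_g / trials - hits_z / trials


if __name__ == "__main__":
    print(f"estimated advantage: {estimate_advantage():.3f}")
```

Because the bias is present in every output of the toy generator, the estimate comes out close to 1, which is the opposite of the "much less than 1" condition the slides require of a secure cipher.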

9/11 Report”), July 2, 2004, Pp

Final FM.1pp 7/17/04 5:25 PM Page i

THE 9/11 COMMISSION REPORT

CONTENTS

- List of Illustrations and Tables
- Member List
- Staff List
- Preface
- 1. “WE HAVE SOME PLANES”
  - 1.1 Inside the Four Flights
  - 1.2 Improvising a Homeland Defense
  - 1.3 National Crisis Management
- 2. THE FOUNDATION OF THE NEW TERRORISM
  - 2.1 A Declaration of War
  - 2.2 Bin Ladin’s Appeal in the Islamic World
  - 2.3 The Rise of Bin Ladin and al Qaeda (1988–1992)
  - 2.4 Building an Organization, Declaring War on the United States (1992–1996)
  - 2.5 Al Qaeda’s Renewal in Afghanistan (1996–1998)
- 3. COUNTERTERRORISM EVOLVES
  - 3.1 From the Old Terrorism to the New: The First World Trade Center Bombing
  - 3.2 Adaptation - and Nonadaptation - ... in the Law Enforcement Community
  - 3.3 ... and in the Federal Aviation Administration
  - 3.4 ... and in the Intelligence Community
  - 3.5 ... and in the State Department and the Defense Department
  - 3.6 ... and in the White House
  - 3.7 ... and in the Congress
- 4. RESPONSES TO AL QAEDA’S INITIAL ASSAULTS
  - 4.1 Before the Bombings in Kenya and Tanzania
  - 4.2 Crisis: August 1998
  - 4.3 Diplomacy
  - 4.4 Covert Action
  - 4.5 Searching for Fresh Options
- 5.

The Long Road to the Advanced Encryption Standard

Jean-Luc Cooke, CertainKey Inc., http://www.certainkey.com/˜jlcooke

Abstract

This paper will start with a brief background of the Advanced Encryption Standard (AES) process, lessons learned from the Data Encryption Standard (DES), other U.S. government cryptographic publications and the fifteen first round candidate algorithms. The focus of the presentation will lie in presenting the general design of the five final candidate algorithms, and the specifics of the AES and how it differs from the Rijndael design. A presentation on the AES modes of operation and Secure Hash Algorithm (SHA) family of algorithms will follow and will include discussion about how it is directly implicated by AES developments.

Intended Audience

This paper was written as a supplement to a presentation at the Ottawa International Linux Symposium.

1 Introduction

Two decades ago the state-of-the-art in the private sector cryptography was, we know now, far behind the public sector. Don Coppersmith’s knowledge of the Data Encryption Standard’s (DES) resilience to the then unknown Differential Cryptanalysis (DC), the design principles used in the Secure Hash Algorithm (SHA) in Digital Signature Standard (DSS) being case and point [NISTDSS][NISTDES][DC][NISTSHA1].

The selection and design of the DES was shrouded in controversy and suspicion. This very controversy has led to a fantastic acceleration in private sector cryptographic advancement. So intrigued by the NSA’s modifications to the Lucifer algorithm, researchers, academic and industry alike, powerful tools in assessing block cipher strength were developed.

Chapter 2 Block Ciphers

Block ciphers are the central tool in the design of protocols for shared-key cryptography. They are the main available “technology” we have at our disposal. This chapter will take a look at these objects and describe the state of the art in their construction. It is important to stress that block ciphers are just tools: raw ingredients for cooking up something more useful. Block ciphers don’t, by themselves, do something that an end-user would care about. As with any powerful tool, one has to learn to use this one. Even a wonderful block cipher won’t give you security if you don’t use it right. But used well, these are powerful tools indeed. Accordingly, an important theme in several upcoming chapters will be on how to use block ciphers well. We won’t be emphasizing how to design or analyze block ciphers, as this remains very much an art. The main purpose of this chapter is just to get you acquainted with what typical block ciphers look like. We’ll look at two examples, DES and AES. DES is the “old standby.” It is currently (year 2001) the most widely-used block cipher in existence, and it is of sufficient historical significance that every trained cryptographer needs to have seen its description. AES is a modern block cipher, and it is expected to supplant DES in the years to come.

2.1 What is a block cipher?

A block cipher is a function $E: \{0,1\}^k \times \{0,1\}^n \to \{0,1\}^n$ that takes two inputs, a k-bit key K and an n-bit “plaintext” M, to return an n-bit “ciphertext” C = E(K, M).
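To make the signature E(K, M) concrete, here is a minimal sketch of a toy block cipher with k = n = 16. It is not DES or AES and offers no security; the Feistel structure, the SHA-256-based round function and all names are assumptions chosen only so that the example is self-contained.

```python
import hashlib

K_BITS = 16  # key length k (toy value, assumption)
N_BITS = 16  # block length n (toy value, assumption)
ROUNDS = 4


def _round_fn(key: int, rnd: int, half: int) -> int:
    """Hypothetical round function: one byte derived from key, round and half-block."""
    data = key.to_bytes(2, "big") + bytes([rnd, half])
    return hashlib.sha256(data).digest()[0]


def E(key: int, m: int) -> int:
    """Toy Feistel cipher: maps a 16-bit key and a 16-bit plaintext to 16 bits."""
    left, right = m >> 8, m & 0xFF
    for rnd in range(ROUNDS):
        left, right = right, left ^ _round_fn(key, rnd, right)
    return (left << 8) | right


if __name__ == "__main__":
    c = E(0x1234, 0xBEEF)
    print(f"C = E(K, M) = {c:#06x}")
```

A Feistel network is invertible whatever the round function is, so for each fixed key the toy `E` maps the 2^16 plaintexts onto 2^16 distinct ciphertexts.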

Ascon (A Submission to CAESAR)

Ch. Dobraunig (1), M. Eichlseder (1), F. Mendel (1), M. Schläffer (2)
(1) IAIK, Graz University of Technology, Austria; (2) Infineon Technologies AG, Austria
22nd Crypto Day, Infineon, Munich

Overview

- CAESAR
- Design of Ascon
- Security analysis
- Implementations

CAESAR

- CAESAR: Competition for Authenticated Encryption – Security, Applicability, and Robustness (2014–2018), http://competitions.cr.yp.to/caesar.html
- Inspired by AES, eStream, SHA-3
- Authenticated Encryption: confidentiality as provided by block cipher modes; authenticity and integrity as provided by MACs
- “it is very easy to accidentally combine secure encryption schemes with secure MACs and still get insecure authenticated encryption schemes” (Kohno, Whiting, and Viega)

Generic compositions

- MAC-then-Encrypt (MtE): e.g. in SSL/TLS; security depends on E* and MAC
- Encrypt-and-MAC (E&M): e.g. in SSH; security depends on E* and MAC
- Encrypt-then-MAC (EtM): IPSec, ISO/IEC 19772:2009; provably secure

Pitfalls: Dependent Keys (Confidentiality)

Encrypt-and-MAC with CBC-MAC and CTR.

[Diagram: CTR mode encrypts the counter blocks N‖1, N‖2, ..., N‖ℓ under E_K and XORs the results with M1, M2, ..., Mℓ to give C1, C2, ..., Cℓ; CBC-MAC chains M1, M2, ..., Mℓ from IV under the same E_K to give the tag T.]

What can an attacker do?

Tags for M = IV ⊕ (N‖1), M = IV ⊕ (N‖2), ... are the key stream to read M1, M2, ...

(Keys for) E* and MAC must be independent!
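The dependent-key pitfall can be reproduced in a few lines. The sketch below is a toy model under stated assumptions, not any real protocol: `encrypt_block` is a hypothetical 64-bit Feistel cipher built from SHA-256 (chosen only to avoid third-party dependencies), the counter block N‖i is assumed to be a 7-byte nonce followed by a 1-byte counter, and messages are assumed to be whole blocks.

```python
import hashlib

BLOCK = 8  # toy block size in bytes (assumption)


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt_block(key: bytes, block: bytes) -> bytes:
    """Hypothetical toy block cipher E_K (4-round Feistel); not secure."""
    left, right = block[:4], block[4:]
    for rnd in range(4):
        f = hashlib.sha256(key + bytes([rnd]) + right).digest()[:4]
        left, right = right, xor(left, f)
    return left + right


def ctr_encrypt(key: bytes, nonce: bytes, msg: bytes) -> bytes:
    """CTR mode: C_i = M_i XOR E_K(N || i), counter starting at 1."""
    out = b""
    for i in range(0, len(msg), BLOCK):
        keystream = encrypt_block(key, nonce + bytes([i // BLOCK + 1]))
        out += xor(msg[i:i + BLOCK], keystream)
    return out


def cbc_mac(key: bytes, iv: bytes, msg: bytes) -> bytes:
    """CBC-MAC over whole blocks, chained from iv, using the SAME key."""
    state = iv
    for i in range(0, len(msg), BLOCK):
        state = encrypt_block(key, xor(state, msg[i:i + BLOCK]))
    return state


def demo() -> bytes:
    key, iv, nonce = b"shared-key", bytes(BLOCK), b"nonce-7"
    ciphertext = ctr_encrypt(key, nonce, b"8 bytes!")  # victim's secret block
    chosen = xor(iv, nonce + bytes([1]))               # M = IV xor (N || 1)
    tag = cbc_mac(key, iv, chosen)                     # equals E_K(N || 1)
    return xor(ciphertext, tag)                        # keystream removed


if __name__ == "__main__":
    print(demo())
```

The tag of the chosen one-block message is E_K(IV ⊕ IV ⊕ (N‖1)) = E_K(N‖1), which is exactly the first CTR keystream block, so XORing it with the ciphertext returns the plaintext. With independent keys for the two components the tag would be computed under a different permutation and this cancellation would not occur.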

Data Encryption Standard

The Data Encryption Standard (DES /ˌdiːˌiːˈɛs, dɛz/) is a symmetric-key algorithm for the encryption of electronic data. Although insecure, it was highly influential in the advancement of modern cryptography.

Developed in the early 1970s at IBM and based on an earlier design by Horst Feistel, the algorithm was submitted to the National Bureau of Standards (NBS) following the agency's invitation to propose a candidate for the protection of sensitive, unclassified electronic government data. In 1976, after consultation with the National Security Agency (NSA), the NBS eventually selected a slightly modified version (strengthened against differential cryptanalysis, but weakened against brute-force attacks), which was published as an official Federal Information Processing Standard (FIPS) for the United States in 1977.

The publication of an NSA-approved encryption standard simultaneously resulted in its quick international adoption and widespread academic scrutiny. Controversies arose out of classified design elements, a relatively short key length of the symmetric-key block cipher design, and the involvement of the NSA, nourishing suspicions about a backdoor. Today it is known that the S-boxes that had raised those suspicions were in fact designed by the NSA to actually remove a backdoor they secretly knew (differential cryptanalysis). However, the NSA also ensured that the key size was drastically reduced such that they could break it by brute force attack.[2] The intense academic scrutiny the algorithm received over time led to the modern understanding of block ciphers and their cryptanalysis.

[Figure: The Feistel function (F function) of DES]

General

- Designers: IBM
- First published: 1975 (Federal Register) (standardized in January 1977)
- Derived from: Lucifer
- Successors: Triple DES, G-DES, DES-X, LOKI89, ICE

Stream Cipher Designs: a Review

SCIENCE CHINA Information Sciences, March 2020, Vol. 63, 131101:1–131101:25. REVIEW. https://doi.org/10.1007/s11432-018-9929-x

Stream cipher designs: a review

Lin JIAO (1*), Yonglin HAO (1) & Dengguo FENG (1,2*)
(1) State Key Laboratory of Cryptology, Beijing 100878, China; (2) State Key Laboratory of Computer Science, Institute of Software, Chinese Academy of Sciences, Beijing 100190, China

Received 13 August 2018 / Accepted 30 June 2019 / Published online 10 February 2020

Abstract. Stream cipher is an important branch of symmetric cryptosystems, which takes obvious advantages in speed and scale of hardware implementation. It is suitable for using in the cases of massive data transfer or resource constraints, and has always been a hot and central research topic in cryptography. With the rapid development of network and communication technology, cipher algorithms play more and more crucial role in information security. Simultaneously, the application environment of cipher algorithms is increasingly complex, which challenges the existing cipher algorithms and calls for novel suitable designs. To accommodate new strict requirements and provide systematic scientific basis for future designs, this paper reviews the development history of stream ciphers, classifies and summarizes the design principles of typical stream ciphers in groups, briefly discusses the advantages and weakness of various stream ciphers in terms of security and implementation. Finally, it tries to foresee the prospective design directions of stream ciphers.

Keywords: stream cipher, survey, lightweight, authenticated encryption, homomorphic encryption

Citation: Jiao L, Hao Y L, Feng D G. Stream cipher designs: a review. Sci China Inf Sci, 2020, 63(3): 131101, https://doi.org/10.1007/s11432-018-9929-x

1 Introduction

The widely applied e-commerce, e-government, along with the fast developing cloud computing, big data, have triggered high demands in both efficiency and security of information processing.

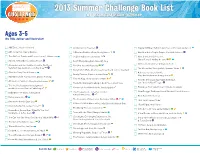

2013 Summer Challenge Book List TM

www.scholastic.com/summer

Ages 3-5 (By Title, Author and Illustrator)

- ABC Drive, Naomi Howland
- ABC I Like Me!, Nancy Carlson
- The ABCs of Thanks and Please, Diane C. Ohanesian
- Abuela, Arthur Dorros & Elisa Kleven
- Alexander and the Terrible, Horrible, No Good, Very Bad Day, Judith Viorst & Ray Cruz
- Alice the Fairy, David Shannon
- Alphabet Under Construction, Denise Fleming
- All Kinds of Families!, Mary Ann Hoberman
- The Are You Ready for Kindergarten? workbook series, Kumon Publishing
- Baby Bear Sees Blue, Ashley Wolff
- Bailey, Harry Bliss
- Bats at the Beach, Brian Lies
- Bird, Butterfly, Eel, James Prosek
- Brown Bear, Brown Bear, What Do You See?, Bill Martin Jr.
- Corduroy, Don Freeman
- A Den is a Bed for a Bear, Becky Baines
- Dolphin Baby!, Nicola Davies
- Don’t Worry, Douglas!, David Melling
- The Dot, Peter H. Reynolds
- Exclamation Mark, Amy Krouse Rosenthal & Tom Lichtenheld
- Family Pictures, Carmen Lomas Garza
- First the Egg, Laura Vaccaro Seeger
- Five Little Monkeys Reading in Bed, Eileen Christelow
- The Frog & Toad Are Friends, Arnold Lobel
- The Froggy books, Jonathan London & Frank Remkiewicz
- The Geronimo Stilton series, Geronimo Stilton
- Gilbert Goldfish Wants a Pet, Kelly DiPucchio & Bob Shea
- The Giving Tree, Shel Silverstein
- Gone with the Wand, Margie Palatini
- Happy Birthday, Hamster, Cynthia Lord & Derek Anderson
- Harold and the Purple Crayon, Crockett Johnson
- Here Come the Girl Scouts!, Shana Corey & Hadley Hooper
- Homer, Shelley Rotner & Diane Detroit
- The House that George Built, Suzanne Slade
- A House is a House for Me, Mary Ann Hoberman & Betty Fraser
- How Do Dinosaurs Say Happy Birthday?, Jane Yolen & Mark Teague
- How Does Your Salad Grow?, Francie Alexander
- How Georgie Radbourn Saved Baseball, David Shannon
- Huck Runs Amuck, Sean
- I Am Small, Emma Dodd
- I Read Signs, Tana Hoban
- If Rocks Could Sing, Leslie McGuirk

D-DOG: Securing Sensitive Data in Distributed Storage Space by Data Division and Out-of-Order Keystream Generation

†Jun Feng, †Yu Chen*, ‡Wei-Shinn Ku, §Zhou Su
†Dept. of Electrical & Computer Engineering, SUNY - Binghamton, Binghamton, NY 13902
‡Dept. of Computer Science & Software Engineering, Auburn University, Auburn, AL 36849
§Dept. of Computer Science, Waseda University, Ohkubo 3-4-1, Shinjyuku, Tokyo 169-8555, Japan
{jfeng3, ychen}@binghamton.edu

Abstract - Migrating from server-attached storage to distributed storage brings new vulnerabilities in creating a secure data storage and access facility. Particularly it is a challenge on top of insecure networks or unreliable storage service providers. For example, in applications such as cloud computing where data storage is transparent to the owner. It is even harder to protect the data stored in unreliable hosts. More robust security scheme is desired to prevent adversaries from obtaining sensitive information when the data is in their hands. Meanwhile, the performance gap between the execution speed of security software and the amount of data to be processed is ever widening. A common solution to close the performance gap is through hardware implementation.

... system against the attacks/intrusion from outsiders. The confidentiality and integrity of data are mostly achieved using robust cryptograph schemes.

However, such a security system is not robust enough to protect the data in distributed storage applications at the level of wide area networks. The recent progress of network technology enables global-scale collaboration over heterogeneous networks under different authorities. For instance, in the environment of peer-to-peer (P2P) file sharing or the distributed storage in cloud computing environment, it enables the concrete data storage to be even

Scream on MSP430 07282015

Implementation of the SCREAM Tweakable Block Cipher in MSP430 Assembly Language

William Diehl, George Mason University, Fairfax VA 22033, USA

Abstract. The encryption mode of the Tweakable Block Cipher (TBC) of the SCREAM Authenticated Cipher is implemented in the MSP430 microcontroller. Assembly language versions of the TBC are prepared using both precomputed tweak keys and tweak keys computed “on-the-fly.” Both versions are compared against published results for the assembly language version of SCREAM on the ATMEL AVR microcontroller, and against the C reference implementation in terms of performance and size. The assembly language version using precomputed tweak keys achieves a speedup of 1.7 and memory savings of 9 percent over the reported SCREAM implementation in the ATMEL AVR. The assembly language version using tweak keys computed “on-the-fly” achieves a speedup of 1.6 over the ATMEL AVR version while reducing memory usage by 15 percent.

Keywords: Cryptography, encryption, MSP430, assembly, speed, efficiency

1 Introduction

Authenticated ciphers combine the functionality of confidentiality and integrity into one algorithm. In 2014 the Competition for Authenticated Encryption: Security, Applicability, and Robustness (CAESAR) called for submission of authenticated cipher candidates [1]. CAESAR candidates are evaluated in terms of security, size, robustness, flexibility, and performance. Software reference implementations written in C code are required as part of Rounds One and Two submissions. Many of the Round One submissions contained the authors’ evaluations in both hardware and software. Additionally, several Round One submissions investigated the software performance of the authors’ algorithms on various types of platforms, including high-end CPU and resource-constrained microcontrollers suitable for embedded applications.

A Software-Optimized Encryption Algorithm

J. Cryptology (1998) 11: 273–287. © 1998 International Association for Cryptologic Research

A Software-Optimized Encryption Algorithm

Phillip Rogaway, Department of Computer Science, Engineering II Building, University of California, Davis, CA 95616, U.S.A.
Don Coppersmith, IBM T.J. Watson Research Center, PO Box 218, Yorktown Heights, NY 10598, U.S.A.

Communicated by Joan Feigenbaum. Received 3 May 1996 and revised 17 September 1997.

Abstract. We describe the software-efficient encryption algorithm SEAL 3.0. Computational cost on a modern 32-bit processor is about 4 clock cycles per byte of text. The cipher is a pseudorandom function family: under control of a key (first preprocessed into an internal table) it stretches a 32-bit position index into a long, pseudorandom string. This string can be used as the keystream of a Vernam cipher.

Key words. Cryptography, Encryption, Fast encryption, Pseudorandom function family, Software encryption, Stream cipher.

1. Introduction

Encrypting fast in software. Encryption must often be performed at high data rates, a requirement sometimes met with the help of supporting cryptographic hardware. Unfortunately, cryptographic hardware is often absent and data confidentiality is sacrificed because the cost of software cryptography is deemed to be excessive. The computational cost of software cryptography is a function of the underlying algorithm and the quality of its implementation. However, regardless of implementation, a cryptographic algorithm designed to run well in hardware will not perform in software as well as an algorithm optimized for software execution. The hardware-oriented Data Encryption Algorithm (DES) is no exception [8].
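The interface described in the abstract, a keyed function that stretches a 32-bit position index into a long string used as a Vernam keystream, can be sketched as follows. This is not SEAL: HMAC-SHA256 from the Python standard library is swapped in as a stand-in pseudorandom function, and the names `keystream` and `vernam` as well as the internal block counter are assumptions made for illustration.

```python
import hashlib
import hmac


def keystream(key: bytes, position: int, length: int) -> bytes:
    """Stretch a 32-bit position index into `length` pseudorandom bytes.

    Stand-in construction (assumption): HMAC-SHA256 over position || counter.
    """
    out = b""
    counter = 0
    while len(out) < length:
        block_input = position.to_bytes(4, "big") + counter.to_bytes(4, "big")
        out += hmac.new(key, block_input, hashlib.sha256).digest()
        counter += 1
    return out[:length]


def vernam(key: bytes, position: int, data: bytes) -> bytes:
    """XOR data with the keystream; the same call encrypts and decrypts."""
    ks = keystream(key, position, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))


if __name__ == "__main__":
    ct = vernam(b"demo key", 7, b"attack at dawn")
    print(ct.hex())
    print(vernam(b"demo key", 7, ct))
```

Because XOR is its own inverse, applying `vernam` twice with the same key and position index restores the input; the position index must not be reused under one key for different messages, since that would repeat the keystream.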