Linear Logic, Heap-Shape Patterns and Imperative Programming

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Programming Paradigms & Object-Oriented

4.3 (Programming Paradigms & Object-Oriented- Computer Science 9608 Programming) with Majid Tahir

Syllabus Content: 4.3.1 Programming paradigms

- Show understanding of what is meant by a programming paradigm
- Show understanding of the characteristics of a number of programming paradigms (low-level, imperative (procedural), object-oriented, declarative)
- Low-level programming: demonstrate an ability to write low-level code that uses various address modes: immediate, direct, indirect, indexed and relative (see Section 1.4.3 and Section 3.6.2)
- Imperative programming: see details in Section 2.3 (procedural programming)
- Object-oriented programming (OOP): demonstrate an ability to solve a problem by designing appropriate classes; demonstrate an ability to write code that demonstrates the use of classes, inheritance, polymorphism and containment (aggregation)
- Declarative programming: demonstrate an ability to solve a problem by writing appropriate facts and rules based on supplied information; demonstrate an ability to write code that can satisfy a goal using facts and rules

Programming paradigm: A programming paradigm is a set of programming concepts and is a fundamental style of programming. Each paradigm will support a different way of thinking and problem solving. Paradigms are supported by programming language features. Some programming languages support more than one paradigm. There are many different paradigms, not all mutually exclusive. Here are just a few different paradigms.

Low-level programming paradigm: The features of low-level programming languages give us the ability to manipulate the contents of memory addresses and registers directly and exploit the architecture of a given processor.

15-150 Lectures 27 and 28: Imperative Programming

15-150 Lectures 27 and 28: Imperative Programming. Lectures by Dan Licata, April 24 and 26, 2012.

In these lectures, we will show that imperative programming is a special case of functional programming. We will also investigate the relationship between imperative programming and parallelism, and think about what happens when the two are combined.

1 Mutable Cells

Functional programming is all about transforming data into new data. Imperative programming is all about updating and changing data. To get started with imperative programming, ML provides a type 'a ref representing mutable memory cells. This type is equipped with:

- A constructor ref : 'a -> 'a ref. Evaluating ref v creates and returns a new memory cell containing the value v. Pattern-matching a cell with the pattern ref p gets the contents.
- An operation := : 'a ref * 'a -> unit that updates the contents of a cell.

For example:

    val account = ref 100
    val (ref cur) = account
    val () = account := 400
    val (ref cur) = account

1. In the first line, evaluating ref 100 creates a new memory cell containing the value 100, which we will write as a box, [100], and binds the variable account to this cell.
2. The next line pattern-matches account as ref cur, which binds cur to the value currently in the box, in this case 100.
3. The next line updates the box to contain the value 400.
4. The final line reads the current value in the box, in this case 400.

It's important to note that, with mutation, the same program can have different results if it is evaluated multiple times.
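The mutable-cell idea in this excerpt can be mimicked outside ML. The following is a minimal Python sketch, not the lecture's code: the class `Ref` and the function `withdraw` are hypothetical names introduced only for illustration. It mirrors the four steps of the account example and then shows why evaluating the same expression twice can give different results once mutation is involved.

```python
class Ref:
    """Hypothetical stand-in for an ML-style mutable cell ('a ref)."""

    def __init__(self, value):
        self.contents = value  # the value currently "in the box"


# Step 1: create a cell holding 100 and bind it to `account`.
account = Ref(100)
# Step 2: read the current contents (100).
cur_before = account.contents
# Step 3: update the cell to hold 400.
account.contents = 400
# Step 4: read again (now 400).
cur_after = account.contents


def withdraw(cell, amount):
    """Mutate the cell and return the new balance (illustrative helper)."""
    cell.contents -= amount
    return cell.contents


# The same call, evaluated twice, yields two different results.
first_call = withdraw(account, 50)
second_call = withdraw(account, 50)
```

Here `first_call` and `second_call` differ even though the two calls are textually identical, which is the point the excerpt makes about mutation.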

Functional and Imperative Object-Oriented Programming in Theory and Practice

Uppsala universitet, Department of Informatics and Media (Inst. för informatik och media). Functional and Imperative Object-Oriented Programming in Theory and Practice: A Study of Online Discussions in the Programming Community. Per Jernlund & Martin Stenberg. Course: Degree project (Examensarbete). Level: C. Term: Spring 2019 (VT-19). Date: 14-06-2019.

Abstract: Functional programming (FP) has progressively become more prevalent, and techniques from the FP paradigm have been implemented in many different imperative object-oriented programming (OOP) languages. However, there is no indication that OOP is going out of style. Nevertheless, the increased popularity of FP has sparked new discussions across the Internet between the FP and OOP communities regarding a multitude of related aspects. These discussions could provide insights into the questions and challenges faced by programmers today. This thesis investigates these online discussions on a small and contemporary scale in order to identify the most discussed aspect of FP and OOP. Once identified, the statements and claims made by various discussion participants were selected and compared to literature relating to the aspects and the theory behind the paradigms, in order to determine whether there were any discrepancies between practitioners and theory. This was done in order to investigate whether the practitioners had different ideas, in the form of best practices, that could influence theories. The most discussed aspect within FP and OOP was immutability and state, relating primarily to the aspects of concurrency and performance. This thesis presents a selection of representative quotes that illustrate the different points of view held by groups in the community and then addresses those claims by investigating what is said in literature.

Unit 2 — Imperative and Object-Oriented Paradigm

Programming Paradigms Unit 2 — Imperative and Object-oriented Paradigm. J. Gamper, Free University of Bozen-Bolzano, Faculty of Computer Science, IDSE. PP 2018/19 Unit 2 – Imperative and Object-oriented Paradigm 1/38

Outline

1 Imperative Programming Paradigm
2 Abstract Data Types
3 Object-oriented Approach

Imperative Paradigm/1

- The imperative paradigm is the oldest and most popular paradigm
- Based on the von Neumann architecture of computers
- Imperative programs define sequences of commands/statements for the computer that change a program state (i.e., set of variables)
- Commands are stored in memory and executed in the order found
- Commands retrieve data, perform a computation, and assign the result to a memory location
- The hardware implementation of almost all computers is imperative
- Machine code, which is native to the computer, and written in the imperative style

[Figure: CPU and memory (data and program) connected by data and address lines]

Machine code: 8B542408 83FA0077 06B80000 C9010000 008D0419 83FA0376 B84AEBF1 5BC3

Imperative Paradigm/2

Central elements of imperative paradigm: Assignment statement: assigns values to memory locations and changes the current state of a program Variables refer

Comparative Studies of Programming Languages; Course Lecture Notes

Comparative Studies of Programming Languages, COMP6411 Lecture Notes, Revision 1.9. Joey Paquet, Serguei A. Mokhov (Eds.). August 5, 2010. arXiv:1007.2123v6 [cs.PL] 4 Aug 2010

Preface

Lecture notes for the Comparative Studies of Programming Languages course, COMP6411, taught at the Department of Computer Science and Software Engineering, Faculty of Engineering and Computer Science, Concordia University, Montreal, QC, Canada. These notes include a compiled book of primarily related articles from the Wikipedia, the Free Encyclopedia [24], as well as Comparative Programming Languages book [7] and other resources, including our own. The original notes were compiled by Dr. Paquet [14]

Contents

1 Brief History and Genealogy of Programming Languages
1.1 Introduction
1.1.1 Subreferences
1.2 History
1.2.1 Pre-computer era
1.2.2 Subreferences
1.2.3 Early computer era
1.2.4 Subreferences
1.2.5 Modern/Structured programming languages
1.3 References
2 Programming Paradigms
2.1 Introduction
2.2 History
2.2.1 Low-level: binary, assembly
2.2.2 Procedural programming
2.2.3 Object-oriented programming
2.2.4 Declarative programming
3 Program Evaluation
3.1 Program analysis and translation phases
3.1.1 Front end
3.1.2 Back end
3.2 Compilation vs. interpretation
3.2.1 Compilation
3.2.2 Interpretation
3.2.3 Subreferences
3.3 Type System
3.3.1 Type checking
3.4 Memory management

Functional Programming Functional Vs. Imperative Referential

Functional Programming. COMS W4115, Prof. Stephen A. Edwards, Spring 2003, Columbia University, Department of Computer Science. Original version by Prof. Simon Parsons.

Functional vs. Imperative

- Imperative programming concerned with “how.”
- Functional programming concerned with “what.”
- Based on the mathematics of the lambda calculus (Church as opposed to Turing).
- “Programming without variables”
- It is inherently concise, elegant, and difficult to create subtle bugs in.
- It’s a cult: once you catch the functional bug, you never escape.

Referential transparency

- The main (good) property of functional programming is referential transparency.
- Every expression denotes a single value.
- The value cannot be changed by evaluating an expression or by sharing it between different parts of the program.
- No references to global data; there is no global data.
- There are no side-effects, unlike in referentially opaque languages.

The Joy of Pascal

    program example(output)
    var flag: boolean;
    function f(n:int): int
    begin
      if flag then f := n
      else f := 2*n;
      flag := not flag
    end
    begin
      flag := true;
      writeln(f(1) + f(2));
      writeln(f(2) + f(1));

What does this print?

Strange behavior

- This prints 5 then 4.
- Odd since you expect f(1) + f(2) = f(2) + f(1)
- Mathematical functions only depend on their inputs
- They have no memory

Variables

- At the heart of the “problem” is the fact that the global data flag affects the value of f.
- In particular, flag := not flag gives the offending behavior
- Eliminating assignments eliminates such problems.
- In functional languages, variables are not names for storage.
- Instead, they’re names that refer to particular values.
- Think of them as not very variables.
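The Pascal example from these slides can be reproduced in a few lines of Python. This is a sketch under stated assumptions rather than the slides' own code: the name `f` and the global `flag` follow the slide, while `pure_f` is a hypothetical side-effect-free variant added for contrast. Python evaluates the operands of `+` left to right, which the sketch relies on.

```python
flag = True  # global state read and flipped by f, as in the Pascal program


def f(n):
    """Return n or 2*n depending on the global flag, then flip the flag."""
    global flag
    result = n if flag else 2 * n
    flag = not flag
    return result


flag = True
first = f(1) + f(2)   # f(1) sees flag=True -> 1, f(2) sees flag=False -> 4
second = f(2) + f(1)  # f(2) sees flag=True -> 2, f(1) sees flag=False -> 2


def pure_f(n, flag_value):
    """Hypothetical pure variant: the result depends only on its arguments."""
    return n if flag_value else 2 * n


# With no hidden state, the order of the operands no longer matters.
left = pure_f(1, True) + pure_f(2, False)
right = pure_f(2, False) + pure_f(1, True)
```

With the stateful `f`, `first` is 5 and `second` is 4, matching the "prints 5 then 4" on the slide; with `pure_f`, `left` and `right` are equal because nothing is remembered between calls.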

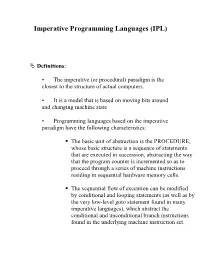

Imperative Programming Languages (IPL)

Imperative Programming Languages (IPL)

Definitions:

- The imperative (or procedural) paradigm is the closest to the structure of actual computers.
- It is a model that is based on moving bits around and changing machine state.
- Programming languages based on the imperative paradigm have the following characteristics:
  - The basic unit of abstraction is the PROCEDURE, whose basic structure is a sequence of statements that are executed in succession, abstracting the way that the program counter is incremented so as to proceed through a series of machine instructions residing in sequential hardware memory cells.
  - The sequential flow of execution can be modified by conditional and looping statements (as well as by the very low-level goto statement found in many imperative languages), which abstract the conditional and unconditional branch instructions found in the underlying machine instruction set.
  - Variables play a key role, and serve as abstractions of hardware memory cells. Typically, a given variable may assume many different values over the course of the execution of a program, just as a hardware memory cell may contain many different values. Thus, the assignment statement is a very important and frequently used statement.

Examples of imperative languages:

- FORTRAN, Algol, COBOL, Pascal, C (and to some extent C++), BASIC, Ada, and many more.

PL/I:

- PL/I (1963-5) was one of the few languages that attempted to be a general purpose language, rather than aiming at a particular category of programming.
- PL/I incorporated a blend of features from FORTRAN, ALGOL, and COBOL, and also allowed programmers to create concurrent tasks, handle run-time exceptions, use recursive procedures, and use pointers.

Comparative Studies of 10 Programming Languages Within 10 Diverse Criteria Revision 1.0

Comparative Studies of 10 Programming Languages within 10 Diverse Criteria, Revision 1.0. Rana Naim, Mohammad Fahim Nizam, Sheetal Hanamasagar, Jalal Noureddine, Marinela Miladinova. Concordia University, Montreal, Quebec, Canada.

Abstract: This is a survey on the programming languages: C++, JavaScript, AspectJ, C#, Haskell, Java, PHP, Scala, Scheme, and BPEL. Our survey work involves a comparative study of these ten programming languages with respect to the following criteria: secure programming practices, web application development, web service composition, OOP-based abstractions, reflection, aspect orientation, functional programming, declarative programming, batch scripting, and UI prototyping. We study these languages in the context of the above mentioned criteria and the level of support they provide for each one of them.

Keywords: programming languages, programming paradigms, language features, language design and implementation

1 Introduction

Choosing the best language that would satisfy all requirements for the given problem domain can be a difficult task. Some languages are better suited for specific applications than others. In order to select the proper one for the specific problem domain, one has to know what features it provides to support the requirements. Different languages support different paradigms, provide different abstractions, and have different levels of expressive power. Some are better suited to express algorithms and others are targeting the non-technical users. The question is then what is the best tool for a particular problem.

Concurrency and Parallelism in Functional Programming Languages

Concurrency and Parallelism in Functional Programming Languages. John Reppy, University of Chicago. April 21 & 24, 2017.

Introduction: Outline

- Programming models
- Concurrent
- Concurrent ML
- Multithreading via continuations (if there is time)

Programming models: Different language-design axes

- Parallel vs. concurrent vs. distributed.
- Implicitly parallel vs. implicitly threaded vs. explicitly threaded.
- Deterministic vs. non-deterministic.
- Shared state vs. shared-nothing.

Object-Oriented Programming

Advanced practical Programming for Scientists. Thorsten Koch, Zuse Institute Berlin, TU Berlin, WS2014/15.

Administrative stuff

All emails related to this lecture should start with APPFS in the subject. Everybody participating in this lecture, please send an email to <[email protected]> with your Name and Matrikel-Nr. We will set up a mailing list for announcements and discussion. Everything is here: http://www.zib.de/koch/lectures/ws2014_appfs.php If you need the certificate, regular attendance, completion of homework assignments, and in particular participation in our small programming project is expected. Grades will be based on the outcome and a few questions about it. No groups.

Planned Topics

- Overview: Imperative, OOP, Functional, side effects, thread safe, Design by contract
- Design: Information hiding, Dependencies, Coding style, Input checking, Error Handling
- Tools: git, gdb, undodb, gnat
- Design: Overall program design, Data structures, Memory allocation
- Examples: BIP Enumerator, Shortest path/STP, SCIP call, Gas computation
- Languages and correctness: Design errors/problems C/C++, C89 / C99 / C11, Compiler switches, assert, flexelint, FP, How to write correct programs
- Testing: black-box, white-box, Unit tests, regression tests, error tests, speed tests
- Tools: gcov, jenkins, ctest, doxygen, make, gprof, valgrind, coverity
- Software metrics: Why, Examples, Is it useful?
- Other Languages: Ada 2012, Introduction, Comparison to C/C++, SPARK
- Parallel programming: OpenMP, MPI, Others (pthreads, OpenCL), Ada
- How to design large programs

All information is subject to change.

Attitudes

Algorithm engineering refers to the process required to transform a pencil-and-paper algorithm into a robust, efficient, well tested, and easily usable implementation.

Clojure (For the Masses)

(Clojure (for the masses)) (author Tero Kadenius Tarmo Aidantausta) (date 30.03.2011)

Contents

1 Introduction
1.1 Dialect of Lisp
1.2 Dynamic typing
1.3 Functional programming
2 Introduction to Clojure syntax
2.1 Lispy syntax
2.1.1 Parentheses, parentheses, parentheses
2.1.2 Lists
2.1.3 Prefix vs. infix notation
2.1.4 Defining functions
3 Functional vs. imperative programming
3.1 Imperative programming
3.2 Object oriented programming
3.3 Functional programming
3.3.1 Functions as first class objects
3.3.2 Pure functions
3.3.3 Higher-order functions
3.4 Differences
3.5 Critique
4 Closer look at Clojure
4.1 Syntax
4.1.1 Reader
4.1.2 Symbols
4.1.3 Literals
4.1.4 Lists
4.1.5 Vectors
4.1.6 Maps
4.1.7 Sets
4.2 Macros
4.3 Evaluation
4.4 Read-Eval-Print-Loop
4.5 Data structures
4.5.1 Sequences
4.6 Control structures
4.6.1 if
4.6.2 do
4.6.3 loop/recur
5 Concurrency in Clojure
5.1 From serial to parallel computing
5.2 Problems caused by imperative programming paradigm
5.3 Simple things should be simple
5.4 Reference types
5.4.1 Vars
5.4.2 Atoms
5.4.3 Agents

Imperative Programming in Sets with Atoms∗

Imperative Programming in Sets with Atoms. Mikołaj Bojańczyk and Szymon Toruńczyk, University of Warsaw, Warsaw, Poland.

Abstract: We define an imperative programming language, which extends while programs with a type for storing atoms or hereditarily orbit-finite sets. To deal with an orbit-finite set, the language has a loop construction, which is executed in parallel for all elements of an orbit-finite set. We show examples of programs in this language, e.g. a program for minimising deterministic orbit-finite automata.

Keywords and phrases: Nominal sets, sets with atoms, while programs

Introduction

This paper introduces a programming language that works with sets with atoms, which appear in the literature under various other names: Fraenkel-Mostowski models [2], nominal sets [7], sets with urelements [1], permutation models [9]. Sets with atoms are an extended notion of a set: such sets are allowed to contain “atoms”. The existence of atoms is postulated as an axiom. The key role in the theory is played by permutations of atoms. For instance, if a, b, c, d are atoms, then the sets {a, {a, b, c}, {a, c}} and {b, {b, c, d}, {b, d}} are equal up to permutation of atoms. In a more general setting, the atoms have some structure, and instead of permutations one talks about automorphisms of the atoms. Suppose for instance that the atoms are real numbers, equipped with the successor relation x = y + 1 and linear order x < y. Then the sets {−1, 0, 0.3} and {5.2, 6.2, 6.12} are equal up to automorphism of the atoms, but the sets {0, 2} and {5.3, 8.3} are not.
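For the unstructured case (atoms with equality only), "equal up to permutation of atoms" can be checked by brute force on small finite sets. The sketch below is an illustration only and is not the paper's language or algorithm: atoms are modeled as Python strings, sets as nested `frozenset` values, and the helper names `atoms_of`, `rename` and `equal_up_to_permutation` are hypothetical. It tries every bijection between the atoms occurring in the two sets, which suffices because any such finite bijection extends to a permutation of all atoms.

```python
from itertools import permutations


def atoms_of(x):
    """Collect the atoms (strings) occurring anywhere inside a nested set."""
    if isinstance(x, str):
        return {x}
    found = set()
    for element in x:
        found |= atoms_of(element)
    return found


def rename(x, mapping):
    """Apply an atom renaming to a nested set, preserving its structure."""
    if isinstance(x, str):
        return mapping[x]
    return frozenset(rename(element, mapping) for element in x)


def equal_up_to_permutation(x, y):
    """Brute-force check: does some bijection of atoms turn x into y?"""
    atoms_x = sorted(atoms_of(x))
    atoms_y = sorted(atoms_of(y))
    if len(atoms_x) != len(atoms_y):
        return False
    return any(
        rename(x, dict(zip(atoms_x, image))) == y
        for image in permutations(atoms_y)
    )


fs = frozenset
# The two sets from the excerpt: {a, {a, b, c}, {a, c}} and {b, {b, c, d}, {b, d}}.
left = fs({"a", fs({"a", "b", "c"}), fs({"a", "c"})})
right = fs({"b", fs({"b", "c", "d"}), fs({"b", "d"})})
# A variant where no single atom occurs in all three members.
other = fs({"b", fs({"b", "c", "d"}), fs({"c", "d"})})

same = equal_up_to_permutation(left, right)
different = equal_up_to_permutation(left, other)
```

The renaming a to b, b to c, c to d witnesses the first comparison. The approach is factorial in the number of atoms, so it is only meant to make the definition concrete, and it does not cover the structured case with successor and order.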