Measuring the Evolution of Timbre in Billboard Hot 100

Recommended publications

What's the Download® Music Survival Guide

WHAT’S THE DOWNLOAD® MUSIC SURVIVAL GUIDE Written by: The WTD Interactive Advisory Board Inspired by: Thousands of perspectives from two years of work Dedicated to: Anyone who loves music and wants it to survive *A special thank you to Honorary Board Members Chris Brown, Sway Calloway, Kelly Clarkson, Common, Earth Wind & Fire, Eric Garland, Shirley Halperin, JD Natasha, Mark McGrath, and Kanye West for sharing your time and your minds. Published Oct. 19, 2006 What’s The Download® Interactive Advisory Board: WHO WE ARE Based on research demonstrating the need for a serious examination of the issues facing the music industry in the wake of the rise of illegal downloading, in 2005 The Recording Academy® formed the What’s The Download Interactive Advisory Board (WTDIAB) as part of What’s The Download, a public education campaign created in 2004 that recognizes the lack of dialogue between the music industry and music fans. We are comprised of 12 young adults who were selected from hundreds of applicants by The Recording Academy through a process which consisted of an essay, video application and telephone interview. We come from all over the country, have diverse tastes in music and are joined by Honorary Board Members that include high-profile music creators and industry veterans. Since the launch of our Board at the 47th Annual GRAMMY® Awards, we have been dedicated to discussing issues and finding solutions to the current challenges in the music industry surrounding the digital delivery of music. We have spent the last two years researching these issues and gathering thousands of opinions on issues such as piracy, access to digital music, and file-sharing. -

Platinum Master Songlist

Platinum Master Songlist A B C D E 1 STANDARDS/ JAZZ VOCALS/SWING BALLADS/ E.L. -CONT 2 Ain't Misbehavin' Nat King Cole Over The Rainbow Israel Kamakawiwo'ole 3 Ain't I Good Too You Various Artists Paradise Sade 4 All Of Me Various Artists Purple Rain Prince 5 At Last Etta James Say John Mayer 6 Blue Moon Various Artists Saving All My Love Whitney Houston 7 Blue Skies Eva Cassidy Shower The People James Taylor 8 Don't Get Around Much Anymore Nat King Cole Stay With Me Sam Smith 9 Don't Know Why Nora Jones Sunrise, Sunset Fiddler On The Roof 10 Fly Me To The Moon Frank Sinatra The First Time ( Ever I Saw You Face)Roberta Flack 11 Georgia On My Mind Ray Charles Thinking Out Loud Ed Sheeren 12 Girl From Impanema Various Artists Time After Time Cyndi Lauper 13 Haven't Met You Yet Michael Buble' Trouble Ray LaMontagne 14 Home Michael Buble' Tupelo Honey Van Morrison 15 I Get A Kick Out Of You Frank Sinatra Unforgettable Nat King Cole 16 It Don’t Mean A Thing Various Artists When You Say Nothing At All Alison Krause 17 It Had To Be You Harry Connick Jr. You Are The Best Thing Ray LaMontagne 18 Jump, Jive & Wail Brian Setzer Orchestra You Are The Sunshine Of My Life Stevie Wonder 19 La Vie En Rose Louis Armstrong You Look Wonderful Tonight Eric Clapton 20 Let The Good Times Roll Louie Jordan 21 LOVE Various Artists 22 My Funny Valentine Various Artists JAZZ INSTRUMENTAL 23 Oranged Colored Sky Natalie Cole Birdland Joe Zawinul 24 Paper Moon Nat King Cole Breezin' George Benson 25 Route 66 Nat King Cole Chicago Song David Sanborn 26 Satin Doll Nancy Wilson Fragile Sting 27 She's No Lady Various Artists Just The Two Of Us Grover Washington Jr. -

Adult Contemporary Radio at the End of the Twentieth Century

University of Kentucky UKnowledge Theses and Dissertations--Music Music 2019 Gender, Politics, Market Segmentation, and Taste: Adult Contemporary Radio at the End of the Twentieth Century Saesha Senger University of Kentucky, [email protected] Digital Object Identifier: https://doi.org/10.13023/etd.2020.011 Right click to open a feedback form in a new tab to let us know how this document benefits you. Recommended Citation Senger, Saesha, "Gender, Politics, Market Segmentation, and Taste: Adult Contemporary Radio at the End of the Twentieth Century" (2019). Theses and Dissertations--Music. 150. https://uknowledge.uky.edu/music_etds/150 This Doctoral Dissertation is brought to you for free and open access by the Music at UKnowledge. It has been accepted for inclusion in Theses and Dissertations--Music by an authorized administrator of UKnowledge. For more information, please contact [email protected]. STUDENT AGREEMENT: I represent that my thesis or dissertation and abstract are my original work. Proper attribution has been given to all outside sources. I understand that I am solely responsible for obtaining any needed copyright permissions. I have obtained needed written permission statement(s) from the owner(s) of each third-party copyrighted matter to be included in my work, allowing electronic distribution (if such use is not permitted by the fair use doctrine) which will be submitted to UKnowledge as Additional File. I hereby grant to The University of Kentucky and its agents the irrevocable, non-exclusive, and royalty-free license to archive and make accessible my work in whole or in part in all forms of media, now or hereafter known. -

PAMM Presents Cashmere Cat, Jillionaire + Special Guest Uncle Luke, a Poplife Production, During Miami Art Week in December

PAMM Presents Cashmere Cat, Jillionaire + Special Guest Uncle Luke, a Poplife Production, during Miami Art Week in December Special one-night-only performance to take place on PAMM’s Terrace Thursday, December 1, 2016 MIAMI – November 9, 2016 – Pérez Art Museum Miami (PAMM) announces PAMM Presents Cashmere Cat, Jillionaire + special guest Uncle Luke, a Poplife Production, performance in celebration of Miami Art Week this December. Taking over PAMM’s terrace overlooking Biscayne Bay, Norwegian Musician and DJ Cashmere Cat will headline a one-night-only performance, kicking off Art Basel in Miami Beach. The show will also feature projected visual elements, set against the backdrop of the museum’s Herzog & de Meuron-designed building. The evening will continue with sets by Trinidadian DJ and music producer Jillionaire, with a special guest performance by Miami-based Luther “Uncle Luke” Campbell, an icon of Hip Hop who has enjoyed worldwide success while still being true to his Miami roots. PAMM Presents Cashmere Cat, Jillionaire + special guest Uncle Luke, takes place on Thursday, December 1, 9pm–midnight, during the museum’s signature Miami Art Week/Art Basel Miami Beach celebration. The event is open exclusively to PAMM Sustaining and above level members as well as Art Basel Miami Beach, Design Miami/ and Art Miami VIP cardholders. For more information, or to join PAMM as a Sustaining or above level member, visit pamm.org/support or contact 305 375 1709. Cashmere Cat (b. 1987, Norway) is a musical producer and DJ who has risen to fame with 30+ international headline dates including a packed out SXSW, MMW & Music Hall of Williamsburg, and recent single ‘With Me’, which premiered with Zane Lowe on BBC Radio One & video premiere at Rolling Stone. -

1. Summer Rain by Carl Thomas 2. Kiss Kiss by Chris Brown Feat T Pain 3

1. Summer Rain By Carl Thomas 2. Kiss Kiss By Chris Brown feat T Pain 3. You Know What's Up By Donell Jones 4. I Believe By Fantasia By Rhythm and Blues 5. Pyramids (Explicit) By Frank Ocean 6. Under The Sea By The Little Mermaid 7. Do What It Do By Jamie Foxx 8. Slow Jamz By Twista feat. Kanye West And Jamie Foxx 9. Calling All Hearts By DJ Cassidy Feat. Robin Thicke & Jessie J 10. I'd Really Love To See You Tonight By England Dan & John Ford Coley 11. I Wanna Be Loved By Eric Benet 12. Where Does The Love Go By Eric Benet with Yvonne Catterfeld 13. Freek'n You By Jodeci By Rhythm and Blues 14. If You Think You're Lonely Now By K-Ci Hailey Of Jodeci 15. All The Things (Your Man Don't Do) By Joe 16. All Or Nothing By JOE By Rhythm and Blues 17. Do It Like A Dude By Jessie J 18. Make You Sweat By Keith Sweat 19. Forever, For Always, For Love By Luther Vandros 20. The Glow Of Love By Luther Vandross 21. Nobody But You By Mary J. Blige 22. I'm Going Down By Mary J Blige 23. I Like By Montell Jordan Feat. Slick Rick 24. If You Don't Know Me By Now By Patti LaBelle 25. There's A Winner In You By Patti LaBelle 26. When A Woman's Fed Up By R. Kelly 27. I Like By Shanice 28. Hot Sugar - Tamar Braxton - Rhythm and Blues3005 (clean) by Childish Gambino 29. -

Songs by Title Karaoke Night with the Patman

Songs By Title Karaoke Night with the Patman Title Versions Title Versions 10 Years 3 Libras Wasteland SC Perfect Circle SI 10,000 Maniacs 3 Of Hearts Because The Night SC Love Is Enough SC Candy Everybody Wants DK 30 Seconds To Mars More Than This SC Kill SC These Are The Days SC 311 Trouble Me SC All Mixed Up SC 100 Proof Aged In Soul Don't Tread On Me SC Somebody's Been Sleeping SC Down SC 10CC Love Song SC I'm Not In Love DK You Wouldn't Believe SC Things We Do For Love SC 38 Special 112 Back Where You Belong SI Come See Me SC Caught Up In You SC Dance With Me SC Hold On Loosely AH It's Over Now SC If I'd Been The One SC Only You SC Rockin' Onto The Night SC Peaches And Cream SC Second Chance SC U Already Know SC Teacher, Teacher SC 12 Gauge Wild Eyed Southern Boys SC Dunkie Butt SC 3LW 1910 Fruitgum Co. No More (Baby I'm A Do Right) SC 1, 2, 3 Redlight SC 3T Simon Says DK Anything SC 1975 Tease Me SC The Sound SI 4 Non Blondes 2 Live Crew What's Up DK Doo Wah Diddy SC 4 P.M. Me So Horny SC Lay Down Your Love SC We Want Some Pussy SC Sukiyaki DK 2 Pac 4 Runner California Love (Original Version) SC Ripples SC Changes SC That Was Him SC Thugz Mansion SC 42nd Street 20 Fingers 42nd Street Song SC Short Dick Man SC We're In The Money SC 3 Doors Down 5 Seconds Of Summer Away From The Sun SC Amnesia SI Be Like That SC She Looks So Perfect SI Behind Those Eyes SC 5 Stairsteps Duck & Run SC Ooh Child SC Here By Me CB 50 Cent Here Without You CB Disco Inferno SC Kryptonite SC If I Can't SC Let Me Go SC In Da Club HT Live For Today SC P.I.M.P. -

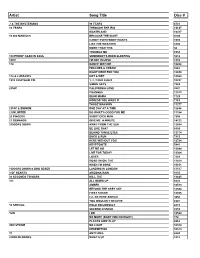

Copy UPDATED KAREOKE 2013

Artist Song Title Disc # ? & THE MYSTERIANS 96 TEARS 6781 10 YEARS THROUGH THE IRIS 13637 WASTELAND 13417 10,000 MANIACS BECAUSE THE NIGHT 9703 CANDY EVERYBODY WANTS 1693 LIKE THE WEATHER 6903 MORE THAN THIS 50 TROUBLE ME 6958 100 PROOF AGED IN SOUL SOMEBODY'S BEEN SLEEPING 5612 10CC I'M NOT IN LOVE 1910 112 DANCE WITH ME 10268 PEACHES & CREAM 9282 RIGHT HERE FOR YOU 12650 112 & LUDACRIS HOT & WET 12569 1910 FRUITGUM CO. 1, 2, 3 RED LIGHT 10237 SIMON SAYS 7083 2 PAC CALIFORNIA LOVE 3847 CHANGES 11513 DEAR MAMA 1729 HOW DO YOU WANT IT 7163 THUGZ MANSION 11277 2 PAC & EMINEM ONE DAY AT A TIME 12686 2 UNLIMITED DO WHAT'S GOOD FOR ME 11184 20 FINGERS SHORT DICK MAN 7505 21 DEMANDS GIVE ME A MINUTE 14122 3 DOORS DOWN AWAY FROM THE SUN 12664 BE LIKE THAT 8899 BEHIND THOSE EYES 13174 DUCK & RUN 7913 HERE WITHOUT YOU 12784 KRYPTONITE 5441 LET ME GO 13044 LIVE FOR TODAY 13364 LOSER 7609 ROAD I'M ON, THE 11419 WHEN I'M GONE 10651 3 DOORS DOWN & BOB SEGER LANDING IN LONDON 13517 3 OF HEARTS ARIZONA RAIN 9135 30 SECONDS TO MARS KILL, THE 13625 311 ALL MIXED UP 6641 AMBER 10513 BEYOND THE GREY SKY 12594 FIRST STRAW 12855 I'LL BE HERE AWHILE 9456 YOU WOULDN'T BELIEVE 8907 38 SPECIAL HOLD ON LOOSELY 2815 SECOND CHANCE 8559 3LW I DO 10524 NO MORE (BABY I'MA DO RIGHT) 178 PLAYAS GON' PLAY 8862 3RD STRIKE NO LIGHT 10310 REDEMPTION 10573 3T ANYTHING 6643 4 NON BLONDES WHAT'S UP 1412 4 P.M. -

Kentucky State Fair Announces Texas Roadhouse Concert Series Lineup 23 Bands Over 11 Nights, All Included with Fair Admission

FOR IMMEDIATE RELEASE Contact: Ian Cox 502-367-5186 [email protected] Kentucky State Fair Announces Texas Roadhouse Concert Series Lineup 23 bands over 11 nights, all included with fair admission. LOUISVILLE, Ky. (May 26, 2021) — The Kentucky State Fair announced the lineup of the Texas Roadhouse Concert Series at a press conference today. Performances range from rock, indie, country, Christian, and R&B. All performances are taking place adjacent to the Pavilion and Kentucky Kingdom. Concerts are included with fair admission. “We’re excited to welcome everyone to the Kentucky State Fair and Texas Roadhouse Concert series this August. We’ve got a great lineup with old friends like the Oak Ridge Boys and up-and-coming artists like Jameson Rodgers and White Reaper. Additionally, we have seven artists that are from Kentucky, which shows the incredible talent we have here in the Commonwealth. This year’s concert series will offer something for everyone and be the perfect celebration after a year without many of our traditional concerts and events,” said David S. Beck, President and CEO of Kentucky Venues. Held August 19-29 during the Kentucky State Fair, the Texas Roadhouse Concert Series features a wide range of musical artists with a different concert every night. All concerts are free with paid gate admission. “The lineup for this year's Kentucky State Fair Concert Series features something for everybody," says Texas Roadhouse spokesperson Travis Doster, "We look forward to being part of this event that brings people together to create memories and fun like we do in our restaurants.” The Texas Roadhouse Concert Series lineup is: Thursday, August 19 Josh Turner with special guest Alex Miller Friday, August 20 Ginuwine with special guest Color Me Badd Saturday, August 21 Colt Ford with special guest Elvie Shane Sunday, August 22 The Oak Ridge Boys with special guest T. -

Analysing Korean Popular Music for Global

Analysing Korean Popular Music for Global Audiences: A Social Semiotic Approach Jonas Robertson Paper originally submitted March 2014 to the Department of English of the University of Birmingham, UK, as an assignment in Multimodal Communication, in partial fulfillment of a Master of Arts in Teaching English as a Foreign / Second Language (TEFL / TESL). Assignment: MMC/13/04 Collect between three and five pieces of music that might be taken to represent a particular artist, genre, style, or mood and present an analysis in terms of the social semiotic approach to music. You might like to concentrate on one or more of the following: - Timing - Sound quality - Melody - Perspective - Tagg’s Sign Typology Reflect briefly on how useful you found the framework in identifying how the pieces of music you chose might work to make meanings. 1 Contents 1 Introduction 3 2 Background of Social Semiotics and Music 3 3 Framework for Analysis 4 4 Analysis A: Fantastic Baby by Big Bang 7 5 Analysis B: I Got a Boy by Girls’ Generation 8 6 Analysis C: The Baddest Female by CL 10 7 Analysis D: La Song by Rain 12 8 Discussion 14 9 Conclusion 15 References 17 Appendices 19 2 1 Introduction This paper documents the analysis of four sample selections of Korean popular music (K-pop) from a social semiotic approach to determine what meanings are conveyed musically. Each of these songs has been selected as an example of K-pop that has been designed to be marketed beyond the borders of Korea, targeting an increasingly global audience. Despite featuring primarily Korean lyrics, these major hits remain popular among the millions of fans overseas who cannot understand most of the words. -

Karaoke Mietsystem Songlist

Karaoke Mietsystem Songlist Ein Karaokesystem der Firma Showtronic Solutions AG in Zusammenarbeit mit Karafun. Karaoke-Katalog Update vom: 13/10/2020 Singen Sie online auf www.karafun.de Gesamter Katalog TOP 50 Shallow - A Star is Born Take Me Home, Country Roads - John Denver Skandal im Sperrbezirk - Spider Murphy Gang Griechischer Wein - Udo Jürgens Verdammt, Ich Lieb' Dich - Matthias Reim Dancing Queen - ABBA Dance Monkey - Tones and I Breaking Free - High School Musical In The Ghetto - Elvis Presley Angels - Robbie Williams Hulapalu - Andreas Gabalier Someone Like You - Adele 99 Luftballons - Nena Tage wie diese - Die Toten Hosen Ring of Fire - Johnny Cash Lemon Tree - Fool's Garden Ohne Dich (schlaf' ich heut' nacht nicht ein) - You Are the Reason - Calum Scott Perfect - Ed Sheeran Münchener Freiheit Stand by Me - Ben E. King Im Wagen Vor Mir - Henry Valentino And Uschi Let It Go - Idina Menzel Can You Feel The Love Tonight - The Lion King Atemlos durch die Nacht - Helene Fischer Roller - Apache 207 Someone You Loved - Lewis Capaldi I Want It That Way - Backstreet Boys Über Sieben Brücken Musst Du Gehn - Peter Maffay Summer Of '69 - Bryan Adams Cordula grün - Die Draufgänger Tequila - The Champs ...Baby One More Time - Britney Spears All of Me - John Legend Barbie Girl - Aqua Chasing Cars - Snow Patrol My Way - Frank Sinatra Hallelujah - Alexandra Burke Aber Bitte Mit Sahne - Udo Jürgens Bohemian Rhapsody - Queen Wannabe - Spice Girls Schrei nach Liebe - Die Ärzte Can't Help Falling In Love - Elvis Presley Country Roads - Hermes House Band Westerland - Die Ärzte Warum hast du nicht nein gesagt - Roland Kaiser Ich war noch niemals in New York - Ich War Noch Marmor, Stein Und Eisen Bricht - Drafi Deutscher Zombie - The Cranberries Niemals In New York Ich wollte nie erwachsen sein (Nessajas Lied) - Don't Stop Believing - Journey EXPLICIT Kann Texte enthalten, die nicht für Kinder und Jugendliche geeignet sind. -

1 Stairway to Heaven Led Zeppelin 1971 2 Hey Jude the Beatles 1968

1 Stairway To Heaven Led Zeppelin 1971 2 Hey Jude The Beatles 1968 3 (I Can't Get No) Satisfaction Rolling Stones 1965 4 Jailhouse Rock Elvis Presley 1957 5 Born To Run Bruce Springsteen 1975 6 I Want To Hold Your Hand The Beatles 1964 7 Yesterday The Beatles 1965 8 Peggy Sue Buddy Holly 1957 9 Imagine John Lennon 1971 10 Johnny B. Goode Chuck Berry 1958 11 Born In The USA Bruce Springsteen 1985 12 Happy Together The Turtles 1967 13 Mack The Knife Bobby Darin 1959 14 Brown Sugar Rolling Stones 1971 15 Blueberry Hill Fats Domino 1956 16 I Heard It Through The Grapevine Marvin Gaye 1968 17 American Pie Don McLean 1972 18 Proud Mary Creedence Clearwater Revival 1969 19 Let It Be The Beatles 1970 20 Nights In White Satin Moody Blues 1972 21 Light My Fire The Doors 1967 22 My Girl The Temptations 1965 23 Help! The Beatles 1965 24 California Girls Beach Boys 1965 25 Born To Be Wild Steppenwolf 1968 26 Take It Easy The Eagles 1972 27 Sherry Four Seasons 1962 28 Stop! In The Name Of Love The Supremes 1965 29 A Hard Day's Night The Beatles 1964 30 Blue Suede Shoes Elvis Presley 1956 31 Like A Rolling Stone Bob Dylan 1965 32 Louie Louie The Kingsmen 1964 33 Still The Same Bob Seger & The Silver Bullet Band 1978 34 Hound Dog Elvis Presley 1956 35 Jumpin' Jack Flash Rolling Stones 1968 36 Tears Of A Clown Smokey Robinson & The Miracles 1970 37 Addicted To Love Robert Palmer 1986 38 (We're Gonna) Rock Around The Clock Bill Haley & His Comets 1955 39 Layla Derek & The Dominos 1972 40 The House Of The Rising Sun The Animals 1964 41 Don't Be Cruel Elvis Presley 1956 42 The Sounds Of Silence Simon & Garfunkel 1966 43 She Loves You The Beatles 1964 44 Old Time Rock And Roll Bob Seger & The Silver Bullet Band 1979 45 Heartbreak Hotel Elvis Presley 1956 46 Jump (For My Love) Pointer Sisters 1984 47 Little Darlin' The Diamonds 1957 48 Won't Get Fooled Again The Who 1971 49 Night Moves Bob Seger & The Silver Bullet Band 1977 50 Oh, Pretty Woman Roy Orbison 1964 51 Ticket To Ride The 
Beatles 1965 52 Lady Styx 1975 53 Good Vibrations Beach Boys 1966 54 L.A. -

CONGRESSIONAL RECORD— Extensions of Remarks E952 HON

E952 CONGRESSIONAL RECORD — Extensions of Remarks June 11, 2014 the Western New York community and wishing HONORING RABBI AVI AND TOBY PERSONAL EXPLANATION her and her family the best in all of their future WEISS endeavors. HON. PETER WELCH OF VERMONT f HON. ELIOT L. ENGEL IN THE HOUSE OF REPRESENTATIVES OF NEW YORK Wednesday, June 11, 2014 TRANSPORTATION, HOUSING AND URBAN DEVELOPMENT, AND RE- IN THE HOUSE OF REPRESENTATIVES Mr. WELCH. Mr. Speaker, I inadvertently LATED AGENCIES APPROPRIA- voted ‘‘no’’ on rollcall vote No. 277, the Nadler TIONS ACT, 2015 Wednesday, June 11, 2014 Amendment to H.R. 4745. As a strong sup- porter of this amendment, my intent was to Mr. ENGEL. Mr. Speaker, there is a saying vote ‘‘yes.’’ SPEECH OF that ‘‘talent does what it can, while genius f does what it must.’’ The inner strength and HON. TULSI GABBARD spirit which moves Rabbi Avi Weiss and his IN MEMORY OF DON DAVIS AND OF HAWAII wife Toby cannot be contained. The genius of HIS REMARKABLE IMPACT ON their shared vision and commitment to social THE GREATER DETROIT COMMU- IN THE HOUSE OF REPRESENTATIVES justice shines brightly and for all to see. NITY Monday, June 9, 2014 Rabbi Weiss’ work isn’t limited to the con- fines of any city or synagogue, nor has he HON. GARY C. PETERS The House in Committee of the Whole OF MICHIGAN shied away from raising his voice to lift the op- House on the state of the Union had under IN THE HOUSE OF REPRESENTATIVES consideration the bill (H.R.