Converting 3D Model Files

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Agisoft PhotoScan User Manual: Standard Edition, Version 1.3

Agisoft PhotoScan User Manual: Standard Edition, Version 1.3. Publication date 2017. Copyright © 2017 Agisoft LLC. Table of Contents: Overview: How it works; About the manual. 1. Installation and Activation: System requirements; GPU acceleration; Installation procedure; Restrictions of the Demo mode; Activation procedure. 2. Capturing photos: Equipment -

N Polys Advanced X3D [Autosaved]

Web3D 2011 Tutorial: Advanced X3D Nicholas Polys: Virginia Tech Yvonne Jung: Fraunhofer IGD Jeff Weekly, Don Brutzman: Naval Postgraduate School Tutorial Outline Recent work in the Web3D Consortium Heading to ISO this month! • X3D : Advanced Features • X3D Basics • Advanced rendering (Yvonne Jung) • Volumes • Geospatial • CAD • Units (Jeff Weekly) • Authoring 2 Open Standards www.web3d.org • Portability • Durability • IP-independence • International recognition and support : the Standard Scenegraph Scene graph for real-time interactive delivery of virtual environments over the web: • Meshes, lights, materials, textures, shaders • Integrated video, audio Event ROUTE • Animation • Interaction • Scripts & Behaviors Sensor • Multiple encodings (ISO = XML, VRML-Classic, Binary) • Multiple Application Programming Interfaces (ISO = ECMA, Java) • X3D 3.3 includes examples for Volume rendering, CAD and Geospatial support! Web3D Collaboration & Convergence W3C ISO OGC - XML - Web3DS - HTML 5 -CityGML - SVG - KML Interoperability Web3D Consortium IETF & Access - Mime types Across Verticals - Extensible 3D (X3D) - Humanoid Animation (H-Anim) - VRML DICOM - N-D Presentation State - DIS - Volume data Khronos - OpenGL, WebGL - COLLADA Adoption Immersive X3D • Virginia Tech Visionarium: VisCube • Multi-screen, clustered stereo rendering • 1920x1920 pixels per wall (x 4) • Infitech Stereo • Wireless Intersense head & wand • Instant Reality 7 VT Visionarium • Output from VMD • Jory Z. Ruscio, Deept Kumar, Maulik Shukla, Michael G. Prisant, T. M. Murali, -

Visualisation and Generalisation of 3D City Models

Visualisation and Generalisation of 3D City Models Bo Mao August 2010 TRITA SoM 2010-08 ISSN 1653-6126 ISRN KTH/SoM/-10/08/-SE ISBN 978-91-7415-715-4 © Bo Mao 2010 Licentiate Thesis Geoinformatics Division Department of Urban Planning and Environment Royal Institute of Technology (KTH) SE-100 44 STOCKHOLM, Sweden Abstract 3D city models have been widely used in different applications such as urban planning, traffic control, disaster management etc. Effective visualisation of 3D city models in various scales is one of the pivotal techniques to implement these applications. In this thesis, a framework is proposed to visualise the 3D city models both online and offline using City Geography Markup Language (CityGML) and Extensible 3D (X3D) to represent and present the models. Then, generalisation methods are studied and tailored to create multi-scale 3D city scenes dynamically. Finally, the quality of generalised 3D city models is evaluated by measuring their visual similarity to the original models. In the proposed visualisation framework, 3D city models are stored in CityGML format, which supports both geometric and semantic information. These CityGML files are parsed to create 3D scenes and visualised with existing 3D standards. Because the input and output in the framework are all standardised, it is possible to integrate city models from different sources and visualise them through different viewers. Considering the complexity of the city objects, generalisation methods are studied to simplify the city models and increase the visualisation efficiency. In this thesis, the aggregation and typification methods are improved to simplify the 3D city models. -

WWDC15 Graphics and Games

Graphics and Games #WWDC15 Enhancements to SceneKit Session 606 Thomas Goossens Amaury Balliet Sébastien Métrot © 2015 Apple Inc. All rights reserved. Redistribution or public display not permitted without written permission from Apple. GameKit APIs High-Level APIs SceneKit SpriteKit Services Model I/O GameplayKit GameController Low-Level APIs Metal OpenGL SceneKit Since Mountain Lion Since iOS 8 SceneKit Particles Physics Physics Fields SpriteKit Scene Editor Available in Xcode 7 Scene Editor Can open and edit DAE, OBJ, Alembic, STL, and PLY files New native file format (SceneKit archives) Scene Editor SceneKit file format SCNScene archived with NSKeyedArchiver NSKeyedArchiver.archiveRootObject(scnScene, toFile: aFile) SCN Scene Editor Build your game levels Scene Editor Particles Physics Physics Fields Actions Scene Editor Shader Modifiers Ambient Occlusion Demo Scene Editor Amaury Balliet Behind the Scene Thomas Goossens Behind the Scene Concept phase Behind the Scene 3D modeling Behind the Scene Production • Final models • Textures • Lighting • Skinned character Behind the Scene Make it awesome • Particles • 2D overlays • Vegetation • Fog Game Sample Collisions with walls Rendered Mesh Collision Mesh Collisions with the Ground Animations Game Sample Animated elements Skinning -

ISO/IEC JTC 1 N13604 ISO/IEC JTC 1 Information Technology

ISO/IEC JTC 1 N13604 2017-09-17 Replaces: ISO/IEC JTC 1 Information Technology Document Type: other (defined) Document Title: Study Group Report on 3D Printing and Scanning Document Source: SG Convenor Project Number: Document Status: This document is circulated for review and consideration at the October 2017 JTC 1 meeting in Russia. Action ID: ACT Due Date: 2017-10-02 Pages: Secretariat, ISO/IEC JTC 1, American National Standards Institute, 25 West 43rd Street, New York, NY 10036; Telephone: 1 212 642 4932; Facsimile: 1 212 840 2298; Email: [email protected] Study Group Report on 3D Printing and Scanning September 11, 2017 ISO/IEC JTC 1 Plenary (October 2017, Vladivostok, Russia) Prepared by the ISO/IEC JTC 1 Study Group on 3D Printing and Scanning Executive Summary The purpose of this report is to assess the possible contributions of JTC 1 to the global market enabled by 3D Printing and Scanning. 3D printing, also known as additive manufacturing, is considered by many sources as a truly disruptive technology. 3D printers range presently from small table units to room size and can handle simple plastics, metals, biomaterials, concrete or a mix of materials. They can be used in making simple toys, airplane engine components, custom pills, large building components or human organs. Depending on process, materials and precision, 3D printer costs range from hundreds to millions of dollars. 3D printing makes possible the manufacturing of devices and components that cannot be constructed cost-effectively with other manufacturing techniques (injection molding, computerized milling, etc.). It also makes possible the fabrication of customized devices, or individual (instead of identical mass-manufactured) units. -

Moving Web 3D Content into GearVR

Moving Web 3D Content into GearVR Mitch Williams Samsung / 3d-online GearVR Software Engineer August 1, 2017, Web 3D BOF SIGGRAPH 2017, Los Angeles Samsung GearVR s/w development goals • Build GearVRf (framework) • GearVR Java API to build apps (also works with JavaScript) • Play nice with other devices • Wands, Controllers, I/O devices • GearVR apps run on Google Daydream! • Performance, New Features • Fight for every Millisecond: • Android OS, Oculus, GPU, CPU, Vulkan • Unity, Unreal, GearVRf (framework) • Enable content creation • Game developers, 3D artists, UI/UX people, Web designers Content Creation for GearVR • 360 movies • Game Editors: Unity, Unreal • GearVRf (framework) • Open source Java API, JavaScript bindings • WebVR • WebGL; frameworks: A-frame, Three.js, React, X3Dom • 3D file formats • Collada, .FBX, gltf, .OBJ & .mtl, etc. using Jassimp • Java binding for Assimp (Asset Import Library) • X3D Why implement X3D in GearVR • Samsung began this effort February, 2016 • X3D is a widely supported file format • Exported by 3DS Max, Blender, Maya, Moto • Or exports VRML and converts to X3D • No other file format had similar capabilities. • Interactivity via JavaScript • Declarative format easy to edit / visualize the scene. • GearVR is not just a VR game console like Sony PSVR • We are a phone, web access device, camera, apps platform • X3D enables web applications: • Complements the game influence in GearVR from Unity, Unreal. • Enables new VR web apps including: Google Maps, Facebook, Yelp JavaScript APIs. GearVR app Build Runtime Live Demo Very daring for the presenter who possesses no art skills. • 3ds Max 1. Animated textured objects 2. Export VRML97, Convert with “Instant Reality” to X3D • Android Studio 1. -

Digital Asset Exchange

Digital Asset Exchange Dr. Rémi Arnaud Sony Computer Entertainment Khronos Group Promoter © Copyright Khronos Group, 2007 - Page 1 •www.khronos.org •Founded in January 2000 by a number of leading media-centric companies, including: 3Dlabs, ATI, Discreet, Evans & Sutherland, Intel, NVIDIA, SGI and Sun Microsystems. (currently more than 100) •Dedicated to the creation of royalty-free open standard APIs to enable the playback of rich media on a wide variety of platforms and devices. •Home of 11 WG, including: OpenGL, OpenGL ES, OpenVG, OpenKODE, OpenSL, COLLADA •New WG introduced at GDC: glFX, Compositing COLLADA as a SCE project •SCE project, started in August 2003 - Goal: raise the quality and productivity of content development - free the content from proprietary formats and API, bring more tools to game developers, lower the barrier to entry for small tool companies, facilitate middleware integration, bring ‘advanced’ features in the main stream, drastically improve artist productivity. •Common description language - Developers get the content in a standard form - Tools can interchange content - Developers can mix and match tools during development - Many specialized tools can be independently created, and incorporated into developer’s content pipeline •COLLAborative Design Activity, SCE brought to the same table the major DCC vendors: Discreet, Alias, Softimage •Released v1.0 specification in August 2004 •Gathered feedback, waiting for tools to provide useful -

Luxion Keyshot ® Amazing Animations and Renderings of 3D Data

Luxion Keyshot ® Amazing Animations and Renderings of 3D Data. In Real Time. KeyShot makes the creation of amazing renderings and animations fast and easy. It supports the widest number of 3D file formats on both Mac and PC. KeyShot is 100% CPU based real-time 3D rendering and animation for both Mac and PC. Import any CAD model and easily start applying colours, materials and lighting with real time ray tracing (rendering results). Used worldwide by top design professionals and independent 3D artists, KeyShot is the complete solution for your 3D visualization needs. Easily add animation to engage your audience with simple turntables, dynamic exploded views, dramatic camera paths and much more. Key Features and Benefits CPU Power Harnessing the raw power of the CPU, KeyShot doesn’t require any special hardware or graphics card. The more cores and threads in your computer, the higher the performance and faster the render times. Supported 3D formats Alias*, AutoCAD, CATIA, Creo (Pro/Engineer), Inventor, NX, Maya*, Parasolid, Rhinoceros, Sketchup, Solid Edge, SolidWorks, Collada, IGES, JT, OBJ, STEP, FBX Including part/camera animation, 3DS, STL, Alembic, 3DXML Plugins (PC only) - Creo, Pro/ENGINEER, Rhinoceros, SolidWorks, SketchUp, 3DS MAX, GrabCAD Who uses Keyshot? With Keyshot, Photo-realistic rendering takes place in real-time. Any changes made to materials, lighting, or cameras are displayed immediately. This allows designers, engineers, photographers and marketers to spend more time being creative and less time wrestling with technical issues and render times. WWW.PDSVISION.COM Keyshot Features and Benefits Keyshot is Flexible The ability to import many popular 3D file formats makes KeyShot the universal rendering tool for CAD models. -

MEDIA LAYER and SERVICE LAYER Media Layer

SNS COLLEGE OF ENGINEERING Kurumbapalayam (Po), Coimbatore – 641 107 AN AUTONOMOUS INSTITUTION Accredited by NBA – AICTE and Accredited by NAAC – UGC with ‘A’ Grade Approved by AICTE, New Delhi & Affiliated to Anna University, Chennai CS8493-OPERATING SYSTEMS MEDIA LAYER AND SERVICE LAYER Media Layer Beautiful graphics and high-fidelity multimedia are hallmarks of the OS X user experience. Take advantage of the technologies of the Media layer to incorporate 2D and 3D graphics, animations, image effects, and professional-grade audio and video functionality into your app. Supported Media Formats OS X supports more than 100 media types, covering a range of audio, video, image, and streaming formats. Table 3-1 lists some of the more common supported file formats. Table 3-1 Partial list of formats supported in OS X. Image formats: PICT, BMP, GIF, JPEG, TIFF, PNG, DIB, ICO, EPS, PDF. Audio file and data formats: AAC, AIFF, WAVE, uLaw, AC3, MPEG-3, MPEG-4 (.mp4, .m4a), .snd, .au, .caf, Adaptive multi-rate (.amr). Video file formats: AVI, AVR, DV, M-JPEG, MPEG-1, MPEG-2, MPEG-4, AAC, OpenDML, 3GPP, 3GPP2, AMC, H.264, iTunes (.m4v), QuickTime (.mov, .qt). Web streaming protocols: HTTP, RTP, RTSP. Graphics Technologies A distinctive quality of any OS X app is high-quality graphics in its user interface. And on a Retina display, users are more aware than ever of your app’s graphics. The simplest, most efficient, and most common way to ensure high-quality graphics in your app is to use the standard views and controls of the AppKit framework, along with prerendered images in different resolutions. -

COLLADA: an Open Standard for Robot File Formats

COLLADA: An Open Standard for Robot File Formats Rosen Diankov*, Ryohei Ueda*, Kei Okada*, Hajime Saito** *JSK Robotics System Laboratory, The University of Tokyo **General Robotix Inc. Sept 8, 2011, 09:30-11:00 and 12:30-14:00 Introduction • Robotics Software Platforms – To share research tools between robots • Missing Link: Standard Robot File Format OpenRAVE for planning EusLisp ROS for PR2 Robot File Format OpenHRP for controllers DARwIn-OP Choregraphe for Nao Webots Standard Robot File Format • Define robot file format standards? – Kinematics, Geometry, Physics – Sensors, Actuators – And more? • Content-scalable – There will always be information that another developer wants to insert – NOT adding new information to extend the original content, BUT adding new content. – Manage new content and allow extensibility of existing content with the scalability. COLLADA Features (1) • COLLADA: COLLAborative Design Activity – http://www.khronos.org/collada/ • XML-based open standard • Originally started as 3D model format Mimic joint • Most recent version (1.5) supports physics, kinematics and b-rep splines. • Supported by Blender, OpenSceneGraph and SolidWorks. Closed link COLLADA Specification • Core content management tags to dictate the referencing structures – Geometries, kinematics bodies, physics bodies and joints as libraries • Defines different types of scenes for graphics, physics and kinematics – Bind joints and links to form these scenes, not one-to-one relations between graphics and kinematics • COLLADA kinematics supports -

Abstractprimitive Class, 149–150 Actionscript, 193 Active Drawing

Index AnimatingAlongPaths class, 198 A AnimationLibrary object, 204 AbstractPrimitive class, 149–150 away3d.core.geom package, 197 ActionScript, 193 away3d.loaders package, 202 active drawing position, 127 Bézier curves, 197 addChild(), 10, 25, 32 bones animation, 207 addEventListener(), 15 Chapter09SampleBase class, code listing, addFace(), 154 194 addSegment(), 129 COLLADA animations, working with, 207 alignToPath property, 201 _createScene(), 196, 199 ambient component, 100 Debug class, 205 AmbientLight3D class, 98–99 ENTER_FRAME event handler, 193 animated materials Flash and, 193 AnimatedTexture class, 117 flash3dbook.ch09.misc package, 194 enabling interactivity, 117 frames, definition of, 193 interactive property, 116–117 GenericTweener class, 194, 197, 201 InteractiveTexture class, 117 GTween, 194 MovieMaterial class, 116 importing 3D animations, 202 smooth property, 117 keyframes, definition of, 193 UsingAnimatedMaterials class, code listing, Loader3D class, 203 116 MD2 animations, working with, 202 VideoMaterial class, 118 Number3D objects, 197, 199 See also extrusion tools; geometry offset property, 201 AnimatedTexture class, 117 Path class, 197 animation path tweening, 197 ActionScript, animating in, 193 PathAnimator class, 197–198, 200 alignToPath property, 201 plane primitive, 196 animating objects in a straight line, 197 progress property, 198 251 INDEX pushback property, 196 away3d.exporters package scale(), 196, 199 outputting 3D data as an ActionScript class, spline, definition of, 197 63 target, 197 away3d.lights package, -

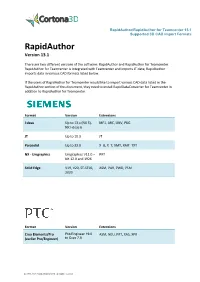

RapidAuthor/RapidAuthor for Teamcenter 13.1 Supported 3D CAD Import Formats

RapidAuthor/RapidAuthor for Teamcenter 13.1 Supported 3D CAD Import Formats. RapidAuthor Version 13.1. There are two different versions of the software: RapidAuthor and RapidAuthor for Teamcenter. RapidAuthor for Teamcenter is integrated with Teamcenter and imports JT data; RapidAuthor imports data in various CAD formats listed below. If the users of RapidAuthor for Teamcenter would like to import various CAD data listed in the RapidAuthor section of this document, they need to install RapidDataConverter for Teamcenter in addition to RapidAuthor for Teamcenter.

| Format | Version | Extensions |
| --- | --- | --- |
| I-deas | Up to 13.x (NX 5), NX I-deas 6 | MF1, ARC, UNV, PKG |
| JT | Up to 10.3 | JT |
| Parasolid | Up to 32.0 | X_B, X_T, XMT, XMT_TXT |
| NX - Unigraphics | Unigraphics V11.0 – NX 12.0 and 1926 | PRT |
| Solid Edge | V19, V20, ST-ST10, 2020 | ASM, PAR, PWD, PSM |
| Creo Elements/Pro (earlier Pro/Engineer) | Pro/Engineer 19.0 to Creo 7.0 | ASM, NEU, PRT, XAS, XPR |

(c) 2011-2021 ParallelGraphics Ltd. All rights reserved.

| Format | Version | Extensions | Import of textures and texture coordinates |
| --- | --- | --- | --- |
| Autodesk Inventor | Up to 2021 | IPT, IAM | Supported |
| AutoCAD | Up to 2019 | DWG, DXF | |
| Autodesk 3DS | All versions | 3ds | Supported |
| Autodesk DWF | All versions | DWF, DWFX | Supported |
| Autodesk FBX | All binary versions, ASCII data 7100 – 7400 | FBX | Supported |
| ACIS | Up to 2020 | SAT, SAB | |
| CATIA V4 | Up to 4.2.5 | MODEL, SESSION, DLV, EXP | |
| CATIA V5 | Up to V5-6 R2020 (R29) | CATDRAWING, CATPART, CATPRODUCT, CATSHAPE, CGR, 3DXML | |
| CATIA V6 / 3DExperience | Up to V5-6 R2019 (R29) | 3DXML | |
| SolidWorks | 97 – 2021 | SLDASM, SLDPRT | Supported |