1. Introduction

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Shebang« Zeile 943 7-Zip 1028 Archive 1030 File-Manager 1029

507SIX.fm Seite 1081 Montag, 23. Januar 2006 12:35 12 Index Betriebssystem-Kernel 88 Binary Code 23 Binarys 23 »Shebang« Zeile 943 BIOS 73 7-Zip 1028 Bochs 250 Archive 1030 bochsrc / bochsrc.txt 252 File-Manager 1029 bximage 255 Kommandozeile 1031 Disk-Images 255 Installation 251 A Konfiguration 252 AbiWord 347 Maus 258 Arbeitsfläche 350 Start 257 Felder 357 Tipps 260 Installation 348 Bootloader 74 Kopf- und Fußzeilen 357 Bootprozess 118 Menüleiste 352 Browser 643 Rechtschreib-Prüfung 358 BSD – Lizenz 42 sonstige Funktionen 360 BSD-Projekt 28, 86 Stile 353 FreeBSD 87 Tabellen 355 Funktionen 88 Wörterbücher 359 NetBSD 87 Anti-Viren Programme 1047 OpenBSD 87 Apache 852 BSD-Varianten 132 Container 866 htaccess 865 C htpasswd 872 Chat-Rooms 58 httpd.conf 858 chmod 92 Installation 853 Code – Forking 28 Konfiguration 858 Community 28, 58 Konfigurationseinstellungen 861 Compiere 498 mod_perl 956 Anforderungen 499 Pfadangaben 861 Funktionen 501 starten 873 Installation 500 Zugriffskontrolle 867 Compiler 23, 920, 1016 Assembler Code 23 Composer Austauschbarkeit der Dateien 20 Farben 764 HTML Quelltext 762 B HTML Tags 762 Balsa 698 Normalansicht 761 Filter 705 Vorschau 763 Identität 705 Computer konfigurieren 700 Betriebssystem 74 mails 703 Startprozesses 73 Signatur 705 Concurrent Versions System (CVS) 52 Start 699 Content Management-Systeme 777 Bayesian-Algorithmus 683 ConTEXT 982 Benutzer-Account 94 Einstellungen 984 Betriebssystem-Kern 74 Installation 983 Index 1081 507SIX.fm Seite 1082 Montag, 23. Januar 2006 12:35 12 Navigation 985 Funktionen -

ACS – the Archival Cytometry Standard

ACS – the Archival Cytometry Standard (http://flowcyt.sf.net/acs/latest.pdf). International Society for Advancement of Cytometry Candidate Recommendation DRAFT. Document Status: The Archival Cytometry Standard (ACS) has undergone several revisions since its initial development in June 2007. The current proposal is an ISAC Candidate Recommendation Draft. It is assumed, however not guaranteed, that significant features and design aspects will remain unchanged for the final version of the Recommendation. This specification has been formally tested to comply with the W3C XML schema version 1.0 specification but no position is taken with respect to whether a particular software implementing this specification performs according to medical or other valid regulations. The work may be used under the terms of the Creative Commons Attribution-ShareAlike 3.0 Unported license. You are free to share (copy, distribute and transmit), and adapt the work under the conditions specified at http://creativecommons.org/licenses/by-sa/3.0/legalcode. Disclaimer of Liability: The International Society for Advancement of Cytometry (ISAC) disclaims liability for any injury, harm, or other damage of any nature whatsoever, to persons or property, whether direct, indirect, consequential or compensatory, directly or indirectly resulting from publication, use of, or reliance on this Specification, and users of this Specification, as a condition of use, forever release ISAC from such liability and waive all claims against ISAC that may in any manner arise out of such liability. ISAC further disclaims all warranties, whether express, implied or statutory, and makes no assurances as to the accuracy or completeness of any information published in the Specification. -

Windows, Networking and Software FAQ, Tips, Hints, and Wisdom for Windows 98x/XP

Windows, Networking and Software FAQ, Tips, Hints, and Wisdom for Windows 98x/XP. Contents: Disclaimer; Windows 98SE; Desktop: Right Click – Deleting Programs from List; Shortcut – Deleting; Shortcuts – Deleting the Arrow; Shortcuts – Some useful ones (Shutdown and Restart); Networking: Crossover Cables – Using to network two computers; FAQ (Unofficial) Win95/98; IP Addresses -

Comunicado Técnico 23

Comunicado Técnico 23, ISSN 1415-2118, April 2007, Campinas, SP. Storage and transport of large files. André Luiz dos Santos Furtado, Fernando Antônio de Pádua Paim. Abstract: The growth in the volume of information carried by a single individual or by a team is a worldwide trend, and every day we are confronted with media of greater data storage capacity. The aim of this communication is to explore some options for splitting and transporting large files. It presents tutorials for WinRAR, 7-Zip, and ALZip, programs for file compression. It describes the use of HJSplit, free software that allows files to be split. In addition, it presents two sites for sharing and hosting files, RapidShare and MediaFire. 1. Introduction: The growth in the volume of information carried by a single individual or by a team is a worldwide trend. In the early 1990s, even in developed countries, a computer with a storage capacity of 12 GB was innovative. Magnetic tapes began to be replaced in the 1950s, when IBM launched the forerunner of today's hard disks, the RAMAC Computer, with a capacity of 5 MB and weighing approximately one ton (ESTADO DE SÃO PAULO, 2006; PCWORLD, 2006) (Figs. 1 and 2). Figure 1 - Transport of the hard disk of the RAMAC Computer, created by IBM in 1956, with a capacity of 5 MB. Figure 2 - Operations room of the RAMAC Computer. Three decades later, the first personal computers had hard disks with a capacity significantly greater than the RAMAC's, around 10 MB; they consumed less energy, cost less and, obviously, had a mass of less than 100 kilograms. -

www.rz.uni-frankfurt.de

Hochschulrechenzentrum (HRZ), HRZ-MITTEILUNGEN, www.rz.uni-frankfurt.de. In this issue: Hochschulrechenzentrum opens Service Center Riedberg; Video conferencing; New learning platform successfully launched; Visit from Bangkok; HRZ streaming server; 25 years of RRZN handbooks; Registration forms: how the HRZ can help you plan events; Tried and tested from the sunny side of the HRZ, thin clients: SunRays; For newcomers; News about SPSS; The software question: ZIP-compressed folders; HRZ services. Issue 11, winter semester 2007/2008. Editorial: The site planning for the university computing center (Hochschulrechenzentrum) required by the reorganization of our university's locations is taking concrete shape. With the move out of the Juridicum ("Mehrzweckgebäude"), the Library Data Processing department will be housed in the rooms of the University Library (still on the Bockenheim campus); support for student administration and for university administration, as well as the print center, will be located in the university's new administration building to be built on the Westend campus; and Central Services will be stationed on the Riedberg campus. The concepts for carrying out this move with the least possible downtime of the core IT services have been worked out. During 2008 and 2009, the technical prerequisites are to be put in place step by step, enabling a low-disruption move while creating a comprehensively redundant IT system for future reliability. In the HRZ-Mitteilungen -

Compactadores / Descompactadores De Arquivos (Zip, Rar, FLT)

File Compressors / Decompressors (Zip, Rar, FLT). Rafael Ferreira Coelho, Ernandes Rodrigo Norberto. UFMG – Escola de Ciência da Informação, Curso de Biblioteconomia, Disciplina de Introdução à Informática, Av. Antônio Carlos, 6627, 31270-010, Belo Horizonte, MG, Brasil. Abstract: This article presents the most widely used programs for compressing and decompressing files. The most widely used format is zip, although other formats such as rar are also presented. 1 Introduction: Compressed files have always been part of computing, and with the emergence and growth of the Internet they became far more prominent. Computer files come in many kinds: large, small, moderate, excessively large, those that can be highly compressed, those that compress little, and those whose size does not change at all. For these files to be shared quickly, they must be compressed to reduce their size. Grouping a large number of files into a single archive often also makes them easier to transport, even when they do not compress much. But for files to be compressed or decompressed, someone has to perform the task, and it is here that compression programs come in, and ... 3 The most widely used programs: Of the countless compression programs, we cover the most widely used: WinZip, Filzip, and WinRAR. 3.1 WinZip: WinZip, developed by WinZip Computing, Inc., is now at version 9.0 [2]. It is an extremely popular program on the Internet and on personal computers around the world. It is used mainly to compress and decompress all kinds of computer files, which greatly eases sending them over the Internet or writing data to floppy disks or CD-ROMs. The user has a 21-day trial period, after which a license must be purchased for a fee. -

Open Source Einsetzen Und Integrieren

At a glance: Foreword; 1 Introduction; 2 Operating systems and more; 3 Office applications; 4 Databases; 5 Internet/Intranet; 6 Programming; 7 Miscellaneous; Appendix; Index. Contents: Foreword: The symbols in this book; Language conventions. 1 Introduction: 1.1 The nature of free software; 1.2 License models and the advantages derived from them; 1.2.1 BSD license; 1.2.2 GNU GPL – General Public Licence; 1.2.3 GNU Library/Lesser General Public License; 1.2.4 Other "free" license models; 1.3 Typical sources of free software and support options -

Freeware-List.Pdf

FreeWare List. A list of free software from www.neowin.net, a great forum with a large number of members! It is full of information, and questions posted are normally answered very quickly. 3D Graphics: 3DVia - http://www.3dvia.com...re/3dvia-shape/ Anim8or - http://www.anim8or.com/ Art Of Illusion - http://www.artofillusion.org/ Blender - http://www.blender3d.org/ CreaToon - http://www.creatoon.com/index.php DAZ Studio - http://www.daz3d.com/program/studio/ Freestyle - http://freestyle.sourceforge.net/ Gelato - http://www.nvidia.co...ge/gz_home.html K-3D - http://www.k-3d.org/wiki/Main_Page Kerkythea - http://www.kerkythea...oomla/index.php Now3D - http://digilander.li...ng/homepage.htm OpenFX - http://www.openfx.org OpenStages - http://www.openstages.co.uk/ Pointshop 3D - http://graphics.ethz...loadPS3D20.html POV-Ray - http://www.povray.org/ SketchUp - http://sketchup.google.com/ Sweet Home 3D - http://sweethome3d.sourceforge.net/ Toxic - http://www.toxicengine.org/ Wings 3D - http://www.wings3d.com/ Anti-Virus: a-squared - http://www.emsisoft..../software/free/ Avast - http://www.avast.com...ast_4_home.html AVG - http://free.grisoft.com/ Avira AntiVir - http://www.free-av.com/ BitDefender - http://www.softpedia...e-Edition.shtml ClamWin - http://www.clamwin.com/ Microsoft Security Essentials - http://www.microsoft...ity_essentials/ Anti-Spyware: Ad-aware SE Personal - http://www.lavasoft....se_personal.php GeSWall - http://www.gentlesec...m/download.html Hijackthis - http://www.softpedia...ijackThis.shtml IObit Security 360 - http://www.iobit.com/beta.html Malwarebytes' -

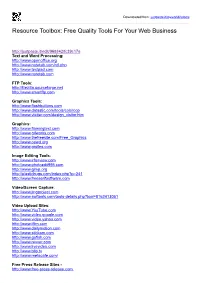

Resource Toolbox: Free Quality Tools for Your Web Business

Downloaded from: justpaste.it/myworld2utools Resource Toolbox: Free Quality Tools For Your Web Business Text and Word Processing: http://www.openoffice.org http://www.notetab.com/ntl.php http://www.textpad.com http://www.notetab.com FTP Tools: http://filezilla.sourceforge.net http://www.smartftp.com Graphics Tools: http://www.flashbuttons.com http://www.datastic.com/tools/colorcop http://www.vletter.com/design_visitor.htm Graphics: http://www.flamingtext.com http://www.gifworks.com http://www.thefreesite.com/Free_Graphics http://www.oswd.org http://www.grsites.com Image Editing Tools: http://www.irfanview.com http://www.photoedit995.com http://www.gimp.org http://plasticbugs.com/index.php?p=241 http://www.freeserifsoftware.com Video/Screen Capture: http://www.jingproject.com http://www.swftools.com/tools-details.php?tool=8162413051 Video Upload Sites: http://www.YouTube.com http://www.video.google.com http://www.video.yahoo.com http://www.ifilm.com http://www.dailymotion.com http://www.stickam.com http://www.gofish.com http://www.revver.com http://www.livevideo.com http://www.blip.tv http://www.metacafe.com/ Free Press Release Sites: http://www.free-press-release.com http://www.24-7pressrelease.com http://www.i-newswire.com http://www.pr.com http://www.pressmethod.com http://www.1888pressrelease.com http://www.prbuzz.com http://www.prleap.com http://www.clickpress.com http://www.prfree.com http://www.addpr.com http://www.eworldwire.com http://www.prurgent.com http://www.theopenpress.com -

America's Army

america's army - http://www.americasarmy.com/ flightgear (flight simulator) - http://www.flightgear.org/ neo sonic universe - http://gamingbrasil.mundoperdido.com.br alien arena 2007 - http://red.planetarena.org/aquire.html cube 2 - http://www.cubeengine.com/index.php4 hidden and dangerous deluxe full - http://www.gathering.com/hd2/hddeluxe.html torcs - http://torcs.sourceforge.net/index.php s.w.i.n.e. - http://www.stormregion.com/index.php?sid=4...=swine_download carom3d: http://carom3d.com/ capman: http://www.jani-immonen.net/capman/ cubert badbone: http://cubert.deirdrakiai.com/ enemy territory: http://www.splashdamage.com/ gunbound brasil: http://www.gbound.com.br/ kartingrace: http://www.steinware.dk/ kquery: http://www.kquery.com/ little fighter 2: http://littlefighter.com/ mu online: http://www.muonline.com/ racer: http://www.racer.nl/ soldat: http://www.soldat.pl/main.php pacwars: http://pw2.sourceforge.net/ teamspeak: http://www.goteamspeak.com/news.php p2p - file sharing programs abc: http://pingpong-abc.sf.net/ ares: http://aresgalaxy.sourceforge.net/ azureus: http://azureus.sourceforge.net/ bitcomet: http://www.bitcomet.com/ bittorrent++: http://sourceforge.net/projects/btplusplus/ bt++: http://btplusplus.sourceforge.net/ dc++: http://www.dcpp.net/ edonkey: http://www.edonkey2000.com/ emule: http://www.emule-project.net/ exeem: http://www.exeem.com/ imesh: http://www.imesh.com/ kazaa: http://www.kazaa.com limewire: http://www.limewire.com/ mldonkey: http://mldonkey.berlios.de/modules.php?name=downloads onemx: http://www.onemx.com/ -

pCOWeb User Manual

pCOWeb User manual. READ AND SAVE THESE INSTRUCTIONS. pCOWeb +030220471 – rel. 2.0 – 28.02.2011. WARNINGS: CAREL bases the development of its products on decades of experience in HVAC, on the continuous investments in technological innovations to products, procedures and strict quality processes with in-circuit and functional testing on 100% of its products, and on the most innovative production technology available on the market. CAREL and its subsidiaries nonetheless cannot guarantee that all the aspects of the product and the software included with the product respond to the requirements of the final application, despite the product being developed according to state-of-the-art techniques. The customer (manufacturer, developer or installer of the final equipment) accepts all liability and risk relating to the configuration of the product in order to reach the expected results in relation to the specific final installation and/or equipment. CAREL may, based on specific agreements, act as a consultant for the positive commissioning of the final unit/application, however in no case does it accept liability for the correct operation of the final equipment/system. The CAREL product is a state-of-the-art product, whose operation is specified in the technical documentation supplied with the product or can be downloaded, even prior to purchase, from the website www.carel.com. Each CAREL product, in relation to its advanced level of technology, requires setup / configuration / programming / commissioning to be able to operate in the best possible way for the specific application. -

Slobodan Softver (Open Source Software)

Slobodan softver (Open Source Software). Free software (slobodan softver) is software that can be used, copied, modified, and redistributed without any restrictions. Freedom from restrictions is the central concept, and the opposite of free software is proprietary software (this does not refer to whether the software costs money or not). The usual way of distributing software as free is for it to be licensed under a free license (or placed in the public domain) and for its source code to be publicly available. List of free software. 3D Graphics: 3Delight Free - http://www.3delight.com/index.htm Anim8or - http://www.anim8or.com/ Blender - http://www.blender3d.org/ Now3D - http://digilander.libero.it/giulios/Eng/homepage.htm OpenFX - http://www.openfx.org POV-Ray - http://www.povray.org/ Terragen - http://www.planetside.co.uk/terragen/ Toxic - http://www.toxicengine.org/ Wings 3D - http://www.wings3d.com/ Anti-spam programs: K9 - http://www.keir.net/k9.html MailWasher - http://www.mailwasher.net/ POPFile - http://popfile.sourceforge.net/ SpamBayes - http://spambayes.sourceforge.net/. SpyBot Search & Destroy - http://spybot.safer-networking.de/ SpywareBlaster - http://www.javacoolsoftware.com/spywareblaster.html WinPatrol - http://www.winpatrol.com/ Anti-Virus: AntiVir - http://www.free-av.com/ Avast - http://www.avast.com/i_idt_1018.html AVG - http://free.grisoft.com/ BitDefender - http://www.bitdefender.com ClamWin - http://www.clamwin.com/ CyberHawk - http://www.novatix.com/cyberhawk/ (Beta) Audio Players: Billy - http://www.sheepfriends.com/?page=billy