Scalability Rules: Principles for Scaling Web Sites

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

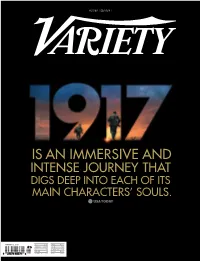

Is an Immersive and Intense Journey That Digs Deep Into Each of Its Main Characters’ Souls

ADVERTISEMENT: "Is an immersive and intense journey that digs deep into each of its main characters' souls." 3 Golden Globe® nominations including Best Picture (Drama) and Best Director (Sam Mendes). 8 Critics' Choice Awards nominations including Best Picture and Best Director (Sam Mendes). "The best picture of the year is like no film you have seen." "A masterwork of the first order." "You couldn't look away if you wanted to." VARIETY.COM, January 2, 2020. US $9.99, Japan ¥1280, Canada $11.99, China ¥80, UK £8, Hong Kong $95, Europe €9, Russia 400, Australia $14, India 800. Golden Globes. Her Moment: Following acclaimed roles in 'Bombshell' and 'Once Upon a Time in Hollywood,' actor-producer Margot Robbie storms the new year with a flurry of creative endeavors. By Kate Aurthur. Hollywood on Her Terms: Margot Robbie is charging ahead as both an actor and a producer, carving a path for other women along the way. By Kate Aurthur. Creative Champion: AMC Networks programming chief Sarah Barnett competes with streamers by nurturing creators of new cultural hits. By Daniel Holloway. Variety Digital Innovators 2020: Twelve leading minds in tech and entertainment are honored for pushing content boundaries. By Todd Spangler and Janko Roettgers. (Cover and this page) Photographs by Art Streiber; wardrobe: Kate Young/The Wall Group; makeup: Pati Dubroff/Forward Artists/Chanel; hair: Bryce Scarlett/The Wall Group/Moroccan Oil; manicure: Tom Bachik; dress: Chanel.

Steve Yegge on Google and Amazon Platforms

I was at Amazon for about six and a half years, and now I've been at Google for that long. One thing that struck me immediately about the two companies -- an impression that has been reinforced almost daily -- is that Amazon does everything wrong, and Google does everything right. Sure, it's a sweeping generalization, but a surprisingly accurate one. It's pretty crazy. There are probably a hundred or even two hundred different ways you can compare the two companies, and Google is superior in all but three of them, if I recall correctly. I actually did a spreadsheet at one point but Legal wouldn't let me show it to anyone, even though recruiting loved it. I mean, just to give you a very brief taste: Amazon's recruiting process is fundamentally flawed by having teams hire for themselves, so their hiring bar is incredibly inconsistent across teams, despite various efforts they've made to level it out. And their operations are a mess; they don't really have SREs and they make engineers pretty much do everything, which leaves almost no time for coding - though again this varies by group, so it's luck of the draw. They don't give a single shit about charity or helping the needy or community contributions or anything like that. Never comes up there, except maybe to laugh about it. Their facilities are dirt-smeared cube farms without a dime spent on decor or common meeting areas. Their pay and benefits suck, although much less so lately due to local competition from Google and Facebook. -

AMAZON.COM, INC. (Exact Name of Registrant As Specified in Its Charter) Delaware 91-1646860 (State Or Other Jurisdiction (I.R.S

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, Washington, D.C. 20549. FORM 10-K. (Mark One) [X] ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 for the fiscal year ended December 31, 2004, or [ ] TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 for the transition period from ___ to ___. Commission File No. 000-22513. AMAZON.COM, INC. (Exact name of registrant as specified in its charter). Delaware (State or other jurisdiction of incorporation or organization). 91-1646860 (I.R.S. Employer Identification No.). 1200 12th Avenue South, Suite 1200, Seattle, Washington 98144-2734, (206) 266-1000 (Address and telephone number, including area code, of registrant's principal executive offices). Securities registered pursuant to Section 12(b) of the Act: None. Securities registered pursuant to Section 12(g) of the Act: Common Stock, par value $.01 per share. Indicate by check mark whether the registrant (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days. Yes [X] No [ ]. Indicate by check mark if disclosure of delinquent filers pursuant to Item 405 of Regulation S-K is not contained herein, and will not be contained, to the best of registrant's knowledge, in definitive proxy or information statements incorporated by reference in Part III of this Form 10-K or any amendment to this Form 10-K.

Minden Eladó

Brad Stone, Minden eladó: Jeff Bezos és az Amazon kora (Everything for Sale: Jeff Bezos and the Age of Amazon). Translated from: Brad Stone: Everything Store – Jeff Bezos and the Age of Amazon. This edition published by arrangement with Little, Brown, and Company, New York, USA. All rights reserved. © Brad Stone, 2013. Translation © Dufka Hajnalka, 2014. Edited by: Csizner Ildikó. Cover design: Megyeri Gabriella. HVG Könyvek. Publishing director: Budaházy Árpád. Managing editor: Koncz Gábor. ISBN 978-963-304-197-0. All rights reserved. This book or any part of it may not be reproduced, stored in a data system, or communicated in any form or by any means (electronic, photographic, or otherwise) without the publisher's permission. Published by HVG Kiadó Zrt., Budapest, 2016. Responsible publisher: Szauer Péter. www.hvgkonyvek.hu. Prepress: Sörfőző Zsuzsa. Printing: Gyomai Kner Nyomda Zrt. Responsible manager: Fazekas Péter. For Isabella and Calista Stone. "When you are eighty years old, and in a quiet moment of reflection narrating for only yourself the most personal version of your life story, the telling that will be most compact and meaningful will be the series of choices you have made. In the end, we are our choices." Jeff Bezos, commencement speech at Princeton University, May 30, 2010. Contents: Prologue. Part I: Faith. Chapter 1: The House of Quants. Chapter 2: The Book of Bezos. Chapter 3: Fever Dreams. Chapter 4: Milliravi. Part II: Literary Influences. Chapter 5: Rocket Boy. Chapter 6: Chaos Theory. Chapter 7: Not a Retailer, a Tech-no-lo-gy Company! Chapter 8: Fiona. Part III: Missionary or Mercenary? Chapter 9: Ascent. Chapter 10: Practical Ideals. Chapter 11: The Kingdom of the Question Mark. Epilogue. Acknowledgments. Appendix: Jeff Bezos's Reading List. Notes. Index. Prologue: In the early 1970s, an ambitious advertising executive, Julie Ray, became fascinated with an unusual public-school program in Houston, Texas, that supported gifted children.

Amazon.com, Inc.

To our shareholders: Long-term thinking is both a requirement and an outcome of true ownership. Owners are different from tenants. I know of a couple who rented out their house, and the family who moved in nailed their Christmas tree to the hardwood floors instead of using a tree stand. Expedient, I suppose, and admittedly these were particularly bad tenants, but no owner would be so short-sighted. Similarly, many investors are effectively short-term tenants, turning their portfolios so quickly they are really just renting the stocks that they temporarily “own.” We emphasized our long-term views in our 1997 letter to shareholders, our first as a public company, because that approach really does drive making many concrete, non-abstract decisions. I’d like to discuss a few of these non-abstract decisions in the context of customer experience. At Amazon.com, we use the term customer experience broadly. It includes every customer-facing aspect of our business—from our product prices to our selection, from our website’s user interface to how we package and ship items. The customer experience we create is by far the most important driver of our business. As we design our customer experience, we do so with long-term owners in mind. We try to make all of our customer experience decisions—big and small—in that framework. For instance, shortly after launching Amazon.com in 1995, we empowered customers to review products. While now a routine Amazon.com practice, at the time we received complaints from a few vendors, basically wondering if we understood our business: “You make money when you sell things—why would you allow negative reviews on your website?” Speaking as a focus group of one, I know I’ve sometimes changed my mind before making purchases on Amazon.com as a result of negative or lukewarm customer reviews. -

“The Everything Store”

Here are some parts that I have marked in my copy of the book “The Everything Store” by Brad Stone. 1. “To be Amazoned means ‘to watch helplessly as the online upstart from Seattle vacuums up the customers and profits of your traditional brick-and-mortar business.’” 2. “Despite the recent rise of its stock price to vertiginous heights, Amazon remains a unique and uniquely puzzling company. The bottom line on its balance sheet is notoriously anemic, and in the midst of its frenetic expansion into new markets and product categories, it actually lost money in 2012. But Wall Street hardly seems to care. With his consistent proclamations that he is building his company for the long term, Jeff Bezos has earned so much faith from his shareholders that investors are willing to patiently wait for the day when he decides to slow his expansion and cultivate healthy profits.” 3. “Bezos has proved quite indifferent to the opinions of others. He is an avid problem solver, a man who has a chess grand master’s view of the competitive landscape, and he applies the focus of an obsessive-compulsive to pleasing customers and providing services like free shipping. He has vast ambitions – not only for Amazon, but to push the boundaries of science and remake the media. In addition to funding his own rocket company, Blue Origin, Bezos acquired the ailing Washington Post newspaper company in August 2013 for $250 million in a deal that stunned the media industry.” 4. “Bezos likes

UNITED STATES SECURITIES and EXCHANGE COMMISSION Washington, D.C

To our shareholders: At Amazon’s current scale, planting seeds that will grow into meaningful new businesses takes some discipline, a bit of patience, and a nurturing culture. Our established businesses are well-rooted young trees. They are growing, enjoy high returns on capital, and operate in very large market segments. These characteristics set a high bar for any new business we would start. Before we invest our shareholders’ money in a new business, we must convince ourselves that the new opportunity can generate the returns on capital our investors expected when they invested in Amazon. And we must convince ourselves that the new business can grow to a scale where it can be significant in the context of our overall company. Furthermore, we must believe that the opportunity is currently underserved and that we have the capabilities needed to bring strong customer-facing differentiation to the marketplace. Without that, it’s unlikely we’d get to scale in that new business. I often get asked, “When are you going to open physical stores?” That’s an expansion opportunity we’ve resisted. It fails all but one of the tests outlined above. The potential size of a network of physical stores is exciting. However: we don’t know how to do it with low capital and high returns; physical-world retailing is a cagey and ancient business that’s already well served; and we don’t have any ideas for how to build a physical world store experience that’s meaningfully differentiated for customers. When you do see us enter new businesses, it’s because we believe the above tests have been passed. -

Annual Report to Our Shareholders: In Many Ways, Amazon.com Is Not a Normal Store

2002 ANNUAL REPORT. To our shareholders: In many ways, Amazon.com is not a normal store. We have deep selection that is unconstrained by shelf space. We turn our inventory 19 times in a year. We personalize the store for each and every customer. We trade real estate for technology (which gets cheaper and more capable every year). We display customer reviews critical of our products. You can make a purchase with a few seconds and one click. We put used products next to new ones so you can choose. We share our prime real estate (our product detail pages) with third parties, and, if they can offer better value, we let them. One of our most exciting peculiarities is poorly understood. People see that we're determined to offer both world-leading customer experience and the lowest possible prices, but to some this dual goal seems paradoxical if not downright quixotic. Traditional stores face a time-tested tradeoff between offering high-touch customer experience on the one hand and the lowest possible prices on the other. How can Amazon.com be trying to do both? The answer is that we transform much of customer experience, such as unmatched selection, extensive product information, personalized recommendations, and other new software features, into largely a fixed expense. With customer experience costs largely fixed (more like a publishing model than a retailing model), our costs as a percentage of sales can shrink rapidly as we grow our business. Moreover, customer experience costs that remain variable, such as the variable portion of fulfillment costs, improve in our model as we reduce defects.

Rip Rowan

Rip Rowan - 11:18 AM (edited) - Public. The best article I've ever read about architecture and the management of IT. ***UPDATE*** This post was intended to be shared privately and was accidentally made public. Thanks to +Steve Yegge for allowing us to keep it out there. It's the sort of writing people do when they think nobody is watching: honest, clear, and frank. The world would be a better place if more people wrote this sort of internal memoranda, and even better if they were allowed to write it for the outside world. Hopefully Steve will not experience any negative repercussions from Google about this. On the contrary, he deserves a promotion. ***UPDATE #2*** This post has received a lot of attention. For anyone here who arrived from The Greater Internet - I stand ready to remove this post if asked. As I mentioned before, I was given permission to keep it up. Google's openness to allow us to keep this message posted on its own social network is, in my opinion, a far greater asset than any SaS platform. In the end, a company's greatest asset is its culture, and here, Google is one of the strongest companies on the planet. Steve Yegge originally shared this post: Stevey's Google Platforms Rant. I was at Amazon for about six and a half years, and now I've been at Google for that long.

Der Allesverkäufer

Der Allesverkäufer: Jeff Bezos und das Imperium von Amazon (The Everything Seller: Jeff Bezos and the Empire of Amazon). By Brad Stone, Bernhard Schmid. Expanded edition, 2015. Paperback, 416 pages. ISBN 978 3 593 50329 5. Format (W x H): 13.5 x 21.5 cm. Excerpt. PROLOGUE: THE STORE FOR EVERYTHING. In the early 1970s, an enterprising advertising executive named Julie Ray developed a fascination with an unconventional public program for highly gifted elementary school students in Houston, Texas. Her son was among the first students in what later became known as the "Vanguard Program," a concept for fostering creativity, independence, and open, unconventional thinking. Ray was so taken with both the curriculum and the community of passionate teachers and parents that she set out to find similar schools and planned a book about the then-emerging gifted-education movement in Texas. A few years later, when her son was already in high school, Ray visited the program again as part of her work on the book. It was housed in a wing of the elementary school in Houston's affluent River Oaks district, whose principal assigned one of his students, an articulate sixth-grader with sandy hair, to accompany her. The boy's parents asked only that she withhold his real name in the book.

Disruptive Tech 1Q20.Indd

BEST PRACTICES: HOW AMAZON DOES IT. An exclusive look at decision-making inside the world’s most pioneering digital company. By Ram Charan. Corporate Board Member, First Quarter 2020. The digital age demands high-velocity, high-quality decisions, yet decision-making in traditional companies is hindered by so many hierarchical layers, coordination committees and standing councils that their very survival is at stake. Without algorithms and data at the center of their decision-making, traditional companies cannot compete with today’s digital giants. In 1994, Amazon started creating the greatest revolution in decision-making with one of the oldest cornerstones of success in business: customer obsession. For his new book, The Amazon Management System, world-renowned business adviser, author and speaker Ram Charan drew on publicly available information and interviews with high-level former and current Amazon employees to crystalize some of the ideas and insights developed by Jeff Bezos and his team. This excerpt details how CEOs, managers and directors can increase the velocity and quality of their decisions to gain an upper hand against their traditional competitors. Amazon’s Jeff Bezos focused on the oldest of old business principles (extraordinary customer obsession, to the level that each customer is treated individually) and turned it into the ultimate business purpose of his company. As everyone knows, digitization was the engine that enabled the company to treat each one of its more than 100 million customers as if they were shopping at a corner store in a small town.

Jeff Bezos E a Era Da Amazon Brad Stone

JEFF BEZOS E A ERA DA AMAZON (Jeff Bezos and the Age of Amazon), Brad Stone. Named Business Book of the Year by the Financial Times/Goldman Sachs. “It is a great lesson in business administration and the biography of a visionary. It tells how Bezos built the biggest online store in the world, how many blunders he made, and how he became an icon by pursuing a single idea: the power of the network.” Elio Gaspari, columnist for Folha de S.Paulo and O Globo. BRAD STONE has covered Amazon and technology in Silicon Valley for fifteen years for publications such as Newsweek and The New York Times. He writes for Bloomberg Businessweek and lives in San Francisco. © Cynthia E. Wood. A pioneer in selling books over the internet, Amazon was at the forefront of the first great dot-com fever. But Jeff Bezos, its visionary creator, would not be content with a cool online bookstore: he wanted Amazon to offer an unlimited selection of products at radically low prices, and to become “the everything store.” To put that vision into practice, Bezos developed a corporate culture of relentless ambition and high secrecy that few truly knew. Journalist Brad Stone obtained unprecedented access to Amazon employees and executives, as well as to Bezos's family and friends. The result is an analysis of what life is like inside the online commerce giant, with details that expose a world of limitless competitiveness. Like other technology pioneers such as Steve Jobs, Bill Gates and Mark Zuckerberg, Bezos does not yield in his tireless search for new markets, leading Amazon to launch ventures such as the Kindle and cloud computing and transforming retail in the same ... “Jeff Bezos is one of the most visionary and determined entrepreneurs of our era, who, just like Steve Jobs, transforms and invents markets.