Modern Cryptography Applied Mathematics for Encryption and Information Security

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Efficiency of Tuna Purse-Seiners and Effective Effort’ « Efficacité Des Senneurs Thoniers Et Efforts Réels » (ESTHER)

SCTB15 Working Paper FTWG–5. ‘Efficiency of Tuna Purse-Seiners and Effective Effort’ « Efficacité des Senneurs Thoniers et Efforts Réels » (ESTHER): Selected Annotated Bibliography. Marie-Christine REALES, ESTHER Project Document Officer. To receive the complete ESTHER ANNEXE BIBLIOGRAPHIQUE, contact Daniel Gaertner, Institute of Research for Development (IRD - UR 109), CHMT BP 171, 34203 Sète Cedex, France. Research Programme No. 98/061, IRD (Institut de Recherches pour le Développement) / IEO (Instituto Español de Oceanografía).

Contents: Introduction. I. Methodology: I.1 Drawing up a list of keywords; I.2 Sources used; I.3 Searching and validating results (I.3.1 Querying the databases; I.3.2 Evolution of the keyword list; I.3.3 Validating the references; I.3.4 Disseminating the references); I.4 Transferring the references into the PROCITE bibliographic software. II. Some Internet site addresses. Conclusion. Thematic bibliography: Fishing Fleet; Purse Seiner Technology; Fisher's Behavior; Fish Catch Statistics; Fishing Operations; Tuna Behaviour; Models.

Thematic Bibliography. FISHING FLEET. Abrahams, M.V.; Healey, M.C., 1993. Some consequences of variation in vessel density: a manipulative field experiment. Fisheries Research, 15 (4): 315-322.

Éric FREYSSINET Lutte Contre Les Botnets

DOCTORAL THESIS OF THE UNIVERSITÉ PIERRE ET MARIE CURIE. Specialty: Computer Science. Doctoral school: Informatique, Télécommunications et Électronique (Paris). Presented by Éric FREYSSINET to obtain the degree of DOCTOR OF THE UNIVERSITÉ PIERRE ET MARIE CURIE. Thesis subject: Lutte contre les botnets : analyse et stratégie (Fighting botnets: analysis and strategy). Presented and publicly defended on 12 November 2015 before a jury composed of: Reviewers: Jean-Yves Marion, Professor, Université de Lorraine; Ludovic Mé, Lecturer-researcher, CentraleSupélec. Thesis supervisors: David Naccache, Professor, École normale supérieure; Matthieu Latapy, Research Director, UPMC, LIP6. Examiners: Clémence Magnien, Research Director, UPMC, LIP6; Solange Ghernaouti-Hélie, Professor, Université de Lausanne; Vincent Nicomette, Professor, INSA Toulouse.

This thesis is dedicated to M. "He who does not prevent a crime when he could becomes its accomplice." (Seneca)

Acknowledgements. I would like to thank my two thesis supervisors. David Naccache, a reserve officer of the gendarmerie, contributes to the development of research within our institution by encouraging staff, young and not quite so young, to pursue their passion in the appropriate academic setting. Matthieu Latapy, of LIP6, with whom we had been able to exchange views about a thesis he was supervising in the difficult field of offences against minors on the Internet, and who agreed to welcome me into his team. I would also like to thank the whole Réseaux Complexes (Complex Networks) team at LIP6 and its current team leader, Clémence Magnien, who welcomed me with open arms, accompanied me at every stage, and whose research themes and working methods I was able to discover over the course of meetings and discussions.

Harris Sierra II, Programmable Cryptographic

TYPE 1 PROGRAMMABLE ENCRYPTION. Harris Sierra™ II Programmable Cryptographic ASIC.

KEY BENEFITS: Legacy algorithm support; Low power consumption; JTRS compliant; Compliant with NSA’s Crypto Modernization Program; Compact form factor.

When embedded in radios and other voice and data communications equipment, the Harris Sierra II Programmable Cryptographic ASIC encrypts classified information prior to transmission and storage. NSA-certified, it is the foundation for the Harris Sierra II family of products, which includes two package options for the ASIC and supporting software. The Sierra II ASIC offers a broad range of functionality, with data rates greater than 300 Mbps, legacy algorithm support, advanced programmability and low power consumption. Its software programmability provides a low-cost migration path for future upgrades to embedded communications equipment, without the logistics and cost burden normally associated with upgrading hardware. Plus, it’s totally compliant with all Joint Tactical Radio System (JTRS) and Crypto Modernization Program requirements. The Sierra II ASIC’s small size, low power requirements, and high data rates make it an ideal choice for battery-powered applications, including military radios, wireless LANs, remote sensors, guided munitions, UAVs and any other devices that require a low-power, programmable solution for encryption.

Specifications for: Harris SIERRA II™ Programmable Cryptographic ASIC. GENERAL. Type 1 Cryptographic Algorithms*: BATON/MEDLEY, SAVILLE/PADSTONE, KEESEE/CRAYON/WALBURN, GOODSPEED, ACCORDION, FIREFLY/Enhanced FIREFLY, JOSEKI Decrypt, High Assurance AES. Type 3 Cryptographic Algorithms*: DES, Triple DES, AES, Digital Signature Standard (DSS), Secure Hash Algorithm (SHA). Type 4 Cryptographic Algorithms*: CITADEL®. Key Management: SARK/PARK (KY-57, KYV-5 and KG-84A/C OTAR), DS-101 and DS-102 Key Fill, SINCGARS Mode 2/3 Fill, Benign Key/Benign Fill. *Other algorithms can be added later.

Conclusions and Overall Assessment of the Bloody Sunday Inquiry Return to an Address of the Honourable the House of Commons Dated 15 June 2010 for The

Principal Conclusions and Overall Assessment of the Bloody Sunday Inquiry. Return to an Address of the Honourable the House of Commons dated 15 June 2010 for the Principal Conclusions and Overall Assessment of the Bloody Sunday Inquiry. The Rt Hon The Lord Saville of Newdigate (Chairman), The Hon William Hoyt OC, The Hon John Toohey AC.

The Principal Conclusions and Overall Assessment (Chapters 1–5 of the report) are reproduced in this volume. This volume is accompanied by a DVD containing the full text of the report.

Published by TSO (The Stationery Office) and available from: Online: www.tsoshop.co.uk. Mail, Telephone, Fax & E-mail: TSO, PO Box 29, Norwich NR3 1GN; Telephone orders/General enquiries: 0870 600 5522; Order through the Parliamentary Hotline Lo-Call: 0845 7 023474; Fax orders: 0870 600 5533; E-mail: [email protected]; Textphone: 0870 240 3701. The Parliamentary Bookshop: 12 Bridge Street, Parliament Square, London SW1A 2JX; Telephone orders/General enquiries: 020 7219 3890; Fax orders: 020 7219 3866; Email: [email protected]; Internet: www.bookshop.parliament.uk. TSO@Blackwell and other Accredited Agents. Customers can also order publications from TSO Ireland: 16 Arthur Street, Belfast BT1 4GD; Telephone: 028 9023 8451; Fax: 028 9023 5401. HC30. £19.50.

Volume I Return to an Address of the Honourable the House of Commons Dated 15 June 2010 for The

Report of the Bloody Sunday Inquiry, Volume I. Return to an Address of the Honourable the House of Commons dated 15 June 2010 for the Report of the Bloody Sunday Inquiry. The Rt Hon The Lord Saville of Newdigate (Chairman), The Hon William Hoyt OC, The Hon John Toohey AC.

Volume I: Outline Table of Contents; General Introduction; Glossary; Principal Conclusions and Overall Assessment; The Background to Bloody Sunday. This volume is accompanied by a DVD containing the full text of the report.

Published by TSO (The Stationery Office) and available from: Online: www.tsoshop.co.uk. Mail, Telephone, Fax & E-mail: TSO, PO Box 29, Norwich NR3 1GN; Telephone orders/General enquiries: 0870 600 5522; Order through the Parliamentary Hotline Lo-Call: 0845 7 023474; Fax orders: 0870 600 5533; E-mail: [email protected]; Textphone: 0870 240 3701. The Parliamentary Bookshop: 12 Bridge Street, Parliament Square, London SW1A 2JX; Telephone orders/General enquiries: 020 7219 3890; Fax orders: 020 7219 3866; Email: [email protected]; Internet: www.bookshop.parliament.uk. TSO@Blackwell and other Accredited Agents. Customers can also order publications from TSO Ireland: 16 Arthur Street, Belfast BT1 4GD; Telephone: 028 9023 8451; Fax: 028 9023 5401. HC29-I. £572.00, 10 volumes, not sold separately. Ordered by the House of Commons

Improved Detection for Advanced Polymorphic Malware James B

Nova Southeastern University, NSUWorks. CEC Theses and Dissertations, College of Engineering and Computing, 2017. Improved Detection for Advanced Polymorphic Malware. James B. Fraley, Nova Southeastern University, [email protected].

This document is a product of extensive research conducted at the Nova Southeastern University College of Engineering and Computing. Follow this and additional works at: https://nsuworks.nova.edu/gscis_etd. Part of the Computer Sciences Commons.

NSUWorks Citation: James B. Fraley. 2017. Improved Detection for Advanced Polymorphic Malware. Doctoral dissertation. Nova Southeastern University. Retrieved from NSUWorks, College of Engineering and Computing. (1008) https://nsuworks.nova.edu/gscis_etd/1008.

This Dissertation is brought to you by the College of Engineering and Computing at NSUWorks. It has been accepted for inclusion in CEC Theses and Dissertations by an authorized administrator of NSUWorks. For more information, please contact [email protected].

Improved Detection for Advanced Polymorphic Malware, by James B. Fraley. A Dissertation Proposal submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Information Assurance. College of Engineering and Computing, Nova Southeastern University, 2017.

An Abstract of a Dissertation Submitted to Nova Southeastern University in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy. Improved Detection for Advanced Polymorphic Malware, by James B. Fraley, May 2017. Malicious Software (malware) attacks across the internet are increasing at an alarming rate. Cyber-attacks have become increasingly more sophisticated and targeted. These targeted attacks are aimed at compromising networks, stealing personal financial information and removing sensitive data or disrupting operations.

Diário de Notícias, Rio de Janeiro, Saturday, 3 July 1948

Diário de Notícias. Founded in 1930, Year XIX, No. 7880. Property of S. A. Diário de Notícias: O. R. Dantas, president; M. Moreira, treasurer; Aurélio Silva, secretary. The morning paper with the largest circulation in the capital of the Republic. Rio de Janeiro, Saturday, 3 July 1948. Today's edition: 2 sections, Cr$ 0.50.

Strategy to confront the Russian blockade. Marshall declares that the United States, England and France are drawing up plans to counter the Soviet attitude in Berlin. He declined to reveal any detail about the joint action of the three powers.

Yugoslav communists appeal to Stalin. Fifteen thousand Marxists send him a telegram asking that he eliminate the "false accusations" made by the Cominform against Marshal Tito and his government. They professed "unlimited love" for the Russian premier.

Decided not to convene Congress. The president's decision has been officially announced.

Computer Viruses and Malware Advances in Information Security

Computer Viruses and Malware. Advances in Information Security. Sushil Jajodia, Consulting Editor, Center for Secure Information Systems, George Mason University, Fairfax, VA 22030-4444, email: [email protected].

The goals of the Springer International Series on ADVANCES IN INFORMATION SECURITY are, one, to establish the state of the art of, and set the course for future research in information security and, two, to serve as a central reference source for advanced and timely topics in information security research and development. The scope of this series includes all aspects of computer and network security and related areas such as fault tolerance and software assurance. ADVANCES IN INFORMATION SECURITY aims to publish thorough and cohesive overviews of specific topics in information security, as well as works that are larger in scope or that contain more detailed background information than can be accommodated in shorter survey articles. The series also serves as a forum for topics that may not have reached a level of maturity to warrant a comprehensive textbook treatment. Researchers, as well as developers, are encouraged to contact Professor Sushil Jajodia with ideas for books under this series.

Additional titles in the series: HOP INTEGRITY IN THE INTERNET by Chin-Tser Huang and Mohamed G. Gouda; ISBN-10: 0-387-22426-3. PRIVACY PRESERVING DATA MINING by Jaideep Vaidya, Chris Clifton and Michael Zhu; ISBN-10: 0-387-25886-8. BIOMETRIC USER AUTHENTICATION FOR IT SECURITY: From Fundamentals to Handwriting by Claus Vielhauer; ISBN-10: 0-387-26194-X. IMPACTS AND RISK ASSESSMENT OF TECHNOLOGY FOR INTERNET SECURITY: Enabled Information Small-Medium Enterprises (TEISMES) by Charles A.

A Comprehensive Survey: Ransomware Attacks Prevention, Monitoring and Damage Control

International Journal of Research and Scientific Innovation (IJRSI) | Volume IV, Issue VIS, June 2017 | ISSN 2321–2705. A Comprehensive Survey: Ransomware Attacks Prevention, Monitoring and Damage Control. Jinal P. Tailor and Ashish D. Patel, Department of Information Technology, Shri S’ad Vidya Mandal Institute of Technology, Bharuch, Gujarat, India.

Abstract – Ransomware is a type of malware that prevents or restricts users from accessing their system, either by locking the system's screen or by locking the users' files in the system, unless a ransom is paid. More modern ransomware families, individually categorized as crypto-ransomware, encrypt certain file types on infected systems and force users to pay the ransom through online payment methods to get a decryption key. The analysis shows that there has been a significant improvement in the encryption techniques used by ransomware. Careful analysis of ransomware behavior can produce an effective detection system that significantly reduces the amount of victim data loss.

Index Terms – Ransomware attack, Security, Detection, Prevention.

advertisements, blocking service, disabling the keyboard or spying on user activities. It locks the system or encrypts the data, leaving victims unable to do anything but make a payment, and sometimes it also threatens to expose the user's sensitive information to the public if payment is not made [1]. In the case of Windows, figure 1 shows that there are some main stages that every crypto family goes through. Each variant gets onto the victim’s machine via a malicious website, email attachment or malicious link and progresses from there.

TCP SYN-ACK) to Spoofed IP Addresses

Joint Japan-India Workshop on Cyber Security and Services/Applications for M2M and Fourteenth GISFI Standardization Series Meeting. How to secure the network: Darknet based cyber-security technologies for global monitoring and analysis. Koji NAKAO, Research Executive Director, Distinguished Researcher, NICT; Information Security Fellow, KDDI.

Outline of NICT. Mission: As the sole national research institute in the information and communications field, we as NICT will strive to advance national technologies and contribute to national policies in the field, by promoting our own research and development and by cooperating with and supporting outside parties. Collaboration between Industry, Academic Institutions and Government. R&D carried out by NICT’s researchers. Budget (FY 2012): approx. 31.45 Billion Yen (420 Million US$). Personnel: 849; Researchers: 517; PhDs: 410 (as of April 2012). R&D assistance to industry and academia. Japan Standard Time and Space Weather Forecast services. Promotion of ICT businesses. Interaction with National ICT Policy. Growth of the Japanese economy. Safety and security for a more convenient life. Contribution to solving major problems of the global community. Internet Security Days 2012.

Network Security Research Institute: Kazumasa Taira (Director General), Koji Nakao (Distinguished Researcher). Cybersecurity Laboratory: Daisuke Inoue. Security Architecture Laboratory: Shin’ichiro Matsuo. Security Fundamentals Laboratory: Shiho Moriai. Topics listed: cyber attack monitoring, tracking, analysis, response and prevention; prompt promotion of outcomes; dynamic and optimal deployment of security functions; secure new generation network design (New Generation Network Security); security evaluation of cryptography; practical security; post quantum cryptography; quantum security; collaboration with security organizations; Recommendations for Cryptographic Algorithms and Key Lengths to Japan e-Government and SDOs.

Content for Today • Current Security Threats (e.g.

Understanding Ransomware in the Enterprise

Understanding Ransomware in the Enterprise. By SentinelOne.

Contents: Introduction. Understanding the Ransomware Threat: Methods of Infection; Common, Prevalent and Historic Ransomware Examples; The Ransomware as a Service (RaaS) Model; The Ransomware “Kill Chain”. Planning for a Ransomware Incident: Incident Response Policy; Recruitment; Define Roles and Responsibilities; Create a Communication Plan; Test your Incident Response Plan; Review and Understand Policies. Responding to a Ransomware Incident

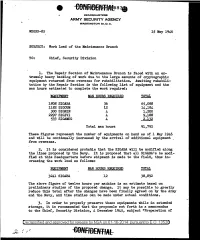

Work Load of the Maintenance Branch

HEADQUARTERS ARMY SECURITY AGENCY, WASHINGTON 25, D. C. WDGSS-85. 15 May 1946. SUBJECT: Work Load of the Maintenance Branch. TO: Chief, Security Division.

1. The Repair Section of Maintenance Branch is faced with an extremely heavy backlog of work due to the large amounts of cryptographic equipment returned from overseas for rehabilitation. Awaiting rehabilitation by the Repair Section is the following list of equipment and the man hours estimated to complete the work required:

| EQUIPMENT | MAN HOURS REQUIRED | TOTAL |
|---|---|---|
| 1808 SIGABA | 36 | 65,088 |
| 1182 SIGCUM | 12 | 14,184 |
| 300 SIGNIN | 4 | 1,200 |
| 2297 SIGIVI | 4 | 9,188 |
| 533 SIGAMUG | 4 | 2,132 |
| Total man hours | | 91,792 |

These figures represent the number of equipments on hand as of 1 May 1946 and will be continually increased by the arrival of additional equipment from overseas.

2. It is considered probable that the SIGABA will be modified along the lines proposed by the Navy. It is proposed that all SIGABA's be modified at this Headquarters before shipment is made to the field, thus increasing the work load as follows:

| EQUIPMENT | MAN HOURS REQUIRED |
|---|---|
| 3241 SIGABA | 12 |

The above figure of twelve hours per machine is an estimate based on preliminary studies of the proposed change. It may be possible to greatly reduce this total after the changes have been finally agreed on by the Army and the Navy, and time studies can be made under actual conditions.

3. In order to properly preserve these equipments while in extended storage, it is recommended that the proposals set forth in a memorandum to the Chief, Security Division, 4 December 1945, subject "Preparation of

Declassified and approved for release by NSA on 01-16-2014 pursuant to E.O.