AutoBench: Comparing the Time Performance of Haskell Programs


Recommended publications


Dynamic Extension of Typed Functional Languages

Don Stewart. PhD Dissertation, School of Computer Science and Engineering, University of New South Wales, 2010. Supervisor: Assoc. Prof. Manuel M. T. Chakravarty. Co-supervisor: Dr. Gabriele Keller.

Abstract: We present a solution to the problem of dynamic extension in statically typed functional languages with type erasure. The presented solution retains the benefits of static checking, including type safety, aggressive optimizations, and native code compilation of components, while allowing extensibility of programs at runtime. Our approach is based on a framework for dynamic extension in a statically typed setting, combining dynamic linking, runtime type checking, first class modules and code hot swapping. We show that this framework is sufficient to allow a broad class of dynamic extension capabilities in any statically typed functional language with type erasure semantics. Uniquely, we employ the full compile-time type system to perform runtime type checking of dynamic components, and emphasize the use of native code extension to ensure that the performance benefits of static typing are retained in a dynamic environment. We also develop the concept of fully dynamic software architectures, where the static core is minimal and all code is hot swappable. Benefits of the approach include hot swappable code and sophisticated application extension via embedded domain specific languages. We instantiate the concepts of the framework via a full implementation in the Haskell programming language: providing rich mechanisms for dynamic linking, loading, hot swapping, and runtime type checking in Haskell for the first time. We demonstrate the feasibility of this architecture through a number of novel applications: an extensible text editor; a plugin-based network chat bot; a simulator for polymer chemistry; and xmonad, an extensible window manager.

Haskell-Like S-Expression-Based Language Designed for an IDE

Department of Computing, Imperial College London. MEng Individual Project. Author: Michal Srb. Supervisor: Prof. Susan Eisenbach. June 2015.

Abstract: The state of the programmers’ toolbox is abysmal. Although substantial effort is put into the development of powerful integrated development environments (IDEs), their features often lack capabilities desired by programmers and target primarily classical object oriented languages. This report documents the results of designing a modern programming language with its IDE in mind. We introduce a new statically typed functional language with strong metaprogramming capabilities, targeting JavaScript, the most popular runtime of today; and its accompanying browser-based IDE. We demonstrate the advantages resulting from designing both the language and its IDE at the same time and evaluate the resulting environment by employing it to solve a variety of nontrivial programming tasks. Our results demonstrate that programmers can greatly benefit from the combined application of modern approaches to programming tools.

I would like to express my sincere gratitude to Susan, Sophia and Tristan for their invaluable feedback on this project, my family, my parents Michal and Jana and my grandmothers Hana and Jaroslava for supporting me throughout my studies, and to all my friends, especially to Harry whom I met at the interview day and seem to not be able to get rid of ever since.

Contents: Abstract; Contents; 1 Introduction; 1.1 Objectives; 1.2 Challenges; 1.3 Contributions; 2 State of the Art; 2.1 Languages; 2.1.1 Haskell

Universidad Complutense De Madrid Transformación Y

Universidad Complutense de Madrid, Facultad de Informática, Departamento de Sistemas Informáticos y Computación. Transformación y Análisis de Código de Bytes Orientado a Objetos (Transformation and Analysis of Object-Oriented Bytecode). Doctoral thesis in Computer Engineering presented by Miguel Gómez-Zamalloa Gil, supervised by Elvira Albert Albiol. Madrid, July 2009; published Madrid, 2010. ISBN: 978-84-693-4645-7. © Miguel Gómez-Zamalloa Gil, 2009.

Abstract (translated from the Spanish "Resumen"): Predicting the behaviour of programs before they are executed is increasingly important, especially given that programs are becoming ever more complex and are frequently used in critical situations such as medical operations, air traffic control, or banking operations. Static program analysis is the process by which the behaviour of programs is analysed without executing their code. Traditionally, most analyses have been formulated at the source-code level. However, it may be the case that the analysis must deal with compiled code, or bytecode.

Elaboration on Functional Dependencies: Functional Dependencies Are Dead, Long Live Functional Dependencies!

Georgios Karachalias and Tom Schrijvers, KU Leuven, Belgium.

Abstract: Functional dependencies are a popular extension to Haskell’s type-class system because they provide fine-grained control over type inference, resolve ambiguities and even enable type-level computations. Unfortunately, several aspects of Haskell’s functional dependencies are ill-understood. In particular, the GHC compiler does not properly enforce the functional dependency property, and rejects well-typed programs because it does not know how to elaborate them into its core language, System FC. This paper presents a novel formalization of functional dependencies that addresses these issues: We explicitly capture the functional dependency property in the type system, in the form of explicit ...

Introduction (excerpt): ... that inhabit a multi-parameter type class is intended. Hence, Jones proposed the functional dependency language extension [15], which specifies that one class parameter determines another. Functional dependencies became quite popular, not only to resolve ambiguity, but also as a device for type-level computation, which was used to good effect, e.g., for operations on heterogeneous collections [18]. They were supported by Hugs, Mercury, Habit [11] and also GHC. However, the implementation in GHC has turned out to be problematic: As far as we know, it is not possible to elaborate all well-typed programs with functional dependencies into GHC’s original typed intermediate language based on System F [7]. As a consequence, GHC rejects programs that are perfectly valid according to the theory of Sulzmann et al. [32].
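To make the phrase "one class parameter determines another" concrete, here is a minimal Haskell sketch. The class `Container`, its methods `empty`, `insert` and `toL`, and the list instance are hypothetical names chosen for this illustration; they are not taken from the paper. The sketch only assumes GHC's `FunctionalDependencies` and `FlexibleInstances` extensions.

```haskell
{-# LANGUAGE FunctionalDependencies #-}
{-# LANGUAGE FlexibleInstances #-}

module Main where

-- Hypothetical class for illustration: the dependency "c -> e" states that
-- the container type c determines the element type e.
class Container c e | c -> e where
  empty  :: c
  insert :: e -> c -> c
  toL    :: c -> [e]

-- For lists, the element type is fixed by the list type itself.
instance Container [a] a where
  empty  = []
  insert = (:)
  toL    = id

main :: IO ()
main =
  -- Only the container type is annotated. The dependency lets the type
  -- checker conclude that the literals 1 and 2 must be Ints.
  print (toL (insert 1 (insert 2 (empty :: [Int]))))
```

Without the `| c -> e` annotation the method `empty :: c` would mention only one of the two class parameters, so the element type could not be recovered from it; this is the kind of ambiguity the excerpt refers to.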

Syntactic Analysis and Type Evaluation in Haskell

Masaryk University, Faculty of Informatics. Bachelor’s thesis. Pavel Dvořák. Brno, Spring 2009.

Declaration: I hereby declare that this thesis is my original work and that, to the best of my knowledge and belief, it contains no material previously published or written by another author except where the citation has been made in the text. Adviser: RNDr. Václav Brožek.

Acknowledgements: I am grateful to my adviser Vašek Brožek for his time, great patience, and excellent advice, to Libor Škarvada for his worthwhile lectures, to Marek Kružliak for the beautiful Hasky logo, to Miran Lipovača for his cool Haskell tutorial (available online at http://learnyouahaskell.com/), to Honza Bartoš, Jirka Hýsek, Tomáš Janoušek, Martin Milata, Peter Molnár, Štěpán Němec, Tomáš Staněk, and Matúš Tejiščák for their important remarks, to the rest of the Haskell community and to everybody that I forgot to mention. Thank you all.

Abstract: The main goal of this thesis has been to provide a description of Haskell and basic information about source code analysis of this programming language. That includes Haskell standards, implementations, syntactic and lexical analysis, and its type system. Another objective was to write a library for syntactic and type analysis that can be used in further development (source code available online at http://hasky.haskell.cz/).

Keywords: Haskell, functional programming, code analysis, syntactic analysis, parsing, type evaluation, type-checking, type system.

Contents: 1 Introduction; 1.1 Why functional programming?; 1.2 Structure of the thesis; 2 The Haskell language; 2.1 Main Haskell features

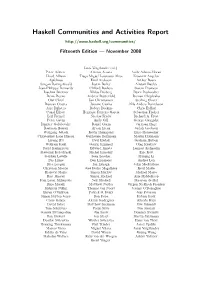

Haskell Communities and Activities Report

http://www.haskell.org/communities/ Fifteenth Edition, November 2008. Janis Voigtländer (ed.)

Contributors: Peter Achten, Alfonso Acosta, Andy Adams-Moran, Lloyd Allison, Tiago Miguel Laureano Alves, Krasimir Angelov, Apfelmus, Emil Axelsson, Arthur Baars, Sengan Baring-Gould, Justin Bailey, Alistair Bayley, Jean-Philippe Bernardy, Clifford Beshers, Gwern Branwen, Joachim Breitner, Niklas Broberg, Bjorn Buckwalter, Denis Bueno, Andrew Butterfield, Roman Cheplyaka, Olaf Chitil, Jan Christiansen, Sterling Clover, Duncan Coutts, Jácome Cunha, Nils Anders Danielsson, Atze Dijkstra, Robert Dockins, Chris Eidhof, Conal Elliott, Henrique Ferreiro García, Sebastian Fischer, Leif Frenzel, Nicolas Frisby, Richard A. Frost, Peter Gavin, Andy Gill, George Giorgidze, Dimitry Golubovsky, Daniel Gorin, Jurriaan Hage, Bastiaan Heeren, Aycan Irican, Judah Jacobson, Wolfgang Jeltsch, Kevin Hammond, Enzo Haussecker, Christopher Lane Hinson, Guillaume Hoffmann, Martin Hofmann, Liyang HU, Paul Hudak, Graham Hutton, Wolfram Kahl, Garrin Kimmell, Oleg Kiselyov, Farid Karimipour, Edward Kmett, Lennart Kolmodin, Slawomir Kolodynski, Michal Konečný, Eric Kow, Stephen Lavelle, Sean Leather, Huiqing Li, Bas Lijnse, Ben Lippmeier, Andres Löh, Rita Loogen, Ian Lynagh, John MacFarlane, Christian Maeder, José Pedro Magalhães, Ketil Malde, Blažević Mario, Simon Marlow, Michael Marte, Bart Massey, Simon Michael, Arie Middelkoop, Ivan Lazar Miljenovic, Neil Mitchell, Maarten de Mol, Dino Morelli, Matthew Naylor, Jürgen Nicklisch-Franken, Rishiyur Nikhil, Thomas van Noort, Jeremy O’Donoghue, Bryan O’Sullivan, Patrick O. Perry, Jens Petersen

Haskell (Programming Language)

Paradigm(s): functional, lazy/non-strict, modular. Designed by: Simon Peyton Jones, Lennart Augustsson, Dave Barton, Brian Boutel, Warren Burton, Joseph Fasel, Kevin Hammond, Ralf Hinze, Paul Hudak, John Hughes, Thomas Johnsson, Mark Jones, John Launchbury, Erik Meijer, John Peterson, Alastair Reid, Colin Runciman, Philip Wadler. Appeared in: 1990. Stable release: Haskell 2010 / July 2010. Preview release: announced as Haskell 2014. Typing discipline: static, strong, inferred. Major implementations: GHC, Hugs, NHC, JHC, Yhc, UHC. Dialects: Helium, Gofer. Influenced by: Clean, FP, Gofer, Hope and Hope+, Id, ISWIM, KRC, Lisp, Miranda, ML and Standard ML, Orwell, SASL, SISAL, Scheme. Influenced: Agda, Bluespec, C++11/Concepts, C#/LINQ, CAL, Cayenne, Clean, Clojure, CoffeeScript, Curry, Elm, Epigram, Escher, F#, Frege, Isabelle, Java/Generics, Kaya, LiveScript, Mercury, Omega, Perl 6, Python, Qi, Scala, Swift, Timber, Visual Basic 9.0. OS: cross-platform. Filename extension(s): .hs, .lhs. Website: haskell.org.

Haskell /ˈhæskəl/ is a standardized, general-purpose purely functional programming language, with non-strict semantics and strong static typing.[4] It is named after logician Haskell Curry.[5]

History: Following the release of Miranda by Research Software Ltd in 1985, interest in lazy functional languages grew: by 1987, more than a dozen non-strict, purely functional programming languages existed. Of these, Miranda was the most widely used, but was proprietary software.

At the conference on Functional Programming Languages and Computer Architecture (FPCA '87) in Portland, Oregon, a meeting was held during which participants formed a strong consensus that a committee should be formed to define an open standard for such languages.

Yhc.Core – from Haskell to Core

By Dimitry Golubovsky, Neil Mitchell and Matthew Naylor.

The Yhc compiler is a hot-bed of new and interesting ideas. We present Yhc.Core – one of the most popular libraries from Yhc. We describe what we think makes Yhc.Core special, and how people have used it in various projects including an evaluator, and a Javascript code generator.

What is Yhc Core? The York Haskell Compiler (Yhc) [1] is a fork of the nhc98 compiler [2], started by Tom Shackell. The initial goals included increased portability, a platform independent bytecode, integrated Hat [3] support and generally being a cleaner code base to work with. Yhc has been going for a number of years, and now compiles and runs almost all Haskell 98 programs and has basic FFI support – the main thing missing is the Haskell base library. Yhc.Core is one of our most successful libraries to date. The original nhc compiler used an intermediate core language called PosLambda – a basic lambda calculus extended with positional information. The language was neither a subset nor a superset of Haskell. In particular there were unusual constructs and all names were stored in a symbol table. There was also no defined external representation. When one of the authors required a core Haskell language, after evaluating GHC Core [4], it was decided that PosLambda was closest to what was desired but required substantial clean up. Rather than attempt to change the PosLambda language, a task that would have been decidedly painful, we chose instead to write a Core language from scratch.

A History of Haskell: Being Lazy with Class April 16, 2007

Paul Hudak (Yale University), John Hughes (Chalmers University), Simon Peyton Jones (Microsoft Research), Philip Wadler (University of Edinburgh).

Abstract: This paper describes the history of Haskell, including its genesis and principles, technical contributions, implementations and tools, and applications and impact.

1. Introduction: In September of 1987 a meeting was held at the conference on Functional Programming Languages and Computer Architecture in Portland, Oregon, to discuss an unfortunate situation in the functional programming community: there had come into being more than a dozen non-strict, purely functional programming languages, all similar in expressive power and semantic underpinnings. There was a strong consensus at this meeting that more widespread use of this class of functional languages was being hampered by the lack of ...

(Excerpt from the paper's outline:) ...lution that are distinctive in themselves, or that developed in unexpected or surprising ways. We reflect on five areas: syntax (Section 4); algebraic data types (Section 5); the type system, and type classes in particular (Section 6); monads and input/output (Section 7); and support for programming in the large, such as modules and packages, and the foreign-function interface (Section 8). Part III describes implementations and tools: what has been built for the users of Haskell. We describe the various implementations of Haskell, including GHC, hbc, hugs, nhc, and Yale Haskell (Section 9), and tools for profiling and debugging (Section 10). Part IV describes applications and impact: what has been built by the users of Haskell. The language has been used for a bewildering variety of applications, and in Section 11 we reflect on the distinctive aspects of some of these applications, so far as we can discern them.

The Reduceron: Widening the Von Neumann Bottleneck for Graph Reduction Using an FPGA

Matthew Naylor and Colin Runciman, University of York, UK. {mfn,colin}@cs.york.ac.uk

Abstract. For the memory intensive task of graph reduction, modern PCs are limited not by processor speed, but by the rate that data can travel between processor and memory. This limitation is known as the von Neumann bottleneck. We explore the effect of widening this bottleneck using a special-purpose graph reduction machine with wide, parallel memories. Our prototype machine – the Reduceron – is implemented using an FPGA, and is based on a simple template-instantiation evaluator. Running at only 91.5MHz on an FPGA, the Reduceron is faster than mature bytecode implementations of Haskell running on a 2.8GHz PC.

1 Introduction. The processing power of PCs has risen astonishingly over the past few decades, and this trend looks set to continue with the introduction of multi-core CPUs. However, increased processing power does not necessarily mean faster programs! Many programs, particularly memory intensive ones, are limited by the rate that data can travel between the CPU and the memory, not by the rate that the CPU can process data. A prime example of a memory intensive application is graph reduction [14], the operational basis of standard lazy functional language implementations. The core operation of graph reduction is function unfolding, whereby a function application f a1 ... an is reduced to a fresh copy of f’s body with its free variables replaced by the arguments a1 ... an. On a PC, unfolding a single function in this way requires the sequential execution of many machine instructions.
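The function unfolding step described in this excerpt can be sketched in a few lines of Haskell. This is only an illustration under stated assumptions: the types `Expr` and `Def` and the functions `instantiate` and `unfold` are hypothetical names invented here, the sketch substitutes into a plain expression tree, and it does not model the sharing of a real graph reducer or the Reduceron's actual evaluator.

```haskell
module Main where

-- Hypothetical expression type, chosen for this sketch only.
data Expr
  = Var String      -- a variable, e.g. a formal parameter
  | Num Int         -- an integer literal
  | Fun String      -- a reference to a named function
  | App Expr Expr   -- application of one expression to another
  deriving (Eq, Show)

-- A function definition: its formal parameters and its body.
data Def = Def [String] Expr

-- Build a copy of an expression in which every variable bound in the
-- environment is replaced by the corresponding argument.
instantiate :: [(String, Expr)] -> Expr -> Expr
instantiate env (Var v)   = maybe (Var v) id (lookup v env)
instantiate env (App f x) = App (instantiate env f) (instantiate env x)
instantiate _   e         = e

-- Unfold a saturated application f a1 ... an: pair the parameters with
-- the arguments and instantiate a fresh copy of the body.
unfold :: Def -> [Expr] -> Maybe Expr
unfold (Def params body) args
  | length params == length args = Just (instantiate (zip params args) body)
  | otherwise                    = Nothing

main :: IO ()
main = do
  -- Hypothetical definition: compose f g x = f (g x)
  let compose = Def ["f", "g", "x"] (App (Var "f") (App (Var "g") (Var "x")))
  print (unfold compose [Fun "inc", Fun "double", Num 3])
```

Even in this small form, one unfolding walks the whole body and allocates a new node for every application in it, which hints at why the step is dominated by memory traffic.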