Segmentation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


University of California at Berkeley College of Engineering Department of Electrical Engineering and Computer Science

University of California at Berkeley College of Engineering Department of Electrical Engineering and Computer Science EECS 61C, Fall 2003 Lab 2: Strings and pointers; the GDB debugger PRELIMINARY VERSION Goals To learn to use the gdb debugger to debug string and pointer programs in C. Reading Sections 5.1-5.5 in K&R; GDB Reference Card (linked to class page under “resources”). Optional: Complete GDB documentation (http://www.gnu.org/manual/gdb-5.1.1/gdb.html) Note: GDB currently only works on the following machines: • torus.cs.berkeley.edu • rhombus.cs.berkeley.edu • pentagon.cs.berkeley.edu Please ssh into one of these machines before starting the lab. Basic tasks in GDB There are two ways to start the debugger: 1. In EMACS, type M-x gdb, then type gdb <filename> 2. Run gdb <filename> from the command line The following are fundamental operations in gdb. Please make sure you know the gdb commands for the following operations before you proceed. 1. How do you run a program in gdb? 2. How do you pass arguments to a program when using gdb? 3. How do you set a breakpoint in a program? 4. How do you set a breakpoint that triggers only when a set of conditions is true (e.g., when certain variables have a certain value)? 5. How do you execute the next line of C code in the program after a break? 6. If the next line is a function call, you'll execute the call in one step. How do you execute the C code, line by line, inside the function call? 7. -

The Endokernel: Fast, Secure

The Endokernel: Fast, Secure, and Programmable Subprocess Virtualization Bumjin Im (Rice University), Fangfei Yang (Rice University), Chia-Che Tsai (Texas A&M University), Michael LeMay (Intel Labs), Anjo Vahldiek-Oberwagner (Intel Labs), Nathan Dautenhahn (Rice University) Abstract Commodity applications contain more and more combinations of interacting components (user, application, library, and system) and exhibit increasingly diverse tradeoffs between isolation, performance, and programmability. We argue that the challenge of future runtime isolation is best met by embracing the multi-principle nature of applications, rethinking process architecture for fast and extensible intra-process isolation. We present the Endokernel, a new process model and security architecture that nests an extensible monitor into the standard process for building efficient least-authority abstractions. The Endokernel introduces a new virtual machine abstraction for representing subprocess authority, which is enforced by an efficient self-isolating monitor that maps the abstraction to system level objects (processes, threads, files, and signals). We show how the Endokernel Architecture can be used to develop specialized separation abstractions using an exokernel-like organization to provide virtual privilege rings, which we use to reorganize and secure NGINX. [Figure 1 (panels: Intra-Process, Sandbox, Multi-Process): Problem: intra-process is bypassable because domain is opaque to OS, sandbox limits functionality, and inter-process is slow and costly to apply. Red indicates limitations.] enforces subprocess access control to memory and CPU state [10, 17, 24, 25, 75]. Unfortunately, these approaches only virtualize minimal parts of the CPU and neglect tying their -

Virtual Memory

Chapter 4 Virtual Memory Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory. 1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory). 2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password. 3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other’s memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down. -
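
The maximum addresses quoted in this excerpt follow directly from the address width. As a minimal sketch (the function name `max_virtual_address` is made up for illustration and does not come from the excerpt), the arithmetic can be checked like this:

```python
def max_virtual_address(address_bits: int) -> int:
    """Highest address in a flat address space that is address_bits wide.

    Hypothetical helper for illustration: addresses run from 0 to 2**bits - 1.
    """
    return (1 << address_bits) - 1


# 32-bit platform (e.g. IA-32) and 64-bit platform (e.g. IA-64)
print(hex(max_virtual_address(32)))
print(hex(max_virtual_address(64)))
```

This only illustrates the size of the virtual address range; how much of that range a given kernel actually exposes to a process is a separate question the excerpt does not cover.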

Memory Layout and Access Chapter Four

Memory Layout and Access Chapter Four Chapter One discussed the basic format for data in memory. Chapter Three covered how a computer system physically organizes that data. This chapter discusses how the 80x86 CPUs access data in memory. 4.0 Chapter Overview This chapter forms an important bridge between sections one and two (Machine Organization and Basic Assembly Language, respectively). From the point of view of machine organization, this chapter discusses memory addressing, memory organization, CPU addressing modes, and data representation in memory. From the assembly language programming point of view, this chapter discusses the 80x86 register sets, the 80x86 memory addressing modes, and composite data types. This is a pivotal chapter. If you do not understand the material in this chapter, you will have difficulty understanding the chapters that follow. Therefore, you should study this chapter carefully before proceeding. This chapter begins by discussing the registers on the 80x86 processors. These processors provide a set of general purpose registers, segment registers, and some special purpose registers. Certain members of the family provide additional registers, although typical applications do not use them. After presenting the registers, this chapter describes memory organization and segmentation on the 80x86. Segmentation is a difficult concept for many beginning 80x86 assembly language programmers. Indeed, this text tends to avoid using segmented addressing throughout the introductory chapters. Nevertheless, segmentation is a powerful concept that you must become comfortable with if you intend to write non-trivial 80x86 programs. 80x86 memory addressing modes are, perhaps, the most important topic in this chapter. -

Security and Performance Analysis of Custom Memory Allocators Tiffany

Security and Performance Analysis of Custom Memory Allocators by Tiffany Tang Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY September 2019 © Massachusetts Institute of Technology 2019. All rights reserved. Author: Department of Electrical Engineering and Computer Science, June 10, 2019 Certified by: Howard E. Shrobe, Principal Research Scientist and Associate Director of Security, CSAIL, Thesis Supervisor Certified by: Hamed Okhravi, Senior Technical Staff, MIT Lincoln Laboratory, Thesis Supervisor Accepted by: Katrina LaCurts, Chair, Masters of Engineering Thesis Committee DISTRIBUTION STATEMENT A. Approved for public release. Distribution is unlimited. This material is based upon work supported by the Assistant Secretary of Defense for Research and Engineering under Air Force Contract No. FA8702-15-D-0001. Any opinions, findings, conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Assistant Secretary of Defense for Research and Engineering. Security and Performance Analysis of Custom Memory Allocators by Tiffany Tang Submitted to the Department of Electrical Engineering and Computer Science on June 10, 2019, in partial fulfillment of the requirements for the degree of Master of Engineering in Electrical Engineering and Computer Science Abstract Computer programmers use custom memory allocators as an alternative to built-in or general-purpose memory allocators with the intent to improve performance and minimize human error. However, it is difficult to achieve both memory safety and performance gains on custom memory allocators. -

The Case for Compressed Caching in Virtual Memory Systems

THE ADVANCED COMPUTING SYSTEMS ASSOCIATION The following paper was originally published in the Proceedings of the USENIX Annual Technical Conference Monterey, California, USA, June 6-11, 1999 The Case for Compressed Caching in Virtual Memory Systems Paul R. Wilson, Scott F. Kaplan, and Yannis Smaragdakis University of Texas at Austin © 1999 by The USENIX Association All Rights Reserved Rights to individual papers remain with the author or the author's employer. Permission is granted for noncommercial reproduction of the work for educational or research purposes. This copyright notice must be included in the reproduced paper. USENIX acknowledges all trademarks herein. For more information about the USENIX Association: Phone: 1 510 528 8649 FAX: 1 510 548 5738 Email: [email protected] WWW: http://www.usenix.org The Case for Compressed Caching in Virtual Memory Systems Paul R. Wilson, Scott F. Kaplan, and Yannis Smaragdakis Dept. of Computer Sciences University of Texas at Austin Austin, Texas 78751-1182 {wilson|sfkaplan|smaragd}@cs.utexas.edu http://www.cs.utexas.edu/users/oops/ Abstract Compressed caching uses part of the available RAM to hold pages in compressed form, effectively adding a new level to the virtual memory hierarchy. This level attempts to bridge the huge performance gap between normal (uncompressed) RAM and disk. In [Wil90, Wil91b] we proposed compressed caching for virtual memory: storing pages in compressed form in a main memory compression cache to reduce disk paging. Appel also promoted this idea [AL91], and it was evaluated empirically by Douglis [Dou93] and by Russinovich and Cogswell [RC96]. Unfortunately Douglis’s -

Memory Protection at Option

Memory Protection at Option: Application-Tailored Memory Safety in Safety-Critical Embedded Systems (German title: Speicherschutz nach Wahl: Auf die Anwendung zugeschnittene Speichersicherheit in sicherheitskritischen eingebetteten Systemen) Submitted to the Technische Fakultät (Faculty of Engineering) of the Universität Erlangen-Nürnberg for the degree of Doktor-Ingenieur by Michael Stilkerich, Erlangen, 2012 Approved as a dissertation by the Technische Fakultät, Universität Erlangen-Nürnberg Date of submission: 09.07.2012 Date of doctoral examination: 30.11.2012 Dean: Prof. Dr.-Ing. Marion Merklein Reviewers: Prof. Dr.-Ing. Wolfgang Schröder-Preikschat, Prof. Dr. Michael Philippsen Abstract With the increasing capabilities and resources available on microcontrollers, there is a trend in the embedded industry to integrate multiple software functions on a single system to save cost, size, weight, and power. The integration raises new requirements, among them the need for spatial isolation, which is commonly established by using a memory protection unit (MPU) that can constrain access to the physical address space to a fixed set of address regions. MPU-based protection is limited in terms of available hardware, flexibility, granularity and ease of use. Software-based memory protection can provide an alternative or complement MPU-based protection, but has received little attention in the embedded domain. In this thesis, I evaluate qualitative and quantitative advantages and limitations of MPU-based memory protection and software-based protection based on a multi-JVM. I developed a framework composed of the AUTOSAR OS-like operating system CiAO and KESO, a Java implementation for deeply embedded systems. The framework allows choosing from no memory protection, MPU-based protection, software-based protection, and a combination of the two. -

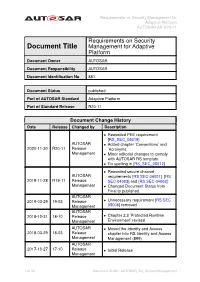

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Document Title: Requirements on Security Management for Adaptive Platform
Document Owner: AUTOSAR
Document Responsibility: AUTOSAR
Document Identification No: 881
Document Status: published
Part of AUTOSAR Standard: Adaptive Platform
Part of Standard Release: R20-11

Document Change History (Date, Release, Changed by, Description)
2020-11-30, R20-11, AUTOSAR Release Management: • Reworded PEE requirement [RS_SEC_05019] • Added chapter ’Conventions’ and ’Acronyms’ • Minor editorial changes to comply with AUTOSAR RS template
2019-11-28, R19-11, AUTOSAR Release Management: • Fix spelling in [RS_SEC_05012] • Reworded secure channel requirements [RS_SEC_04001], [RS_SEC_04003] and [RS_SEC_04003] • Changed Document Status from Final to published
2019-03-29, 19-03, AUTOSAR Release Management: • Unnecessary requirement [RS_SEC_05006] removed
2018-10-31, 18-10, AUTOSAR Release Management: • Chapter 2.3 ’Protected Runtime Environment’ revised
2018-03-29, 18-03, AUTOSAR Release Management: • Moved the Identity and Access chapter into RS Identity and Access Management (899)
2017-10-27, 17-10, AUTOSAR Release Management: • Initial Release

Document ID 881: AUTOSAR_RS_SecurityManagement

Disclaimer This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights. -

CSE421 Midterm Solutions —SOLUTION SET— 09 Mar 2012

CSE421 Midterm Solutions —SOLUTION SET— 09 Mar 2012 This midterm exam consists of three types of questions: 1. 10 multiple choice questions worth 1 point each. These are drawn directly from lecture slides and intended to be easy. 2. 6 short answer questions worth 5 points each. You can answer as many as you want, but we will give you credit for your best four answers for a total of up to 20 points. You should be able to answer the short answer questions in four or five sentences. 3. 2 long answer questions worth 20 points each. Please answer only one long answer question. If you answer both, we will only grade one. Your answer to the long answer should span a page or two. Please answer each question as clearly and succinctly as possible. Feel free to draw pictures or diagrams if they help you to do so. No aids of any kind are permitted. The point value assigned to each question is intended to suggest how to allocate your time. So you should work on a 5 point question for roughly 5 minutes. Multiple Choice 1. (10 points) Answer all ten of the following questions. Each is worth one point. (a) In the story that GWA (Geoff) began class with on Monday, March 4th, why was the Harvard student concerned about his grade? √ He never attended class. He never arrived at class on time. He usually fell asleep in class. He was using drugs. (b) All of the following are inter-process communication (IPC) mechanisms except √ shared files. -

Virtual Memory

Virtual Memory
Copyright ©: University of Illinois CS 241 Staff

Address Space Abstraction
• Program address space
  - All memory data
  - i.e., program code, stack, data segment
• Hardware interface (physical reality)
  - Computer has one small, shared memory
• Application interface (illusion)
  - Each process wants private, large memory
How can we close this gap?

Address Space Illusions
• Address independence
  - Same address can be used in different address spaces yet remain logically distinct
• Protection
  - One address space cannot access data in another address space
• Virtual memory
  - Address space can be larger than the amount of physical memory on the machine

Address Space Illusions: Virtual Memory
Illusion: Giant (virtual) address space; Protected from others (Unless you want to share it); More whenever you want it
Reality: Many processes sharing one (physical) address space; Limited memory
Today: An introduction to Virtual Memory: The memory space seen by your programs!

Multi-Programming
• Multiple processes in memory at the same time
• Goals
  1. Lay out processes in memory as needed
  2. Protect each process’s memory from accesses by other processes
  3. Minimize performance overheads
  4. Maximize memory utilization

OS & memory management
• Main memory is normally divided into two parts: kernel memory and user memory
• In a multiprogramming system, the “user” part of main memory needs to be divided to accommodate multiple processes.
• The user memory is dynamically managed by the OS (known as memory management) to satisfy the following requirements:
  - Protection
  - Relocation
  - Sharing
  - Memory organization (main mem. -

Paging: Smaller Tables

20 Paging: Smaller Tables We now tackle the second problem that paging introduces: page tables are too big and thus consume too much memory. Let’s start out with a linear page table. As you might recall, linear page tables get pretty big. Assume again a 32-bit address space (2^32 bytes), with 4KB (2^12 byte) pages and a 4-byte page-table entry. An address space thus has roughly one million virtual pages in it (2^32 / 2^12); multiply by the page-table entry size and you see that our page table is 4MB in size. Recall also: we usually have one page table for every process in the system! With a hundred active processes (not uncommon on a modern system), we will be allocating hundreds of megabytes of memory just for page tables! As a result, we are in search of some techniques to reduce this heavy burden. There are a lot of them, so let’s get going. But not before our crux: CRUX: HOW TO MAKE PAGE TABLES SMALLER? Simple array-based page tables (usually called linear page tables) are too big, taking up far too much memory on typical systems. How can we make page tables smaller? What are the key ideas? What inefficiencies arise as a result of these new data structures? 20.1 Simple Solution: Bigger Pages We could reduce the size of the page table in one simple way: use bigger pages. Take our 32-bit address space again, but this time assume 16KB pages. We would thus have an 18-bit VPN plus a 14-bit offset. -
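
The page-table arithmetic in this excerpt can be reproduced with a few lines of Python. This is only a sketch of the calculation, assuming a power-of-two page size and a flat linear table with one entry per virtual page. The helper name `linear_page_table` is illustrative and not taken from the text.

```python
def linear_page_table(address_bits: int, page_size: int, pte_size: int):
    """Return (vpn_bits, offset_bits, table_bytes) for a linear page table.

    Assumes page_size is a power of two and one PTE per virtual page.
    """
    offset_bits = page_size.bit_length() - 1   # log2(page_size)
    vpn_bits = address_bits - offset_bits      # remaining bits select the page
    num_pages = 1 << vpn_bits                  # one entry per virtual page
    return vpn_bits, offset_bits, num_pages * pte_size


KB, MB = 1024, 1024 * 1024

# 32-bit address space, 4KB pages, 4-byte PTEs: 20-bit VPN, 4MB table
vpn, off, size = linear_page_table(32, 4 * KB, 4)
print(vpn, off, size // MB, "MB per process;", 100 * size // MB, "MB for 100 processes")

# Same address space with 16KB pages: 18-bit VPN plus 14-bit offset
vpn, off, size = linear_page_table(32, 16 * KB, 4)
print(vpn, off, size // MB, "MB per process")
```

With 16KB pages the table shrinks by a factor of four, because two address bits move from the VPN to the offset.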

Virtual Memory Basics

Operating Systems: Virtual Memory Basics. Peter Lipp, Daniel Gruss. 2021-03-04. Table of contents: 1. Address Translation (First Idea: Base and Bound; Segmentation; Simple Paging; Multi-level Paging) 2. Address Translation on x86 processors. Address Translation: • OS in control of address translation • enables a number of advanced features • programmer's perspective: pointers point to objects etc. • transparent: it is not necessary to know how a memory reference is converted to data. Address Translation - Idea / Overview: • Shared libraries, interprocess communication • Multiple regions for dynamic allocation