Evaluation of High Dynamic Range Photography As a Luminance Data Acquisition System

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Luminance Requirements for Lighted Signage

Luminance Requirements for Lighted Signage. Jean Paul Freyssinier, Nadarajah Narendran, John D. Bullough. Lighting Research Center, Rensselaer Polytechnic Institute, 21 Union Street, Troy, NY 12180 USA. www.lrc.rpi.edu. Freyssinier, J.P., N. Narendran, and J.D. Bullough. 2006. Luminance requirements for lighted signage. Sixth International Conference on Solid State Lighting, Proceedings of SPIE 6337, 63371M. Copyright 2006 Society of Photo-Optical Instrumentation Engineers. This paper was published in the Sixth International Conference on Solid State Lighting, Proceedings of SPIE and is made available as an electronic preprint with permission of SPIE. One print or electronic copy may be made for personal use only. Systematic or multiple reproduction, distribution to multiple locations via electronic or other means, duplication of any material in this paper for a fee or for commercial purposes, or modification of the content of the paper are prohibited. ABSTRACT: Light-emitting diode (LED) technology is presently targeted to displace traditional light sources in backlighted signage. The literature shows that brightness and contrast are perhaps the two most important elements of a sign that determine its attention-getting capabilities and its legibility. Presently, there are no luminance standards for signage, and the practice of developing brighter signs to compete with signs in adjacent businesses is becoming more commonplace. Sign luminances in such cases may far exceed what people usually need for identifying and reading a sign. Furthermore, the practice of higher sign luminance than needed has many negative consequences, including higher energy use and light pollution.

Report on Digital Sign Brightness

REPORT ON DIGITAL SIGN BRIGHTNESS. Prepared for the Nevada State Department of Transportation, Washoe County, City of Reno and City of Sparks. By Jerry Wachtel, President, The Veridian Group, Inc., Berkeley, CA. November 2014. Table of Contents. PART 1: Introduction; Background; Key Terms and Definitions (Luminance; Illuminance; Reflected Light vs. Emitted Light (Traditional Signs vs. Electronic Signs)); Measuring Luminance and Illuminance (Measuring Luminance; Measuring Illuminance).

Lecture 3: the Sensor

4.430 Daylighting. Human Eye: ‘HDR the old fashioned way’ (Niemasz). Massachusetts Institute of Technology, Department of Architecture, Building Technology Program. Christoph Reinhart. 4.430 The Sensor. Happy Valentine’s Day: Sun Shining on a Praline Box on February 14th at 9.30 AM in Boston. Happy Valentine’s Day: Falsecolor luminance map. Light and Human Vision. Human Eye: Outside view of a human eye; Ophthalmogram of a human retina. The retina has three types of photoreceptors: Cones, Rods and Ganglion Cells. Day and Night Vision. Photopic (Daytime Vision): The cones of the eye are of three different types representing the three primary colors, red, green and blue (> 3 cd/m²). Scotopic (Night Vision): The rods are responsible for night and peripheral vision (< 0.001 cd/m²). Mesopic (Dim Light Vision): occurs when the light levels are low but one can still see color (between 0.001 and 3 cd/m²). Visible Range (Daylighting Handbook, Reinhart): The human eye can see across twelve orders of magnitude. We can adapt to about 10 orders of magnitude at a time via the iris. Larger ranges take time and require ‘neural adaptation’. Transition Spaces: Outside, Atrium, Circulation Area, Final destination. Luminous Response Curve of the Human Eye. What is daylight? Daylight is the visible part of the electromagnetic spectrum that lies between 380 and 780 nm. [Figure: spectrum bands UV, blue, green, yellow, orange, red, IR; axis: wavelength (nm), ticks from 380 to 750.] Photometric Quantities: Characterize how a space is perceived. Illuminance, Luminous Flux, Luminance, Luminous Intensity. Luminous Intensity [Candela]: ~ 1 candela. Courtesy of Matthew Bowden at www.digitallyrefreshing.com.
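The three vision regimes quoted in the lecture excerpt can be written as a small lookup. This is only an illustrative sketch: the function name `vision_regime` is hypothetical, and assigning the exact boundary values (0.001 and 3 cd/m²) to the mesopic range is an assumption, since the excerpt does not say which side they belong to.

```python
def vision_regime(luminance_cd_m2):
    """Classify an adaptation luminance (cd/m^2) using the thresholds quoted above.

    Assumption: the boundary values 0.001 and 3 cd/m^2 are treated as mesopic.
    """
    if luminance_cd_m2 < 0:
        raise ValueError("luminance cannot be negative")
    if luminance_cd_m2 > 3.0:
        return "photopic"   # daytime vision, cones
    if luminance_cd_m2 < 0.001:
        return "scotopic"   # night vision, rods
    return "mesopic"        # dim light, color still visible


if __name__ == "__main__":
    for value in (100.0, 1.0, 0.0001):
        print(value, "cd/m^2 ->", vision_regime(value))
```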

Logarithmic Image Sensor for Wide Dynamic Range Stereo Vision System

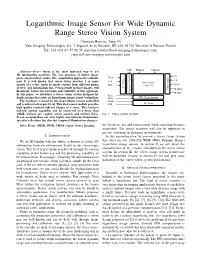

Logarithmic Image Sensor For Wide Dynamic Range Stereo Vision System. Christian Bouvier, Yang Ni. New Imaging Technologies SA, 1 Impasse de la Noisette, BP 426, 91370 Verrieres le Buisson, France. Tel: +33 (0)1 64 47 88 58. Abstract: Stereo vision is the most universal way to get 3D information passively. The fast progress of digital image processing hardware makes this computation approach realizable now. It is well known that stereo vision matches 2 or more images of a scene, taken by image sensors from different points of view. Any information loss, even partially in those images, will drastically reduce the precision and reliability of this approach. In this paper, we introduce a stereo vision system designed for depth sensing that relies on logarithmic image sensor technology. The hardware is based on dual logarithmic sensors controlled and synchronized at pixel level. This dual sensor module provides high quality contrast indexed images of a scene. This contrast indexed sensing capability can be conserved over more than 140dB without any explicit sensor control and without delay. It can accommodate not only highly non-uniform illumination, specular reflections but also fast temporal illumination changes. Index Terms: HDR, WDR, CMOS sensor, Stereo Imaging. [Fig. 1. Sensor general structure.] [...] the details are lost and consequently depth extraction becomes impossible. The sensor reactivity will also be important to prevent saturation in changing environments.

Receiver Dynamic Range: Part 2

The Communications Edge™ Tech-note. Author: Robert E. Watson. Receiver Dynamic Range: Part 2. Part 1 of this article reviews receiver measurements which, taken as a group, describe receiver dynamic range. Part 2 introduces comprehensive measurements that attempt to characterize a receiver’s dynamic range as a single number. COMPREHENSIVE MEASUREMENTS: The following receiver measurements and specifications attempt to define overall receiver dynamic range as a single number which can be used both to predict overall receiver performance and as a figure of merit to compare competing receivers. [...] the receiver can process acceptably. In simplest terms, it is the difference in dB between the inband 1-dB compression point and the minimum-receivable signal level. The compression point is obvious enough; however, the minimum-receivable signal must be identified. There are a number of candidates for minimum-receivable signal level, including: “minimum-discernable signal” (MDS), tangential sensitivity, 10-dB SNR, and receiver noise floor. Both MDS and tangential sensitivity are based on subjective judgments of signal strength, which differ significantly [...] NF is the receiver noise figure in dB. This dynamic range definition has the advantage of being relatively easy to measure without ambiguity but, unfortunately, it assumes that the receiver has only a single signal at its input and that the signal is desired. For deep-space receivers, this may be a reasonable assumption, but the terrestrial sphere is not usually so benign. For specification of general-purpose receivers, some interfering signals must be assumed, and this is what the other definitions of receiver dynamic range do.

For the Falcon™ Range of Microphone Products (Ba5105)

Technical Documentation. Microphone Handbook For the Falcon™ Range of Microphone Products. Brüel & Kjær. World Headquarters: DK-2850 Nærum, Denmark. Telephone: +4542800500. Telex: 37316 bruka dk. Fax: +4542801405. Internet: http://www.bk.dk. BA 5105-12. Revision February 1995. Trademarks: Microsoft is a registered trademark and Windows is a trademark of Microsoft Corporation. Copyright © 1994, 1995, Brüel & Kjær A/S. All rights reserved. No part of this publication may be reproduced or distributed in any form or by any means without prior consent in writing from Brüel & Kjær A/S, Nærum, Denmark. Contents: 1. Introduction; 1.1 About the Microphone Handbook; 1.2 About the Falcon™ Range of Microphone Products; 1.3 The Microphones; 1.4 The Preamplifiers; 2. Prepolarized Free-field 1/2" Microphone Type 4188; 2.1 Introduction.

Exposure Metering and Zone System Calibration

Exposure Metering: Relating Subject Lighting to Film Exposure. By Jeff Conrad. A photographic exposure meter measures subject lighting and indicates camera settings that nominally result in the best exposure of the film. The meter calibration establishes the relationship between subject lighting and those camera settings; the photographer’s skill and metering technique determine whether the camera settings ultimately produce a satisfactory image. Historically, the “best” exposure was determined subjectively by examining many photographs of different types of scenes with different lighting levels. Common practice was to use wide-angle averaging reflected-light meters, and it was found that setting the calibration to render the average of scene luminance as a medium tone resulted in the “best” exposure for many situations. Current calibration standards continue that practice, although wide-angle average metering largely has given way to other metering techniques. In most cases, an incident-light meter will cause a medium tone to be rendered as a medium tone, and a reflected-light meter will cause whatever is metered to be rendered as a medium tone. What constitutes a “medium tone” depends on many factors, including film processing, image postprocessing, and, when appropriate, the printing process. More often than not, a “medium tone” will not exactly match the original medium tone in the subject. In many cases, an exact match isn’t necessary: unless the original subject is available for direct comparison, the viewer of the image will be none the wiser. It’s often stated that meters are “calibrated to an 18% reflectance,” usually without much thought given to what the statement means.

Spectral Light Measuring

Spectral Light Measuring. Precision. GOSSEN Foto- und Lichtmesstechnik: Your Guarantee for Precision and Quality. GOSSEN Foto- und Lichtmesstechnik is specialized in the measurement of light, and has decades of experience in its chosen field. Continuous innovation is the answer to rapidly changing technologies, regulations and markets. Outstanding product quality is assured by means of a certified quality management system in accordance with ISO 9001. LED: Light of the Future. LED technology has experienced rapid growth in recent years thanks to the development of LEDs with very high light efficiency. This is being pushed by the ban on conventional light bulbs with low energy efficiency, as well as an ever increasing energy-saving mentality and environmental awareness. LEDs have long since gone beyond their previous status as effects lighting and are being used for display illumination, LED displays and lamps. Modern means of transportation, signal systems and street lights, as well as indoor and outdoor lighting, are no longer conceivable without them. The brightness and color of LEDs vary due to manufacturing processes, for which reason they [...] The GOSSEN Light Lab offers calibration services, for our own products, as well as for products from other manufacturers, and issues factory calibration certificates. The optical table used for this purpose is subject to strict test equipment monitoring, and is traced back to the PTB in Braunschweig, Germany (German Federal Institute of Physics and Metrology). Aside from the PTB, our lab is the first in Germany to be accredited for illuminance by DAkkS (German accreditation authority), and is thus authorized to issue internationally recognized DAkkS calibration certificates. This assures that acquired measured values comply with official regulations and, as a rule, stand up to legal argumentation.

Signal-To-Noise Ratio and Dynamic Range Definitions

Signal-to-noise ratio and dynamic range definitions. The Signal-to-Noise Ratio (SNR) and Dynamic Range (DR) are two common parameters used to specify the electrical performance of a spectrometer. This technical note will describe how they are defined and how to measure and calculate them. Figure 1: Definitions of SNR and DR. The signal out of the spectrometer is a digital signal between 0 and 2^N - 1, where N is the number of bits in the Analogue-to-Digital (A/D) converter on the electronics. Typical numbers for N range from 10 to 16, leading to a maximum signal level between 1,023 and 65,535 counts. The Noise is the stochastic variation of the signal around a mean value. In Figure 1 we have shown a spectrum with a single peak in wavelength and time. As indicated on the figure, the peak signal level will fluctuate a small amount around the mean value due to the noise of the electronics. Noise is measured by the Root-Mean-Squared (RMS) value of the fluctuations over time. The SNR is defined as the average over time of the peak signal divided by the RMS noise of the peak signal over the same time. In order to get an accurate result for the SNR it is generally required to measure over 25 to 50 time samples of the spectrum. It is very important that your input to the spectrometer is constant during SNR measurements. Otherwise, you will be measuring other things like drift of your lamp power or time-dependent signal levels from your sample.
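The SNR definition in the excerpt above (mean of the peak signal over time divided by the RMS of its fluctuations about that mean) can be sketched in a few lines. The function names `max_counts` and `snr` and the sample values are hypothetical, chosen only for illustration; the sketch also assumes the RMS noise is taken over the same samples as the mean, which is how the excerpt reads.

```python
import math


def max_counts(n_bits):
    """Largest digital value an N-bit A/D converter can output: 2^N - 1."""
    return 2 ** n_bits - 1


def snr(peak_samples):
    """Mean peak signal over time divided by the RMS fluctuation about that mean."""
    n = len(peak_samples)
    mean = sum(peak_samples) / n
    rms_noise = math.sqrt(sum((s - mean) ** 2 for s in peak_samples) / n)
    if rms_noise == 0:
        raise ValueError("no fluctuation in the samples; SNR is undefined")
    return mean / rms_noise


if __name__ == "__main__":
    # Hypothetical peak readings from 30 consecutive spectra (within the 25 to 50 range).
    samples = [99.0, 101.0] * 15
    print("max counts for 10 bits:", max_counts(10))
    print("SNR:", snr(samples))
```

Passing a list with a drifting input (for example a slowly rising lamp power) would inflate the RMS term, which is why the excerpt insists on a constant input during the measurement.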

High Dynamic Range Ultrasound Imaging

The final publication is available at http://link.springer.com/article/10.1007/s11548-018-1729-3. Int J CARS. High Dynamic Range Ultrasound Imaging. Alperen Degirmenci · Douglas P. Perrin · Robert D. Howe. Received: 26 January 2018. Abstract. Purpose: High dynamic range (HDR) imaging is a popular computational photography technique that has found its way into every modern smartphone and camera. In HDR imaging, images acquired at different exposures are combined to increase the luminance range of the final image, thereby extending the limited dynamic range of the camera. Ultrasound imaging suffers from limited dynamic range as well; at higher power levels, the hyperechogenic tissue is overexposed, whereas at lower power levels, hypoechogenic tissue details are not visible. In this work, we apply HDR techniques to ultrasound imaging, where we combine ultrasound images acquired at different power levels to improve the level of detail visible in the final image. Methods: Ultrasound images of ex vivo and in vivo tissue are acquired at different acoustic power levels and then combined to generate HDR ultrasound (HDR-US) images. The performance of five tone mapping operators is quantitatively evaluated using a similarity metric to determine the most suitable mapping for HDR-US imaging. Results: The ex vivo and in vivo results demonstrated that HDR-US imaging enables visualizing both hyper- and hypoechogenic tissue at once in a single image. The Durand tone mapping operator preserved the most detail across the dynamic range. Conclusions: Our results strongly suggest that HDR-US imaging can improve the utility of ultrasound in image-based diagnosis and procedure guidance.

Photometric Calibrations

NIST Special Publication 250-37. NIST MEASUREMENT SERVICES: PHOTOMETRIC CALIBRATIONS. Yoshihiro Ohno, Optical Technology Division, Physics Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899. Supersedes SP250-15. Reprint with changes July 1997. U.S. DEPARTMENT OF COMMERCE, William M. Daley, Secretary. Technology Administration, Gary R. Bachula, Acting Under Secretary for Technology. National Institute of Standards and Technology, Robert E. Hebner, Acting Director. PREFACE: The calibration and related measurement services of the National Institute of Standards and Technology are intended to assist the makers and users of precision measuring instruments in achieving the highest possible levels of accuracy, quality, and productivity. NIST offers over 300 different calibrations, special tests, and measurement assurance services. These services allow customers to directly link their measurement systems to measurement systems and standards maintained by NIST. These services are offered to the public and private organizations alike. They are described in NIST Special Publication (SP) 250, NIST Calibration Services Users Guide. The Users Guide is supplemented by a number of Special Publications (designated as the “SP250 Series”) that provide detailed descriptions of the important features of specific NIST calibration services. These documents provide a description of the: (1) specifications for the services; (2) design philosophy and theory; (3) NIST measurement system; (4) NIST operational procedures; (5) assessment of the measurement uncertainty including random and systematic errors and an error budget; and (6) internal quality control procedures used by NIST. These documents will present more detail than can be given in NIST calibration reports, or than is generally allowed in articles in scientific journals. In the past, NIST has published such information in a variety of ways.

Sunlight Readability and Luminance Characteristics of Light

SUNLIGHT READABILITY AND LUMINANCE CHARACTERISTICS OF LIGHT-EMITTING DIODE PUSH BUTTON SWITCHES. Robert J. Fitch, B.S.E.E., M.B.A. Thesis Prepared for the Degree of MASTER OF SCIENCE, UNIVERSITY OF NORTH TEXAS, May 2004. APPROVED: Albert B. Grubbs, Jr., Major Professor and Chair of the Department of Engineering Technology; Don W. Guthrie, Committee Member; Michael R. Kozak, Committee Member; Roman Stemprok, Committee Member; Vijay Vaidyanathan, Committee Member; Oscar N. Garcia, Dean of the College of Engineering; Sandra L. Terrell, Interim Dean of the Robert B. Toulouse School of Graduate Studies. Fitch, Robert J., Sunlight readability and luminance characteristics of light-emitting diode push button switches. Master of Science (Engineering Technology), May 2004, 69 pp., 7 tables, 9 illustrations, references, 22 titles. Lighted push button switches and indicators serve many purposes in cockpits, shipboard applications and military ground vehicles. The quality of lighting produced by switches is vital to operators’ understanding of the information displayed. Utilizing LED technology in lighted switches has challenges that can adversely affect lighting quality. Incomplete data exists to educate consumers about potential differences in LED switch performance between different manufacturers. LED switches from four different manufacturers were tested for six attributes of lighting quality: average luminance and power consumption at full voltage, sunlight readable contrast, luminance contrast under ambient sunlight, legend uniformity, and dual-color uniformity. Three of the four manufacturers have not developed LED push button switches that meet lighting quality standards established with incandescent technology. Copyright 2004 by Robert J. Fitch. ACKNOWLEDGMENTS: I thank Don Guthrie and John Dillow at Aerospace Optics, Fort Worth, Texas, for providing the test samples, lending the use of their laboratories, and providing tremendous support for this research.