Privacy-Aware Publishing of Decentralized Access-Controlled Content

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Multi-Emotion Classification for Song Lyrics

Multi-Emotion Classification for Song Lyrics. Darren Edmonds (Donald Bren School of ICS, University of California, Irvine, [email protected]) and João Sedoc (Stern School of Business, New York University, [email protected])

Abstract: Song lyrics convey a multitude of emotions to the listener and powerfully portray the emotional state of the writer or singer. This paper examines a variety of modeling approaches to the multi-emotion classification problem for songs. We introduce the Edmonds Dance dataset, a novel emotion-annotated lyrics dataset from the reader’s perspective, and annotate the dataset of Mihalcea and Strapparava (2012) at the song level. We find that models trained on relatively small song datasets achieve marginally better performance than BERT (Devlin et al., 2019) fine-tuned on large social media or dialog datasets.

1 Introduction: Text-based sentiment analysis has become increasingly popular in recent years, in part due to its numerous applications in fields such as marketing, [...] and Shamma, 2010; Bobicev and Sokolova, 2017; Takala et al., 2014). Building such a model is especially challenging in practice as there often exists considerable disagreement regarding the perception and interpretation of the emotions of a song or ambiguity within the song itself (Kim et al., 2010). There exist a variety of high-quality text datasets for emotion classification, from social media datasets such as CBET (Shahraki, 2015) and TEC (Mohammad, 2012) to large dialog corpora such as the DailyDialog dataset (Li et al., 2017). However, there remains a lack of comparable emotion-annotated song lyric datasets, and existing lyrical datasets are often annotated for valence-arousal affect rather than distinct emotions (Çano and Morisio, 2017). Consequently, we introduce the Edmonds Dance Dataset, a novel lyrical dataset that was crowdsourced through Amazon Mechanical Turk. -

You Can Judge an Artist by an Album Cover: Using Images for Music Annotation

You Can Judge an Artist by an Album Cover: Using Images for Music Annotation. Jānis Lībeks (Swarthmore College, Swarthmore, PA 19081, Email: [email protected]) and Douglas Turnbull (Department of Computer Science, Ithaca College, Ithaca, NY 14850, Email: [email protected])

Abstract—While the perception of music tends to focus on our acoustic listening experience, the image of an artist can play a role in how we categorize (and thus judge) the artistic work. Based on a user study, we show that both album cover artwork and promotional photographs encode valuable information that helps place an artist into a musical context. We also describe a simple computer vision system that can predict music genre tags based on content-based image analysis. This suggests that we can automatically learn some notion of artist similarity based on visual appearance alone. Such visual information may be helpful for improving music discovery in terms of the quality of recommendations, the efficiency of the search process, and the aesthetics of the multimedia experience.

Fig. 1. Illustrative promotional photos (left) and album covers (right) of artists with the tags pop (1st row), hip hop (2nd row), metal (3rd row). See Section VI for attribution.

I. INTRODUCTION: Imagine that you are at a large summer music festival. You walk over to one of the side stages and observe a band which is about to begin their sound check. Each member of the band has long unkempt hair and is wearing black t-shirts, [...] appearance. Such a measure is useful, for example, because it allows us to develop a novel music retrieval paradigm in which a user can discover new artists by specifying a query image. -

Random Walk with Restart for Automatic Playlist Continuation and Query-Specific Adaptations

Random Walk with Restart for Automatic Playlist Continuation and Query-Specific Adaptations Master's Thesis Timo van Niedek Radboud University, Nijmegen [email protected] 2018-08-22 First Supervisor Prof. dr. ir. Arjen P. de Vries Radboud University, Nijmegen [email protected] Second Supervisor Prof. dr. Martha A. Larson Radboud University, Nijmegen [email protected] Abstract In this thesis, we tackle the problem of automatic playlist continuation (APC). APC is the task of recommending one or more tracks to add to a partially created music playlist. Collaborative filtering methods for APC do not take the purpose of a playlist into account, while recent research has shown that a user's judgement of playlist quality is highly subjective. We therefore propose a system in which metadata, audio features and playlist titles are used as a proxy for its purpose, and build a recommender that combines these features with a CF-like approach. Based on recent successes in other recommendation domains, we use random walk with restart as our first recommendation approach. The random walks run over a bipartite graph representation of playlists and tracks, and are highly scalable with respect to the collection size. The playlist characteristics are determined from the track metadata, audio features and playlist title. We propose a number of extensions that integrate these characteristics, namely 1) title-based prefiltering, 2) title-based retrieval, 3) feature-based prefiltering and 4) degree pruning. We evaluate our results on the Million Playlist Dataset, a recently published dataset for the purpose of researching automatic playlist continuation. -

Visual Art Works

COMPENDIUM: Chapter 900 Visual Art Works. 901 What This Chapter Covers; 902 Visual Arts Division; 903 What Is a Visual Art Work?; 903.1 Pictorial, Graphic, and Sculptural Works; 903.2 Architectural Works; 904 Fixation of Visual Art Works; 905 Copyrightable Authorship in Visual Art Works; 906 Uncopyrightable Material; 906.1 Common Geometric Shapes -

Lyrics-Based Analysis and Classification of Music

Lyrics-based Analysis and Classification of Music. Michael Fell (Computational Linguistics, Saarland University, D-66123 Saarbrücken, [email protected]) and Caroline Sporleder (Computational Linguistics & Digital Humanities, Trier University, D-54286 Trier, [email protected]) Abstract We present a novel approach for analysing and classifying lyrics, experimenting both with n-gram models and more sophisticated features that model different dimensions of a song text, such as vocabulary, style, semantics, orientation towards the world, and song structure. We show that these can be combined with n-gram features to obtain performance gains on three different classification tasks: genre detection, distinguishing the best and the worst songs, and determining the approximate publication time of a song. 1 Introduction The ever growing amount of music available on the internet calls for intelligent tools for browsing and searching music databases. Music recommendation and retrieval systems can aid users in finding music that is relevant to them. This typically requires automatic music analysis, e.g., classification according to genre, content or artist and song similarity. In addition, automatic music (and lyrics) analysis also offers potential benefits for musicology research, for instance, in the field of Sociomusicology where lyrics analysis is used to place a piece of music in its sociocultural context (Frith, 1988). In principle, both the audio signal and the lyrics (if any exist) can be used to analyse a music piece (as well as additional data such as album reviews (Baumann et al., 2004)). In this paper, we focus on the contribution of the lyrics. Songwriters deploy unique stylistic devices to build their lyrics. -

Dramatis Personae1

Appendix · Dramatis Personae

Abraxas, Mickie (The Tailor of Panama). As the best friend of Harry Pendel, Mickie helped Harry find a doctor for Marta after she was badly beaten by Noriega’s police for having participated in a protest demonstration. As a result, Mickie was imprisoned, tortured, and raped repeatedly. After this traumatic experience, he became a broken drunk. Pendel makes him the courageous leader of a fictitious democratic Silent Opposition to the regime. Consequently, Mickie is again hounded by the police and ends up committing suicide. Harry feels enormous remorse yet uses Mickie’s death to make a martyr of him—just the excuse the Americans need to launch their invasion of Panama.

Alleline, Percy (Tinker, Tailor, Soldier, Spy). The Scottish son of a Presbyterian minister, Percy has had a problematic relationship with Control since Percy was his student at Cambridge. Hired by the Circus and mentored by Maston, he cultivates political support in the Conservative party. Alleline becomes head of the Circus after Control’s resignation in 1972. Smiley’s exposure of Bill Haydon, for whom Alleline has been an unwitting tool, ends his career.

Alexis, Dr. (The Little Drummer Girl). Investigator for the German Ministry of Interior, Alexis is the son of a father who resisted Hitler. He is considered erratic and philosemitic by his colleagues. On the losing end of a power struggle in his department, Alexis is recruited by Israeli intelligence, who provide him with invaluable information about the terrorist bombings he is in charge of investigating. This intelligence enables him to reverse his fortunes and advance his career. -

Regulations for Agility Trials and Agility Course Test (ACT)

Regulations for Agility Trials and Agility Course Test (ACT) Amended to January 1, 2020 Published by The American Kennel Club

Revisions to the Regulations for Agility Trials and Agility Course Test (ACT) Effective January 1, 2021

This insert is issued as a supplement to the Regulations for Agility Trials and Agility Course Test (ACT) Amended to January 1, 2020 and approved by the AKC Board of Directors November 10, 2020

Chapter 1. REGULATIONS FOR AGILITY TRIALS Section 14. Opening and Closing Dates.

Opening Date: For all trials, clubs shall set a date and time that entries will first be accepted. Entries received prior to the opening date shall be considered invalid entries and shall be returned as soon as possible.

Closing Date: Clubs shall set a date and time that entries will close.
• The closing date for the trial shall not be less than seven (7) days prior to the trial.
• Entries must be received prior to the published closing date and time.
• Entries for any agility trial may be accepted until the official closing date and time even though the advertised limit has been reached.
• The club may contact exhibitors to notify them of their entry status prior to the closing date.
• Following the closing date, the Trial Secretary shall promptly contact all entrants and advise them of their status. Entries not accepted shall be returned within seven (7) days of the closing date.

Pink Insert Issued: November 2020 REAGIL (3/20)

Revisions to the Regulations for Agility Trials and Agility Course Test (ACT) Effective February 1, 2021

This insert is issued as a supplement to the Regulations for Agility Trials and Agility Course Test (ACT) Amended to January 1, 2020 and approved by the AKC Board of Directors October 13, 2020

Chapter 15 – Regulations for Agility Course Test (ACT) Section 2. -

The Dramatis Personae of Administrative Law Albert Abel

Osgoode Hall Law Journal Article 2 Volume 10, Number 1 (August 1972) The Dramatis Personae of Administrative Law Albert Abel Follow this and additional works at: http://digitalcommons.osgoode.yorku.ca/ohlj Article Citation Information Abel, Albert. "The Dramatis Personae of Administrative Law." Osgoode Hall Law Journal 10.1 (1972) : 61-92. http://digitalcommons.osgoode.yorku.ca/ohlj/vol10/iss1/2 This Article is brought to you for free and open access by the Journals at Osgoode Digital Commons. It has been accepted for inclusion in Osgoode Hall Law Journal by an authorized editor of Osgoode Digital Commons. THE DRAMATIS PERSONAE OF ADMINISTRATIVE LAW By ALBERT ABEL Yes, Virginia, there is an administrative law. But what is it? Little is to be gained by simply taking another fling at Dicey for his incautious and inexact denial, later recanted, of its existence. It is now recognized. But it is not quite accepted. It fits no antique mould. Not knowing just what it includes, the legal profession has never felt quite at ease with it nor quite known how to handle it. True, it is as hard to say just what law itself is but from long association that is seldom perceived as a question. Law is whatever lawyers concern themselves with as being within their special range of competence. It is what lawyers do as lawyers. Similarly administrative law may be approached as the law relating to what public administrators do in the course of administering. There are also private administrators. The law as to them is not administrative law. -

Music Genre Classification with the Million Song Dataset

Music Genre Classification with the Million Song Dataset. 15-826 Final Report. Dawen Liang,† Haijie Gu,‡ and Brendan O’Connor‡ († School of Music, ‡ Machine Learning Department), Carnegie Mellon University, December 3, 2011

1 Introduction: The field of Music Information Retrieval (MIR) draws from musicology, signal processing, and artificial intelligence. A long line of work addresses problems including: music understanding (extract the musically-meaningful information from audio waveforms), automatic music annotation (measuring song and artist similarity), and other problems. However, very little work has scaled to commercially sized data sets. The algorithms and data are both complex. An extraordinary range of information is hidden inside of music waveforms, ranging from perceptual to auditory—which inevitably makes large-scale applications challenging. There are a number of commercially successful online music services, such as Pandora, Last.fm, and Spotify, but most of them are merely based on traditional text IR. Our course project focuses on large-scale data mining of music information with the recently released Million Song Dataset (Bertin-Mahieux et al., 2011; http://labrosa.ee.columbia.edu/millionsong/), which consists of 300GB of audio features and metadata. This dataset was released to push the boundaries of Music IR research to commercial scales. Also, the associated musiXmatch dataset provides textual lyrics information for many of the MSD songs. Combining these two datasets, we propose a cross-modal retrieval framework to combine the music and textual data for the task of genre classification: Given $N$ song-genre pairs $(S_1, G_1), \ldots, (S_N, G_N)$, where $S_i \in \mathcal{F}$ for some feature space $\mathcal{F}$ and $G_i \in \mathcal{G}$ for some genre set $\mathcal{G}$, output the classifier with the highest classification accuracy on the hold-out test set. -
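The problem statement in the snippet above (pick the classifier with the highest accuracy on a hold-out set of song-genre pairs) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the two toy classifiers (a majority-genre baseline and a nearest-centroid rule), the two-dimensional feature vectors, and the genre names are hypothetical and are not taken from the report or from the Million Song Dataset.

```python
from collections import Counter


def accuracy(classifier, pairs):
    """Fraction of (features, genre) pairs that the classifier labels correctly."""
    correct = sum(1 for features, genre in pairs if classifier(features) == genre)
    return correct / len(pairs)


def majority_classifier(train_pairs):
    """Hypothetical baseline: always predict the most frequent training genre."""
    most_common = Counter(genre for _, genre in train_pairs).most_common(1)[0][0]
    return lambda features: most_common


def nearest_centroid_classifier(train_pairs):
    """Hypothetical rule: predict the genre whose mean feature vector is closest."""
    sums, counts = {}, Counter()
    for features, genre in train_pairs:
        running = sums.setdefault(genre, [0.0] * len(features))
        for k, value in enumerate(features):
            running[k] += value
        counts[genre] += 1
    centroids = {g: [v / counts[g] for v in s] for g, s in sums.items()}

    def predict(features):
        # Squared Euclidean distance to each genre centroid.
        return min(
            centroids,
            key=lambda g: sum((a - b) ** 2 for a, b in zip(features, centroids[g])),
        )

    return predict


def select_best(builders, train_pairs, test_pairs):
    """Train every candidate and return (name, accuracy) of the best on the hold-out set."""
    scored = [
        (accuracy(build(train_pairs), test_pairs), name)
        for name, build in builders.items()
    ]
    best_accuracy, best_name = max(scored)
    return best_name, best_accuracy


# Toy song-genre pairs (S_i, G_i); the feature values are made up.
train = [
    ((0.9, 0.1), "rock"), ((0.8, 0.2), "rock"), ((0.7, 0.3), "rock"),
    ((0.1, 0.9), "jazz"), ((0.2, 0.8), "jazz"),
]
test = [((0.85, 0.15), "rock"), ((0.15, 0.85), "jazz")]

builders = {
    "majority": majority_classifier,
    "nearest_centroid": nearest_centroid_classifier,
}
best_name, best_accuracy = select_best(builders, train, test)
print(best_name, best_accuracy)
```

Selecting the winner directly on the hold-out set mirrors the wording of the snippet; in practice a separate validation split is often used for model selection so that the reported test accuracy stays unbiased.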

Classification of Heavy Metal Subgenres with Machine Learning

Thomas Rönnberg Classification of Heavy Metal Subgenres with Machine Learning Master’s Thesis in Information Systems Supervisors: Dr. Markku Heikkilä Asst. Prof. József Mezei Faculty of Social Sciences, Business and Economics Åbo Akademi University Åbo 2020 Subject: Information Systems Writer: Thomas Rönnberg Title: Classification of Heavy Metal Subgenres with Machine Learning Supervisor: Dr. Markku Heikkilä Supervisor: Asst. Prof. József Mezei Abstract: The music industry is undergoing an extensive transformation as a result of growth in streaming data and various AI technologies, which allow for more sophisticated marketing and sales methods. Since consumption patterns vary by different factors such as genre and engagement, each customer segment needs to be targeted uniquely for maximal efficiency. A challenge in this genre-based segmentation method lies in today’s large music databases and their maintenance, which have been shown to require exhausting and time-consuming work. This has led to automatic music genre classification (AMGC) becoming the most common area of research within the growing field of music information retrieval (MIR). A large portion of previous research has been shown to suffer from serious integrity issues. The purpose of this study is to re-evaluate the current state of applying machine learning for the task of AMGC. Low-level features are derived from audio signals to form a custom-made data set consisting of five subgenres of heavy metal music. Different parameter sets and learning algorithms are weighed against each other to derive insights into the success factors. The results suggest that admirable results can be achieved even with fuzzy subgenres, but that a larger number of high-quality features are needed to further extend the accuracy for reliable real-life applications. -

The Mass Ornament: Weimar Essays / Siegfried Kracauer ; Translated, Edited, and with an Introduction by Thomas Y

Siegfried Kracauer was one of the twentieth century's most brilliant cultural critics, a bold and prolific scholar, and an incisive theorist of film. In this volume his important early writings on modern society during the Weimar Republic make their long-awaited appearance in English. This book is a celebration of the masses—their tastes, amusements, and everyday lives. Taking up the master themes of modernity, such as isolation and alienation, mass culture and urban experience, and the relation between the group and the individual, Kracauer explores a kaleidoscope of topics: shopping arcades, the cinema, bestsellers and their readers, photography, dance, hotel lobbies, Kafka, the Bible, and boredom. For Kracauer, the most revelatory facets of modern metropolitan life lie on the surface, in the ephemeral and the marginal. The Mass Ornament today remains a refreshing tribute to popular culture, and its impressively interdisciplinary essays continue to shed light not only on Kracauer's later work but also on the ideas of the Frankfurt School, the genealogy of film theory and cultural studies, Weimar cultural politics, and, not least, the exigencies of intellectual exile. This volume presents the full scope of his gifts as one of the most wide-ranging and penetrating interpreters of modern life. "Known to the English-language public for the books he wrote after he reached America in 1941, most famously for From Caligari to Hitler, Siegfried Kracauer is best understood as a charter member of that extraordinary constellation of Weimar-era intellectuals which has been dubbed retroactively (and misleadingly) the Frankfurt School. -

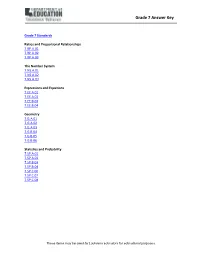

Grade 7 Answer Key

Grade 7 Answer Key

Grade 7 Standards
Ratios and Proportional Relationships: 7.RP.A.01 7.RP.A.02 7.RP.A.03
The Number System: 7.NS.A.01 7.NS.A.02 7.NS.A.03
Expressions and Equations: 7.EE.A.01 7.EE.A.02 7.EE.B.03 7.EE.B.04
Geometry: 7.G.A.01 7.G.A.02 7.G.A.03 7.G.B.04 7.G.B.05 7.G.B.06
Statistics and Probability: 7.SP.A.01 7.SP.A.02 7.SP.B.03 7.SP.B.04 7.SP.C.06 7.SP.C.07 7.SP.C.08

These items may be used by Louisiana educators for educational purposes.

Ratios and Proportional Relationships 7.RP.A.01 Items 1-9

ITEM 1 Gordon makes 4/5 of a recipe with 2/3 bag of cheese. At this rate, what fraction of the bag of cheese will Gordon use to make the entire recipe? A. [...] B. [...] C. [...] D. [...]

ITEM 2 Marcia dusts [...] of her cabinets with [...] bottle of dusting polish. At this rate, what fraction of the bottle of dusting polish will Marcia use to dust all of her cabinets? A. [...] B. [...] C. [...] D. [...]

ITEM 3 Jason ran [...] of a mile in 12 minutes. How many miles can Jason run in 1 hour? A. [...] 4 miles