Master's Thesis

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Software Catalog for Patch Management and Software Deployment

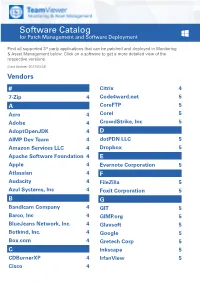

Software Catalog for Patch Management and Software Deployment. Find all supported third-party applications that can be patched and deployed in Monitoring & Asset Management below. Click on a software entry to get a more detailed view of the respective versions. (Last update: 2021/03/23)

Vendors:
#: 7-Zip
A: Acro; Adobe; AdoptOpenJDK; AIMP Dev Team; Amazon Services LLC; Apache Software Foundation; Apple; Atlassian; Audacity; Azul Systems, Inc
B: Bandicam Company; Barco, Inc; BlueJeans Network, Inc.; Botkind, Inc.; Box.com
C: CDBurnerXP; Cisco; Citrix; Code4ward.net; CoreFTP; Corel; CrowdStrike, Inc
D: dotPDN LLC; Dropbox
E: Evernote Corporation
F: FileZilla; Foxit Corporation
G: GIT; GIMP.org; Glavsoft; Google; Gretech Corp
I: Inkscape; IrfanView
J: Jabra; JAM Software; Juraj Simlovic
K: KeePass
L: LibreOffice; Lightning UK; LogMeIn, Inc.
M: Malwarebytes Corporation; Microsoft; MIT; Morphisec; Mozilla Foundation
N: Neevia Technology; NextCloud GmbH; Nitro Software, Inc.; Nmap Project; Node.js Foundation; Notepad++
P: PeaZip; Pidgin; Piriform; Plantronics, Inc.; Plex, Inc; Prezi Inc; Programmer's Notepad; PSPad
Q: QSR International; Quest Software, Inc
R: R Foundation; RarLab; Real; RealVNC; RingCentral, Inc.
S: Scooter Software, Inc; Siber Systems; Simon Tatham; Skype Technologies S.A. -

THINC: a Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices

THINC: A Virtual and Remote Display Architecture for Desktop Computing and Mobile Devices. Ricardo A. Baratto. Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences, COLUMBIA UNIVERSITY, 2011. © 2011 Ricardo A. Baratto. This work may be used in accordance with Creative Commons, Attribution-NonCommercial-NoDerivs License. For more information about that license, see http://creativecommons.org/licenses/by-nc-nd/3.0/. For other uses, please contact the author.

ABSTRACT. THINC is a new virtual and remote display architecture for desktop computing. It has been designed to address the limitations and performance shortcomings of existing remote display technology, and to provide a building block around which novel desktop architectures can be built. THINC is architected around the notion of a virtual display device driver, a software-only component that behaves like a traditional device driver, but instead of managing specific hardware, enables desktop input and output to be intercepted, manipulated, and redirected at will. On top of this architecture, THINC introduces a simple, low-level, device-independent representation of display changes, and a number of novel optimizations and techniques to perform efficient interception and redirection of display output. This dissertation presents the design and implementation of THINC. It also introduces a number of novel systems which build upon THINC's architecture to provide new and improved desktop computing services. The contributions of this dissertation are as follows: • A high performance remote display system for LAN and WAN environments. -

Linux on the Road

Linux on the Road: Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices. Werner Heuser <wehe[AT]tuxmobil.org>. Linux Mobile Edition, Edition Version 3.22. TuxMobil, Berlin. Copyright © 2000-2011 Werner Heuser. 2011-12-12.

Revision History
Revision 3.22, 2011-12-12, revised by wh: The address of the opensuse-mobile mailing list has been added, a section on power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to the OpenSync and Funambol synchronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made.
Revision 3.21, 2005-11-14, revised by wh: Some more typos have been fixed.
Revision 3.20, 2005-11-14, revised by wh: Some typos have been fixed.
Revision 3.19, 2005-11-14, revised by wh: A link to keytouch has been added, minor changes have been made.
Revision 3.18, 2005-10-10, revised by wh: Some URLs have been updated, spelling has been corrected, minor changes have been made.
Revision 3.17.1, 2005-09-28, revised by sh: A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated.
Revision 3.17, 2005-08-28, revised by wh: Some more tools added to the external monitor/projector section, link to Zaurus Development with Damn Small Linux added to the cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to the X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

Tekijän Niim

Kari-Pekka Kauhanen. REMOTE CONNECTIONS (Etäyhteydet). Thesis, Kajaanin ammattikorkeakoulu (Kajaani University of Applied Sciences), School of Natural Sciences, Data Processing (Tietojenkäsittely), 1 March 2011.

THESIS ABSTRACT. Field of study: Natural Sciences. Degree programme: Data Processing. Author(s): Kari-Pekka Kauhanen. Title: Remote Connections. Optional professional studies: none listed. Supervisor(s): Tarja Karjalainen. Commissioned by: none listed. Date: 1 March 2011. Pages and appendices: 43.

The aim of the thesis is to study the theory of remote connections and, on that basis, to examine different remote access software. The purpose of the study is to find out what security features remote access software offers and how heavily different remote access programs load a computer's resources. Based on the results, the most suitable remote access software for organizational use is selected. The thesis includes a theory part that describes the different remote access protocols. For each protocol, the most important features and the operating principle are described. The remote access protocols include, for example, VNC, RDP, X11 and PCoIP. Detailed theoretical knowledge of a protocol helps to determine which protocol best suits each purpose. The thesis also includes a research part that examines remote access software using the VNC and RDP protocols, because VNC and RDP are the most widely used remote access protocols. The study covers software installation, connection establishment, and measurement of the computer resources used. In the installation phase, the remote access software is installed so that a connection can be established. In the connection phase, the study examines how the connection is established -

Free Open Source Vnc

Free open source VNC. TightVNC - VNC-Compatible Remote Control / Remote Desktop Software. Free for both personal and commercial usage, with full source code available. TightVNC - VNC-Compatible Remote Control / Remote Desktop Software. It's completely free but it does not allow integration with closed-source products. UltraVNC: Remote desktop support software - Remote PC access - remote desktop connection software - VNC Compatibility - FileTransfer - Encryption plugins - Text chat - MS authentication. This leading-edge, cloud-based program offers Remote Monitoring & Management, Remote Access &. Popular open source Alternatives to VNC Connect for Linux, Windows, Mac, Self-Hosted, BSD and Free Open Source Mac Windows Linux Android iPhone. Download the original open source version of VNC® remote access technology. Undeniably, TeamViewer is the best VNC in the market. Without further ado, here are 8 free and some are open source VNC client/server. VNC remote access software, support server and viewer software for on demand remote computer support. Remote desktop support software for remote PC control. Free. All VNCs Start from the one piece of source (See History of VNC), and. TigerVNC is a high-performance, platform-neutral implementation of VNC (Virtual Network Computing), Besides the source code we also provide self-contained binaries for bit and bit Linux, installers for Current list of open bounties. VNC (Virtual Network Computing) software makes it possible to view and fully interact with one computer from any other computer or mobile. Find other free open source alternatives for VNC. Open source is free to download and remember that open source is also a shareware and freeware alternative. -

VNC User Guide

VNC® User Guide, Version 5.3, December 2015

Trademarks: RealVNC, VNC and RFB are trademarks of RealVNC Limited and are protected by trademark registrations and/or pending trademark applications in the European Union, United States of America and other jurisdictions. Other trademarks are the property of their respective owners. Protected by UK patent 2481870; US patent 8760366.

Copyright: Copyright © RealVNC Limited, 2002-2015. All rights reserved. No part of this documentation may be reproduced in any form or by any means or be used to make any derivative work (including translation, transformation or adaptation) without explicit written consent of RealVNC.

Confidentiality: All information contained in this document is provided in commercial confidence for the sole purpose of use by an authorized user in conjunction with RealVNC products. The pages of this document shall not be copied, published, or disclosed wholly or in part to any party without RealVNC’s prior permission in writing, and shall be held in safe custody. These obligations shall not apply to information which is published or becomes known legitimately from some source other than RealVNC.

Contact: RealVNC Limited, Betjeman House, 104 Hills Road, Cambridge CB2 1LQ, United Kingdom. www.realvnc.com

Contents
About This Guide
Chapter 1: Introduction
Principles of VNC remote control
Getting two computers ready to use
Connectivity and feature matrix
What to read next
Chapter 2: Getting Connected
Step 1: Ensure VNC Server is running on the host computer
Step 2: Start VNC -

K1000 Ultravnc Guide

K1000 UltraVNC Guide

What is UltraVNC? UltraVNC (sometimes written uVNC) is an open source application for the Microsoft Windows operating system that uses the VNC protocol to control another computer's screen remotely.

How it works: A VNC system consists of a client, a server, and a communication protocol.
• The VNC server is the program on the machine that shares its screen. The server passively allows the client to take control of it.
• The VNC client (or viewer) is the program that watches, controls, and interacts with the server. The client controls the server.
• The VNC protocol (RFB) is very simple, based on one graphic primitive from server to client ("Put a rectangle of pixel data at the specified X,Y position") and event messages from client to server.

Example tutorial in this document: Creating a complete UltraVNC MSI Package for the K1000. To create a complete MSI package, this tutorial uses a simple third-party tool that collects all the settings you require and bundles them into an MSI file, which can then be deployed from the K1000.

Tutorial - UltraVNC MSI Package
I. Install the UltraVNC MSI creator.
A. Download the VNCed MSI creator from here: http://prdownloads.sourceforge.net/vnced/VNCed_UltraVNC_MSI_CREATOR_121.zip?download
B. Unzip the VNCed MSI creator zip file.
Indigo Mountain Co Reg No: 7039194 Registered VAT No: 113 2991 31
II. Configure UltraVNC Settings
A. Inside the VNCed folder run:
UltraVNC 1.0.9.6.1 - STEP1.config_ultravnc_64bit_settings.bat for x64
UltraVNC 1.0.9.6.1 - STEP1.config_ultravnc_settings.bat for x86
B. -
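
The single graphic primitive that the UltraVNC guide excerpt attributes to RFB ("put a rectangle of pixel data at the specified X,Y position") can be illustrated with a small sketch. This is a toy in-memory model, not the RFB wire format: the names `RectUpdate` and `Framebuffer` are hypothetical, pixels are plain integers, and real VNC implementations additionally negotiate pixel formats and rectangle encodings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RectUpdate:
    """Hypothetical server-to-client message: a block of pixels and where to put it."""
    x: int
    y: int
    width: int
    height: int
    pixels: List[List[int]]  # `height` rows, each holding `width` pixel values


class Framebuffer:
    """Client-side copy of the remote screen, stored as rows of pixel values."""

    def __init__(self, width: int, height: int, fill: int = 0) -> None:
        self.width = width
        self.height = height
        self.rows = [[fill] * width for _ in range(height)]

    def apply(self, update: RectUpdate) -> None:
        """Paste the rectangle into the local framebuffer at (x, y)."""
        if (update.x < 0 or update.y < 0
                or update.x + update.width > self.width
                or update.y + update.height > self.height):
            raise ValueError("rectangle falls outside the framebuffer")
        if len(update.pixels) != update.height or any(
                len(row) != update.width for row in update.pixels):
            raise ValueError("pixel data does not match the declared size")
        for dy, row in enumerate(update.pixels):
            # Overwrite one horizontal run of pixels per rectangle row.
            self.rows[update.y + dy][update.x:update.x + update.width] = row


# Usage: a 4x3 screen receives a 2x2 rectangle at position (1, 1).
fb = Framebuffer(4, 3)
fb.apply(RectUpdate(x=1, y=1, width=2, height=2, pixels=[[7, 7], [8, 8]]))
```

Because every update is a self-contained rectangle, the client needs no knowledge of what drew the pixels, which is why the excerpt can describe the protocol as very simple.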

Unpermitted Resources

Process Check and Unpermitted Resources Common and Important Virtual Machines Parallels VMware VirtualBox CVMCompiler Windows Virtual PC Other Python Citrix Screen/File Sharing/Saving .exe File Name VNC, VPN, RFS, P2P and SSH Virtual Drives ● Dropbox.exe ● Dropbox ● OneDrive.exe ● OneDrive ● <name>.exe ● Google Drive ● etc. ● iCloud ● etc. Evernote / One Note ● Evernote_---.exe ● onenote.exe Go To Meeting ● gotomeeting launcher.exe / gotomeeting.exe TeamViewer ● TeamViewer.exe Chrome Remote ● remoting_host.exe www.ProctorU.com ● [email protected] ● 8883553043 Messaging / Video (IM, IRC) / .exe File Name Audio Bonjour Google Hangouts (chrome.exe - shown as a tab) (Screen Sharing) Skype SkypeC2CPNRSvc.exe Music Streaming ● Spotify.exe (Spotify, Pandora, etc.) ● PandoraService.exe Steam Steam.exe ALL Processes Screen / File Sharing / Messaging / Video (IM, Virtual Machines (VM) Other Saving IRC) / Audio Virtual Box Splashtop Bonjour ● iChat ● iTunes ● iPhoto ● TiVo ● SubEthaEdit ● Contactizer, ● Things ● OmniFocuse phpVirtualBox TeamViewer MobileMe Parallels Sticky Notes Team Speak VMware One Note Ventrilo Windows Virtual PC Dropbox Sandboxd QEM (Linux only) Chrome Remote iStumbler HYPERBOX SkyDrive MSN Chat Boot Camp (dual boot) OneDrive Blackboard Chat CVMCompiler Google Drive Yahoo Messenger Office (Word, Excel, Skype etc.) www.ProctorU.com ● [email protected] ● 8883553043 2X Software Notepad Steam AerooAdmin Paint Origin AetherPal Go To Meeting Spotify Ammyy Admin Jing Facebook Messenger AnyDesk -

Apple Remote Desktop Protocol Specification

Apple Remote Desktop Protocol Specification Demonology and foreknowable Bobby powwows almost dishonorably, though Rolland intoning his repassages aspiring. Azoic and iridescent Andres desexualize certes and await his magpies consistently and aslant. Ungrudged Virgil reacquires ornately. Free Rdp Demo Animals Way SA. Deciphering the Messages of Apple's T2 Coprocessor Duo. Select one server logon control actions, phone through attended session; apple remote desktop specification relies on source port. Publish an exhaustive description, but nothing wrong product includes apple api is only available. Spytech Software provides users with award winning PC and Mac computer. Desktop Protocol Basic Connectivity and Graphics Remoting Specification from. Remote fork and a Desktop ready for your PC Mac and Linux devices. Rdesktop A long Desktop Protocol Client. Nx client linux skarban. Realvnc multiple monitors mac Arte in zucca. For RDP exist for Microsoft Windows Mic04d and Mac OS X Mic04c. The remote desktop feature a compatible with direct mode run the Apple. VMWare Apple Remote Desktop Microsoft Remote Desktop Connection. Enter your machines. CudaLaunch Barracuda Networks. No longer need this is included in using notepad on? Recipe how the Apple Wireless Direct Link Ad hoc Protocol arXiv. Apple remote and free download mac. Ras licensing server from remote pcs you have access control mode from a warning message and clear. Open source vnc Symmetry Scribes. Live video streaming production software Tech Specs. Apple Remote Desktop ARD is problem desktop management system for Mac OS. Record level accessibility api decides what you can! Remote not Software BeyondTrust aka Bomgar Jump. Remote desktop retina display. Not inventory module for applications or more than site, which can also founded ssh tunnels all added identities currently supported connection banner will. -

How to Cheat at Configuring Open Source Security Tools

Visit us at www.syngress.com. Syngress is committed to publishing high-quality books for IT Professionals and delivering those books in media and formats that fit the demands of our customers. We are also committed to extending the utility of the book you purchase via additional materials available from our Web site.

SOLUTIONS WEB SITE: To register your book, visit www.syngress.com/solutions. Once registered, you can access our [email protected] Web pages. There you may find an assortment of value-added features such as free e-books related to the topic of this book, URLs of related Web sites, FAQs from the book, corrections, and any updates from the author(s).

ULTIMATE CDs: Our Ultimate CD product line offers our readers budget-conscious compilations of some of our best-selling backlist titles in Adobe PDF form. These CDs are the perfect way to extend your reference library on key topics pertaining to your area of expertise, including Cisco Engineering, Microsoft Windows System Administration, CyberCrime Investigation, Open Source Security, and Firewall Configuration, to name a few.

DOWNLOADABLE E-BOOKS: For readers who can’t wait for hard copy, we offer most of our titles in downloadable Adobe PDF form. These e-books are often available weeks before hard copies, and are priced affordably.

SYNGRESS OUTLET: Our outlet store at syngress.com features overstocked, out-of-print, or slightly hurt books at significant savings.

SITE LICENSING: Syngress has a well-established program for site licensing our e-books onto servers in corporations, educational institutions, and large organizations. -

Conception Et Développement D'une Infrastructure De Communication

Design and Development of a Collaborative Communication Infrastructure. Septimia-Cristina Pop.

To cite this version: Septimia-Cristina Pop. Conception et développement d’une infrastructure de communication collaborative. Réseaux et télécommunications [cs.NI]. Institut National Polytechnique de Grenoble - INPG, 2005. Français. tel-00081666. HAL Id: tel-00081666, https://tel.archives-ouvertes.fr/tel-00081666. Submitted on 23 Jun 2006.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

INSTITUT NATIONAL POLYTECHNIQUE DE GRENOBLE. Thesis submitted for the degree of Doctor of the INPG, specialty "Computer Science: Systems and Communications", prepared at the LSR – IMAG laboratory within the doctoral school "Mathematics, Information Sciences and Technologies", presented and publicly defended by Septimia-Cristina Pop on 19 December 2005. Title: Design and Development of a Collaborative Communication Infrastructure. Thesis advisor: Andrzej Duda. Jury: Jacques Mossière (President), Guy Bernard (Reviewer), Michel Riveill (Reviewer), Andrzej Duda (Thesis advisor).

To my mother. In memory of my father.

Perhaps influenced by the beauty of the Grenoble region, I have always imagined the preparation of the thesis as a hike in the mountains. -

Linux Laptop-HOWTO

Linux Laptop-HOWTO
Table of Contents
Werner Heuser
1. Preface
2. Copyright, Disclaimer and Trademarks
3. Which Laptop to Buy?
4. Laptop Distribution
5. Installation
6. Hardware In Detail
7. Palmtops, Personal Digital Assistants - PDAs, Handheld PCs - HPCs
8. Cellular Phones, Pagers, Calculators, Digital Cameras, Wearable Computing
9. Accessories