Security and Data Analysis — Three Case Studies

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Kommentarer Till Utgåvan Debian 10 (Buster), 64-Bit PC

Kommentarer till utgåvan Debian 11 (bullseye), 64-bit PC The Debian Documentation Project (https://www.debian.org/doc/) 5 oktober 2021 Kommentarer till utgåvan Debian 11 (bullseye), 64-bit PC Detta dokument är fri mjukvara; du kan vidaredistribuera det och/eller modifiera det i enlighet med villkoren i Free Software Foundations GNU General Public License version 2. Detta program är distribuerat med förhoppning att det ska vara användbart men HELT UTAN GARAN- TIER; inte ens underförstådd garanti om SÄLJBARHET eller att PASSA ETT SÄRSKILT SYFTE. Läs mer i GNU General Public License för djupare detaljer. Du borde ha fått en kopia av GNU General Public License tillsammans med det här programmet; om inte, skriv till Free Software Foundation, Inc., 51 Franklin Street. Fifth Floor, Boston, MA, 02110-1301 USA. Licenstexten kan också hämtas på https://www.gnu.org/licenses/gpl-2.0.html och /usr/ share/common-licenses/GPL-2 på Debian-system. ii Innehåll 1 Introduktion 1 1.1 Rapportera fel i det här dokumentet . 1 1.2 Bidra med uppgraderingsrapporter . 1 1.3 Källor för det här dokumentet . 2 2 Vad är nytt i Debian 11 3 2.1 Arkitekturer med stöd . 3 2.2 Vad är nytt i distributionen? . 3 2.2.1 Skrivbordsmiljöer och kända paket . 3 2.2.2 Utskrifter och scanning utan drivrutiner . 4 2.2.2.1 CUPS och utskrifter utan drivrutiner . 4 2.2.2.2 SANE och scannrar utan drivrutiner . 4 2.2.3 Nytt generellt kommando ”open” . 5 2.2.4 Control groups v2 . 5 2.2.5 Beständig systemd-journal . -

System-On-Chip FPGA Devices for Complex Electrical Energy Systems Control

1 System-on-Chip FPGA Devices for Complex Electrical Energy Systems Control I. INTRODUCTION IGITAL electronics has become a standard for controlling electrical systems. This is due to the D constant improvement of the digital devices, whether in terms of density, performance, flexibility of use or cost reduction [1]. This paper looks into System-on-Chip (SoC) Field Programmable Gate Array (FPGA) for controlling complex electrical energy systems. These devices encompass multicore floating point microprocessors embedded with standard peripherals together with an FPGA fabric that allows the design of custom peripherals and specific hardware accelerators. Thus, SoC FPGA devices can be regarded as a good compromise between “super” microcontrollers (very fast in terms of computation but with a fixed micro-architecture) and pure FPGAs (ideal for specific concurrent micro-architectures but limited in terms of density). SoC FPGA architectures are discussed and compared with state-of-the-art DSP-controllers, since they can also be qualified as SoC devices as they are integrating floating point microprocessor cores and substantial peripherals. The main differences between these two groups of devices lies in the opportunity offered to the designer by the SoC FPGAs to customize the SoC device via its internal FPGA fabric. Two case studies demonstrate that with SoC FPGAs one can go beyond standard control by introducing new auxiliary functions that enhance market competitiveness. The first application concerns a fuel cell hybrid electric system controlled by passivity-based power management associated with an aging prognosis algorithm. For this application, it is shown that the time and cost constraints justify the use of a soft processor core to implement the controller. -

Rawkit Documentation Release 0.6.0

rawkit Documentation Release 0.6.0 Cameron Paul, Sam Whited Sep 20, 2018 Contents 1 Requirements 3 2 Installing rawkit 5 3 Getting Help 7 4 Tutorials 9 5 Architecture and Design 13 6 API Reference 15 7 Indices and tables 73 Python Module Index 75 i ii rawkit Documentation, Release 0.6.0 Note: rawkit is still alpha quality software. Until it hits 1.0, it may undergo substantial changes, including breaking API changes. rawkit is a ctypes-based set of LibRaw bindings for Python inspired by Wand. It is licensed under the MIT License. from rawkit.raw import Raw from rawkit.options import WhiteBalance with Raw(filename='some/raw/image.CR2') as raw: raw.options.white_balance= WhiteBalance(camera=False, auto=True) raw.save(filename='some/destination/image.ppm') Contents 1 rawkit Documentation, Release 0.6.0 2 Contents CHAPTER 1 Requirements • Python – CPython 2.7+ – CPython 3.4+ – PyPy 2.5+ – PyPy3 2.4+ • LibRaw – LibRaw 0.16.x (API version 10) – LibRaw 0.17.x (API version 11) 3 rawkit Documentation, Release 0.6.0 4 Chapter 1. Requirements CHAPTER 2 Installing rawkit First, you’ll need to install LibRaw: • libraw on Arch • LibRaw on Fedora 21+ • libraw10 on Ubuntu Utopic+ • libraw-bin on Debian Jessie+ Now you can fetch rawkit from PyPi: $ pip install rawkit 5 rawkit Documentation, Release 0.6.0 6 Chapter 2. Installing rawkit CHAPTER 3 Getting Help Need help? Join the #photoshell channel on Freenode. As always, don’t ask to ask (just ask) and if no one is around: be patient, if you part before we can answer there’s not much we can do. -

HTTP Cookie - Wikipedia, the Free Encyclopedia 14/05/2014

HTTP cookie - Wikipedia, the free encyclopedia 14/05/2014 Create account Log in Article Talk Read Edit View history Search HTTP cookie From Wikipedia, the free encyclopedia Navigation A cookie, also known as an HTTP cookie, web cookie, or browser HTTP Main page cookie, is a small piece of data sent from a website and stored in a Persistence · Compression · HTTPS · Contents user's web browser while the user is browsing that website. Every time Request methods Featured content the user loads the website, the browser sends the cookie back to the OPTIONS · GET · HEAD · POST · PUT · Current events server to notify the website of the user's previous activity.[1] Cookies DELETE · TRACE · CONNECT · PATCH · Random article Donate to Wikipedia were designed to be a reliable mechanism for websites to remember Header fields Wikimedia Shop stateful information (such as items in a shopping cart) or to record the Cookie · ETag · Location · HTTP referer · DNT user's browsing activity (including clicking particular buttons, logging in, · X-Forwarded-For · Interaction or recording which pages were visited by the user as far back as months Status codes or years ago). 301 Moved Permanently · 302 Found · Help 303 See Other · 403 Forbidden · About Wikipedia Although cookies cannot carry viruses, and cannot install malware on 404 Not Found · [2] Community portal the host computer, tracking cookies and especially third-party v · t · e · Recent changes tracking cookies are commonly used as ways to compile long-term Contact page records of individuals' browsing histories—a potential privacy concern that prompted European[3] and U.S. -

Eaton IPM Infrastructure

Eaton® Intelligent Power Manager ® (IPM) Infrastructure User guide Rev 1.1 02/28/2018 1 Class A EMC Statements 1.1 FCC Part 15 This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant to part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial environment. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instruction manual, may cause harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference, in which case the user will be required to correct the interference at his own expense. 1.2 ICES-003 This Class A Interference Causing Equipment meets all requirements of the Canadian Interference Causing Equipment Regulations ICES-003. Cet appareil numérique de la classe A respecte toutes les exigences du Reglement sur le matériel brouilleur du Canada. 1.3 EN 62040-2 Some configurations are classified under EN 62040-2 as “Class-A UPS for Unrestricted Sales Distribution.” For these configurations, the following applies: 1.3.1 WARNING This is a Class A-UPS Product. In a domestic environment, this product may cause radio interference, in which case the user may be required to take additional measures. 2 Requesting a Declaration of Conformity Units that are labeled with a CE mark comply with the following harmonized standards and EU directives: • Harmonized Standards: IEC 61000-3-12 • EU Directives: 73/23/EEC, Council Directive on equipment designed for use within certain voltage limits 93/68/EEC, Amending Directive 73/23/EEC 89/336/EEC, Council Directive relating to electromagnetic compatibility 92/31/EEC, Amending Directive 89/336/EEC relating to EMC The EC Declaration of Conformity is available upon request for products with a CE mark. -

Security: Patches, BIOS and EC Write Protection, Reproducible Builds (Diffoscope) and Coreboot

Published on Tux Machines (http://www.tuxmachines.org) Home > content > Security: Patches, BIOS and EC Write Protection, Reproducible Builds (DiffoScope) and Coreboot Security: Patches, BIOS and EC Write Protection, Reproducible Builds (DiffoScope) and Coreboot By Roy Schestowitz Created 25/07/2020 - 1:48am Submitted by Roy Schestowitz on Saturday 25th of July 2020 01:48:23 AM Filed under Security [1] Security updates for Friday [2] Security updates have been issued by Debian (qemu), Fedora (java-11-openjdk, mod_authnz_pam, podofo, and python27), openSUSE (cni-plugins, tomcat, and xmlgraphics- batik), Oracle (dbus and thunderbird), SUSE (freerdp, kernel, libraw, perl-YAML-LibYAML, and samba), and Ubuntu (libvncserver and openjdk-lts). Librem 14 Features BIOS and EC Write Protection [3] We have been focused on BIOS security at Purism since the beginning, starting with our initiative to replace the proprietary BIOS on our first generation laptops with the open source coreboot project. This was a great first step as it not only meant customers could avoid proprietary code in line with Purism?s social purpose, it also meant the BIOS on Purism laptops could be audited for security bugs and possible backdoors to help avoid problems like the privilege escalation bug in Lenovo?s AMI firmware. Our next goal in BIOS security was to eliminate, replace or otherwise bypass the proprietary Intel Management Engine (ME) in our firmware. We have made massive progress on this front and our Librem laptops, Librem Mini, and Librem Server all ship with an ME that?s been disabled and neutralized. After that we shifted focus to protecting the BIOS against tampering. -

Digital Safety & Security Lt Cdr Mike Rose RN ©

RNIOA Article 15 [17/05/2020] characteristics linked to their IP address to enable ‘tracking.’ Where internet-based criminality is Digital Safety & Security involved, the acquisition of banking and credit card Lt Cdr Mike Rose RN © data is often the main objective. Introduction The aim of this article is to help people understand Prior to the introduction of the internet, personal the risks they face and how to attempt to mitigate computers for home use were self-contained in that them. So, whether you’re accessing the internet the operating system was bought and installed by with your mobile phone, PC, tablet or laptop, you’re the supplier/user, and external communications potentially exposed to every hacker, digital thief and using the PC were not yet technically developed. spammer across the globe. Not to mention that The most likely risk therefore was the possibility of viruses, trojan horses, spyware and adware are someone switching on unattended, non-password- always just one click away. You wouldn’t drive protected computers and copying sensitive without insurance, a seat belt and a GPS device, information onto an external memory device. so, similarly, when you “surf the net”, you need to Essentially, users were in control of their computing make sure that you are well “buckled up” and well- equipment and paid real money for the services informed. This article is written as a guide to “safe and software they used to a known vendor. surfing” and is just as important for personal users as it is for large tech companies. Malicious websites Spyware, which is software that steals your sensitive data without consent, lurks in many corners of the internet; often in places where you'd least expect it. -

Les Menus Indépendants

les menus indépendants étant un grand fan de minimalisme, et donc pas vraiment attiré vers les environnements de bureaux complets (Gnome/KDE/E17) je me trouve souvent confronté au problème du menu. bien sûr les raccourcis clavier sont parfaits, mais que voulez-vous, j'aime avoir un menu… voici quelques uns des menus indépendants que j'utilise: associés à un raccourcis clavier, un lanceur dans un panel/dock ou intégré dans une zone de notification. Sommaire les menus indépendants....................................................................................................1 compiz-deskmenu...........................................................................................................2 installation..................................................................................................................2 paquet Debian.........................................................................................................2 depuis les sources...................................................................................................2 configuration...............................................................................................................2 MyGTKMenu....................................................................................................................5 installation..................................................................................................................5 configuration...............................................................................................................5 -

FIPS 140-2 Non-Proprietary Security Policy Oracle Linux 7 Libreswan

FIPS 140-2 Non-Proprietary Security Policy Oracle Linux 7 Libreswan Cryptographic Module FIPS 140-2 Level 1 Validation Software Version: R7-2.0.0 Date: April 06, 2018 Document Version 1.2 © Oracle Corporation This document may be reproduced whole and intact including the Copyright notice. Title: Oracle Linux 7 Libreswan Cryptographic Module Security Policy April 06, 2018 Author: Atsec Information Security Contributing Authors: Oracle Linux Engineering Oracle Security Evaluations – Global Product Security Oracle Corporation World Headquarters 500 Oracle Parkway Redwood Shores, CA 94065 U.S.A. Worldwide Inquiries: Phone: +1.650.506.7000 Fax: +1.650.506.7200 oracle.com Copyright © 2018, Oracle and/or its affiliates. All rights reserved. This document is provided for information purposes only and the contents hereof are subject to change without notice. This document is not warranted to be error-free, nor subject to any other warranties or conditions, whether expressed orally or implied in law, including implied warranties and conditions of merchantability or fitness for a particular purpose. Oracle specifically disclaim any liability with respect to this document and no contractual obligations are formed either directly or indirectly by this document. This document may reproduced or distributed whole and intact including this copyright notice. Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners. Oracle Linux 7 Libreswan Cryptographic Module Security Policy i -

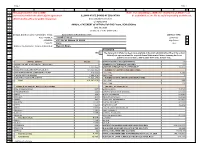

2020 Annual Statement of Affairs

Page 1 Page 1 A B C D E F G H I J 1 This page must be sent to ISBE Note: For submitting to ISBE, the "Statement of Affairs" can 2 and retained within the district/joint agreement ILLINOIS STATE BOARD OF EDUCATION be submitted as one file to avoid separating worksheets. 3 administrative office for public inspection. School Business Services 4 (217)785-8779 5 ANNUAL STATEMENT OF AFFAIRS FOR THE FISCAL YEAR ENDING 6 June 30, 2020 7 (Section 10-17 of the School Code) 8 9 SCHOOL DISTRICT/JOINT AGREEMENT NAME: Aurora East School District 131 DISTRICT TYPE 10 RCDT NUMBER: 31-045-1310-22 Elementary 11 ADDRESS: 417 5th St, Aurora, IL 60505 High School 12 COUNTY: Kane Unit X 1413 NAME OF NEWSPAPER WHERE PUBLISHED: Beacon News 15 ASSURANCE 16 YES x The statement of affairs has been made available in the main administrative office of the school district/joint agreement and the required Annual Statement of Affairs Summary has been published in accordance with Section 10-17 of the School Code. 1817 19 CAPITAL ASSETS VALUE SIZE OF DISTRICT IN SQUARE MILES 13 20 WORKS OF ART & HISTORICAL TREASURES NUMBER OF ATTENDANCE CENTERS 22 21 LAND 2,771,855 9 MONTH AVERAGE DAILY ATTENDANCE 13,327 22 BUILDING & BUILDING IMPROVEMENTS 191,099,506 NUMBER OF CERTIFICATED EMPLOYEES 23 SITE IMPROVMENTS & INFRASTRUCTURE 813,544 FULL-TIME 1,240 24 CAPITALIZED EQUIPMENT 1,553,276 PART-TIME 1 25 CONSTRUCTION IN PROGRESS 13,923,489 NUMBER OF NON-CERTIFICATED EMPLOYEES 26 Total 210,161,670 FULL-TIME 335 27 PART-TIME 113 28 NUMBER OF PUPILS ENROLLED PER GRADE TAX RATE BY FUND -

Nist Sp 800-77 Rev. 1 Guide to Ipsec Vpns

NIST Special Publication 800-77 Revision 1 Guide to IPsec VPNs Elaine Barker Quynh Dang Sheila Frankel Karen Scarfone Paul Wouters This publication is available free of charge from: https://doi.org/10.6028/NIST.SP.800-77r1 C O M P U T E R S E C U R I T Y NIST Special Publication 800-77 Revision 1 Guide to IPsec VPNs Elaine Barker Quynh Dang Sheila Frankel* Computer Security Division Information Technology Laboratory Karen Scarfone Scarfone Cybersecurity Clifton, VA Paul Wouters Red Hat Toronto, ON, Canada *Former employee; all work for this publication was done while at NIST This publication is available free of charge from: https://doi.org/10.6028/NIST.SP.800-77r1 June 2020 U.S. Department of Commerce Wilbur L. Ross, Jr., Secretary National Institute of Standards and Technology Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology Authority This publication has been developed by NIST in accordance with its statutory responsibilities under the Federal Information Security Modernization Act (FISMA) of 2014, 44 U.S.C. § 3551 et seq., Public Law (P.L.) 113-283. NIST is responsible for developing information security standards and guidelines, including minimum requirements for federal information systems, but such standards and guidelines shall not apply to national security systems without the express approval of appropriate federal officials exercising policy authority over such systems. This guideline is consistent with the requirements of the Office of Management and Budget (OMB) Circular A-130. Nothing in this publication should be taken to contradict the standards and guidelines made mandatory and binding on federal agencies by the Secretary of Commerce under statutory authority. -

Vue.Js (Evan You, Ancien De Google)

Crédits : Guillaume Rivière Axios Module d’expertise de 2e année Développer des applications full-web : Devenir développeur full-stack ! < 1. Introduction /> – ESTIA – Guillaume Rivière Dernière révision : mars 2019 1 Contexte . Technologies des GAFAM • Permet de déployer des applications et des services à l’échelle mondiale . Application riches . Progressive webapps . Single-Page Application (SPA) 2 Références . Youtube . Dooble . GMail . Amazon . Facebook . MS O365 . Twitter . Pinterest . Onshape.com . Odoo (OpenERP) 3 Transactions pages web classiques 4 Transactions application web : SPA 5 Histoire . HTML . HTML + CSS . XHTML4 . HTML5 + CSS3 . Ajax . Jquery . Bootstrap . AngularJS / Angular / React / Backbone.js 6 Application full-web . « Stack » • Front-end • API / webservice • Back-end • Base de données Développeur front-end Développeur || full-stack Développeur back-end www.alticreation.com, 2013 7 Webservice d’API : applis web / mobile 9 Cloud computing . SaaS . Google Cloud Platform . AWS . MS Azure 10 Front-end • Angular (TypeScript) • React • Vue Office québécois de la langue française : Application frontale 11 Back-end . Back-end • PHP • Symfony • Laravel • Javascript / Typescript • NodeJS • Python • Djando • Ruby • Rails • R • C++ • Crow • Silicon • Cppcms • Tntnet • ctml Office québécois de la langue française : Application dorsale 12 Base de données . Base de données • Relationnelle • MySQL • PostgreSQL • Not Only SQL (NOSQL) • PostgreSQL • MongoDB 13 Full-Stack . API / Webservice • REST • Format • JSON • XML • XML-RPC • GraphQL 14 Choix pour ce module . VueJS • Simple et facile à apprendre • Permet des projets d’ampleur • Reprend des aspects de React et Angular . Symfony • Très répandu • PHP = 80% des applications serveurs en 201X 15 Plan . VueJS 2 . Symfony 4 . VueJS + Symfony . Projet 16 Prérequis . HTML5 . CSS3 . MySQL . PHP5 . Programmation Orientée Objet .