Sampling Uniformly from the Unit Simplex

Recommended publications

Geometry of Generalized Permutohedra

Geometry of Generalized Permutohedra by Jeffrey Samuel Doker. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Mathematics in the Graduate Division of the University of California, Berkeley. Committee in charge: Federico Ardila, Co-chair; Lior Pachter, Co-chair; Matthias Beck; Bernd Sturmfels; Lauren Williams; Satish Rao. Fall 2011. Copyright 2011 by Jeffrey Samuel Doker. Abstract: We study generalized permutohedra and some of the geometric properties they exhibit. We decompose matroid polytopes (and several related polytopes) into signed Minkowski sums of simplices and compute their volumes. We define the associahedron and multiplihedron in terms of trees and show them to be generalized permutohedra. We also generalize the multiplihedron to a broader class of generalized permutohedra, and describe their face lattices, vertices, and volumes. A family of interesting polynomials that we call composition polynomials arises from the study of multiplihedra, and we analyze several of their surprising properties. Finally, we look at generalized permutohedra of different root systems and study the Minkowski sums of faces of the crosspolytope. To Joe and Sue. Contents: List of Figures; 1 Introduction; 2 Matroid polytopes and their volumes (2.1 Introduction; 2.2 Matroid polytopes are generalized permutohedra; 2.3 The volume of a matroid polytope; 2.4 Independent set polytopes; 2.5 Truncation flag matroids); 3 Geometry and generalizations of multiplihedra (3.1 Introduction -

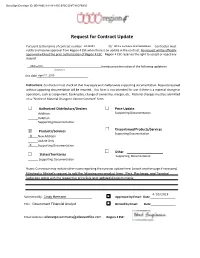

Request for Contract Update

DocuSign Envelope ID: 0E48A6C9-4184-4355-B75C-D9F791DFB905 Request for Contract Update Pursuant to the terms of contract number________________R142201 for _________________________ Office Furniture and Installation Contractor must notify and receive approval from Region 4 ESC when there is an update in the contract. No request will be officially approved without the prior authorization of Region 4 ESC. Region 4 ESC reserves the right to accept or reject any request. Allsteel Inc. hereby provides notice of the following update on (Contractor) this date April 17, 2019 . Instructions: Contractor must check all that may apply and shall provide supporting documentation. Requests received without supporting documentation will be returned. This form is not intended for use if there is a material change in operations, such as assignment, bankruptcy, change of ownership, merger, etc. Material changes must be submitted on a “Notice of Material Change to Vendor Contract” form. Authorized Distributors/Dealers Price Update Addition Supporting Documentation Deletion Supporting Documentation Discontinued Products/Services X Products/Services Supporting Documentation X New Addition Update Only X Supporting Documentation Other States/Territories Supporting Documentation Supporting Documentation Notes: Contractor may include other notes regarding the contract update here: (attach another page if necessary). Attached is Allsteel's request to add the following new product lines: Park, Recharge, and Townhall Collection along with the respective price -

The Geometry of Nim arXiv:1109.6712v1 [math.CO] 30

The Geometry of Nim. Kevin Gibbons. Abstract: We relate the Sierpinski triangle and the game of Nim. We begin by defining both a new high-dimensional analog of the Sierpinski triangle and a natural geometric interpretation of the losing positions in Nim, and then, in a new result, show that these are equivalent in each finite dimension. 0 Introduction: The Sierpinski triangle (fig. 1) is one of the most recognizable figures in mathematics, and with good reason. It appears in everything from Pascal's Triangle to Conway's Game of Life. In fact, it has already been seen to be connected with the game of Nim, albeit in a very different manner than the one presented here [3]. A number of analogs have been discovered, such as the Menger sponge (fig. 2) and a three-dimensional version called a tetrix. We present, in the first section, a generalization in higher dimensions differing from the more typical simplex generalization. Rather, we define a discrete Sierpinski demihypercube, which in three dimensions coincides with the simplex generalization. In the second section, we briefly review Nim and the theory behind optimal play. As in all impartial games (games in which the possible moves depend only on the state of the game, and not on which of the two players is moving), all possible positions can be divided into two classes: those in which the next player to move can force a win, called N-positions, and those in which regardless of what the next player does the other player can force a win, called P-positions. -

Notes Has Not Been Formally Reviewed by the Lecturer

6.896 Topics in Algorithmic Game Theory, February 22, 2010. Lecture 6. Lecturer: Constantinos Daskalakis. Scribe: Jason Biddle, Debmalya Panigrahi. NOTE: The content of these notes has not been formally reviewed by the lecturer. It is recommended that they be read critically. In our last lecture, we proved Nash's Theorem using Brouwer's Fixed Point Theorem. We also showed Brouwer's Theorem via Sperner's Lemma in 2-d using a limiting argument to go from the discrete combinatorial problem to the topological one. In this lecture, we present a multidimensional generalization of the proof from last time. Our proof differs from those typically found in the literature. In particular, we will insist that each step of the proof be constructive. Using constructive arguments, we shall be able to pin down the complexity-theoretic nature of the proof and make the steps algorithmic in subsequent lectures. In the first part of our lecture, we present a framework for the multidimensional generalization of Sperner's Lemma: a canonical triangulation of the hypercube and a legal coloring rule. In the second part, we formally state Sperner's Lemma in n dimensions and prove it using the following constructive steps: colored envelope construction, definition of the walk, identification of the starting simplex, and direction of the walk. 1 Framework: Recall that in the 2-dimensional case we had a square which was divided into triangles. We had also defined a legal coloring scheme for the triangle vertices lying on the boundary of the square. Now let us extend those concepts to higher dimensions. 1.1 Canonical Triangulation of the Hypercube: We begin by introducing the n-simplex as the n-dimensional analog of the triangle in 2 dimensions as shown in Figure 1. -

Interval Vector Polytopes

Interval Vector Polytopes by Gabriel Dorfsman-Hopkins. Senior Honors Thesis; Rosa C. Orellana, Advisor; Department of Mathematics, Dartmouth College, June 2013. Contents: Abstract; 1 Introduction; 2 Preliminaries (2.1 Convex Polytopes; 2.2 Lattice Polytopes; 2.2.1 Ehrhart Theory; 2.3 Faces, Face Lattices and the Dual; 2.3.1 Faces of a Polytope; 2.3.2 The Face Lattice of a Polytope; 2.3.3 Duality; 2.4 Basic Graph Theory); 3 Interval Vector Polytopes (3.1 The Complete Interval Vector Polytope; 3.2 The Fixed Interval Vector Polytope; 3.2.1 Flow Dimension Graphs); 4 The Interval Pyramid (4.1 f-vector of the Interval Pyramid; 4.2 Volume of the Interval Pyramid; 4.3 Duality of the Interval Pyramid); 5 Open Questions (5.1 Generalized Interval Pyramid); Bibliography. Abstract: An interval vector is a {0, 1}-vector where all the ones appear consecutively. An interval vector polytope is the convex hull of a set of interval vectors in R^n. We study several classes of interval vector polytopes which exhibit interesting combinatorial-geometric properties. In particular, we study a class whose volumes are equal to the Catalan numbers, another class whose legs always form a lattice basis for their affine space, and a third whose face numbers are given by the Pascal 3-triangle. Chapter 1, Introduction: An interval vector is a {0, 1}-vector in R^n such that the ones (if any) occur consecutively. These vectors can nicely and discretely model scheduling problems where they represent the length of an uninterrupted activity, and so understanding the combinatorics of these vectors is useful in optimizing scheduling problems of continuous activities of certain lengths. -

Package 'Hypercube'

Package ‘hypercube’. February 28, 2020. Type: Package. Title: Organizing Data in Hypercubes. Version: 0.2.1. Author: Michael Scholz. Maintainer: Michael Scholz <[email protected]>. Description: Provides functions and methods for organizing data in hypercubes (i.e., a multi-dimensional cube). Cubes are generated from molten data frames. Each cube can be manipulated with five operations: rotation (change.dimensionOrder()), dicing and slicing (add.selection(), remove.selection()), drilling down (add.aggregation()), and rolling up (remove.aggregation()). License: GPL-3. Encoding: UTF-8. Depends: R (>= 3.3.0), stats, plotly. Imports: methods, stringr, dplyr. LazyData: TRUE. RoxygenNote: 7.0.2. NeedsCompilation: no. Repository: CRAN. Date/Publication: 2020-02-28 07:10:08 UTC. R topics documented: hypercube-package, add.aggregation, add.selection, as.data.frame.Cube, change.dimensionOrder, Cube-class, Dimension-class, generateCube, importance, plot,Cube-method, print.Importances, remove.aggregation, remove.selection, sales, show,Cube-method, show,Dimension-method, sparsity, summary, Index. hypercube-package: Provides functions and methods for organizing data in hypercubes. Description: This package provides methods for organizing data in a hypercube. Each cube can be manipulated with five operations: rotation (changeDimensionOrder), dicing and slicing (add.selection, remove.selection), drilling down (add.aggregation), and rolling up (remove.aggregation). Details: Package: hypercube -

Hamiltonicity and Combinatorial Polyhedra

Journal of Combinatorial Theory, Series B 31, 297-312 (1981). Hamiltonicity and Combinatorial Polyhedra. D. Naddef, Laboratoire d'Informatique et de Mathématiques Appliquées de Grenoble, Grenoble, France, and W. R. Pulleyblank*, Department of Computer Science, The University of Calgary, Calgary, Alberta T2N 1N4, Canada. Communicated by the Editors. Received April 8, 1980. We say that a polyhedron with 0-1 valued vertices is combinatorial if the midpoint of the line joining any pair of nonadjacent vertices is the midpoint of the line joining another pair of vertices. We show that the class of combinatorial polyhedra includes such well-known classes of polyhedra as matching polyhedra, matroid basis polyhedra, node packing or stable set polyhedra and permutation polyhedra. We show the graph of a combinatorial polyhedron is always either a hypercube (i.e., isomorphic to the convex hull of a k-dimension unit cube) or else is hamilton connected (every pair of nodes is the set of terminal nodes of a hamilton path). This implies several earlier results concerning special cases of combinatorial polyhedra. 1. Introduction: The graph G(P) of a polyhedron P is the graph whose nodes are the vertices of the polyhedron and which has an edge joining each pair of nodes for which the corresponding vertices of the polyhedron are adjacent, that is, joined by an edge of the polyhedron. Such graphs have been studied since the beginnings of graph theory; in 1857 Sir William Hamilton introduced his “tour of the world” game which consisted of constructing a closed tour passing exactly once through each vertex of the dodecahedron. -

Sequential Evolutionary Operations of Trigonometric Simplex Designs For

Sequential Evolutionary Operations of Trigonometric Simplex Designs for High-Dimensional Unconstrained Optimization Applications. Hassan Musafer. Under the supervision of Dr. Ausif Mahmood. Dissertation submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy in Computer Science and Engineering, The School of Engineering, University of Bridgeport, Connecticut, May 2020. © Copyright by Hassan Musafer 2020. Abstract: This dissertation proposes a novel mathematical model for the Amoeba or the Nelder-Mead simplex optimization (NM) algorithm. The proposed Hassan NM (HNM) algorithm allows components of the reflected vertex to adapt to different operations, by breaking down the complex structure of the simplex into multiple triangular simplexes that work sequentially to optimize the individual components of mathematical functions. When the next formed simplex is characterized by different operations, it gives the simplex similar reflections to that of the NM algorithm, but with rotation through an angle determined by the collection of nonisometric features. As a consequence, the generated sequence of triangular simplexes is guaranteed to have not only different shapes but also different directions, to search the complex landscape of mathematical problems and to perform better than the traditional hyperplanes simplex. To test reliability, efficiency, and robustness, the proposed algorithm is examined on three areas of large-scale optimization categories: systems of nonlinear equations, nonlinear least squares, and unconstrained minimization. The experimental results confirmed that the new algorithm delivered better performance than the traditional NM algorithm, represented by a famous Matlab function, known as "fminsearch". -

Computing Invariants of Hyperbolic Coxeter Groups

LMS J. Comput. Math. 18 (1) (2015) 754–773. © 2015 Author. doi:10.1112/S1461157015000273. CoxIter – Computing invariants of hyperbolic Coxeter groups. R. Guglielmetti. Abstract: CoxIter is a computer program designed to compute invariants of hyperbolic Coxeter groups. Given such a group, the program determines whether it is cocompact or of finite covolume, whether it is arithmetic in the non-cocompact case, and whether it provides the Euler characteristic and the combinatorial structure of the associated fundamental polyhedron. The aim of this paper is to present the theoretical background for the program. The source code is available online as supplementary material with the published article and on the author's website (http://coxiter.rgug.ch). Supplementary materials are available with this article. Introduction: Let H^n be the hyperbolic n-space, and let Isom H^n be the group of isometries of H^n. For a given discrete hyperbolic Coxeter group Γ < Isom H^n and its associated fundamental polyhedron P ⊂ H^n, we are interested in geometrical and combinatorial properties of P. We want to know whether P is compact, has finite volume and, if the answer is yes, what its volume is. We also want to find the combinatorial structure of P, namely, the number of vertices, edges, 2-faces, and so on. Finally, it is interesting to find out whether Γ is arithmetic, that is, if Γ is commensurable to the reflection group of the automorphism group of a quadratic form of signature (n, 1). Most of these questions can be answered by studying finite and affine subgroups of Γ, but this involves a huge number of computations. -

The Hippo Pathway Component Wwc2 Is a Key Regulator of Embryonic Development and Angiogenesis in Mice

Hermann et al. Cell Death and Disease (2021) 12:117. https://doi.org/10.1038/s41419-021-03409-0. Cell Death & Disease. Article, Open Access. The Hippo pathway component Wwc2 is a key regulator of embryonic development and angiogenesis in mice. Anke Hermann1, Guangming Wu2, Pavel I. Nedvetsky1, Viktoria C. Brücher3, Charlotte Egbring3, Jakob Bonse1, Verena Höffken1, Dirk Oliver Wennmann1, Matthias Marks4, Michael P. Krahn1, Hans Schöler5, Peter Heiduschka3, Hermann Pavenstädt1 and Joachim Kremerskothen1. Abstract: The WW-and-C2-domain-containing (WWC) protein family is involved in the regulation of cell differentiation, cell proliferation, and organ growth control. As upstream components of the Hippo signaling pathway, WWC proteins activate the Large tumor suppressor (LATS) kinase that in turn phosphorylates Yes-associated protein (YAP) and its paralog Transcriptional coactivator-with-PDZ-binding motif (TAZ), preventing their nuclear import and transcriptional activity. Inhibition of WWC expression leads to downregulation of the Hippo pathway, increased expression of YAP/TAZ target genes and enhanced organ growth. In mice, a ubiquitous Wwc1 knockout (KO) induces a mild neurological phenotype with no impact on embryogenesis or organ growth. In contrast, we could show here that ubiquitous deletion of Wwc2 in mice leads to early embryonic lethality. Wwc2 KO embryos display growth retardation, a disturbed placenta development, impaired vascularization, and finally embryonic death. A whole-transcriptome analysis of embryos lacking Wwc2 revealed a massive deregulation of gene expression with impact on cell fate determination, cell metabolism, and angiogenesis. Consequently, a perinatal, endothelial-specific Wwc2 KO in mice led to disturbed vessel formation and vascular hypersprouting in the retina. -

Four-Dimensional Regular Polytopes

Faculty of Science and Engineering, Mathematics and Applied Mathematics. Four-dimensional regular polytopes. Bachelor's Project Mathematics, November 2020. Student: S.H.E. Kamps. First supervisor: Prof. dr. J. Top. Second assessor: P. Kılıçer, PhD. Abstract: Since Ancient times, Mathematicians have been interested in the study of convex, regular polyhedra and their beautiful symmetries. These five polyhedra are also known as the Platonic Solids. In the 19th century, the four-dimensional analogues of the Platonic solids were described mathematically, adding one regular polytope to the collection with no analogue regular polyhedron. This thesis describes the six convex, regular polytopes in four-dimensional Euclidean space. The focus lies on deriving information about their cells, faces, edges and vertices. Besides that, the symmetry groups of the polytopes are touched upon. To better understand the notions of regularity and symmetry in four dimensions, our journey begins in three-dimensional space. In this light, the thesis also works out the details of a proof of prof. dr. J. Top, showing there exist exactly five convex, regular polyhedra in three-dimensional space. Keywords: Regular convex 4-polytopes, Platonic solids, symmetry groups. Acknowledgements: I would like to thank prof. dr. J. Top for supervising this thesis online and adapting to the circumstances of Covid-19. I also want to thank him for his patience, and all his useful comments in and outside my LaTeX file. Also many thanks to my second supervisor, dr. P. Kılıçer. Furthermore, I would like to thank Jeanne for all her hospitality and kindness to welcome me in her home during the process of writing this thesis. -

arXiv:1705.01294v1

Branes and Polytopes. Luca Romano, email address: [email protected]. Abstract: We investigate the hierarchies of half-supersymmetric branes in maximal supergravity theories. By studying the action of the Weyl group of the U-duality group of maximal supergravities we discover a set of universal algebraic rules describing the number of independent 1/2-BPS p-branes, rank by rank, in any dimension. We show that these relations describe the symmetries of certain families of uniform polytopes. This induces a correspondence between half-supersymmetric branes and vertices of opportune uniform polytopes. We show that half-supersymmetric 0-, 1- and 2-branes are in correspondence with the vertices of the k_21, 2_k1 and 1_k2 families of uniform polytopes, respectively, while 3-branes correspond to the vertices of the rectified version of the 2_k1 family. For 4-branes and higher rank solutions we find a general behavior. The interpretation of half-supersymmetric solutions as vertices of uniform polytopes reveals some intriguing aspects. One of the most relevant is a triality relation between 0-, 1- and 2-branes. arXiv:1705.01294v1 [hep-th] 3 May 2017. Contents: Introduction; 1 Coxeter Group and Weyl Group (1.1 Weyl Group); 2 Branes in E11; 3 Algebraic Structures Behind Half-Supersymmetric Branes; 4 Branes and Polytopes; Conclusions; A Polytopes; B Petrie Polygons. Introduction: Since their discovery, branes have gained a prominent role in the analysis of M-theories and dualities [1]. One of the most important classes of branes consists of Dirichlet branes, or D-branes. D-branes appear in string theory as boundary terms for open strings with mixed Dirichlet-Neumann boundary conditions and, due to their tension, scaling with a negative power of the string coupling constant, they are non-perturbative objects [2].