Piko: a Framework for Authoring Programmable Graphics Pipelines

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Candidate Features for Future OpenGL 5 / Direct3D 12 Hardware and Beyond, 3 May 2014, Christophe Riccio

Candidate features for future OpenGL 5 / Direct3D 12 hardware and beyond, 3 May 2014, Christophe Riccio, G-Truc Creation

Table of contents

INTRODUCTION

1. DRAW SUBMISSION: 1.1. GL_ARB_MULTI_DRAW_INDIRECT; 1.2. GL_ARB_SHADER_DRAW_PARAMETERS; 1.3. GL_ARB_INDIRECT_PARAMETERS; 1.4. A SHADER CODE PATH PER DRAW IN A MULTI DRAW; 1.5. SHADER INDEXED LOSE STATES; 1.6. GL_NV_BINDLESS_MULTI_DRAW_INDIRECT; 1.7. GL_AMD_INTERLEAVED_ELEMENTS

2. RESOURCES: 2.1. GL_ARB_BINDLESS_TEXTURE; 2.2. GL_NV_SHADER_BUFFER_LOAD AND GL_NV_SHADER_BUFFER_STORE; 2.3. GL_ARB_SPARSE_TEXTURE; 2.4. GL_AMD_SPARSE_TEXTURE; 2.5. GL_AMD_SPARSE_TEXTURE_POOL; 2.6. SEAMLESS TEXTURE STITCHING; 2.7. 3D MEMORY LAYOUT FOR SPARSE 3D TEXTURES; 2.8. SPARSE BUFFER; 2.9. GL_KHR_TEXTURE_COMPRESSION_ASTC; 2.10. GL_INTEL_MAP_TEXTURE; 2.11. GL_ARB_SEAMLESS_CUBEMAP_PER_TEXTURE; 2.12. DMA ENGINES; 2.13. UNIFIED MEMORY

3. SHADER OPERATIONS: 3.1. GL_ARB_SHADER_GROUP_VOTE; 3.2. GL_NV_SHADER_THREAD_GROUP; 3.3. GL_NV_SHADER_THREAD_SHUFFLE; 3.4. GL_NV_SHADER_ATOMIC_FLOAT; 3.5. GL_AMD_SHADER_ATOMIC_COUNTER_OPS; 3.6. GL_ARB_COMPUTE_VARIABLE_GROUP_SIZE; 3.7. MULTI COMPUTE DISPATCH; 3.8. GL_NV_GPU_SHADER5; 3.9. GL_AMD_GPU_SHADER_INT64; 3.10. GL_AMD_GCN_SHADER; 3.11. GL_NV_VERTEX_ATTRIB_INTEGER_64BIT; 3.12. GL_AMD_SHADER_TRINARY_MINMAX

4. FRAMEBUFFER: 4.1. GL_AMD_SAMPLE_POSITIONS; 4.2. GL_EXT_FRAMEBUFFER_MULTISAMPLE_BLIT_SCALED; 4.3. GL_NV_MULTISAMPLE_COVERAGE AND GL_NV_FRAMEBUFFER_MULTISAMPLE_COVERAGE; 4.4. GL_AMD_DEPTH_CLAMP_SEPARATE

5. BLENDING: 5.1. GL_NV_TEXTURE_BARRIER; 5.2. GL_EXT_SHADER_FRAMEBUFFER_FETCH (OPENGL ES); 5.3. GL_ARM_SHADER_FRAMEBUFFER_FETCH (OPENGL ES); 5.4. GL_ARM_SHADER_FRAMEBUFFER_FETCH_DEPTH_STENCIL (OPENGL ES); 5.5. GL_EXT_PIXEL_LOCAL_STORAGE (OPENGL ES); 5.6. TILE SHADING; 5.7. GL_INTEL_FRAGMENT_SHADER_ORDERING; 5.8. GL_KHR_BLEND_EQUATION_ADVANCED; 5.9. -

Comparison of Technologies for General-Purpose Computing on Graphics Processing Units

Master of Science Thesis in Information Coding, Department of Electrical Engineering, Linköping University, 2016. Comparison of Technologies for General-Purpose Computing on Graphics Processing Units. Torbjörn Sörman. LiTH-ISY-EX–16/4923–SE

Supervisors: Robert Forchheimer, ISY, Linköpings universitet; Åsa Detterfelt, MindRoad AB. Examiner: Ingemar Ragnemalm, ISY, Linköpings universitet. Organizational unit: Department of Electrical Engineering, Linköping University, SE-581 83 Linköping, Sweden. Copyright © 2016 Torbjörn Sörman

Abstract: The computational capacity of graphics cards for general-purpose computing has progressed fast over the last decade. A major reason is computationally heavy computer games, where standards of performance and high-quality graphics constantly rise. Another reason is better-suited technologies for programming the graphics cards. Combined, the product is high raw performance devices and means to access that performance. This thesis investigates some of the current technologies for general-purpose computing on graphics processing units. Technologies are primarily compared by means of benchmarking performance and secondarily by factors concerning programming and implementation. The choice of technology can have a large impact on performance. The benchmark application found the difference in execution time of the fastest technology, CUDA, compared to the slowest, OpenCL, to be twice a factor of two. The benchmark application also found that the older technologies, OpenGL and DirectX, are competitive with CUDA and OpenCL in terms of resulting raw performance.

Acknowledgments: I would like to thank Åsa Detterfelt for the opportunity to make this thesis work at MindRoad AB. -

Club 3D Radeon R9 380 royalQueen 4096MB GDDR5 256BIT | PCI Express 3.0

Club 3D Radeon R9 380 royalQueen 4096MB GDDR5 256BIT | PCI EXPRESS 3.0

Product Name: Club 3D Radeon R9 380 4GB royalQueen 4096MB GDDR5 256 BIT | PCI Express 3.0. Product Series: Club 3D Radeon R9 300 Series, codename ‘Antigua’. Itemcode: CGAX-R93858. EAN code: 8717249401469. UPC code: 854365005428

Description: The new Club 3D Radeon™ R9 380 4GB royalQueen was conceived to play the most demanding games at 1080p, 1440p, all the way up to 4K resolution. Get quality that rivals 4K, even on 1080p displays thanks to VSR (Virtual Super Resolution). Loaded with the latest advancements in GCN architecture including AMD FreeSync™, AMD Eyefinity and AMD LiquidVR™ technologies plus support for the next-gen APIs DirectX® 12, Vulkan™ and AMD Mantle, the Club 3D R9 380 royalQueen is for the serious PC Gamer.

OS Support: Windows 7, Windows 8.1, Windows 10. 3D API Support: DirectX 11.2, DirectX 12, Vulkan, AMD Mantle.

In the box: • Club 3D R9 380 royalQueen Graphics card • Club 3D Driver & E-manual CD • Club 3D gaming Door hanger • Quick install guide

Features: • Club 3D Radeon R9 380 royalQueen 4GB • 1792 Stream Processors • Clock speed up to 980 MHz • 4096 MB GDDR5 Memory at 5900MHz • 256 BIT Memory Bus • High performance Dual Fan CoolStream cooler • PCI Express 3.0 • AMD Eyefinity 6 capable (with Club 3D MST Hub) with PLP support • AMD Graphics Core Next architecture • AMD PowerTune • AMD ZeroCore Power • AMD FreeSync support • AMD Bridgeless CrossFire • Custom backplate

Outputs: • DisplayPort 1.2a • HDMI 1.4a • Dual Link DVI-I • Dual Link DVI-D

Other info: • Box size: 293 x 195 x 69 mm • Card size: 207 x 111 x 38 mm • Weight: 0.6 Kg • Profile: Standard profile • Slot width: 2 Slots • Requires min 700w PSU with two 6pin PCIe connectors

Disclaimer: While we endeavor to provide the most accurate, up-to-date information available, the content on this document may be out of date or include omissions, inaccuracies or other errors.

SAPPHIRE R9 285 2GB GDDR5 ITX COMPACT OC Edition (UEFI)

Specification

Display Support: 4 x Maximum Display Monitor(s) support
Output: 1 x HDMI (with 3D), 2 x Mini-DisplayPort, 1 x Dual-Link DVI-I
GPU: 928 MHz Core Clock, 28 nm Chip, 1792 x Stream Processors
Video Memory: 2048 MB Size, 256-bit GDDR5, 5500 MHz Effective
Dimension: 171(L) x 110(W) x 35(H) mm Size, 2 x slot
Software: Driver CD, SAPPHIRE TriXX Utility
Accessory: DVI to VGA Adapter, Mini-DP to DP Cable, HDMI 1.4a high speed 1.8 meter cable (Full Retail SKU only), 1 x 8 Pin to 6 Pin x2 Power adaptor

Overview

HDMI (with 3D): Support for Deep Color, 7.1 High Bitrate Audio, and 3D Stereoscopic, ensuring the highest quality Blu-ray and video experience possible from your PC.

Mini-DisplayPort: Enjoy the benefits of the latest generation display interface, DisplayPort. With the ultra high HD resolution, the graphics card ensures that you are able to support the latest generation of LCD monitors.

Dual-Link DVI-I: Equipped with the most popular Dual Link DVI (Digital Visual Interface), this card is able to display ultra high resolutions of up to 2560 x 1600 at 60Hz.

Advanced GDDR5 Memory Technology: GDDR5 memory provides twice the bandwidth per pin of GDDR3 memory, delivering more speed and higher bandwidth.

AMD Stream Technology: Accelerate the most demanding applications with AMD Stream technology and do more with your PC. AMD Stream Technology allows you to use the teraflops of compute power locked up in your graphics processor on tasks other than traditional graphics such as video encoding, at which the graphics processor is many, many times faster than using the CPU alone. -

Masterarbeit / Master's Thesis

MASTER'S THESIS

Title of the Master's Thesis: "Reducing CPU overhead for increased real time rendering performance", submitted by Daniel Martinek BSc in partial fulfilment of the requirements for the degree of Diplom-Ingenieur (Dipl.-Ing.). Vienna, 2016. Degree programme code as it appears on the student record sheet: A 066 935. Degree programme as it appears on the student record sheet: Master's programme in Media Informatics (Masterstudium Medieninformatik) UG2002. Supervisor: Univ.-Prof. Dipl.-Ing. Dr. Helmut Hlavacs

Contents

1 Introduction: 1.1 Motivation; 1.2 Outline

2 Introduction to real-time rendering: 2.1 Using a graphics API; 2.2 API future

3 Related Work: 3.1 nVidia Bindless OpenGL Extensions; 3.2 Introducing the Programmable Vertex Pulling Rendering Pipeline; 3.3 Improving Performance by Reducing Calls to the Driver

4 Libraries and Utilities: 4.1 SDL; 4.2 glm; 4.3 ImGui; 4.4 STB; 4.5 Assimp; 4.6 RapidJSON; 4.7 DirectXTex

5 Engine Architecture: 5.1 breach; 5.2 graphics; 5.3 profiling; 5.4 input; 5.5 filesystem; 5.6 gui; 5.7 resources; 5.8 world; 5.9 rendering; 5.10 rendering2d

6 Resource Conditioning: 6.1 Materials; 6.2 Geometry; 6.3 World Data; 6.4 Textures

7 Resource Management: 7.1 Meshes -

QuickSpecs AMD FirePro W5100 4GB Graphics

QuickSpecs: AMD FirePro W5100 4GB Graphics (J3G92AA)

INTRODUCTION

The AMD FirePro™ W5100 workstation graphics card delivers impressive performance, superb visual quality, and outstanding multi-display capabilities, all in a single-slot, <75W solution. It is an excellent mid-range solution for professionals who work with CAD & Engineering and Media & Entertainment applications. The AMD FirePro W5100 features four display outputs and AMD Eyefinity technology support, as well as support for up to six simultaneous and independent monitors from a single graphics card via DisplayPort Multi-Streaming (see Note 1). Also, the AMD FirePro W5100 is backed by 4GB of ultra-fast GDDR5 memory.

PERFORMANCE AND FEATURES

- AMD Graphics Core Next (GCN) architecture designed to effortlessly balance GPU compute and 3D workloads efficiently
- Segment leading compute architecture yielding up to 1.43 TFLOPS peak single precision
- Optimized and certified for leading workstation ISV applications. The AMD FirePro™ professional graphics family is certified on more than 100 different applications for reliable performance.
- GeometryBoost technology with dual primitive engines
- Four (4) native display DisplayPort 1.2a (with Adaptive-Sync) outputs with 4K resolution support
- Up to six display outputs using DisplayPort 1.2a and MST compliant displays, HBR2 support
- AMD Eyefinity technology (see Note 1) support managing up to 6 displays seamlessly as though they were one display

c04513037 - DA - 15147 Worldwide -

A Closer Look at Problems Related to the Next-Gen Vulkan API

Faculty of Science and Technology, Department of Computer Science. A closer look at problems related to the next-gen Vulkan API. Håvard Mathisen. INF-3981 Master’s Thesis in Computer Science, June 2017

Abstract: Vulkan is a significantly lower-level graphics API than OpenGL and requires more effort from application developers to do memory management, synchronization, and other low-level tasks that are specific to this API. The API is closer to the hardware and offers features that are not exposed in older APIs. For this thesis we will extend an existing game engine with a Vulkan back-end. This allows us to evaluate the API and compare it with OpenGL. We find ways to efficiently solve some challenges encountered when using Vulkan.

Contents

1 Introduction: 1.1 Goals

2 Background: 2.1 GPU Architecture; 2.2 GPU Drivers; 2.3 Graphics APIs (2.3.1 What is Vulkan; 2.3.2 Why Vulkan)

3 Vulkan Overview: 3.1 Vulkan Architecture; 3.2 Vulkan Execution Model; 3.3 Vulkan Tools

4 Vulkan Objects: 4.1 Instances, Physical Devices, Devices (4.1.1 Lost Device); 4.2 Command buffers; 4.3 Queues; 4.4 Memory Management (4.4.1 Memory Heaps; 4.4.2 Memory Types; 4.4.3 Host visible memory; 4.4.4 Memory Alignment, Aliasing and Allocation Limitations); 4.5 Synchronization (4.5.1 Execution dependencies; 4.5.2 Memory dependencies; 4.5.3 Image Layout Transitions -

AMD Announces Major Technology Partnerships at Future of Compute Event

2014-11-20 13:30 CET

AMD Announces Major Technology Partnerships at Future of Compute Event

During event keynotes, Samsung shows upcoming line of FreeSync-enabled ultra high-definition displays and Capcom announces evaluation of AMD Mantle API

SINGAPORE, Nov. 20, 2014: AMD (NYSE: AMD) today at its Future of Compute event announced the introduction of the consumer electronic industry’s first-ever ultra high-definition (UHD) monitors to feature its innovative, open-standards based FreeSync technology. Samsung Electronics Co., Ltd., plans to launch the screen synching technology around the world in March 2015, starting with the Samsung UD590 (23.6-inch and 28-inch models) and UE850 (23.6-inch, 27-inch and 31.5-inch models), and eventually across all of Samsung’s UHD lineups. FreeSync will enable dynamic refresh rates synchronized to the frame rate of AMD Radeon™ graphics cards and APUs to maximally reduce input latency and reduce or fully eliminate visual defects during gaming and video playback.

“We are very pleased to adopt AMD FreeSync technology to our 2015 Samsung Electronics Visual Display division’s UHD monitor roadmap, which fully supports open standards,” said Joe Chan, Vice President of Samsung Electronics Southeast Asia Headquarters. “With this technology, we believe users including gamers will be able to enjoy their videos and games to be played with smoother frame display without stuttering or tearing on their monitors.”

In addition, Capcom announced its collaboration with AMD on the AMD Mantle API to enhance Capcom’s “Panta-Rhei” engine, enabling enhanced gaming performance and visual quality for upcoming Capcom game titles. -

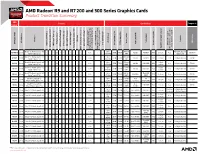

AMD Radeon™ R9 and R7 200 and 300 Series Graphics Cards Product Transition Summary

AMD Radeon™ R9 and R7 200 and 300 Series Graphics Cards Product Transition Summary

[Comparison table flattened during extraction. Recoverable column headers, grouped as Features, Specifications and Compare to: Model; GPU Architecture; API Support; PCI Express® Version; GPU Clock Speed; Stream Processing Units; Memory Amount; Memory Interface; Memory Bandwidth; High Bandwidth Memory; GPU Power Wattage-TDP; PSU Recommendation; Required Power Supply Connectors; Form Factor; 4K Resolution Support; AMD Freesync™ Technology; AMD Eyefinity Technology; AMD CrossFire™; AMD TrueAudio Technology; AMD LiquidVR™; AMD PowerTune Technology; AMD ZeroCore Technology; AMD HD3D Technology; Virtual Super Resolution (VSR); Frame Rate Target Control (FRTC); Video Codec Engine (VCE); HDMI®; DDM Audio; NVIDIA® GeForce®.]

R9 FURY X: 28 nm; PCI Express 3.0; API support DirectX® 12, Mantle, OpenGL® 4.5, Vulkan™, OpenCL™ 2.0; 1050 MHz; 4096 stream processing units; 4GB HBM; up to 4096-bit HBM; 512 GB/s; 275W; 750W; 2 x 8-pin; full height, dual slot, liquid-cooled; 6, Yes; 4, no; compare to GTX 980 Ti

DirectX® 12, Mantle, -

Realtime Computer Graphics on GPUs: Speedup Techniques, Other APIs

Realtime Computer Graphics on GPUs: Speedup Techniques, Other APIs. Jan Kolomazník, Department of Software and Computer Science Education, Faculty of Mathematics and Physics, Charles University in Prague

Outline: Optimizations Intro, Optimize Rendering, Textures, Other APIs

Optimizations Intro

PERFORMANCE BOTTLENECKS I

- Most of the applications require steady framerate: what can slow down rendering?
- Too much geometry rendered
  - CPU/GPU can process only limited amount of data per second
  - Render only what is visible and with adequate details
- Lighting/shading computation
  - Use simpler material model
  - Limit number of generated fragments
- Data transfers between CPU/GPU
  - Try to reuse/cache data on GPU
  - Use async transfers

PERFORMANCE BOTTLENECKS II

- State changes
  - Bundle objects by materials
  - Use UBOs (uniform buffer objects)
- GPU idling: cannot generate work fast enough
  - Multithreaded task generation
  - Not everything must be done in every frame: reuse information (temporal consistency)
- CPU/Driver hotspots
  - Bindless textures
  - Instanced rendering
  - Indirect rendering

DIFFERENT NEEDS

- Large open world
  - Rendering lots of objects
  - Not so many details needed for distant sections
- Indoors scenes
  - Often lots of same objects: instanced rendering
  - Only small portion of the scene visible -

Think Outside the Box

Think outside the box

QX-60: PINNACLE OF THE QX SERIES

The QX-60 is a complete PC based gaming platform designed to drive pay to play gaming machines. It has a comprehensive feature-set designed to address all the requirements of running the latest generation of gaming machines.

- Supports 4K Ultra HD monitors
- AMD Embedded R-Series APU SoC with integrated AMD Radeon™ HD 10000 Series graphics with third generation GCN architecture
- Optional AMD Radeon™ E9171 discrete GPU with 4GB GDDR5 dedicated video memory
- Advanced PCI Express® gaming logic & NVRAM

High performance APU: Dual or Quad-core AMD Embedded R-Series SoC processors clocked at up to 2.1GHz (Max Boost Frequency up to 3.4GHz) and AMD Radeon™ HD 10000 integrated graphics drives three independent monitors. The AMD Embedded R-Series SoC integrates advanced hardware acceleration for 4K video decoding & encoding acceleration.

Advanced Security Features: Quixant hardware security engine incorporating PCI Express® AES 128/256bit hardware encryption/decryption engine, hardware RSA 2048 engine, optional embedded TPM, built-in unique serial number and SHA-1 acceleration engine.

Complete Software Suite: Device drivers, gaming protocols including SAS, secure customizable BIOS. Full support for Windows Embedded (7, 8 and 10) and Linux.

Long supply lifetime: Embedded design with 5 year supply lifetime from launch.

Optional AMD Radeon™ E9171 discrete graphics: The QX-60 can be configured with an optional AMD Radeon™ E9171 discrete embedded graphics processor. This adds 4GB of dedicated GDDR5 video memory and works alongside the integrated AMD Radeon™ HD 10000 integrated into the APU to enhance graphic performance and increase the -

AMD Radeon R9 290X Graphics Card Pioneers New Era in Gaming 25 October 2013

AMD Radeon R9 290X graphics card pioneers new era in gaming, 25 October 2013

AMD today launched the AMD Radeon R9 290X graphics card, introducing the ultimate GPU for a new era in PC gaming. As the top AMD Radeon R9 Series graphics card, the AMD Radeon R9 290X GPU delivers breathtaking performance while pushing the boundaries of audio and visual realism for gamers who demand the best.

"As the pinnacle of our new AMD Radeon R9 Series graphics cards, the AMD Radeon R9 290X GPU embodies AMD's leadership as the ultimate graphics solution for an exceptional gaming experience, affirming that Radeon is gaming," said Matt Skynner, general manager and corporate vice president, AMD Graphics Business Unit. "The formidable combination of Graphics Core Next (GCN) architecture, Mantle and AMD TrueAudio technology raises the bar for breathtaking audio and graphics, and powerful performance, giving enthusiasts an unprecedented gaming experience."

With AMD's award-winning Graphics Core Next (GCN) architecture at its core, the AMD Radeon R9 290X graphics cards have an unrivaled advantage with Mantle, an industry-changing [...] Radeon R9 290X graphics card will deliver a richer and deeply immersive soundscape, including true to life echoes, convolution reverbs and incredibly realistic sounding environments.

Made to perform for UltraHD (4K) and AMD Eyefinity technology, the AMD Radeon R9 290X GPU elevates visual experiences on current and upcoming PC titles with superior image quality and expansive, eye-opening displays.

Features of the AMD Radeon R9 290X graphics cards include:

- 2,816 stream processing units
- Up to 1 GHz engine clock
- 4GB GDDR5 memory
- Up to 5.0Gbps memory clock speed
- 320GB/s memory bandwidth (maximum)
- 5.6 TFLOPS Single Precision compute power
- API support for DirectX 11.2, OpenGL 4.3 and Mantle

The AMD Radeon R9 290X graphics cards are available on Oct.