Digital Video for the Next Millennium

Recommended publications

Through the Looking Glass: Webcam Interception and Protection in Kernel

VIRUS BULLETIN www.virusbulletin.com Covering the global threat landscape

THROUGH THE LOOKING GLASS: WEBCAM INTERCEPTION AND PROTECTION IN KERNEL MODE
Ronen Slavin & Michael Maltsev, Reason Software, USA

INTRODUCTION
When we talk about digital privacy, the computer’s webcam is one of the most relevant components. We all have a tiny fear that someone might be looking through our computer’s camera, spying on us and watching our every move [1]. And while some of us think this scenario is restricted to the realm of movies, the reality is that malware authors and threat actors don’t shy away from incorporating such capabilities into their malware arsenals [2].

Camera manufacturers protect their customers by incorporating into their devices an indicator LED that illuminates when the camera is in use.

[…] and WIA (Windows Image Acquisition), which provides a still image acquisition API.

ATTACK VECTORS
Let’s pretend for a moment that we’re the bad guys. We have gained control of a victim’s computer and we can run any code on it. We would like to use his camera to get a photo or a video to use for our nefarious purposes. What are our options?

The simplest option is just to use one of the user-mode APIs mentioned previously. By default, Windows allows every app to access the computer’s camera, with the exception of Store apps on Windows 10. The downside for the attackers is that camera access will turn on the indicator LED, giving the victim an indication that somebody is watching him.

A sneakier method is to spy on the victim when he turns on the camera himself. Patrick Wardle described a technique like this for Mac [8], but there’s no reason the principle can’t be applied to Windows, albeit with a slightly different

Download Windows Media App How to Download Windows Media Center

How to Download Windows Media Center. wikiHow is a “wiki,” similar to Wikipedia, which means that many of our articles are co-written by multiple authors. To create this article, 16 people, some anonymous, worked to edit and improve it over time. This article has been viewed 208,757 times. Windows Media Center was Microsoft's media PC interface, and allowed you to record live TV, manage and play back your media, and more. Media Center has been discontinued, but you can still get it for Windows 7 or 8.1. If you are using Windows 10, you'll need to use an enthusiast-made hacked version, as Windows Media Center has been completely disabled.

Download this free app to get Windows Media Center back in Windows 10. With the release of Windows 10, Microsoft waved farewell to Windows Media Center. There are some excellent free alternatives around, but if you miss the classic video recorder and media player there's a free download that brings its suite of streaming and playback tools to the new operating system.

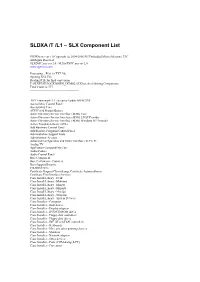

SLDXA /T /L1 – SLX Component List

SLDXA.exe ver 1.0 Copyright (c) 2004-2006 SJJ Embedded Micro Solutions, LLC All Rights Reserved
SLXDiffC.exe ver 2.0 / SLXtoTXTC.exe ver 2.0
www.sjjmicro.com
Processing... File1 to TXT file.
Opening XSL File
Reading RTF for final conversion
F:\SLXTEST\LOCKDOWN_DEMO2.SLX has the following Components
Total Count is: 577
--------------------------------------------------
.NET Framework 1.1 - Security Update KB887998
Accessibility Control Panel
Accessibility Core
ACPI Fixed Feature Button
Active Directory Service Interface (ADSI) Core
Active Directory Service Interface (ADSI) LDAP Provider
Active Directory Service Interface (ADSI) Windows NT Provider
Active Template Library (ATL)
Add Hardware Control Panel
Add/Remove Programs Control Panel
Administration Support Tools
Administrator Account
Advanced Configuration and Power Interface (ACPI) PC
Analog TV
Application Compatibility Core
Audio Codecs
Audio Control Panel
Base Component
Base Performance Counters
Base Support Binaries
CD-ROM Drive
Certificate Request Client & Certificate Autoenrollment
Certificate User Interface Services
Class Install Library - Desk
Class Install Library - Mdminst
Class Install Library - Mmsys
Class Install Library - Msports
Class Install Library - Netcfgx
Class Install Library - Storprop
Class Install Library - System Devices
Class Installer - Computer
Class Installer - Disk drives
Class Installer - Display adapters
Class Installer - DVD/CD-ROM drives
Class Installer - Floppy disk controllers
Class Installer - Floppy disk drives

Scala Infochannel Player Setup Guide

SETUP GUIDE
P/N: D40E04-01

Copyright © 1993-2002 Scala, Inc. All rights reserved. No part of this publication, nor any parts of this package, may be copied or distributed, transmitted, transcribed, recorded, photocopied, stored in a retrieval system, or translated into any human or computer language, in any form or by any means, electronic, mechanical, magnetic, manual, or otherwise, or disclosed to third parties without the prior written permission of Scala Incorporated.

TRADEMARKS
Scala, the exclamation point logo, and InfoChannel are registered trademarks of Scala, Inc. All other trademarks or registered trademarks are the sole property of their respective companies. The following are trademarks or registered trademarks of the companies listed, in the United States and other countries:

Microsoft, MS-DOS, Windows, Windows 95, Windows 98, Windows NT, Windows 2000, Windows XP, DirectX, DirectDraw, DirectSound, ActiveX, ActiveMovie, Internet Explorer, Outlook Express: Microsoft Corporation
IBM, IBM-PC: International Business Machines Corporation
Intel, Pentium, Indeo: Intel Corporation
Adobe, the Adobe logo, Adobe Type Manager, Acrobat, ATM, PostScript: Adobe Systems Incorporated
TrueType, QuickTime, Macintosh: Apple Computer, Incorporated
Agfa: Agfa-Gevaert AG, Agfa Division, Bayer Corporation

“Segoe” is a trademark of Agfa Monotype Corporation. “Flash” and “Folio” are trademarks of Bauer Types S.A. Some parts are derived from the RSA Data Security, Inc. MD5 Message-Digest Algorithm. JPEG file handling is based in part on the work of the Independent JPEG Group. Lexsaurus Speller Technology Copyright © 1992, 1997 by Lexsaurus Software Inc. All rights reserved. TIFF-LZW and/or GIF-LZW: Licensed under Unisys Corporation US Patent No. 4,558,302; End-User use restricted to use on only a single personal computer or workstation which is not used as a server.

RealAudio and RealVideo Content Creation Guide

RealAudio® and RealVideo® Content Creation Guide
Version 5.0
RealNetworks, Inc.

Contents
Introduction
  Streaming and Real-Time Delivery
  Performance Range
  Content Sources
  Web Page Creation and Publishing
  Basic Steps to Adding Streaming Media to Your Web Site
  Using this Guide
Overview
  RealAudio and RealVideo Clips
  Components of RealSystem 5.0
  RealAudio and RealVideo Files and Metafiles
  Delivering a RealAudio or RealVideo Clip

Metadefender Core V4.12.2

MetaDefender Core v4.12.2
© 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners.

Table of Contents
About This Guide
Key Features of Metadefender Core
1. Quick Start with Metadefender Core
  1.1. Installation
    Operating system invariant initial steps
    Basic setup
    1.1.1. Configuration wizard
  1.2. License Activation
  1.3. Scan Files with Metadefender Core
2. Installing or Upgrading Metadefender Core
  2.1. Recommended System Requirements
    System Requirements For Server
    Browser Requirements for the Metadefender Core Management Console
  2.2. Installing Metadefender
    Installation
    Installation notes
    2.2.1. Installing Metadefender Core using command line
    2.2.2. Installing Metadefender Core using the Install Wizard
  2.3. Upgrading MetaDefender Core
    Upgrading from MetaDefender Core 3.x
    Upgrading from MetaDefender Core 4.x
  2.4. Metadefender Core Licensing
    2.4.1. Activating Metadefender Licenses
    2.4.2. Checking Your Metadefender Core License
  2.5. Performance and Load Estimation
    What to know before reading the results: Some factors that affect performance
    How test results are calculated
    Test Reports
    Performance Report - Multi-Scanning On Linux
    Performance Report - Multi-Scanning On Windows
  2.6. Special installation options
    Use RAMDISK for the tempdirectory
3. Configuring Metadefender Core
  3.1. Management Console
  3.2.

Encoding H.264 Video for Streaming and Progressive Download

W4: KEY ENCODING SKILLS, TECHNOLOGIES TECHNIQUES
STREAMING MEDIA EAST - 2019
Jan Ozer www.streaminglearningcenter.com [email protected]/ 276-235-8542 @janozer

Agenda
• Introduction
• Lesson 1: Delivering to Computers, Mobile, OTT, and Smart TVs
• Lesson 2: Codec review
• Lesson 3: Delivering HEVC over HLS
• Lesson 4: Per-title encoding
• Lesson 5: How to build encoding ladder with objective quality metrics
• Lesson 6: Current status of CMAF
• Lesson 7: Delivering with dynamic and static packaging

Lesson 1: Delivering to Computers, Mobile, OTT, and Smart TVs
• Computers
• Mobile
• OTT
• Smart TVs

Choosing an ABR Format for Computers
• Can be DASH or HLS
• Factors
  • Off-the-shelf player vendor (JW Player, Bitmovin, THEOPlayer, etc.)
  • Encoding/transcoding vendor

Choosing an ABR Format for iOS
• Native support (playback in the browser)
  • HTTP Live Streaming
• Playback via an app
  • Any, including DASH, Smooth, HDS or RTMP Dynamic Streaming

iOS Media Support (Native / App)
Codecs: H.264 (High, Level 4.2), HEVC (Main10, Level 5 high) / Any
ABR formats: HLS / Any
DRM: FairPlay / Any
Captions: CEA-608/708, WebVTT, IMSC1 / Any
HDR: HDR10, DolbyVision / ?
http://bit.ly/hls_spec_2017

iOS Encoding Ladders: H.264, HEVC
http://bit.ly/hls_spec_2017

HEVC Hardware Support - iOS 3 % bit.ly/mobile_HEVC http://bit.ly/glob_med_2019

Android: Codec and ABR Format Support
Codecs: VP8 (2.3+), H.264 (3+), VP9 (4.4+), HEVC (5+)
ABR: HLS (3+), DASH 4.4+ via MSE
• Multiple codecs and ABR technologies
• Serious cautions about HLS
• DASH now close to 97%
• HEVC
  • Main Profile Level 3 – mobile

Case Study: Internet Explorer 1994..1997

Ben Slivka, General Manager, Windows UI, [email protected]

Internet Explorer Chronology
8/94 IE effort begins
12/94 License Spyglass Mosaic source code
7/95 IE 1.0 ships as Windows 95 feature
11/95 IE 2.0 ships
3/96 MS Professional Developer’s Conference; AOL deal, Java license announced
8/96 IE 3.0 ships, wins all but PC Mag review
9/97 IE 4.0 ships, wins all the reviews

IE Feature Chronology IE 1.0 (7/14/95) IE 2.0 (11/17/95) HTML 2.0 HTML Tables, other NS enhancements HTML <font face=> Cell background colors & images Progressive Rendering HTTP cookies (arthurbi) Windows Integration SSL Start.Run HTML (MS enhancements) Internet Shortcuts <marquee> Password Caching background sounds Auto Connect, in-line AVIs Disconnect Active VRML 1.0 Navigator parity MS innovation

Feature Chronology - continued IE 3.0 (8/12/96) IE 3.0 - continued... IE 4.0 (9/12/97) Java Accessibility Dynamic HTML (W3C) HTML Frames PICS (W3C) Data Binding Floating frames HTML CSS (W3C) 2D positioning Componentized HTML <object> (W3C) Java JDK 1.1 ActiveX Scripting ActiveX Controls Explorer Bars JavaScript Code Download Active Setup VBScript Code Signing Active Channels MSHTML, SHDOCVW IEAK (corporations) CDF (XML) WININET, URLMON Internet Setup Wizard Security Zones DocObj hosting Referral Server Windows Integration Single Explorer ActiveDesktop™ Navigator parity MS innovation Quick Launch, …

Wins for IE • Quality • CoolBar, Explorer Bars • Componentization • Great Mail/News Client • ActiveX Controls – Outlook Express – vs. Nav plug-ins

Planning for Internet Explorer and the IEAK

02_Inst.fm Page 15 Monday, October 16, 2000 9:40 AM

Chapter 2
Planning for Internet Explorer and the IEAK

Chapter Syllabus
MCSE 2.1 Addressing Technical Needs, Rules, and Policies
MCSE 2.2 Planning for Custom Installations and Settings
MCSE 2.3 Providing Multiple Language Support
MCSE 2.4 Providing Multiple Platform Support
MCSE 2.5 Developing Security Strategies
MCSE 2.6 Configuring for Offline Viewing
MCSE 2.7 Replacing Other Browsers
MCSE 2.8 Developing CMAK Strategies

In this chapter, we will look at material covered in the Planning section of Microsoft’s Implementing and Supporting Microsoft Internet Explorer 5 by using the Internet Explorer Administration Kit exam (70-080). After reading this chapter, you should be able to:

• Identify and evaluate the technical needs of business units, such as Internet Service Providers (ISPs), content providers, and corporate administrators.
• Design solutions based on organizational rules and policies for ISPs, content providers, and corporate administrators.
• Evaluate which components to include in a customized Internet Explorer installation package for a given deployment scenario.
• Develop appropriate security strategies for using Internet Explorer at various sites, including public kiosks, general business sites, single-task-based sites, and intranet-only sites.
• Configure offline viewing for various types of users, including general business users, single-task users, and mobile users.
• Develop strategies for replacing other Internet browsers, such as Netscape Navigator and previous versions of Internet Explorer.
• Decide which custom settings to configure for Microsoft Outlook Express for a given scenario.

An Innovative Video Searching Approach Using Video Indexing

IJCSN - International Journal of Computer Science and Network, Volume 8, Issue 2, April 2019. ISSN (Online): 2277-5420. www.IJCSN.org. Impact Factor: 1.5

An Innovative Video Searching Approach using Video Indexing
1 Jaimon Jacob; 2 Sudeep Ilayidom; 3 V.P.Devassia
[1] Department of Computer Science and Engineering, Govt. Model Engineering College, Thrikkakara, Ernakulam, Kerala, India, 682021
[2] Division of Computer Engineering, School of Engineering, Cochin University of Science and Technology, Thrikkakara, Ernakulam, Kerala, India, 682022
[3] Former Principal, Govt. Model Engineering College, Thrikkakara, Ernakulam, Kerala, India, 682021

Abstract - Searching for video on the World Wide Web has grown rapidly with the explosion of video on social media channels and networks in recent years. At present, video search engines use the title, description, and thumbnail of a video to identify the right one. In this paper, a novel video searching methodology is proposed using the video indexing method. Video indexing is a technique for preparing an index, based on the content of a video, for easy access to frames of interest. Videos are stored along with an index created by the video indexing technique. The video searching methodology checks the content of the index attached to each video to ensure that the video matches the search keyword, and its relevance is determined by the word count of the search keyword in the video index.
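
The relevance rule described in the abstract above (rank a video by how often the search keyword occurs in its attached index) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `tokenize` and `rank_videos`, the representation of an index as plain text, and the tie-breaking by video ID are all assumptions made for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_videos(indexes, keyword):
    """Rank videos by how often `keyword` appears in each video's index.

    `indexes` maps a (hypothetical) video ID to the text of its index.
    Videos whose index never mentions the keyword are left out.
    """
    term = keyword.lower()
    scored = []
    for video_id, index_text in indexes.items():
        count = Counter(tokenize(index_text))[term]
        if count > 0:
            scored.append((video_id, count))
    # Highest count first; ties are broken by video ID so the order is stable.
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored
```

For example, `rank_videos({"a": "cat cat dog", "b": "dog"}, "cat")` keeps only video `a`, since the index of `b` does not contain the keyword.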

NBAR2 Standard Protocol Pack 1.0

NBAR2 Standard Protocol Pack 1.0

Americas Headquarters
Cisco Systems, Inc.
170 West Tasman Drive, San Jose, CA 95134-1706 USA
http://www.cisco.com
Tel: 408 526-4000, 800 553-NETS (6387)
Fax: 408 527-0883

© 2013 Cisco Systems, Inc. All rights reserved.

CONTENTS
CHAPTER 1 Release Notes for NBAR2 Standard Protocol Pack 1.0
CHAPTER 2 BGP, BITTORRENT, CITRIX, DHCP, DIRECTCONNECT, DNS, EDONKEY, EGP, EIGRP, EXCHANGE, FASTTRACK, FINGER, FTP, GNUTELLA, GOPHER, GRE, H323, HTTP, ICMP, IMAP, IPINIP, IPV6-ICMP, IRC, KAZAA2, KERBEROS, L2TP, LDAP, MGCP, NETBIOS, NETSHOW, NFS, NNTP, NOTES, NTP, OSPF, POP3, PPTP, PRINTER, RIP, RTCP, RTP, RTSP, SAP, SECURE-FTP, SECURE-HTTP, SECURE-IMAP, SECURE-IRC, SECURE-LDAP, SECURE-NNTP, SECURE-POP3, SECURE-TELNET, SIP, SKINNY, SKYPE, SMTP, SNMP, SOCKS, SQLNET, SQLSERVER, SSH, STREAMWORK, SUNRPC, SYSLOG, TELNET, TFTP, VDOLIVE, WINMX

CHAPTER 1 Release Notes for NBAR2 Standard Protocol Pack 1.0

NBAR2 Standard Protocol Pack Overview
The Network Based Application Recognition (NBAR2) Standard Protocol Pack 1.0 is provided as the base protocol pack with an unlicensed Cisco image on a device.

SEO-A Review Sonu B

International Journal of Research and Scientific Innovation (IJRSI) | Volume V, Issue II, February 2018 | ISSN 2321–2705

SEO-A Review
Sonu B. Surati, Ghanshyam I. Prajapati
Department of Information Technology, Shri S’ad Vidya Mandal Institute of Technology, Bharuch, Gujarat, India

Abstract— Search Engine Optimization (SEO) is the process of affecting the online visibility of a website or web page. It is important for improving a website's rank in search results and gaining more page views from users, who can then be converted into customers. SEO may target different kinds of search, such as image, video, academic, news, and industry-specific search, and uses the resulting ranks to provide better, optimized results for the user. These ranks help users find popular pages among the many pages available in the (non-paid) search results. SEO also helps website managers improve site traffic, make the site friendly, build links, and market the site's unique value. SEO techniques are classified as either white hat or black hat. White hats tend to produce results that last a long time, whereas black hats anticipate that their sites may eventually be banned, either temporarily or permanently. SEO is used to improve their frames and create more economic and social effectiveness, and can also focus on national and international searchers.

Keywords— Search Engine Optimization, White Hat, Black Hat, Link-Building, Marketing, Website, Social Sharing, Ranking.

I. INTRODUCTION
A search engine is software that is designed to search for information on World Wide Web.

Fig.1 History of SEO

So using cluster k-means algorithm solve delay problems,