Lessons from the Software Revolution

Recommended publications

Introduction Development Lifecycle Model

Development of a Comprehensive Software Engineering Environment. Thomas C. Hartrum and Gary B. Lamont, Department of Electrical and Computer Engineering, School of Engineering, Air Force Institute of Technology, Wright-Patterson AFB, Dayton, Ohio, 45433. Abstract: The generation of a set of tools for the software lifecycle is a recurring theme in the software engineering literature. The development of such tools and their integration into a software development environment is a difficult task at best because of the magnitude (number of variables) and the complexity (combinatorics) of the software lifecycle process. An initial development of a global approach was initiated at AFIT in 1982 as the Software Development Workbench (SDW). Also other restricted environments have evolved emphasizing Ada and distributed processing. Continuing efforts focus on tool development, tool integration, human interfacing (graphics; SADT, DFD, structure charts, ...), data dictionaries, and testing algorithms. The concept of an integrated software development environment can be realized in two distinct levels. The first level deals with the access and usage mechanisms for the interactive tools, while the second level concerns the preservation of software development data and the relationships between the products of the different software life cycle stages. The first level requires that all of the component tools be resident under one operating system and be accessible through a common user interface. The second level dictates the need to store development data (requirements specifications, designs, code, test plans and procedures, manuals, etc.) in an integrated data base that preserves the relationships between the products of the different life cy-

Rugby - a Process Model for Continuous Software Engineering

INSTITUT FÜR INFORMATIK DER TECHNISCHEN UNIVERSITÄT MÜNCHEN, Forschungs- und Lehreinheit I, Angewandte Softwaretechnik. Rugby - A Process Model for Continuous Software Engineering. Stephan Tobias Krusche. Complete reprint of the dissertation approved by the Fakultät für Informatik of the Technische Universität München for the award of the academic degree of Doktor der Naturwissenschaften (Dr. rer. nat.). Chair: Univ.-Prof. Dr. Helmut Seidl. Dissertation examiners: 1. Univ.-Prof. Bernd Brügge, Ph.D. 2. Prof. Dr. Jürgen Börstler, Blekinge Institute of Technology, Karlskrona, Sweden. The dissertation was submitted to the Technische Universität München on 28.01.2016 and accepted by the Fakultät für Informatik on 29.02.2016. Abstract: Software is developed in increasingly dynamic environments. Organizations need the capability to deal with uncertainty and to react to unexpected changes in requirements and technologies. Agile methods already improve the flexibility towards changes and with the emergence of continuous delivery, regular feedback loops have become possible. The abilities to maintain high code quality through reviews, to regularly release software, and to collect and prioritize user feedback, are necessary for continuous software engineering. However, there exists no uniform process model that handles the increasing number of reviews, releases and feedback reports. In this dissertation, we describe Rugby, a process model for continuous software engineering that is based on a meta model, which treats development activities as parallel workflows and which allows tailoring, customization and extension. Rugby includes a change model and treats changes as events that activate workflows. It integrates review management, release management, and feedback management as workflows. As a consequence, Rugby handles the increasing number of reviews, releases and feedback and at the same time decreases their size and effort.

Job Description - Software Engineer I

Job Description - Software Engineer I Department: Engineering - 2013-03 Exemption Status: Non-Exempt Summary: This position is responsible for software development in multi-application, multi-server, and hosted environments. The candidate will primarily provide system/configuration support with a focus in helping the needs of both internal and external customers. He or she will participate in all facets of software and system development life-cycle. The most qualified candidate for this role will have experience working with business intelligence in the public safety and/or public health software fields and have formal software education and/or a ton of practical experience. We develop primarily in C#, .NET, SQL, SSRS and mobile. Anyone that might fit well at FirstWatch must be hard-working, people-oriented, friendly, patient, a fast learner, think quickly on their feet, take initiative and responsibility, and LOVE providing our customers great and honest customer service. This person will need to maintain a high quality productivity level within a fast paced environment. This position shares responsibility (rotates) with other engineering staff for 24×7 on call duties and so must be able to thrive in this environment. Responsibilities: - Maintain and modify the software and system configurations of production, staged, and test applications. - Interface with different stakeholders to determine and propose appropriate and effective technology solutions to meet business and technical objectives. - Develop interfaces, applications and other technical solutions to support the business needs through the planning, analysis, design, development, implementation and maintenance phases of the software and systems development lifecycle. - Create system requirements, technical specifications, and test plans. -

Structured Programming - Retrospect and Prospect

University of Tennessee, Knoxville. Trace: Tennessee Research and Creative Exchange. The Harlan D. Mills Collection, Science Alliance, 11-1986. Structured Programming - Retrospect and Prospect. Harlan D. Mills. Follow this and additional works at: http://trace.tennessee.edu/utk_harlan Part of the Software Engineering Commons. Recommended Citation: Mills, Harlan D., "Structured Programming - Retrospect and Prospect" (1986). The Harlan D. Mills Collection. http://trace.tennessee.edu/utk_harlan/20 This Article is brought to you for free and open access by the Science Alliance at Trace: Tennessee Research and Creative Exchange. It has been accepted for inclusion in The Harlan D. Mills Collection by an authorized administrator of Trace: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. FUNDAMENTAL CONCEPTS IN SOFTWARE ENGINEERING. Structured Programming: Retrospect and Prospect. Harlan D. Mills, IBM Corp. Structured programming has changed how programs are written since its introduction two decades ago. However, it still has a lot of potential for more change. Edsger W. Dijkstra's 1969 "Structured Programming" article¹ precipitated a decade of intense focus on programming techniques that has fundamentally altered human expectations and achievements in software development. Before this decade of intense focus, programming was regarded as a private, puzzle-solving activity of writing computer [...] common wisdom that no sizable program could be error-free. After, many sizable programs have run a year or more with no errors detected. Impact of structured programming. These expectations and achievements are not universal because of the inertia of industrial practices. But they are well-enough established to herald fundamental

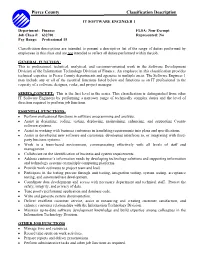

IT Software Engineer 1

Pierce County Classification Description IT SOFTWARE ENGINEER 1 Department: Finance FLSA: Non-Exempt Job Class #: 632700 Represented: No Pay Range: Professional 15 Classification descriptions are intended to present a descriptive list of the range of duties performed by employees in this class and are not intended to reflect all duties performed within the job. GENERAL FUNCTION: This is professional, technical, analytical, and customer-oriented work in the Software Development Division of the Information Technology Division of Finance. An employee in this classification provides technical expertise to Pierce County departments and agencies in multiple areas. The Software Engineer 1 may include any or all of the essential functions listed below and functions as an IT professional in the capacity of a software designer, coder, and project manager. SERIES CONCEPT: This is the first level in the series. This classification is distinguished from other IT Software Engineers by performing a narrower range of technically complex duties and the level of direction required to perform job functions. ESSENTIAL FUNCTIONS: Perform professional functions in software programming and analysis. Assist in designing, coding, testing, deploying, maintaining, enhancing, and supporting County software systems. Assist in working with business customers in translating requirements into plans and specifications. Assist in developing new software and customize, developing interfaces to, or integrating with third- party business systems. Work in a team-based environment, communicating effectively with all levels of staff and management. Collaborate on the identification of business and system requirements. Address customer’s information needs by developing technology solutions and supporting information and technology systems on multiple computing platforms. -

Mining the Digital Information Networks

MINING THE DIGITAL INFORMATION NETWORKS. Mining the Digital Information Networks: Proceedings of the 17th International Conference on Electronic Publishing. Edited by Niklas Lavesson, Blekinge Institute of Technology, Sweden; Peter Linde, Blekinge Institute of Technology, Sweden; and Panayiota Polydoratou, Alexander Technological Educational Institute of Thessaloniki, Greece. Amsterdam • Berlin • Tokyo • Washington, DC. © 2013 The authors and IOS Press. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, without prior written permission from the publisher. ISBN 978-1-61499-269-1 (print) ISBN 978-1-61499-270-7 (online) Library of Congress Control Number: 2013941588. Publisher: IOS Press BV, Nieuwe Hemweg 6B, 1013 BG Amsterdam, Netherlands, fax: +31 20 687 0019, e-mail: [email protected]. Distributor in the USA and Canada: IOS Press, Inc., 4502 Rachael Manor Drive, Fairfax, VA 22032, USA, fax: +1 703 323 3668, e-mail: [email protected]. LEGAL NOTICE: The publisher is not responsible for the use which might be made of the following information. PRINTED IN THE NETHERLANDS. This article is published online with Open Access by IOS Press and distributed under the terms of the Creative Commons Attribution Non-Commercial License. Preface: The main theme of the 17th International Conference on Electronic Publishing (ELPUB) concerns different ways to extract and process data from the vast wealth of digital publishing and how to use and reuse this information in innovative social contexts in a sustainable way.

Making Data Models Readable

MAKING DATA MODELS READABLE. David C. Hay, Essential Strategies, Inc. “Confusion and clutter are failures of [drawing] design, not attributes of information. And so the point is to find design strategies that reveal detail and complexity - rather than to fault the data for an excess of complication. Or, worse, to fault viewers for a lack of understanding.” - Edward R. Tufte¹ Entity/relationship models (or simply “data models”) are powerful tools for analyzing and representing the structure of an organization. Properly used, they can reveal subtle relationships between elements of a business. They can also form the basis for robust and reliable data base design. Data models have gotten a bad reputation in recent years, however, as many people have found them to be more trouble to produce and less beneficial than promised. Discouraged, people have gone on to other approaches to developing systems - often abandoning modeling altogether, in favor of simply starting with system design. Requirements analysis remains important, however, if systems are ultimately to do something useful for the company. And modeling - especially data modeling - is an essential component of requirements analysis. It is important, therefore, to try to understand why data models have been getting such a “bad rap”. Your author believes, along with Tufte, that the fault lies in the way most people design the models, not in the underlying complexity of what is being represented. An entity/relationship model has two primary objectives: First it represents the analyst’s public understanding of an enterprise, so that the ultimate consumer of a prospective computer system can be sure that the analyst got it right.

Inf 212 Analysis of Prog. Langs Elements of Imperative Programming Style

INF 212 ANALYSIS OF PROG. LANGS: ELEMENTS OF IMPERATIVE PROGRAMMING STYLE. Instructors: Kaj Dreef. Copyright © Instructors. Objectives: Level up on things that you may already know (machine model of imperative programs; structured vs. unstructured control flow; assignment; variables and names; lexical scope and blocks; expressions and statements) so as to understand existing languages better. Imperative Programming: Oldest and most popular paradigm (Fortran, Algol, C, Java, ...). Mirrors computer architecture: in a von Neumann machine, memory holds instructions and data. Control-flow statements: conditional and unconditional (GO TO) branches, loops. Key operation: assignment, whose side effect is updating the state (i.e., memory) of the machine. Simplified Machine Model: Registers, Code, Data, Stack, Heap, Program counter, Environment pointer. Memory Management: Registers, code segment, program counter: ignore registers (for our purposes) and details of the instruction set. Data segment: the stack contains data related to block entry/exit; the heap contains data of varying lifetime; the environment pointer points to the current stack position. On block entry, a new activation record is added to the stack; on block exit, the most recent activation record is removed. Control Flow: Control flow in imperative languages is most often designed to be sequential: instructions are executed in the order they are written, and some languages also support concurrent execution (Java). But... Goto in C: #include <stdio.h> int main(){ float num,average,sum; int i,n; printf("Maximum no. of inputs: "); scanf("%d",&n); for(i=1;i<=n;++i){

Tackling Traceability Challenges Through Modeling Principles in Methodologies Underpinned by Metamodels

Tackling Traceability Challenges through Modeling Principles in Methodologies Underpinned by Metamodels. Angelina Espinoza and Juan Garbajosa, Universidad Politecnica de Madrid - Technical University of Madrid, System and Software Technology Group (SYST), E.U. Informatica. Ctra. de Valencia Km. 7, E-28031, Spain, http://syst.eui.upm.es; aespinoza -at- syst.eui.upm.es, jgs -at- eui.upm.es Abstract. Traceability is recognized to be essential for supporting software development. However, a number of traceability issues are still open, such as link semantics formalization or traceability process models. Traceability methodologies underpinned by metamodels are a promising approach. However current metamodels still have serious limitations. Concerning methodologies in general, three hierarchical layered levels have been identified: metamodel, methodology and project. Metamodels do not often properly support this architecture, and that results in semantic problems at the time of specifying the methodology. Another reason is that they provide extensive predefined sets of types for describing project attributes, while these project attributes are domain specific and, sometimes, even project specific. This paper introduces two complementary modeling principles to overcome these limitations, i.e. the metamodeling three layer hierarchy, and power-type patterns modeling principles. Mechanisms to extend and refine traceability models are inherent to them. The paper shows that, when methodologies are developed from metamodels based on these two principles, the result is a methodology well fitted to project features. Links semantics is also improved. Keywords: Traceability methodology, traceability metamodel, clabject, power-type pattern, metamodeling hierarchy, mechanisms for extension of traceability metamodels, metamodel extensibility.
1 Introduction Traceability is considered fundamental to perform processes and tasks such as V&V, change management, and impact analysis. -

Agile Methods: Where Did “Agile Thinking” Come From?

Historical Roots of Agile Methods: Where did “Agile Thinking” Come from? Noura Abbas, Andrew M Gravell, Gary B Wills School of Electronics and Computer Science, University of Southampton Southampton, SO17 1BJ, United Kingdom {na06r, amg, gbw}@ecs.soton.ac.uk Abstract. The appearance of Agile methods has been the most noticeable change to software process thinking in the last fifteen years [16], but in fact many of the “Agile ideas” have been around since 70’s or even before. Many studies and reviews have been conducted about Agile methods which ascribe their emergence as a reaction against traditional methods. In this paper, we argue that although Agile methods are new as a whole, they have strong roots in the history of software engineering. In addition to the iterative and incremental approaches that have been in use since 1957 [21], people who criticised the traditional methods suggested alternative approaches which were actually Agile ideas such as the response to change, customer involvement, and working software over documentation. The authors of this paper believe that education about the history of Agile thinking will help to develop better understanding as well as promoting the use of Agile methods. We therefore present and discuss the reasons behind the development and introduction of Agile methods, as a reaction to traditional methods, as a result of people's experience, and in particular focusing on reusing ideas from history. Keywords: Agile Methods, Software Development, Foundations and Conceptual Studies of Agile Methods. 1 Introduction Many reviews, studies and surveys have been conducted on Agile methods [1, 20, 15, 23, 38, 27, 22]. -

Alignment of Business and Application Layers of ArchiMate

Alignment of Business and Application Layers of ArchiMate. Oleg Svatoš¹, Václav Řepa¹. ¹Department of Information Technologies, Faculty of Informatics and Statistics, University of Economics, Prague. [email protected], [email protected] Abstract. ArchiMate is a widely used standard for modelling enterprise architecture and organizes the model in separate yet interconnected layers. Unfortunately the ArchiMate specification provides us with rather brief detail on the relations between the individual layers and the guidelines how to have them aligned. In this paper we focus on the alignment between business and application layers, especially alignment of business processes and application functions, which we see as a crucial condition for proper and consistent enterprise architecture. For this alignment we propose an extension for ArchiMate based on the development done in Methodology for Modelling and Analysis of Business Process (MMABP). Application of the proposed extension is illustrated on an example and its benefits discussed. Keywords: business process model · Enterprise Architecture · ArchiMate · TOGAF · MMABP · functional model · object life cycle. 1 Introduction. The enterprise architecture plays an important role in current corporate management. Enterprises realized that after the initial spontaneous growth and accumulation of different legacy systems over the years, it is necessary to create the living organism forming an enterprise under some kind of control in order to allow managing it. The enterprise architecture is one of the tools which help them to do it. There are different frameworks for capturing the enterprise architectures like FEAF [20], DoDAF [21], ISO 19439 [22], etc. out of which the most popular standard is the TOGAF [14] accompanied by ArchiMate as the modelling language [1].

A Framework for Representing Knowledge

A Framework for Representing Knowledge Marvin Minsky MIT-AI Laboratory Memo 306, June, 1974. Reprinted in The Psychology of Computer Vision, P. Winston (Ed.), McGraw-Hill, 1975. Shorter versions in J. Haugeland, Ed., Mind Design, MIT Press, 1981, and in Cognitive Science, Collins, Allan and Edward E. Smith (eds.) Morgan-Kaufmann, 1992 ISBN 55860-013-2] FRAMES It seems to me that the ingredients of most theories both in Artificial Intelligence and in Psychology have been on the whole too minute, local, and unstructured to account–either practically or phenomenologically–for the effectiveness of common-sense thought. The "chunks" of reasoning, language, memory, and "perception" ought to be larger and more structured; their factual and procedural contents must be more intimately connected in order to explain the apparent power and speed of mental activities. Similar feelings seem to be emerging in several centers working on theories of intelligence. They take one form in the proposal of Papert and myself (1972) to sub-structure knowledge into "micro-worlds"; another form in the "Problem-spaces" of Newell and Simon (1972); and yet another in new, large structures that theorists like Schank (1974), Abelson (1974), and Norman (1972) assign to linguistic objects. I see all these as moving away from the traditional attempts both by behavioristic psychologists and by logic-oriented students of Artificial Intelligence in trying to represent knowledge as collections of separate, simple fragments. I try here to bring together several of these issues by pretending to have a unified, coherent theory. The paper raises more questions than it answers, and I have tried to note the theory's deficiencies.