The Conferences Start Time Table

Recommended publications

Introduction Development Lifecycle Model

Development of a Comprehensive Software Engineering Environment Thomas C. Hartrum and Gary B. Lamont, Department of Electrical and Computer Engineering, School of Engineering, Air Force Institute of Technology, Wright-Patterson AFB, Dayton, Ohio, 45433 Abstract The generation of a set of tools for the software lifecycle is a recurring theme in the software engineering literature. The development of such tools and their integration into a software development environment is a difficult task at best because of the magnitude (number of variables) and the complexity (combinatorics) of the software lifecycle process. An initial development of a global approach was initiated at AFIT in 1982 as the Software Development Workbench (SDW). Also other restricted environments have evolved emphasizing Ada and distributed processing. Continuing efforts focus on tool development, tool integration, human interfacing (graphics; SADT, DFD, structure charts, ...), data dictionaries, and testing algorithms. The concept of an integrated software development environment can be realized in two distinct levels. The first level deals with the access and usage mechanisms for the interactive tools, while the second level concerns the preservation of software development data and the relationships between the products of the different software life cycle stages. The first level requires that all of the component tools be resident under one operating system and be accessible through a common user interface. The second level dictates the need to store development data (requirements specifications, designs, code, test plans and procedures, manuals, etc.) in an integrated data base that preserves the relationships between the products of the different life cy- -

Backpropagation and Deep Learning in the Brain

Backpropagation and Deep Learning in the Brain Simons Institute -- Computational Theories of the Brain 2018 Timothy Lillicrap DeepMind, UCL With: Sergey Bartunov, Adam Santoro, Jordan Guerguiev, Blake Richards, Luke Marris, Daniel Cownden, Colin Akerman, Douglas Tweed, Geoffrey Hinton The “credit assignment” problem The solution in artificial networks: backprop Credit assignment by backprop works well in practice and shows up in virtually all of the state-of-the-art supervised, unsupervised, and reinforcement learning algorithms. Why Isn’t Backprop “Biologically Plausible”? Neuroscience Evidence for Backprop in the Brain? A spectrum of credit assignment algorithms: How to convince a neuroscientist that the cortex is learning via [something like] backprop - To convince a machine learning researcher, an appeal to variance in gradient estimates might be enough. - But this is rarely enough to convince a neuroscientist. - So what lines of argument help? - What do I mean by “something like backprop”?: - That learning is achieved across multiple layers by sending information from neurons closer to the output back to “earlier” layers to help compute their synaptic updates. 1. Feedback connections in cortex are ubiquitous and modify the -

Implementing Machine Learning & Neural Network Chip

Implementing Machine Learning & Neural Network Chip Architectures Using Network-on-Chip Interconnect IP Primary author: Ty Garibay, Chief Technology Officer, ArterisIP, [email protected] Secondary author: Kurt Shuler, Vice President, Marketing, ArterisIP, [email protected] Abstract: A short tutorial and overview of machine learning and neural network chip architectures, with emphasis on how network-on-chip interconnect IP implements these architectures. Time to read: 10 minutes Time to present: 30 minutes Copyright © 2017 Arteris www.arteris.com What is Machine Learning? “Field of study that gives computers the ability to learn without being explicitly programmed.” Arthur Samuel, IBM, 1959 • Machine learning is a subset of artificial intelligence, and explicitly relies upon experiential learning rather than programming to make decisions. • The advantage to machine learning for tasks like automated driving or speech translation is that complex tasks like these are nearly impossible to explicitly program using rule-based if…then…else statements because of the large solution space. • However, the “answers” given by a machine learning algorithm have a probability associated with them and can be non-deterministic (meaning you can get different “answers” given the same inputs during different runs) • Neural networks have become the most common way to implement machine learning. -

PredRNN: Recurrent Neural Networks for Predictive Learning Using Spatiotemporal LSTMs

PredRNN: Recurrent Neural Networks for Predictive Learning using Spatiotemporal LSTMs Yunbo Wang Mingsheng Long∗ School of Software School of Software Tsinghua University Tsinghua University [email protected] [email protected] Jianmin Wang Zhifeng Gao Philip S. Yu School of Software School of Software School of Software Tsinghua University Tsinghua University Tsinghua University [email protected] [email protected] [email protected] Abstract The predictive learning of spatiotemporal sequences aims to generate future images by learning from the historical frames, where spatial appearances and temporal variations are two crucial structures. This paper models these structures by presenting a predictive recurrent neural network (PredRNN). This architecture is enlightened by the idea that spatiotemporal predictive learning should memorize both spatial appearances and temporal variations in a unified memory pool. Concretely, memory states are no longer constrained inside each LSTM unit. Instead, they are allowed to zigzag in two directions: across stacked RNN layers vertically and through all RNN states horizontally. The core of this network is a new Spatiotemporal LSTM (ST-LSTM) unit that extracts and memorizes spatial and temporal representations simultaneously. PredRNN achieves the state-of-the-art prediction performance on three video prediction datasets and is a more general framework, that can be easily extended to other predictive learning tasks by integrating with other architectures. 1 Introduction -

Job Description - Software Engineer I

Job Description - Software Engineer I Department: Engineering - 2013-03 Exemption Status: Non-Exempt Summary: This position is responsible for software development in multi-application, multi-server, and hosted environments. The candidate will primarily provide system/configuration support with a focus in helping the needs of both internal and external customers. He or she will participate in all facets of software and system development life-cycle. The most qualified candidate for this role will have experience working with business intelligence in the public safety and/or public health software fields and have formal software education and/or a ton of practical experience. We develop primarily in C#, .NET, SQL, SSRS and mobile. Anyone that might fit well at FirstWatch must be hard-working, people-oriented, friendly, patient, a fast learner, think quickly on their feet, take initiative and responsibility, and LOVE providing our customers great and honest customer service. This person will need to maintain a high quality productivity level within a fast paced environment. This position shares responsibility (rotates) with other engineering staff for 24×7 on call duties and so must be able to thrive in this environment. Responsibilities: - Maintain and modify the software and system configurations of production, staged, and test applications. - Interface with different stakeholders to determine and propose appropriate and effective technology solutions to meet business and technical objectives. - Develop interfaces, applications and other technical solutions to support the business needs through the planning, analysis, design, development, implementation and maintenance phases of the software and systems development lifecycle. - Create system requirements, technical specifications, and test plans. -

Structured Programming - Retrospect and Prospect Harlan D

University of Tennessee, Knoxville Trace: Tennessee Research and Creative Exchange The Harlan D. Mills Collection Science Alliance 11-1986 Structured Programming - Retrospect and Prospect Harlan D. Mills Follow this and additional works at: http://trace.tennessee.edu/utk_harlan Part of the Software Engineering Commons Recommended Citation Mills, Harlan D., "Structured Programming - Retrospect and Prospect" (1986). The Harlan D. Mills Collection. http://trace.tennessee.edu/utk_harlan/20 This Article is brought to you for free and open access by the Science Alliance at Trace: Tennessee Research and Creative Exchange. It has been accepted for inclusion in The Harlan D. Mills Collection by an authorized administrator of Trace: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. FUNDAMENTAL CONCEPTS IN SOFTWARE ENGINEERING Structured Programming: Retrospect and Prospect Harlan D. Mills, IBM Corp. Structured programming has changed how programs are written since its introduction two decades ago. However, it still has a lot of potential for more change. Edsger W. Dijkstra's 1969 "Structured Programming" article [1] precipitated a decade of intense focus on programming techniques that has fundamentally altered human expectations and achievements in software development. Before this decade of intense focus, programming was regarded as a private, puzzle-solving activity of writing computer [...] mon wisdom that no sizable program could be error-free. After, many sizable programs have run a year or more with no errors detected. Impact of structured programming. These expectations and achievements are not universal because of the inertia of industrial practices. But they are well-enough established to herald fundamental -

IT Software Engineer 1

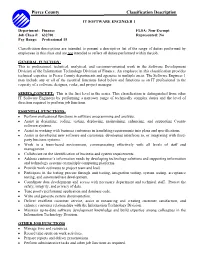

Pierce County Classification Description IT SOFTWARE ENGINEER 1 Department: Finance FLSA: Non-Exempt Job Class #: 632700 Represented: No Pay Range: Professional 15 Classification descriptions are intended to present a descriptive list of the range of duties performed by employees in this class and are not intended to reflect all duties performed within the job. GENERAL FUNCTION: This is professional, technical, analytical, and customer-oriented work in the Software Development Division of the Information Technology Division of Finance. An employee in this classification provides technical expertise to Pierce County departments and agencies in multiple areas. The Software Engineer 1 may include any or all of the essential functions listed below and functions as an IT professional in the capacity of a software designer, coder, and project manager. SERIES CONCEPT: This is the first level in the series. This classification is distinguished from other IT Software Engineers by performing a narrower range of technically complex duties and the level of direction required to perform job functions. ESSENTIAL FUNCTIONS: Perform professional functions in software programming and analysis. Assist in designing, coding, testing, deploying, maintaining, enhancing, and supporting County software systems. Assist in working with business customers in translating requirements into plans and specifications. Assist in developing new software and in customizing, developing interfaces to, or integrating with third-party business systems. Work in a team-based environment, communicating effectively with all levels of staff and management. Collaborate on the identification of business and system requirements. Address customer’s information needs by developing technology solutions and supporting information and technology systems on multiple computing platforms. -

Introduction to Machine Learning

Introduction to Machine Learning Perceptron Barnabás Póczos Contents History of Artificial Neural Networks Definitions: Perceptron, Multi-Layer Perceptron Perceptron algorithm Short History of Artificial Neural Networks Short History Progression (1943-1960) • First mathematical model of neurons ▪ Pitts & McCulloch (1943) • Beginning of artificial neural networks • Perceptron, Rosenblatt (1958) ▪ A single neuron for classification ▪ Perceptron learning rule ▪ Perceptron convergence theorem Degression (1960-1980) • Perceptron can’t even learn the XOR function • We don’t know how to train MLP • 1963 Backpropagation… but not much attention… Bryson, A.E.; W.F. Denham; S.E. Dreyfus. Optimal programming problems with inequality constraints. I: Necessary conditions for extremal solutions. AIAA J. 1, 11 (1963) 2544-2550 Short History Progression (1980-) • 1986 Backpropagation reinvented: ▪ Rumelhart, Hinton, Williams: Learning representations by back-propagating errors. Nature, 323, 533—536, 1986 • Successful applications: ▪ Character recognition, autonomous cars,… • Open questions: Overfitting? Network structure? Neuron number? Layer number? Bad local minimum points? When to stop training? • Hopfield nets (1982), Boltzmann machines,… Short History Degression (1993-) • SVM: Vapnik and his co-workers developed the Support Vector Machine (1993). It is a shallow architecture. • SVM and Graphical models almost kill the ANN research. • Training deeper networks consistently yields poor results. • Exception: deep convolutional neural networks, Yann LeCun 1998. (discriminative model) Short History Progression (2006-) Deep Belief Networks (DBN) • Hinton, G. E, Osindero, S., and Teh, Y. W. (2006). A fast learning algorithm for deep belief nets. Neural Computation, 18:1527-1554. • Generative graphical model • Based on restricted Boltzmann machines • Can be trained efficiently Deep Autoencoder based networks Bengio, Y., Lamblin, P., Popovici, D., Larochelle, H. -

Lecture 11 Recurrent Neural Networks I CMSC 35246: Deep Learning

Lecture 11 Recurrent Neural Networks I CMSC 35246: Deep Learning Shubhendu Trivedi & Risi Kondor University of Chicago May 01, 2017 Introduction Sequence Learning with Neural Networks Some Sequence Tasks Figure credit: Andrej Karpathy Problems with MLPs for Sequence Tasks The "API" is too limited. MLPs only accept an input of fixed dimensionality and map it to an output of fixed dimensionality Great e.g.: Inputs - Images, Output - Categories Bad e.g.: Inputs - Text in one language, Output - Text in another language MLPs treat every example independently. How is this problematic? Need to re-learn the rules of language from scratch each time Another example: Classify events after a fixed number of frames in a movie Need to reuse knowledge about the previous events to help in classifying the current. -

Comparative Analysis of Recurrent Neural Network Architectures for Reservoir Inflow Forecasting

water Article Comparative Analysis of Recurrent Neural Network Architectures for Reservoir Inflow Forecasting Halit Apaydin 1, Hajar Feizi 2, Mohammad Taghi Sattari 1,2,*, Muslume Sevba Colak 1, Shahaboddin Shamshirband 3,4,* and Kwok-Wing Chau 5 1 Department of Agricultural Engineering, Faculty of Agriculture, Ankara University, Ankara 06110, Turkey; [email protected] (H.A.); [email protected] (M.S.C.) 2 Department of Water Engineering, Agriculture Faculty, University of Tabriz, Tabriz 51666, Iran; [email protected] 3 Department for Management of Science and Technology Development, Ton Duc Thang University, Ho Chi Minh City, Vietnam 4 Faculty of Information Technology, Ton Duc Thang University, Ho Chi Minh City, Vietnam 5 Department of Civil and Environmental Engineering, Hong Kong Polytechnic University, Hong Kong, China; [email protected] * Correspondence: [email protected] or [email protected] (M.T.S.); [email protected] (S.S.) Received: 1 April 2020; Accepted: 21 May 2020; Published: 24 May 2020 Abstract: Due to the stochastic nature and complexity of flow, as well as the existence of hydrological uncertainties, predicting streamflow in dam reservoirs, especially in semi-arid and arid areas, is essential for the optimal and timely use of surface water resources. In this research, daily streamflow to the Ermenek hydroelectric dam reservoir located in Turkey is simulated using deep recurrent neural network (RNN) architectures, including bidirectional long short-term memory (Bi-LSTM), gated recurrent unit (GRU), long short-term memory (LSTM), and simple recurrent neural networks (simple RNN). For this purpose, daily observational flow data are used during the period 2012–2018, and all models are coded in the Python programming language. -

Deep Learning in Bioinformatics

Deep Learning in Bioinformatics Seonwoo Min1, Byunghan Lee1, and Sungroh Yoon1,2* 1Department of Electrical and Computer Engineering, Seoul National University, Seoul 08826, Korea 2Interdisciplinary Program in Bioinformatics, Seoul National University, Seoul 08826, Korea Abstract In the era of big data, transformation of biomedical big data into valuable knowledge has been one of the most important challenges in bioinformatics. Deep learning has advanced rapidly since the early 2000s and now demonstrates state-of-the-art performance in various fields. Accordingly, application of deep learning in bioinformatics to gain insight from data has been emphasized in both academia and industry. Here, we review deep learning in bioinformatics, presenting examples of current research. To provide a useful and comprehensive perspective, we categorize research both by the bioinformatics domain (i.e., omics, biomedical imaging, biomedical signal processing) and deep learning architecture (i.e., deep neural networks, convolutional neural networks, recurrent neural networks, emergent architectures) and present brief descriptions of each study. Additionally, we discuss theoretical and practical issues of deep learning in bioinformatics and suggest future research directions. We believe that this review will provide valuable insights and serve as a starting point for researchers to apply deep learning approaches in their bioinformatics studies. *Corresponding author. Mailing address: 301-908, Department of Electrical and Computer Engineering, Seoul National University, Seoul 08826, Korea. E-mail: [email protected]. Phone: +82-2-880-1401. Keywords Deep learning, neural network, machine learning, bioinformatics, omics, biomedical imaging, biomedical signal processing Key Points As a great deal of biomedical data have been accumulated, various machine algorithms are now being widely applied in bioinformatics to extract knowledge from big data. -

Tackling Traceability Challenges Through Modeling Principles in Methodologies Underpinned by Metamodels

Tackling Traceability Challenges through Modeling Principles in Methodologies Underpinned by Metamodels Angelina Espinoza and Juan Garbajosa Universidad Politecnica de Madrid - Technical University of Madrid, System and Software Technology Group (SYST), E.U. Informatica. Ctra. de Valencia Km. 7, E-28031, Spain, http://syst.eui.upm.es; aespinoza -at- syst.eui.upm.es, jgs -at- eui.upm.es Abstract. Traceability is recognized to be essential for supporting software development. However, a number of traceability issues are still open, such as link semantics formalization or traceability process models. Traceability methodologies underpinned by metamodels are a promising approach. However current metamodels still have serious limitations. Concerning methodologies in general, three hierarchical layered levels have been identified: metamodel, methodology and project. Metamodels do not often properly support this architecture, and that results in semantic problems at the time of specifying the methodology. Another reason is that they provide extensive predefined sets of types for describing project attributes, while these project attributes are domain specific and, sometimes, even project specific. This paper introduces two complementary modeling principles to overcome these limitations, i.e. the metamodeling three layer hierarchy, and power-type patterns modeling principles. Mechanisms to extend and refine traceability models are inherent to them. The paper shows that, when methodologies are developed from metamodels based on these two principles, the result is a methodology well fitted to project features. Links semantics is also improved. Keywords: Traceability methodology, traceability metamodel, clabject, power-type pattern, metamodeling hierarchy, mechanisms for extension of traceability metamodels, metamodel extensibility. 
1 Introduction Traceability is considered fundamental to perform processes and tasks such as V&V, change management, and impact analysis.