Hidden Markov Models for Gene Sequence Classification

Recommended publications

Applied Category Theory for Genomics – an Initiative

Applied Category Theory for Genomics – An Initiative Yanying Wu (1,2) (1) Centre for Neural Circuits and Behaviour, University of Oxford, UK (2) Department of Physiology, Anatomy and Genetics, University of Oxford, UK 06 Sept, 2020 Abstract The ultimate secret of all lives on earth is hidden in their genomes – a totality of DNA sequences. We currently know the whole genome sequence of many organisms, while our understanding of the genome architecture on a systematic level remains rudimentary. Applied category theory opens a promising way to integrate the humongous amount of heterogeneous information in genomics, to advance our knowledge regarding genome organization, and to provide us with a deep and holistic view of our own genomes. In this work we explain why applied category theory carries such a hope, and we move on to show how it could actually do so, albeit in baby steps. The manuscript intends to be readable to both mathematicians and biologists, therefore no prior knowledge is required from either side. arXiv:2009.02822v1 [q-bio.GN] 6 Sep 2020 1 Introduction DNA, the genetic material of all living beings on this planet, holds the secret of life. The complete set of DNA sequences in an organism constitutes its genome – the blueprint and instruction manual of that organism, be it a human or fly [1]. Therefore, genomics, which studies the contents and meaning of genomes, has been standing in the central stage of scientific research since its birth. The twentieth century witnessed three milestones of genomics research [1]. It began with the discovery of Mendel's laws of inheritance [2], sparked a climax in the middle with the reveal of DNA double helix structure [3], and ended with the accomplishment of a first draft of complete human genome sequences [4].

Functional Effects Detailed Research Plan

GeCIP Detailed Research Plan Form Background The Genomics England Clinical Interpretation Partnership (GeCIP) brings together researchers, clinicians and trainees from both academia and the NHS to analyse, refine and make new discoveries from the data from the 100,000 Genomes Project. The aims of the partnerships are: 1. To optimise: • clinical data and sample collection • clinical reporting • data validation and interpretation. 2. To improve understanding of the implications of genomic findings and improve the accuracy and reliability of information fed back to patients. To add to knowledge of the genetic basis of disease. 3. To provide a sustainable thriving training environment. The initial wave of GeCIP domains was announced in June 2015 following a first round of applications in January 2015. On the 18th June 2015 we invited the inaugurated GeCIP domains to develop more detailed research plans working closely with Genomics England. These will be used to ensure that the plans are complementary and add real value across the GeCIP portfolio and address the aims and objectives of the 100,000 Genomes Project. They will be shared with the MRC, Wellcome Trust, NIHR and Cancer Research UK as existing members of the GeCIP Board to give advance warning and manage funding requests to maximise the funds available to each domain. However, formal applications will then be required to be submitted to individual funders. They will allow Genomics England to plan shared core analyses and the required research and computing infrastructure to support the proposed research. They will also form the basis of assessment by the Project’s Access Review Committee, to permit access to data.

Meeting Review: Bioinformatics and Medicine – from Molecules To

Comparative and Functional Genomics Comp Funct Genom 2002; 3: 270–276. Published online 9 May 2002 in Wiley InterScience (www.interscience.wiley.com). DOI: 10.1002/cfg.178 Feature Meeting Review: Bioinformatics And Medicine – From molecules to humans, virtual and real Hinxton Hall Conference Centre, Genome Campus, Hinxton, Cambridge, UK – April 5th–7th Roslin Russell* MRC UK HGMP Resource Centre, Genome Campus, Hinxton, Cambridge CB10 1SB, UK *Correspondence to: MRC UK HGMP Resource Centre, Genome Campus, Hinxton, Cambridge CB10 1SB, UK. Abstract The Industrialization Workshop Series aims to promote and discuss integration, automation, simulation, quality, availability and standards in the high-throughput life sciences. The main issues addressed being the transformation of bioinformatics and bioinformatics-based drug design into a robust discipline in industry, the government, research institutes and academia. The latest workshop emphasized the influence of the post-genomic era on medicine and healthcare with reference to advanced biological systems modeling and simulation, protein structure research, protein-protein interactions, metabolism and physiology. Speakers included Michael Ashburner, Kenneth Buetow, Francois Cambien, Cyrus Chothia, Jean Garnier, Francois Iris, Matthias Mann, Maya Natarajan, Peter Murray-Rust, Richard Mushlin, Barry Robson, David Rubin, Kosta Steliou, John Todd, Janet Thornton, Pim van der Eijk, Michael Vieth and Richard Ward. Copyright © 2002 John Wiley & Sons, Ltd. Received: 22 April 2002 Keywords: bioinformatics;

HMMs Representing All Proteins of Known Structure. SCOP Sequence Searches, Alignments and Genome Assignments Julian Gough* and Cyrus Chothia

268–272 Nucleic Acids Research, 2002, Vol. 30, No. 1 © 2002 Oxford University Press SUPERFAMILY: HMMs representing all proteins of known structure. SCOP sequence searches, alignments and genome assignments Julian Gough* and Cyrus Chothia MRC Laboratory of Molecular Biology, Hills Road, Cambridge CB2 2QH, UK Received September 28, 2001; Revised and Accepted October 30, 2001 ABSTRACT The SUPERFAMILY database contains a library of hidden Markov models representing all proteins of known structure. The database is based on the SCOP ‘superfamily’ level of protein domain classification which groups together the most distantly related proteins which have a common evolutionary ancestor. There is a public server at http://supfam.org which provides three services: sequence searching, multiple alignments to sequences of known structure, and structural assignments to all complete genomes. Given an amino acid or nucleotide query sequence the server will return the domain architecture and SCOP classification. The server produces alignments of the query sequences with sequences of known structure, and includes multiple alignments of genome and PDB sequences. [...] (currently 59) covering approximately half of the soluble protein domains. The assignments, superfamily breakdown and statistics on them are available from the server. The database is currently used by this group and others for genome annotation, structural genomics, gene prediction and domain-based genomic studies. INTRODUCTION The SUPERFAMILY database is based on the SCOP (1) classification of protein domains. SCOP is a structural domain-based hierarchical classification with several levels including the ‘superfamily’ level. Proteins grouped together at the superfamily level are defined as having structural, functional and sequence evidence for a common evolutionary ancestor. It is at this level, as the name suggests, that SUPERFAMILY operates because it is the level with the most distantly related protein

The Principled Design of Large-Scale Recursive Neural Network Architectures–DAG-RNNs and the Protein Structure Prediction Problem

Journal of Machine Learning Research 4 (2003) 575-602 Submitted 2/02; Revised 4/03; Published 9/03 The Principled Design of Large-Scale Recursive Neural Network Architectures–DAG-RNNs and the Protein Structure Prediction Problem Pierre Baldi, Gianluca Pollastri School of Information and Computer Science Institute for Genomics and Bioinformatics University of California, Irvine Irvine, CA 92697-3425, USA Editor: Michael I. Jordan Abstract We describe a general methodology for the design of large-scale recursive neural network architectures (DAG-RNNs) which comprises three fundamental steps: (1) representation of a given domain using suitable directed acyclic graphs (DAGs) to connect visible and hidden node variables; (2) parameterization of the relationship between each variable and its parent variables by feedforward neural networks; and (3) application of weight-sharing within appropriate subsets of DAG connections to capture stationarity and control model complexity. Here we use these principles to derive several specific classes of DAG-RNN architectures based on lattices, trees, and other structured graphs. These architectures can process a wide range of data structures with variable sizes and dimensions. While the overall resulting models remain probabilistic, the internal deterministic dynamics allows efficient propagation of information, as well as training by gradient descent, in order to tackle large-scale problems. These methods are used here to derive state-of-the-art predictors for protein structural features such as secondary structure (1D) and both fine- and coarse-grained contact maps (2D). Extensions, relationships to graphical models, and implications for the design of neural architectures are briefly discussed.

120421-24Recombschedule FINAL.Xlsx

Friday 20 April
18:00 – 20:00 REGISTRATION OPENS in Fira Palace
20:00 – 21:30 WELCOME RECEPTION in CaixaForum (access map)
Saturday 21 April
8:00 – 8:50 REGISTRATION
8:50 – 9:00 Opening Remarks (Roderic GUIGÓ and Benny CHOR)
Session 1. Chair: Roderic GUIGÓ (CRG, Barcelona ES)
9:00 – 10:00 Richard DURBIN, The Wellcome Trust Sanger Institute, Hinxton UK: "Computational analysis of population genome sequencing data"
10:00 – 10:20 (44) Yaw-Ling Lin, Charles Ward and Steven Skiena: Synthetic Sequence Design for Signal Location Search
10:20 – 10:40 (62) Kai Song, Jie Ren, Zhiyuan Zhai, Xuemei Liu, Minghua Deng and Fengzhu Sun: Alignment-Free Sequence Comparison Based on Next Generation Sequencing Reads
10:40 – 11:00 (178) Yang Li, Hong-Mei Li, Paul Burns, Mark Borodovsky, Gene Robinson and Jian Ma: TrueSight: Self-training Algorithm for Splice Junction Detection using RNA-seq
11:00 – 11:30 coffee break
Session 2. Chair: Bonnie BERGER (MIT, Cambridge US)
11:30 – 11:50 (139) Son Pham, Dmitry Antipov, Alexander Sirotkin, Glenn Tesler, Pavel Pevzner and Max Alekseyev: PATH-SETS: A Novel Approach for Comprehensive Utilization of Mate-Pairs in Genome Assembly
11:50 – 12:10 (171) Yan Huang, Yin Hu and Jinze Liu: A Robust Method for Transcript Quantification with RNA-seq Data
12:10 – 12:30 (120) Zhanyong Wang, Farhad Hormozdiari, Wen-Yun Yang, Eran Halperin and Eleazar Eskin: CNVeM: Copy Number Variation detection Using Uncertainty of Read Mapping
12:30 – 12:50 (205) Dmitri Pervouchine: Evidence for widespread association of mammalian splicing and conserved long range RNA structures
12:50 – 13:10 (169) Melissa Gymrek, David Golan, Saharon Rosset and Yaniv Erlich: lobSTR: A Novel Pipeline for Short Tandem Repeats Profiling in Personal Genomes
13:10 – 13:30 (217) Rory Stark: Differential oestrogen receptor binding is associated with clinical outcome in breast cancer
13:30 – 15:00 lunch break
Session 3.

Methodology for Predicting Semantic Annotations of Protein Sequences by Feature Extraction Derived of Statistical Contact Potentials and Continuous Wavelet Transform

Universidad Nacional de Colombia Sede Manizales Master’s Thesis Methodology for predicting semantic annotations of protein sequences by feature extraction derived of statistical contact potentials and continuous wavelet transform Author: Gustavo Alonso Arango Argoty Supervisor: Dr. Cesar German Castellanos Dominguez A thesis submitted in fulfillment of the requirements for the degree of Master’s on Engineering - Industrial Automation in the Department of Electronic, Electric Engineering and Computation Signal Processing and Recognition Group June 2014 [Spanish title page, translated:] Master’s Thesis Methodology for predicting the semantic annotation of proteins by means of feature extraction derived from contact potentials and continuous wavelet transform Author: Gustavo Alonso Arango Argoty Advisor: Dr. Cesar German Castellanos Dominguez Thesis presented in fulfillment of the requirements for the degree of Master’s in Engineering - Industrial Automation in the Department of Electrical, Electronic and Computer Engineering Digital Signal Processing Group January 2014 Abstract Faculty of Engineering and Architecture In this thesis, a method to predict semantic annotations of the proteins from its primary structure is proposed. The main contribution of this thesis lies in the implementation of a novel protein feature representation, which makes use of the pairwise statistical contact potentials describing the protein interactions and geometry at the atomic level.

Curriculum Vitae – Prof. Anders Krogh Personal Information

Curriculum Vitae – Prof. Anders Krogh Personal Information Date of Birth: May 2nd, 1959 Private Address: Borgmester Jensens Alle 22, st th, 2100 København Ø, Denmark Contact information: Dept. of Biology, Univ. of Copenhagen, Ole Maaloes Vej 5, 2200 Copenhagen, Denmark. +45 3532 1329 Web: https://scholar.google.com/citations?user=-vGMjmwAAAAJ Education Sept 1991 Ph.D. (Physics), Niels Bohr Institute, Univ. of Copenhagen, Denmark June 1987 Cand. Scient. [M. Sc.] (Physics and mathematics), NBI, Univ. of Copenhagen Professional / Work Experience (since 2000) 2018 – Professor of Bioinformatics, Dept of Computer Science (50%) and Dept of Biology (50%), Univ. of Copenhagen 2002 – 2018 Professor of Bioinformatics, Dept of Biology, Univ. of Copenhagen 2009 – 2018 Head of Section for Computational and RNA Biology, Dept. of Biology, Univ. of Copenhagen 2000–2002 Associate Prof., Technical Univ. of Denmark (DTU), Copenhagen Prizes and Awards 2017 – Fellow of the International Society for Computational Biology https://www.iscb.org/iscb-fellows-program 2008 – Fellow, Royal Danish Academy of Sciences and Letters Public Activities & Appointments (since 2009) 2014 – Board member, Elixir, European Infrastructure for Life Science. 2014 – Steering committee member, Danish Elixir Node. 2012 – 2016 Board member, Bioinformatics Infrastructure for Life Sciences (BILS), Swedish Research Council 2011 – 2016 Director, Centre for Computational and Applied Transcriptomics (COAT) 2009 – Associate editor, BMC Bioinformatics Publications § Google Scholar: https://scholar.google.com/citations?user=-vGMjmwAAAAJ § ORCID: 0000-0002-5147-6282. ResearcherID: M-1541-2014 § Co-author of 130 peer-reviewed papers and 2 monographs § 63,000 citations and h-index of 74 (Google Scholar, June 2019) § H-index of 54 in Web of Science (June 2019) § Publications in high-impact journals: Nature (5), Science (1), Cell (1), Nature Genetics (2), Nature Biotechnology (2), Nature Communications (4), Cell (1, to appear), Genome Res.

AUTOPHY by Deepika Prasad a Thesis

ANALYZING MARKER GENE DIVERSITY USING AN AUTOMATED PHYLOGENETIC TOOL: AUTOPHY by Deepika Prasad A thesis submitted to the Faculty of the University of Delaware in partial fulfillment of the requirements for the degree of Master of Science in Bioinformatics and Computational Biology Spring 2017 © 2017 Deepika Prasad All Rights Reserved Approved: Shawn Polson, Ph.D., Professor in charge of thesis on behalf of the Advisory Committee Approved: Kathleen F. McCoy, Ph.D., Chair of the Department of Computer and Information Sciences Approved: Babatunde A. Ogunnaike, Ph.D., Dean of the College of Engineering Approved: Ann L. Ardis, Ph.D., Senior Vice Provost for Graduate and Professional Education ACKNOWLEDGMENTS I want to express my sincerest gratitude to Professor Shawn Polson for his patience, continuous support, and enthusiasm. I could not have imagined having a better mentor to guide me through the process of my Master’s thesis. I would like to thank my committee members, Professor Eric Wommack and Honzhan Huang for their insightful comments and guidance in the thesis. I would also like to express my gratitude to Barbra Ferrell for asking important questions throughout my thesis research and helping me with the writing process. I would like to thank my friend Sagar Doshi, and lab mates Daniel Nasko, and Prasanna Joglekar, for helping me out with data, presentations, and giving important advice, whenever necessary. Last but not the least, I would like to thank my family, for being my pillars of strength.

Computational Methods Addressing Genetic Variation In

COMPUTATIONAL METHODS ADDRESSING GENETIC VARIATION IN NEXT-GENERATION SEQUENCING DATA by Charlotte A. Darby A dissertation submitted to Johns Hopkins University in conformity with the requirements for the degree of Doctor of Philosophy Baltimore, Maryland June 2020 © 2020 Charlotte A. Darby All rights reserved Abstract Computational genomics involves the development and application of computational methods for whole-genome-scale datasets to gain biological insight into the composition and function of genomes, including how genetic variation mediates molecular phenotypes and disease. New biotechnologies such as next-generation sequencing generate genomic data on a massive scale and have transformed the field thanks to simultaneous advances in the analysis toolkit. In this thesis, I present three computational methods that use next-generation sequencing data, each of which addresses the genetic variations within and between human individuals in a different way. First, Samovar is a software tool for performing single-sample mosaic single-nucleotide variant calling on whole genome sequencing linked read data. Using haplotype assembly of heterozygous germline variants, uniquely made possible by linked reads, Samovar identifies variations in different cells that make up a bulk sequencing sample. We apply it to 13 cancer samples in collaboration with researchers at Nationwide Children’s Hospital. Second, scHLAcount is a software pipeline that computes allele-specific molecule counts for the HLA genes from single-cell gene expression data. We use a personalized reference genome based on the individual’s genotypes to reveal allele-specific and cell type-specific gene expression patterns. Even given technology-specific biases of single-cell gene expression data, we can resolve allele-specific expression for these genes since the alleles are often quite different between the two haplotypes of an individual.

Big Data, MOOCs, and ... (PDF)

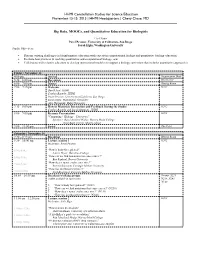

HHMI Constellation Studios for Science Education
November 13-15, 2015 | HHMI Headquarters | Chevy Chase, MD
Big Data, MOOCs, and Quantitative Education for Biologists
Co-Chairs: Pavel Pevzner, University of California-San Diego; Sarah Elgin, Washington University
Studio Objectives: Discuss existing challenges in bioinformatics education with experts in computational biology and quantitative biology education, Evaluate best practices in teaching quantitative and computational biology, and Collaborate with scientist educators to develop instructional modules to support a biology curriculum that includes quantitative approaches.
Friday | November 13
4:00 pm Arrival (Registration Desk)
5:30 – 6:00 pm Reception (Great Hall)
6:00 – 7:00 pm Dinner (Dining Room)
7:00 – 7:15 pm Welcome (K202): David Asai, HHMI; Cynthia Bauerle, HHMI; Pavel Pevzner, University of California-San Diego; Sarah Elgin, Washington University; Alex Hartemink, Duke University
7:15 – 8:00 pm How to Maximize Interaction and Feedback During the Studio (K202): Cynthia Bauerle and Sarah Simmons, HHMI
8:00 – 9:00 pm Keynote Presentation (K202): "Computing + Biology = Discovery". Speakers: Ran Libeskind-Hadas, Harvey Mudd College; Eliot Bush, Harvey Mudd College
9:00 – 11:00 pm Social (The Pilot)
Saturday | November 14
7:30 – 8:15 am Breakfast (Dining Room)
8:30 – 10:00 am Lecture session 1 (K202). Moderator: Pavel Pevzner
8:34 – 8:54 am “How is body fat regulated?” Laurie Heyer, Davidson College
8:56 – 9:16 am “How can we find mutations that cause cancer?” Ben Raphael, Brown University
9:18 – 9:38 am “How does a tumor evolve over time?” Russell Schwartz, Carnegie Mellon University
9:40 – 10:00 am “How fast do ribosomes move?” Carl Kingsford, Carnegie Mellon University
10:05 – 10:55 am Breakout working groups (coffee available in each room). Rooms: S221, N238, N241, N140
1.

Deep Learning in Chemoinformatics Using Tensor Flow

UC Irvine Electronic Theses and Dissertations
Title: Deep Learning in Chemoinformatics using Tensor Flow
Permalink: https://escholarship.org/uc/item/963505w5
Author: Jain, Akshay
Publication Date: 2017
Peer reviewed | Thesis/dissertation
eScholarship.org, powered by the California Digital Library, University of California
UNIVERSITY OF CALIFORNIA, IRVINE Deep Learning in Chemoinformatics using Tensor Flow THESIS submitted in partial satisfaction of the requirements for the degree of MASTER OF SCIENCE in Computer Science by Akshay Jain Thesis Committee: Professor Pierre Baldi, Chair; Professor Cristina Videira Lopes; Professor Eric Mjolsness 2017 © 2017 Akshay Jain
DEDICATION To my family and friends.
TABLE OF CONTENTS
LIST OF FIGURES
LIST OF TABLES
ACKNOWLEDGMENTS
ABSTRACT OF THE THESIS
1 Introduction
1.1 QSAR Prediction Methods
1.2 Deep Learning
2 Artificial Neural Networks (ANN)
2.1 Artificial Neuron
2.2 Activation Function
2.3 Loss function
2.4 Optimization
3 Deep Recursive Architectures
3.1 Recurrent Neural Networks (RNN)
3.2 Recursive Neural Networks
3.3 Directed Acyclic Graph Recursive Neural Networks (DAG-RNN)
4 UG-RNN for small molecules
4.1 DAG Generation
4.2 Local Information Vector
4.3 Contextual Vectors
4.4 Activity Prediction
4.5 UG-RNN With Contracted Rings (UG-RNN-CR)
4.6 Example: UG-RNN Model of Propionic Acid
5 Implementation
6 Data & Results
6.1 Aqueous Solubility Prediction
6.2 Melting Point Prediction
7 Conclusions
Bibliography
A Source Code
A.1 UGRNN