User-Centered Design in Games 1 Pagulayan, R. J., Keeker, K., Wixon

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Video Game Archive: Nintendo 64

Video Game Archive: Nintendo 64 An Interactive Qualifying Project submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfilment of the requirements for the degree of Bachelor of Science by James R. McAleese Janelle Knight Edward Matava Matthew Hurlbut-Coke Date: 22nd March 2021 Report Submitted to: Professor Dean O’Donnell Worcester Polytechnic Institute This report represents work of one or more WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. Abstract This project was an attempt to expand and document the Gordon Library’s Video Game Archive more specifically, the Nintendo 64 (N64) collection. We made the N64 and related accessories and games more accessible to the WPI community and created an exhibition on The History of 3D Games and Twitch Plays Paper Mario, featuring the N64. 2 Table of Contents Abstract…………………………………………………………………………………………………… 2 Table of Contents…………………………………………………………………………………………. 3 Table of Figures……………………………………………………………………………………………5 Acknowledgements……………………………………………………………………………………….. 7 Executive Summary………………………………………………………………………………………. 8 1-Introduction…………………………………………………………………………………………….. 9 2-Background………………………………………………………………………………………… . 11 2.1 - A Brief of History of Nintendo Co., Ltd. Prior to the Release of the N64 in 1996:……………. 11 2.2 - The Console and its Competitors:………………………………………………………………. 16 Development of the Console……………………………………………………………………...16 -

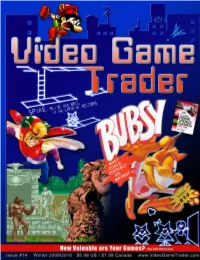

Video Game Trader Magazine & Price Guide

Winter 2009/2010 Issue #14 4 Trading Thoughts 20 Hidden Gems Blue‘s Journey (Neo Geo) Video Game Flashback Dragon‘s Lair (NES) Hidden Gems 8 NES Archives p. 20 19 Page Turners Wrecking Crew Vintage Games 9 Retro Reviews 40 Made in Japan Coin-Op.TV Volume 2 (DVD) Twinkle Star Sprites Alf (Sega Master System) VectrexMad! AutoFire Dongle (Vectrex) 41 Video Game Programming ROM Hacking Part 2 11Homebrew Reviews Ultimate Frogger Championship (NES) 42 Six Feet Under Phantasm (Atari 2600) Accessories Mad Bodies (Atari Jaguar) 44 Just 4 Qix Qix 46 Press Start Comic Michael Thomasson’s Just 4 Qix 5 Bubsy: What Could Possibly Go Wrong? p. 44 6 Spike: Alive and Well in the land of Vectors 14 Special Book Preview: Classic Home Video Games (1985-1988) 43 Token Appreciation Altered Beast 22 Prices for popular consoles from the Atari 2600 Six Feet Under to Sony PlayStation. Now includes 3DO & Complete p. 42 Game Lists! Advertise with Video Game Trader! Multiple run discounts of up to 25% apply THIS ISSUES CONTRIBUTORS: when you run your ad for consecutive Dustin Gulley Brett Weiss Ad Deadlines are 12 Noon Eastern months. Email for full details or visit our ad- Jim Combs Pat “Coldguy” December 1, 2009 (for Issue #15 Spring vertising page on videogametrader.com. Kevin H Gerard Buchko 2010) Agents J & K Dick Ward February 1, 2009(for Issue #16 Summer Video Game Trader can help create your ad- Michael Thomasson John Hancock 2010) vertisement. Email us with your requirements for a price quote. P. Ian Nicholson Peter G NEW!! Low, Full Color, Advertising Rates! -

Triple Plays Analysis

A Second Look At The Triple Plays By Chuck Rosciam This analysis updates my original paper published on SABR.org and Retrosheet.org and my Triple Plays sub-website at SABR. The origin of the extensive triple play database1 from which this analysis stems is the SABR Triple Play Project co-chaired by myself and Frank Hamilton with the assistance of dozens of SABR researchers2. Using the original triple play database and updating/validating each play, I used event files and box scores from Retrosheet3 to build a current database containing all of the recorded plays in which three outs were made (1876-2019). In this updated data set 719 triple plays (TP) were identified. [See complete list/table elsewhere on Retrosheet.org under FEATURES and then under NOTEWORTHY EVENTS]. The 719 triple plays covered one-hundred-forty-four seasons. 1890 was the Year of the Triple Play that saw nineteen of them turned. There were none in 1961 and in 1974. On average the number of TP’s is 4.9 per year. The number of TP’s each year were: Total Triple Plays Each Year (all Leagues) Year TP's Year TP's Year TP's Year TP's Year TP's Year TP's <1876 1900 1 1925 7 1950 5 1975 1 2000 5 1876 3 1901 8 1926 9 1951 4 1976 3 2001 2 1877 3 1902 6 1927 9 1952 3 1977 6 2002 6 1878 2 1903 7 1928 2 1953 5 1978 6 2003 2 1879 2 1904 1 1929 11 1954 5 1979 11 2004 3 1880 4 1905 8 1930 7 1955 7 1980 5 2005 1 1881 3 1906 4 1931 8 1956 2 1981 5 2006 5 1882 10 1907 3 1932 3 1957 4 1982 4 2007 4 1883 2 1908 7 1933 2 1958 4 1983 5 2008 2 1884 10 1909 4 1934 5 1959 2 -

Modelos De Negocios En La Industria Del Videojuego : Análisis De Caso De

Universidad de San Andrés Escuela de Negocios Maestría en Gestión de Servicios Tecnológicos y Telecomunicaciones Modelos de negocios en la industria del videojuego: análisis de caso de Electronics Arts Author: Weller, Alejandro File no.: 26116804 Thesis Director/Mentor: Neumann, Javier June 2017 Maestría en Servicios Tecnológicos y Telecomunicaciones Graduation Project Modelos de negocios en la industria del videojuego Análisis de caso de Electronics Arts Student: Alejandro Weller Mentor: Javier Neumann Mentor's signature: Victoria, Provincia de Buenos Aires, June 30, 2017 Modelos de negocios en la industria del videojuego Abstract Low-profile, timid, and with no sign of ever standing out, the video game industry has shaken up many of the major players in the media market, who had been comfortably established and untroubled for many years. They never imagined that in so little time they would have to complement, accompany, and in many respects even fear this new actor. In only a few decades of existence, this new participant has brought about a profound revolution with social, cultural, and economic implications, creating new and interesting business models that change over ever shorter periods. Unlike the slow, progressive growth of radio, cinema, and later television, the video game has, in barely half a century, become an object of great interest and investment from different sectors, and that achievement has grown through transformations, general acceptance, and constant evolution of the very concept of the video game. Video games, as Provenzo, E. (1991) notes, are more than a computing product; they are also a business for those who manufacture and sell them, and a commercial enterprise subject, like any other, to market fluctuations. -

3 Ninjas Kick Back 688 Attack Sub 6-Pak Aaahh!!! Real

3 NINJAS KICK BACK 688 ATTACK SUB 6-PAK AAAHH!!! REAL MONSTERS ACTION 52 ADDAMS FAMILY VALUES THE ADDAMS FAMILY ADVANCED BUSTERHAWK GLEYLANCER ADVANCED DAISENRYAKU - DEUTSCH DENGEKI SAKUSEN THE ADVENTURES OF BATMAN & ROBIN THE ADVENTURES OF MIGHTY MAX THE ADVENTURES OF ROCKY AND BULLWINKLE AND FRIENDS AERO THE ACRO-BAT AERO THE ACRO-BAT 2 AEROBIZ AEROBIZ SUPERSONIC AFTER BURNER II AIR BUSTER AIR DIVER ALADDIN ALADDIN II ALEX KIDD IN THE ENCHANTED CASTLE ALIEN 3 ALIEN SOLDIER ALIEN STORM ALISIA DRAGOON ALTERED BEAST AMERICAN GLADIATORS ANDRE AGASSI TENNIS ANIMANIACS THE AQUATIC GAMES STARRING JAMES POND AND THE AQUABATS ARCADE CLASSICS ARCH RIVALS - THE ARCADE GAME ARCUS ODYSSEY ARIEL THE LITTLE MERMAID ARNOLD PALMER TOURNAMENT GOLF ARROW FLASH ART ALIVE ART OF FIGHTING ASTERIX AND THE GREAT RESCUE ASTERIX AND THE POWER OF THE GODS ATOMIC ROBO-KID ATOMIC RUNNER ATP TOUR CHAMPIONSHIP TENNIS AUSTRALIAN RUGBY LEAGUE AWESOME POSSUM... ...KICKS DR. MACHINO'S BUTT AYRTON SENNA'S SUPER MONACO GP II B.O.B. BABY BOOM (PROTO) BABY'S DAY OUT (PROTO) BACK TO THE FUTURE PART III BALL JACKS BALLZ 3D - FIGHTING AT ITS BALLZIEST ~ BALLZ 3D - THE BATTLE OF THE BALLZ BARBIE SUPER MODEL BARBIE VACATION ADVENTURE (PROTO) BARE KNUCKLE - IKARI NO TETSUKEN ~ STREETS OF RAGE BARE KNUCKLE III BARKLEY SHUT UP AND JAM 2 BARKLEY SHUT UP AND JAM! BARNEY'S HIDE & SEEK GAME BARVER BATTLE SAGA - TAI KONG ZHAN SHI BASS MASTERS CLASSIC - PRO EDITION BASS MASTERS CLASSIC BATMAN - REVENGE OF THE JOKER BATMAN - THE VIDEO GAME BATMAN FOREVER BATMAN RETURNS BATTLE GOLFER YUI -

Playstation Games

The Video Game Guy, Booths Corner Farmers Market - Garnet Valley, PA 19060 (302) 897-8115 www.thevideogameguy.com System Game Genre Playstation Games Playstation 007 Racing Racing Playstation 101 Dalmatians II Patch's London Adventure Action & Adventure Playstation 102 Dalmatians Puppies to the Rescue Action & Adventure Playstation 1Xtreme Extreme Sports Playstation 2Xtreme Extreme Sports Playstation 3D Baseball Baseball Playstation 3Xtreme Extreme Sports Playstation 40 Winks Action & Adventure Playstation Ace Combat 2 Action & Adventure Playstation Ace Combat 3 Electrosphere Other Playstation Aces of the Air Other Playstation Action Bass Sports Playstation Action Man Operation EXtreme Action & Adventure Playstation Activision Classics Arcade Playstation Adidas Power Soccer Soccer Playstation Adidas Power Soccer 98 Soccer Playstation Advanced Dungeons and Dragons Iron and Blood RPG Playstation Adventures of Lomax Action & Adventure Playstation Agile Warrior F-111X Action & Adventure Playstation Air Combat Action & Adventure Playstation Air Hockey Sports Playstation Akuji the Heartless Action & Adventure Playstation Aladdin in Nasiras Revenge Action & Adventure Playstation Alexi Lalas International Soccer Soccer Playstation Alien Resurrection Action & Adventure Playstation Alien Trilogy Action & Adventure Playstation Allied General Action & Adventure Playstation All-Star Racing Racing Playstation All-Star Racing 2 Racing Playstation All-Star Slammin D-Ball Sports Playstation Alone In The Dark One Eyed Jack's Revenge Action & Adventure -

Preventing Flu in School, Child Care Facilities by Capt

COMMANDER’S CORNER: FAREWELL TO A GREAT WING - PAGE 3 Peterson Air Force Base, Colorado Thursday, August 20, 2009 Vol. 53 No. 33 Preventing flu in school, child care facilities by Capt. Brenda Dehn who exhibit symptoms will be asked to stay staying home when sick. When these good · Get H1N1 vaccine — Monitor infor- Public Health flight commander home to prevent exposing others. Health hygiene steps are taken, the spread of illness mation from all sources to learn when the As school districts and child care provid- Department officials explained that even if is prevented. vaccine will become available in the com- ers prepare for the new school year, it’s a a child has the flu, but not H1N1, the recom- · Maintain health — Healthy eating, munity. It is likely that the H1N1 vaccine good time to plan for preventing the spread mendation would be the same — keep the physical activity, getting enough sleep, and will not be available for children through of flu and other illnesses in school and child child home until they have been symptom using medications as prescribed are some military clinics. care settings. free for 48 hours. The Health Department of the many steps one can take to maintain Remember, the best way to prevent expo- The El Paso County Department of Health does not recommend that children be tested health. Individuals with underlying medical sure and spread of H1N1 flu, which is infect- and Environment and Peterson Air Force to confirm the H1N1 virus because the treat- conditions, including respiratory illnesses ing the youngest members of our population, Base are asking all Team Peterson members ment and recommendations to stay home and severe obesity, may be at an increased is to wash hands often and keep sick children to help minimize children’s exposure to these remain the same. -

Texas IT Services Industry Report

Texas IT Services Industry www.BusinessInTexas.com Contents Overview……………………………………………………………. 2 Computer Systems & Software.……….…………….…… 12 Cloud Services & Data Centers……………….…….….... 16 Video & Online Games ……………………..……………….. 19 Online Services……………..…...………………………………. 22 IT Services across Industries……………………………….. 25 Completed March 2015 Texas IT Services Headlines ACTIVE Networks relocates headquarters from California to Dallas, plans to create 1,000 jobs See Page 7 Google Fiber rolls out in Healthcare IT company Austin, the second U.S. metro athenahealth opens major to launch services new office in Austin, plans to hire over 600 See Page 23 See Page 26 Texas ranks #2 in Oracle expands Texas public IT services Austin office, plans universities award employment to create 200 new more than 36,400 nationwide jobs IT-related degrees from 2008-2013 See Page 8 See Page 13 See Page 11 Data Center Map ranks Texas Cyber security company #2 for colocation Websense relocating data centers California headquarters to Austin, plans to See Page 16 create 445 jobs See Page 14 IT Services in Texas Bureau of Labor data. The percentage of Texans employed in computer systems design and related services also exceeds California’s and the nation’s averages. Faced with the continuing growth of IT services, companies from other industries are increasingly expanding into the IT services arena. Leading PC- manufacturer Dell and telecom giant AT&T, both headquartered in Texas, have each acquired IT consulting, software, and online services companies over the past few years in order to broaden their IT nformation technology (IT) services are a large service offerings. and growing part of the Texas economy, with IT I employment across industries exceeding 330,000 workers statewide. -

Electronic Arts 20 01 Ar

ELECTRONIC ARTS 209 REDWOOD SHORES PARKWAY, REDWOOD CITY, CA 94065-1175 www.ea.com AR 01 20 TO OUR STOCKHOLDERS Fiscal year 2001 was both a challenging and exciting period for our company and for the industry. The transition from the current generation of game consoles to next generation systems continued, marked by the debut of the first entry in the next wave of new technology – the PlayStation®2 computer entertainment system from Sony. Nintendo and Microsoft will launch new platforms later this year, creating unprecedented levels of competition, creativity and excitement for consumers. Most importantly, this provides a unique opportunity for Electronic Arts to drive revenue and profit growth as we move through the next technology cycle. In conjunction with steady increases in the PC market, plus the significant new opportunity in online gaming, we believe Electronic Arts is poised to achieve new levels of success and leadership in the interactive entertainment category. In FY01, consolidated net revenue decreased 6.9 percent to $1,322 million. As a result of our investment in building the leading Internet gaming website, consolidated net income decreased $128 million to a consolidated net loss of $11 million, while consolidated diluted earnings per share decreased $0.96 to a diluted loss per share of $0.08. Pro forma consolidated net income and earnings per share, excluding goodwill, non-cash and one-time charges were $6 million and $0.04, respectively, for the year. -

View Annual Report

EA 2000 AR 2000 CONSOLIDATED FINANCIAL HIGHLIGHTS Fiscal years ended March 31, 2000 1999 % change (In millions, except per share data) Net Revenues $1,420 $1,222 16 % Operating Income 154 105 47 % Net Income 117 73 60 % Diluted Earnings Per Share 1.76 1.15 53 % Operating Income* 172 155 11 % Pro-Forma Net Income* 130 114 14 % Pro-Forma Diluted EPS* 1.95 1.81 8 % Working Capital 440 333 32 % Total Assets 1,192 902 32 % Total Stockholders’ Equity 923 663 39 % 2000 PRO-FORMA CORE FINANCIAL HIGHLIGHTS Fiscal years ended March 31, 2000 1999 % change (In millions, except per share data) Net Revenues $ 1,401 $1,206 16 % Operating Income 208 114 82 % Net Income 154 79 95 % Pro-Forma Net Income* 164 120 37 % $ $1,420 1.95 $1,401 $130 $1.81 $1,222 $1,206 $164 $114 $909 $1.19 $898 $120 $73 $74 98 99 00 98 99 00 98 99 00 98 99 00 98 99 00 Consolidated Consolidated Pro-Forma Consolidated Pro-Forma Pro-Forma Core Pro-Forma Core Net Revenues Net Income* Diluted EPS* Net Revenues Net Income* (In millions) (In millions) (In millions) (In millions) FISCAL 2000 OPERATING HIGHLIGHTS • 16 percent increase in EA core net revenues • Signed agreement to be exclusive provider of games • 37 percent increase in EA core net income* on AOL properties • 69 titles released: • Acquired: ™ – 30 PC –DreamWorks Interactive ™ – 30 PlayStation –Kesmai –8 N64 –PlayNation –1 Mac * EXCLUDES GOODWILL AND ONE-TIME ITEMS IN EACH YEAR. AR 2000 EA CHAIRMAN’S LETTER TO OUR STOCKHOLDERS During fiscal year 2000 Electronic Arts (EA) significantly enhanced its strategic position in the interactive entertainment category. -

Delaware Lottery to Motorola to C.B.C

Marquette University Law School Marquette Law Scholarly Commons Faculty Publications Faculty Scholarship 1-1-2008 A Triple Play for the Public Domain: Delaware Lottery to Motorola to C.B.C. Matthew J. Mitten Marquette University Law School, [email protected] Follow this and additional works at: http://scholarship.law.marquette.edu/facpub Part of the Law Commons Publication Information Matthew J. Mitten, A Triple Play for the Public Domain: Delaware Lottery to Motorola to C.B.C., 11 Chap. L. Rev. 569 (2008) Repository Citation Mitten, Matthew J., "A Triple Play for the Public Domain: Delaware Lottery to Motorola to C.B.C." (2008). Faculty Publications. Paper 590. http://scholarship.law.marquette.edu/facpub/590 This Article is brought to you for free and open access by the Faculty Scholarship at Marquette Law Scholarly Commons. It has been accepted for inclusion in Faculty Publications by an authorized administrator of Marquette Law Scholarly Commons. For more information, please contact [email protected]. A Triple Play for the Public Domain: Delaware Lottery to Motorola to C.B.C. Matthew J. Mitten A trilogy of cases decided by federal courts over the past thirty years correctly holds that game scores, real-time game accounts, and player statistics are in the public domain. There is a consistent thread in these federal cases, based on sound legal, public policy and economic analysis, which justifies judicial rejection of state law claims by sports leagues and players asserting exclusive rights to this purely factual information. -

Mlb on Fox Ready for Prime Time Triple-Play

MLB ON FOX READY FOR PRIME TIME TRIPLE-PLAY Sweet 16th Season Lineup Features Three Consecutive Saturday Night Dates New York & Los Angeles – MLB on FOX, the sport's highest-rated and most-watched television package, today unveils its 16th FOX SATURDAY BASEBALL GAME OF THE WEEK schedule, highlighted by three consecutive prime time dates in May, the most ever on the network’s regular-season schedule. Coverage for the special prime time windows begins at 7:00 PM ET. The select dates are Saturday, May 14 featuring baseball’s most storied rivalry when the Boston Red Sox face the New York Yankees in the Bronx; Saturday, May 21 highlighting four compelling Interleague matchups including the Chicago Cubs taking on the Boston Red Sox and the Mets at Yankees Subway Series; and Saturday, May 28 when FOX Sports regionalizes six compelling matchups in the broadcast window. The Memorial Day weekend lineup includes National League East rivals Philadelphia Phillies and New York Mets squaring off in Flushing while Adrian Gonzalez and the reloaded Red Sox battle Jim Leyland’s Tigers in Detroit. This year’s schedule also features three dates with 1:00 PM ET start times so FOX Sports can bring fans complete and uninterrupted coverage of both its Saturday night NASCAR Sprint Cup races and the FOX SATURDAY BASEBALL GAME OF THE WEEK. “Last year’s FOX SATURDAY BASEBALL prime time dates were a huge success with fans and a terrific complement to our afternoon games,” said FOX Sports President Eric Shanks. “Now with this season’s enhanced prime time schedule combined with our strong afternoon slate, we’ll again use this unmatched platform to continue the success of MLB’s most-watched television package.” FOX Sports, the broadcast television home of Major League Baseball’s signature events, gears up for another tremendous season of excitement, drama and unforgettable moments.