Appendix 2 for More on This

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


College Basketball Tips Off on XM Satellite Radio with the ACC, Big East, Big Ten, Big 12, Pac-10 and SEC

NEWS RELEASE College Basketball Tips Off on XM Satellite Radio with the ACC, Big East, Big Ten, Big 12, Pac-10 and SEC 11/5/2007 WASHINGTON, Nov. 5 /PRNewswire-FirstCall/ -- XM Satellite Radio will air more than 1,000 college basketball games from all six power conferences during the 2007-2008 season, starting November 6 when Kentucky hosts Central Arkansas at Rupp Arena in Lexington. The game will air nationwide on XM channel 199 at 7:00 pm ET/4:00 pm PT. (Logo: http://www.newscom.com/cgi-bin/prnh/20070313/XMLOGO ) As the official satellite radio network of the ACC, Big East, Big Ten, Big 12, Pac-10, and SEC, XM will air college basketball games on a suite of 17 sports play-by-play channels. A weekly schedule of college basketball games on XM is available online at http://www.xmradio.com/collegesports. XM is the satellite radio home for 16 of the top 25 teams in the pre-season ESPN/USA Today college basketball poll, including top-ranked North Carolina. In March, XM will be the exclusive satellite radio network for the conference basketball tournaments for each of the six power conferences. "You can hear college basketball games from all over the country on XM Radio," said Kevin Straley, XM senior vice president of news, talk, and sports programming. "Team radio announcers are heard nationwide on XM. You can follow your team wherever you are." As the nation's leading satellite radio company with more than 8.5 million subscribers, XM airs college basketball, football, and other sports from the six power conferences for listeners from coast to coast. -

Ed Phelps Logs His 1,000 DTV Station Using Just Himself and His DTV Box. No Autologger Needed

The Magazine for TV and FM DXers October 2020 The Official Publication of the Worldwide TV-FM DX Association Being in the right place at just the right time… WKMJ RF 34 Ed Phelps logs his 1,000th DTV Station using just himself and his DTV Box. No autologger needed. THE VHF-UHF DIGEST The Worldwide TV-FM DX Association Serving the TV, FM, 30-50mhz Utility and Weather Radio DXer since 1968 THE VHF-UHF DIGEST IS THE OFFICIAL PUBLICATION OF THE WORLDWIDE TV-FM DX ASSOCIATION DEDICATED TO THE OBSERVATION AND STUDY OF THE PROPAGATION OF LONG DISTANCE TELEVISION AND FM BROADCASTING SIGNALS AT VHF AND UHF. WTFDA IS GOVERNED BY A BOARD OF DIRECTORS: DOUG SMITH, SAUL CHERNOS, KEITH MCGINNIS, JAMES THOMAS AND MIKE BUGAJ Treasurer: Keith McGinnis wtfda.org/info Webmaster: Tim McVey Forum Site Administrator: Chris Cervantez Creative Director: Saul Chernos Editorial Staff: Jeff Kruszka, Keith McGinnis, Fred Nordquist, Nick Langan, Doug Smith, John Zondlo and Mike Bugaj The WTFDA Board of Directors Doug Smith Saul Chernos James Thomas Keith McGinnis Mike Bugaj [email protected] [email protected] [email protected] [email protected] [email protected] Renewals by mail: Send to WTFDA, P.O. Box 501, Somersville, CT 06072. Check or MO for $10 payable to WTFDA. Renewals by Paypal: Send your dues ($10USD) from the Paypal website to [email protected] or go to https://www.paypal.me/WTFDA and type 10.00 or 20.00 for two years in the box. Our WTFDA.org website webmaster is Tim McVey, [email protected]. -

Good Sports, Fox Sports Supports and Fox Sports “Magnify” Series Team up to Bring Sports Equipment to Chicago Youth

Media Release Contact Christy Keswick, Chief Operating Officer T: 617-934-0545 E: [email protected] GOOD SPORTS, FOX SPORTS SUPPORTS AND FOX SPORTS “MAGNIFY” SERIES TEAM UP TO BRING SPORTS EQUIPMENT TO CHICAGO YOUTH 21st Century Fox Will Match Every Donation to Good Sports Made Through www.goodsports.org/shotinthedark, Dollar-for-Dollar, Up to $25,000 LOS ANGELES, March 2, 2018 – Good Sports has teamed up with FOX Sports Supports and FOX Sports Films’ MAGNIFY documentary “Shot in the Dark,” providing sports and fitness opportunities to the youth of Chicago. Supporters of the initiative can visit www.goodsports.org/shotinthedark to personally handpick sports equipment that will be directly donated by Good Sports to the youth programs in Chicago. In addition, all donations made through the “Shot in the Dark” webpage will be matched up to $25,000 by 21st Century Fox. “In connection to these powerful, sports and community narratives, we’re committed to meaningfully working, within our FOX Sports Supports organization and alongside others, to help neighborhoods and schools capitalize on the awareness that ‘Magnify’ brings to the stories,” says Charlie Dixon, Executive Vice President, Content at FOX Sports. “We are excited to kick off this partnership with FOX Sports. This is such a wonderful opportunity for the kids we serve,” said Christy Keswick, Good Sports Chief Operating Officer. “Chicago is a key market for Good Sports and we are thrilled to be able to address the high need in the area through this new partnership. The match is going to be instrumental in getting a significant number of Chicago kids in the game.” “Shot in the Dark” is produced by Los Angeles Media Fund, executive produced by Dwyane Wade and Chance the Rapper and directed by Dustin Nakao-Haider for Bogie Films, and in association with ZZ Productions . -

New Solar Research Yukon's CKRW Is 50 Uganda

December 2019 Volume 65 No. 7 . New solar research . Yukon’s CKRW is 50 . Uganda: African monitor . Cape Greco goes silent . Radio art sells for $52m . Overseas Russian radio . Oban, Sheigra DXpeditions Hon. President* Bernard Brown, 130 Ashland Road West, Sutton-in-Ashfield, Notts. NG17 2HS Secretary* Herman Boel, Papeveld 3, B-9320 Erembodegem (Aalst), Vlaanderen (Belgium) +32-476-524258 [email protected] Treasurer* Martin Hall, Glackin, 199 Clashmore, Lochinver, Lairg, Sutherland IV27 4JQ 01571-855360 [email protected] MWN General Steve Whitt, Landsvale, High Catton, Yorkshire YO41 1EH Editor* 01759-373704 [email protected] (editorial & stop press news) Membership Paul Crankshaw, 3 North Neuk, Troon, Ayrshire KA10 6TT Secretary 01292-316008 [email protected] (all changes of name or address) MWN Despatch Peter Wells, 9 Hadlow Way, Lancing, Sussex BN15 9DE 01903 851517 [email protected] (printing/ despatch enquiries) Publisher VACANCY [email protected] (all orders for club publications & CDs) MWN Contributing Editors (* = MWC Officer; all addresses are UK unless indicated) DX Loggings Martin Hall, Glackin, 199 Clashmore, Lochinver, Lairg, Sutherland IV27 4JQ 01571-855360 [email protected] Mailbag Herman Boel, Papeveld 3, B-9320 Erembodegem (Aalst), Vlaanderen (Belgium) +32-476-524258 [email protected] Home Front John Williams, 100 Gravel Lane, Hemel Hempstead, Herts HP1 1SB 01442-408567 [email protected] Eurolog John Williams, 100 Gravel Lane, Hemel Hempstead, Herts HP1 1SB World News Ton Timmerman, H. Heijermanspln 10, 2024 JJ Haarlem, The Netherlands [email protected] Beacons/Utility Desk VACANCY [email protected] Central American Tore Larsson, Frejagatan 14A, SE-521 43 Falköping, Sweden Desk +-46-515-13702 fax: 00-46-515-723519 [email protected] S. -

Station Ownership and Programming in Radio

FCC Media Ownership Study #5: Station Ownership and Programming in Radio By Tasneem Chipty CRA International, Inc. June 24, 2007 * CRA International, Inc., 200 Clarendon Street, T-33, Boston, MA 02116. I would like to thank Rashmi Melgiri, Matt List, and Caterina Nelson for helpful discussions and valuable assistance. The opinions expressed here are my own and do not necessarily reflect those of CRA International, Inc., or any of its other employees. Station Ownership and Programming in Radio by Tasneem Chipty, CRA International, June, 2007 I. Introduction Out of concern that common ownership of media may stifle diversity of voices and viewpoints, the Federal Communications Commission (“FCC”) has historically placed limits on the degree of common ownership of local radio stations, as well as on cross-ownership among radio stations, television stations, and newspapers serving the same local area. The 1996 Telecommunications Act loosened local radio station ownership restrictions, to different degrees across markets of different sizes, and it lifted all limits on radio station ownership at the national level. Subsequent FCC rule changes permitted common ownership of television and radio stations in the same market and also permitted a certain degree of cross-ownership between radio stations and newspapers. These changes have resulted in a wave of radio station mergers as well as a number of cross-media acquisitions, shifting control over programming content to fewer hands. For example, the number of radio stations owned or operated by Clear Channel Communications increased from about 196 stations in 1997 to 1,183 stations in 2005; the number of stations owned or operated by CBS (formerly known as Infinity) increased from 160 in 1997 to 178 in 2005; and the number of stations owned or operated by ABC increased from 29 in 1997 to 71 in 2005. -

The Stars Group and FOX Sports Announce Historic U.S. Media and Sports Wagering Partnership

The Stars Group and FOX Sports Announce Historic U.S. Media and Sports Wagering Partnership May 8, 2019 Toronto and Los Angeles, May 8, 2019 – The Stars Group Inc. (Nasdaq: TSG)(TSX: TSGI) and FOX Sports, a unit of Fox Corporation (Nasdaq: FOXA, FOX), today announced plans to launch FOX Bet, the first-of-its kind national media and sports wagering partnership in the United States. The Stars Group and FOX Sports have entered a long-term commercial agreement through which FOX Sports will provide The Stars Group with an exclusive license to use certain FOX Sports trademarks. The Stars Group and FOX Sports expect to launch two products in the Fall of 2019 under the FOX Bet umbrella. One will be a nationwide free-to-play game, awarding cash prizes to players who correctly predict the outcome of sports games. The second product, which will be named FOX Bet, will give customers in states with regulated betting the opportunity to place real money wagers on the outcome of a wide range of sporting events in accordance with the applicable laws and regulations. In addition to the commercial agreement of up to 25 years and associated product launches, Fox Corporation will acquire 14,352,331 newly issued common shares in The Stars Group, representing 4.99% of The Stars Group’s issued and outstanding common shares, at a price of $16.4408 per share, the prevailing market price leading up to the commencement of exclusive negotiations. The Stars Group currently intends to use the aggregate net proceeds of approximately $236 million for general corporate purposes and to prepay outstanding indebtedness on its first lien term loans. -

Steve Czaban WTEM Radio 980, Fox Sports Radio

Steve Czaban WTEM Radio 980, Fox Sports Radio Steve Czaban is one of the most experienced daily sports talk radio hosts in the nation. In his 18 year professional career, Steve has worked for all three major syndicated sports talk networks (Sporting News, ESPN Radio, Fox Sports Radio), filled in as a guest host for popular national host Jim Rome, and has worked locally in markets including Santa Barbara, Chicago, Milwaukee, Charlotte and Washington D.C. Steve (or "Czabe" as his friends and listeners have always called him) is a native of Fairfax County, Virginia. He was born on June 1, 1968 and grew up on what he facetiously calls the "mean streets of McLean." His father is a retired federal worker, his mother a retired teacher. He has one older brother Jim, and one younger sister, Ann Marie. Steve attended Cooper Middle School and Langley High School, both in McLean, Virginia. He received a BS in Communications and Political Science from the University of California Santa Barbara in 1990. Following graduation, he did play-by-play for UCSB Gaucho football and basketball and hosted a sports show on news-talk KTMS-AM 1260 in Santa Barbara from 1990-1994. After a summer anchoring Team Tickers at WTEM-AM 570 in Washington, DC, Steve moved to Chicago to host the morning show for the One-On-One Sports Radio Network in 1994-1997. In 1998-1999, he hosted the afternoon show on all-sports WFNZ-AM 610 in Charlotte, North Carolina which was named "Best Radio Show." Following six months at ESPN Radio, Czabe returned to WTEM in Washington, DC to join “the Sports Reporters” with Andy Pollin in 2000. -

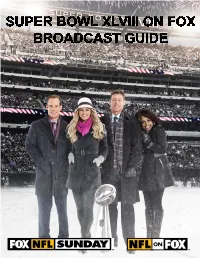

Super Bowl XLVIII on FOX Broadcast Guide

TABLE OF CONTENTS MEDIA INFORMATION 1 PHOTOGRAPHY 2 FOX SUPER BOWL SUNDAY BROADCAST SCHEDULE 3-6 SUPER BOWL WEEK ON FOX SPORTS 1 TELECAST SCHEDULE 7-10 PRODUCTION FACTS 11-13 CAMERA DIAGRAM 14 FOX SPORTS AT SUPER BOWL XLVIII FOXSports.com 15 FOX Sports GO 16 FOX Sports Social Media 17 FOX Sports Radio 18 FOX Deportes 19-21 SUPER BOWL AUDIENCE FACTS 22-23 10 TOP-RATED PROGRAMS ON FOX 24 SUPER BOWL RATINGS & BROADCASTER HISTORY 25-26 FOX SPORTS SUPPORTS 27 SUPERBOWL CONFERENCE CALL HIGHLIGHTS 28-29 BROADCASTER, EXECUTIVE & PRODUCTION BIOS 30-62 MEDIA INFORMATION The Super Bowl XLVIII on FOX broadcast guide has been prepared to assist you with your coverage of the first-ever Super Bowl played outdoors in a northern locale, coming Sunday, Feb. 2, live from MetLife Stadium in East Rutherford, NJ, and it is accurate as of Jan. 22, 2014. The FOX Sports Communications staff is available to assist you with the latest information, photographs and interview requests as needs arise between now and game day. SUPER BOWL XLVIII ON FOX CONFERENCE CALL SCHEDULE CALL-IN NUMBERS LISTED BELOW : Thursday, Jan. 23 (1:00 PM ET) – FOX SUPER BOWL SUNDAY co-host Terry Bradshaw, analyst Michael Strahan and FOX Sports President Eric Shanks are available to answer questions about the Super Bowl XLVIII pregame show and examine the matchups. Call-in number: 719-457-2083. Replay number: 719-457-0820 Passcode: 7331580 Thursday, Jan. 23 (2:30 PM ET) – SUPER BOWL XLVIII ON FOX broadcasters Joe Buck and Troy Aikman, Super Bowl XLVIII game producer Richie Zyontz and game director Rich Russo look ahead to Super Bowl XLVIII and the network’s coverage of its seventh Super Bowl. -

Colin Cowherd Joins Fox Sports and Premiere Networks

FOR IMMEDIATE RELEASE Wednesday, Aug. 12, 2015 COLIN COWHERD JOINS FOX SPORTS AND PREMIERE NETWORKS Thought-Provoking Media Personality Hosts New Program Simulcast on FOX Sports 1 and FOX Sports Radio Network Becomes a Regular on FOX NFL KICKOFF Los Angeles -- Colin Cowherd, one of the most thought-provoking sports hosts in the country, joins FOX Sports and Premiere Networks in September as a television, radio and digital personality. The announcement was made today by Jamie Horowitz, President, FOX Sports National Networks and Julie Talbott, President, Premiere Networks. Cowherd is set to host a three-hour sports talk program titled THE HERD beginning Tuesday, Sept. 8 airing simultaneously on FOX Sports 1 and the FOX Sports Radio Network, including AM 570 LA Sports/KLAC, weekdays from 12:00 to 3:00 PM ET. FOX Sports Radio is produced and distributed under a long-term agreement between FOX Sports and Premiere Networks, a subsidiary of iHeartMedia, the leading media company in America with a greater reach in the U.S. than any radio or television outlet. FSRN programming is also available on www.FOXSportsRadio.com, FOXSports.com, as well as iHeartRadio.com and the iHeartRadio mobile app, iHeartMedia’s digital radio platform. In addition to the FOX Sports 1/FSRN simulcast, Cowherd also becomes a key member of the FOX NFL KICKOFF cast, a show which moves from FOX Sports 1 to FOX, airing Sundays at 11:00 AM ET preceding FOX NFL SUNDAY. The show’s full roster is expected to be unveiled in the days ahead. Cowherd is also part of the team contributing to FOX Sports 1’s coverage of Jim Harbaugh’s debut as Michigan head coach when Utah hosts the Wolverines in both teams’ season-opener on Thursday, Sept. -

A Rhetoric of Sports Talk Radio John D

University of South Florida Scholar Commons Graduate Theses and Dissertations Graduate School 11-28-2005 A Rhetoric of Sports Talk Radio John D. Reffue University of South Florida Follow this and additional works at: https://scholarcommons.usf.edu/etd Part of the American Studies Commons Scholar Commons Citation Reffue, John D., "A Rhetoric of Sports Talk Radio" (2005). Graduate Theses and Dissertations. https://scholarcommons.usf.edu/etd/832 This Dissertation is brought to you for free and open access by the Graduate School at Scholar Commons. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Scholar Commons. For more information, please contact [email protected]. A Rhetoric of Sports Talk Radio by John D. Reffue A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy Department of Communication College of Arts and Sciences University of South Florida Co-Major Professor: Eric M. Eisenberg, Ph.D. Co-Major Professor: Daniel S. Bagley III, Ph.D. Elizabeth Bell, Ph.D Gilbert B. Rodman, Ph.D. Date of Approval: November 28, 2005 Keywords: media studies, popular culture, performance studies, masculinity, community © Copyright 2006, John D. Reffue Acknowledgements I would like to begin by thanking my parents, David and Ann Reffue who supported and encouraged my love of communication from my very earliest years and who taught me that loving your work will bring a lifetime of rewards. Special thanks also to my brother and sister-in-law Doug and Eliana Reffue and my grandmother, Julia Tropia, for their love and encouragement throughout my studies and throughout my life. -

Curriculum Vitae January 2021

Jaren C. Pope Curriculum Vitae January 2021 OFFICE ADDRESS CONTACT INFORMATION Department of Economics Phone: (801) 422-2037 Brigham Young University Fax: (801) 422-0194 2110 West View Building Email: [email protected] Provo, UT 84602-2363 Web: http://jarenpope.weebly.com/ EDUCATION Degree Field University Date Ph.D. Economics North Carolina State University December, 2006 M.A. Economics North Carolina State University December, 2004 B.A. Economics Brigham Young University August, 2001 EMPLOYMENT HISTORY 2018 - present Full Professor, Department of Economics, Brigham Young University. 2013 - 2018 Associate Professor, Department of Economics, Brigham Young University. 2010 - 2013 Assistant Professor, Department of Economics, Brigham Young University. 2006 - 2010 Assistant Professor, Department of Ag. & Applied Economics, Virginia Tech. 2002 - 2006 Graduate Research Fellow, Center for Environmental and Resource Policy, North Carolina State University. PUBLICATIONS Journal Articles: “State Policies and Intergenerational Mobility.” (with Lars Lefgren and David Sims), forthcoming in the Journal of Human Resources. “Best Practices in Using Hedonic Property Value Models for Welfare Measurement.” (with Kelly Bishop, Nicolai Kuminoff, Spencer Banzhaf, Kevin Boyle, Katherine von Gravenitz, V. Kerry Smith, and Christopher Timmins), Review of Environmental Economics and Policy, (2020) 14(2), 260-281. “Failure to Refinance.” (with Benjamin Keys and Devin Pope), Journal of Financial Economics, (2016) 122(3), 482-499. “Does the Housing Market Value Energy Efficient Homes? Evidence from the Energy Star Program.” (with Chris Bruegge and Carmen Carrion-Flores), Regional Science and Urban Economics, (2016) 57, 63-76. “The Psychological Effect of Weather on Car Purchases.” (with Meghan Busse, Devin Pope and Jorge Silva-Risso), Quarterly Journal of Economics, (2015) 130(1), 371-414. -

530 CIAO BRAMPTON on ETHNIC AM 530 N43 35 20 W079 52 54 09-Feb

| Frequency (kHz) | Callsign | City | Format, identification, slogan | Latitude (d m s) | Longitude (d m s) | Last change in listing (yy-mmm) |
|---|---|---|---|---|---|---|
| 530 | CIAO | BRAMPTON ON | ETHNIC AM 530 | N43 35 20 | W079 52 54 | 09-Feb |
| 540 | CBKO | COAL HARBOUR BC | VARIETY CBC RADIO ONE | N50 36 4 | W127 34 23 | 09-May |
| 540 | CBXQ # | UCLUELET BC | VARIETY CBC RADIO ONE | N48 56 44 | W125 33 7 | 16-Oct |
| 540 | CBYW | WELLS BC | VARIETY CBC RADIO ONE | N53 6 25 | W121 32 46 | 09-May |
| 540 | CBT | GRAND FALLS NL | VARIETY CBC RADIO ONE | N48 57 3 | W055 37 34 | 00-Jul |
| 540 | CBMM # | SENNETERRE QC | VARIETY CBC RADIO ONE | N48 22 42 | W077 13 28 | 18-Feb |
| 540 | CBK | REGINA SK | VARIETY CBC RADIO ONE | N51 40 48 | W105 26 49 | 00-Jul |
| 540 | WASG | DAPHNE AL | BLK GSPL/RELIGION | N30 44 44 | W088 5 40 | 17-Sep |
| 540 | KRXA | CARMEL VALLEY CA | SPANISH RELIGION EL SEMBRADOR RADIO | N36 39 36 | W121 32 29 | 14-Aug |
| 540 | KVIP | REDDING CA | RELIGION SRN VERY INSPIRING | N40 37 25 | W122 16 49 | 09-Dec |
| 540 | WFLF | PINE HILLS FL | TALK FOX NEWSRADIO 93.1 | N28 22 52 | W081 47 31 | 18-Oct |
| 540 | WDAK | COLUMBUS GA | NEWS/TALK FOX NEWSRADIO 540 | N32 25 58 | W084 57 2 | 13-Dec |
| 540 | KWMT | FORT DODGE IA | C&W FOX TRUE COUNTRY | N42 29 45 | W094 12 27 | 13-Dec |
| 540 | KMLB | MONROE LA | NEWS/TALK/SPORTS ABC NEWSTALK 105.7&540 | N32 32 36 | W092 10 45 | 19-Jan |
| 540 | WGOP | POCOMOKE CITY MD | EZL/OLDIES | N38 3 11 | W075 34 11 | 18-Oct |
| 540 | WXYG | SAUK RAPIDS MN | CLASSIC ROCK THE GOAT | N45 36 18 | W094 8 21 | 17-May |
| 540 | KNMX | LAS VEGAS NM | SPANISH VARIETY NBC K NEW MEXICO | N35 34 25 | W105 10 17 | 13-Nov |
| 540 | WBWD | ISLIP NY | SOUTH ASIAN BOLLY 540 | N40 45 4 | W073 12 52 | 18-Dec |
| 540 | WRGC | SYLVA NC | VARIETY NBC THE RIVER | N35 23 35 | W083 11 38 | 18-Jun |
| 540 | WETC # | WENDELL-ZEBULON NC | RELIGION EWTN DEVINE MERCY R. | | | |