A Multi-View Multi-Source Benchmark for Drone-Based Geo-Localization

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Approach to Community Recreation Facility Planning in Edmonton

Approach to Community Recreation Facility Planning In Edmonton Current State of Community and Recreation Facilities Report April 2018 CR_5746 Attachment 3 CR_5746 Attachment 3 Table of Contents 1: Introduction 1 Project Overview and Methodology 1 2: Summary of the 2005 – 2015 Recreation Facility Master Plan 3 Overview of the 2005 – 2015 RFMP 3 2009 RFMP Update 6 Additional Plans Emanating from the 2005 – 2015 RFMP & 2009 Update 7 Infrastructure Milestones 9 3: Community Dynamics 13 Historical Growth Overview 14 Demographics Profile 15 Social Vulnerability 19 Current Population Distribution 21 Anticipated Growth 21 Regional Growth 22 4: Provincial and National Planning Influences 23 A Framework for Recreation in Canada 2015: Pathways to Wellbeing 24 Active Alberta Policy 26 Going the Distance: The Alberta Sport Plan (2014-2024) 27 Canadian Sport for Life 28 Truth and Reconciliation 29 The Modernized Municipal Government Act 30 Alignment with the New Vision and Goals 31 5: Strategic Planning of Key Partners 32 Partnership Approach Overview 33 6: Strategic Planning of other Capital Region Municipalities 35 Regional Infrastructure Overview 36 Strategic Planning and Potential Initiatives 37 Capital Region Board Planning 41 CR_5746 Attachment 3 Table of Contents 7: Leading Practices and Trends: Recreation 42 General Trends in Recreation 43 Physical Activity and Wellness Levels 43 Participation Trends 44 Recreation Activity Shifts 47 Understanding the Recreation Facility Consumer in Edmonton 50 Market Share 50 Summary of Market Share Position -

EAC Annual Report 2018

q01 q02 q03 q05 q04 ABOUT THE EAC The Edmonton Arts Council (EAC) is a not-for- profit society and charitable organization that supports and promotes the arts community in Edmonton. The EAC works to increase the profile and involvement of arts and culture in all aspects of our community life. q06 BOARD OF Vivianne Favreau-Sparrow, Debbie Houle Treasurer Aasttha Khajuria DIRECTORS Noel Xavier, Secretary Morgan McClelland as of December 31, 2018 Mark Power Members at Large Mireille Rijavec Executive Committee Patricia Darbasie Don Robinson Jeff Haslam, Chair Christopher Filipowicz Kent Sutherland Glen Erickson, Vice Chair Michael Hamm Will Truchon Ex-Officio Marian Bruin, CoE* (December 2017 - March 2018) The Edmonton Arts Council thanks Heather Inglis, Jackie Foord, CoE* (April 2018 - September 2018) Scott Portingale, Murray Utas, and Christopher White Niki Anderson, CoE* (September 2018 - Present) for their contributions to the EAC board in 2018. Cheryll Watson, EEDC** (January - March 2018) * City of Edmonton Maggie Davison, EEDC** (April 2018 - Present) ** Edmonton Economic Development Corporation STAFF Grace Law Amber Combden (January - April 2018) as of December 31, 2018 Kathryn Morin Olivia Latta Breanna Mroczek Kaelyn Saunders (January 2018) Annette Aslund Chris Roberts Amanda Skopik (on maternity leave) Sanjay Shahani Betty Thomson Jenika Sobolewska Chelsea Boida Churchill Square Programs Bobbie Todd Andrea Bowes Adam Blocka David Turnbull Jamie Chapelsky Liam Brown Eva Marie Clarke Jenna Turner (on maternity leave) Lee-Ann Grenier Shirley Combden Alexandra Perala Justina Verboom Kristina de Guzman Bob Rasko Stephen Williams Penélope Haro Aranda Marwa Salman Robert Harpin TIX on the Square Aleysha Sarinn Melanie Haynes Nicole Boychuk Ben Sures Sally Kim Liza Chatterjee Melissa Wolfinger EAC continued to support operational development of the new Edmonton Screen Industries Office by providing office space and resources to CEO Josh Miller. -

Duggan Volunteer Opportunities

Issue No. 319 FREE newsletter to all Duggan residents Compliments of the July/August Duggan Community League 2007 Duggan Details www.duggancommunity.ab.ca Pooling Resources and Pulling Together. One of the major problems with a volunteer along her block to inform everyone of some policies/procedures/resources, I’ve tried to share organization is the sheer amount of work it suspicious activity taking place along the street. the vision of where this all could lead, it’s up to entails. The funny thing is, in my exerience The effort, while a good start, unfortunately them to give just a little bit more of themselves probably 90% of the time is spent reinventing won’t have much effect unless we are willing to to benefit the whole. The DCL will act as a the wheel. pool our resources and start pulling together. central repository for ANY useful information (of course we could use some help to organize it Case in point…we have a very active soccer All this effort has wonderful short term effects, if it comes in). council which puts on an unbeatable tournament unfortunately I, like a majority of residents here, every year. To do this, they have to call volunteers, are probably planning on being here for the long A community league is about bringing people train people, arrange sponsors, purchase supplies haul. And over the long haul, I find that few together, for sharing, for empowering our and equipment, and a whole list of other things. people are willing to keep up the solitary work community. -

Arizona State University June 30, 2005 Financial Report

2005 FINANCIAL REPORT On the front cover Clockwise from the top – In August 2004 ASU welcomed 58,156 students to its campuses. Included were 162 National Merit Scholars and over 7,700 fi rst time freshmen. More than 27% of the fi rst time freshmen on the campuses were rated in the top 10% of their high school graduating classes. During the past 11 years ASU has had more students than any other public university selected for the USA Today’s ranking of the nation’s top 20 undergraduates. When compared against private universities, ASU ranks 3rd overall in students selected for this ranking. ASU’s student population represents all 50 states and more than 140 nations. As a part of the University’s initiatives to enhance the freshmen classroom experience, the average class size of core freshmen classes, such as English composition and college algebra courses, has been reduced. ASU’s Barrett Honors College is considered among the top honors colleges in the nation and selectively recruits academically outstanding undergraduates. In the 2004/2005 academic year ASU had one of the largest classes of freshmen National Merit Scholars of any public university. ASU is committed to community outreach through its schools and colleges, non academic departments, and student organizations. Often these programs involve interaction with local schools or neighborhoods. Programs include helping American Indian students who have an interest in health care programs explore those interests in the nursing, math, and science fi elds; exposing the children of migrant farm workers to various technology programs and equipment; providing professional development resources to Arizona’s K 12 teachers through a web portal; and preparing minority engineering students for the college experience. -

Bill to Make Private College Police Records Public Officials Disagree On

NEWS MUSE SPORTS Boston crime has decreased since Documentary director Douglas Tirola Women’s Hockey gears up for last year. discusses tasteless nature of National non-conference tilt with Clarkson. p. 2 Lampoon. p. 10 p. 5 44°/62° DAILYFREEPRESS.COM CLEAR (FORECAST.IO) @DAILYFREEPRESS THURSDAY, OCTOBER 15, 2015 THE INDEPENDENT WEEKLY STUDENT NEWSPAPER AT BOSTON UNIVERSITY YEAR XLIV. VOLUME XC. ISSUE VII. BU lacks stance on trigger warnings, gives professors personal discretion BY PAIGE SMITH DAILY FREE PRESS STAFF Despite several universities across the country forgoing the use of trigger warnings on campus, Boston University holds no official stance of ordinance governing the use of trig- ger warnings in academic settings. Administrators at both American Uni- versity and Cornell University have spoken out against trig-ger warnings, with American cit- PHOTO BY PAIGE TWOMBLY/DAILY FREE PRESS CONTRIBUTOR ing freedom of speech as its defense. A bill seeking to make private college police records public is in a committee in the Massachusetts State Legislature. Boston Univer- “The Faculty Senate does not endorse of- sity students currently have to go to the Boston University Police Department and have the records read to them because they do fering ‘trigger warnings’ or otherwise labeling not exist online. controver-sial material in such a way that stu- dents construe it as an option to ‘opt out’ of Bill to make private college police records public engaging with texts or concepts, or otherwise not participating in intellectual inquiries,” the BY CAROLYN HOFFMAN state police officer of a college, university or College, Harvard University Police Depart- AU Faculty Senate wrote in a statement. -

Bentley EMAIL:Layout 1

11710 - 87 Avenue, Edmonton, Alberta www.bentleycondos.ca Hawrelak Park, steps away from the Bentley. Victoria Golf Course, minutes away from the Bentley. A new lifestyle... From Trendy Enjoy urban life surrounded by Boutiques... nature. From tranquil nature walks to indulging in a shopper’s To Tranquil Walks paradise of trendy boutiques in the Park and specialty shops. At the Bentley in Windsor Park... you truly can have it all. Trendy boutiques along Whyte Avenue, minutes away from the Bentley. 2 3 Bentley is a luxurious condominium that combines European elegance with contemporary design and superior quality finishes. Located in historic Windsor Park, Bentley is just steps away from the world class University of Alberta, University Hospital, Edmonton Clinic and the Mazankowsky Alberta Heart Institute. A Serene Sanctuary... Windsor Park is one of Edmonton’s oldest and most exclusive neighbourhoods, characterized by large in the heart of the mature trees, beautiful scenery, and charming multi-million dollar homes. University District Walk your children to school in the morning to the renowned Windsor Park school, and after a busy day at work University of Alberta Hospital, steps away from the Bentley. unwind in your luxurious Bentley home surrounded by nature, walking trails, and parks. The Windsor Park area boasts a plethora of restaurants, boutiques, and countless professional amenities and services. European elegance and luxury await you at the Bentley. Bentley in Windsor Park – you have indeed arrived! University of Alberta Butterdome, adjacent to the Bentley. 4 5 The elegant graceful exterior of the building is comprised of red brick and stone for a classic old world look designed to withstand the test of time, and become a Classic landmark for generations to come. -

Suffolk University Institutional Master Plan Notification Form

SUFFOLK UNIVERSITY Institutional Master Plan Notification Form Submitted to Prepared by Boston Redevelopment Authority Vanasse Hangen Brustlin, Inc. Boston, Massachusetts In association with Submitted by Chan Krieger Sieniewicz Suffolk University CBT/Childs Bertman Tseckares, Inc. Boston, Massachusetts Rubin & Rudman LLP Suffolk Construction January, 2008 SUFFOLK UNIVERSITY Table of Contents 1. INTRODUCTION Background.............................................................................................................................1-1 The Urban Campus ................................................................................................................1-2 Institutional Master Planning Summary ..................................................................................1-3 2002 Suffolk University Institutional Master Plan....................................................1-3 2005 Amendment to Suffolk University Institutional Master Plan ...........................1-4 2007 Renewal of the Suffolk University Institutional Master Plan...........................1-5 2007 Amendment to Suffolk University Institutional Master Plan – 10 West Street Student Residence Hall Project .....................................................1-5 Public Process and Coordination............................................................................................1-6 Institutional Master Plan Team .............................................................................................1-10 2. MISSION AND OBJECTIVES Introduction.............................................................................................................................2-1 -

Culturing Philanthropy GSDM’S Philanthropic Culture Is in Place, and the Potential Is Unlimited

Boston University Henry M. Goldman School of Dental Medicine impressions Culturing Philanthropy GSDM’S philanthropic culture is in place, and the potential is unlimited. Quoted “ Our 50th anniversary CONTENTS lets us reflect upon Fall 2012 the journey thus 30 far—and take a look at our future.” — Dean Jeffrey W. Hutter A pacesetter Dean’s Message | page 2 from Venezuela Ernesto Muller PERIO 61 thinks that lessons in philanthropy should be part of every dental student’s education. 32 SECTIONS 3 White Coat Tradition Continues • The Mental Blocks Play BU • Group Practice Coming Soon • Dr. Dulong on BORID • Dr. Kaufman in New York Times • Every dollar counts 3 News in Brief Alums in the Military • PERIO Creates New Implant Professor Anita Gohel says, “You’re not System • Dr. Friedman as MDS President • Tri-School involved with GSDM for just four years. Symposium Breaks Record You belong to it for a lifetime.” 33 25 The Unstoppable Shaeffer Family • Liz Pinone Tackles Career Services • Dr. Chogle Leads 15 Spotlight Endodontics • Pamela Baldassarre DMD 82 PERIO 84 Giving back on Remembering BU • Metcalf Winners McManama through teaching and Chou • Commencement 2012 Recap Carl McManama believes teaching future dental educators is among the greatest ways to give. 50 34 Run DMD! Alums Sponsor Brunch • Deedee Gurin Alumni DMD 97 Grows Her Practice • Meet Diversity Director 41 Larry Dunham DMD 83 • Alumni Survey Results • On the Road with Kimberley • Class Notes Inspired by a mentor Paul Fugazzotto PERIO 81 pledged in honor of the late Gerald M. Kramer. 35 Fall 2012 IMPRESSIONS MANAGING EDITOR Francie King DESIGN PHONE 617-638-5147 A publication for the Jackie Rubin Boston University Owen Edwards Design FAX 617-638-4895 alumni and friends of Assistant Director of Development EMAIL [email protected] PHOTOGRAPHY Boston University Publications & Media Communications www.bu.edu/dental COMPREHENSIVE CAMPAIGN BU Photography Henry M. -

Zone of the Game May 12 May 26 Millwoods Soccer SWEMSA - Edmonton Sting

issue 014 2013 edition Eddies Announce National TV Deal Nine FC Edmonton games will be shown on The Score Edmonton, Alberta (May 10, 2013) – FC Edmonton and Rogers have inked a partnership which will see at least six Eddies games live on The Score this NASL season. Eddies FC Edmonton has rapidly become one of Edmonton’s most exciting professional sports franchises, playing to loud and boisterous crowds at least 14 times a year. Thanks to the newly-signed partnership, The Score will broadcast nine FC Edmonton home games this year, starting May 12 vs. Atlanta and culminating October 27 with a game Go against Tampa Bay. “Throughout the club’s first three seasons, FC Edmonton has endeavoured to introduce itself to the Edmonton sports community as a fun and affordable professional entertain- ment choice,” said general manager Rod Proudfoot. “This new deal with Rogers will further raise the profile of FC Ed- LIVE! monton, our sponsors and our supporters within Edmonton and within the North American Soccer League. And with The Score’s reach, FC Edmonton will now become a more recognizable brand across the country.” In addition to having the games televised live, City Ed- monton has committed to help support the broadcasts with on-air promotional spots, commercials, creative features and local live content. The Score is Canada’s third-largest specialty sports channel with 6.6 million television subscribers, delivering breaking sports news, analysis, highlights and live events. City Edmonton offers viewers intensely-local, urban- oriented, culturally-diverse television programming. Media are invited to Clarke Stadium today where FC Edmonton owner Tom Fath, coaches, players and represen- tatives from the City of Edmonton will be on-hand to unveil the stadium’s new stands and seats, as well as a few other exciting announcements. -

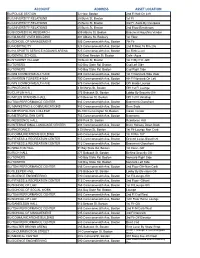

Pepsi Machine Inventory 2013 Web Site.Xlsx

ACCOUNT ADDRESS ASSET LOCATION BU/POLICE STATION 32 Haw, Boston 2nd Fl Hall On Left BU/UNIVERSITY RELATIONS 25 Buick St, Boston 1st Fl BU/UNIVERSITY RELATIONS 25 Buick St, Boston 3rd Fl -Soda By Overpass BU/UNIVERSITY RELATIONS 25 Buick St, Boston 2nd Floor Breakroom BU/BIODMEDICAL RESEARCH 609 Albany St, Boston Basement Aquafina Vendor BU/GILMORE VIVES BUILDING 801 Albany St, Roxbury 1st Floor BU/SCHOOL OF MANAGEMENT 595 Commonwealth Ave, Boston 7th Flr BU/HOSPITALITY 928 Commonwealth Ave, Boston 2nd Fl Next To Rm 206 BU/HA SPORTS SERVICE/AGGANIS ARENA 925 Commonwealth Ave, Boston Ice Rink Level BU/DENTAL SCHOOL 100 East Newton St, Boston Cafe--Aqua BU/STUDENT VILLAGE 10 Buick St, Boston 1st Fl By P.O.-Glfr BU/TOWERS 140 Bay State Rd, Boston Csd Left Side BU/TOWERS 140 Bay State Rd, Boston Csd Right Side BU/808 COMMONWEALTH AVE 808 Commonwealth Ave, Boston 1st Fl Noncarb Side Door BU/WARREN TOWERS #1824 700 Commonwealth Ave, Boston 4th Fl Noncarb On Left BU/575 COMMONWEALTH AVE 575 Commonwealth Ave, Boston Glfr Inside Lounge BU/PHOTONICS 8 St Marys St, Boston Glfr 1st Fl Lounge BU/CLAFLIN HALL 273 Babcock St, Boston Lobby By Security-Glfr BU/MYLES STANDISH HALL 610 Beacon St, Boston Glfr 1st Fl Vending BU/TSAI PERFORMANCE CENTER 685 Commonwealth Ave, Boston Basement-Glassfront BU MARKETING & COMMUNICATIONS 985 Commonwealth Ave, Boston Brrm Soda BU/METROPOLITAN COLLEGE 96-100 Cummington St, Boston Inside Vendor BU/METROPOLITAN CAFE 755 Commonwealth Ave, Boston Basement BU/RESIDENCE HALL 509 Park Dr, Boston Residence Hall BU/INTERNATIONAL -

Capital Improvement Plan 2018-2020 FISCAL YEARS 2018-2020 CAPITAL IMPROVEMENT PLAN

Capital Improvement Plan 2018-2020 FISCAL YEARS 2018-2020 CAPITAL IMPROVEMENT PLAN SUBMITTED FOR APPROVAL BY THE ARIZONA BOARD OF REGENTS SEPTEMBER 2016 Dear Members of the Arizona Board of Regents: On behalf of Arizona State University, I am pleased to present the ASU FY 2018-2020 Capital Improvement Plan (CIP). ASU continues to make meaningful progress in its evolution as a model for 21st century higher education, dedicated to access, excellence and impact. In keeping with the ambitious trajectory reflected in the ASU Strategic Enterprise Framework and the ASU Campus Master Plan, the projects proposed in this CIP focus on addressing the need for sustainable facilities that support the achievement of our institutional objectives. For this CIP, ASU has set as an FY 2018 priority a new, energy-efficient parking structure that will be located adjacent to the core of the Tempe campus and downtown Tempe. The multi-tiered structure will replace existing surface parking that will be displaced by planned new development on this site. This project is intended to provide students, faculty and staff with a high-quality facility that will enable their success and support the key elements of ASU’S Strategic Enterprise Framework, including: - Achieving a 57 percent increase in degree production - Establishing national standing in academic quality and the impact of colleges and schools in every field - Enhancing local impact and social embeddedness - Expanding research performance to $815 million in annual research expenditures by 2025 Details regarding this proposed project can be found in the Three-Year Capital Improvement Plan and the FY 2018 Project Description tab. -

Iconoclast Koffiehuis Changes Café Game 15 SU

Feature SU keeps Dewey’s afloat despite financial loss 13 March 18th, 2015 Issue No. 29 Volume 105 THE gatewayTHE OFFICIAL STUDENT NEWSPAPER AT THE UNIVERSITY OF ALBERTA News Opinion Board of Governors approves all Letters home to parents of GSA five market modifiers 3 students a poor choice 9 Arts Sports Iconoclast Koffiehuis changes café game 15 Bears hockey golden once again 20 news 2 THEgateway WWW.GTWY.CA March 18, 2015 editorial staff complaints contributors THE gateway editor-IN-chief Andrea Ross Comments, concerns or complaints about The Gateway’s Zach Borutski, Jason Timmons, Danielle Carlson, [email protected] | 492.5168 content or operations should be first sent to the Editor-in-Chief Jason Shergill, Mitchell Sorenson, Taylor Evans, Steven Andrais, Christian at the address above. If the Editor-in-Chief is unable to resolve a Pagnani, Lisa Szabo, Maggie Schmidt, Adrianna Stanski, Holly Detillieux, visit us at managing editor Kate Black complaint, it may be taken to the Gateway Student Journalism Floyd Robert, Zachary Popowich, Jamie Sarkonak, Hannah Madsen, Alex GTWY.CA [email protected] | 492.6654 Society’s Board of Directors; beyond that, appeal is to the Migdal, Spencer Nichols, Lara Kmech, Randy Savoie, Stefano Jun non-partisan Society OmbudsBoard. The chairs of the Board of online editor Kevin Schenk Directors and the OmbudsBoard can be reached at the address Wednesday, March 18, 2015 [email protected] | 492.6652 above. haiku Volume 105 Issue No. 29 news editor Richard Catangay-Liew copyright [email protected] | 492.7308 Elections are done! Published since November 21, 1910 All materials appearing in The Gateway bear copyright of their Wait..