From Continuous-Time Domain to Microcontroller Code

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Virtual Analog (VA) Filter Implementation and Comparisons. Copyright © 2013 Will Pirkle

App Note 4: Virtual Analog (VA) Filter Implementation and Comparisons. Copyright © 2013 Will Pirkle. I have had several requests from readers to do a Virtual Analog (VA) Filter Implementation plug-in. The source of these designs is a book electronically published in June 2012 named The Art of VA Filter Design by Vadim Zavalishin. This awesome piece of work is free and available from many sources including my own site www.willpirkle.com. This short book is an excellent introduction to basic analog filtering theory as well as digital transformations. It is so concise in this respect that I am considering using it as part of the text materials for an Advanced Analog Circuits class I teach. I highly recommend this excellent book - you will need to understand its content to use this App Note; for the most part I use the same variable names as the book so you will want to use it as a reference. Zavalishin's derivations and descriptions are so well thought out and so well written that it makes no sense for me to repeat them here and I don't think it can be simplified any more than it already is in his book. Another reason that I enjoyed this book is that the author followed a similar derivation to reach the bilinear transform as my DSP professor (Claude Lindquist) did when I was in graduate school; in fact I use part of that same derivation in my classes and book. Also similar was the use of an integrator as a prototype filter to generate the discrete time transforms.
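The integrator-as-prototype idea mentioned in this excerpt can be illustrated with a small sketch. It is not taken from the App Note or from Zavalishin's book; it assumes the textbook trapezoidal rule, under which the analog integrator 1/s becomes (T/2)(1 + z^-1)/(1 - z^-1), which is the bilinear transform. The function name `trapezoidal_integrate` and the test ramp are hypothetical.

```python
def trapezoidal_integrate(x, T):
    """Discrete-time integrator using the trapezoidal rule.

    y[n] = y[n-1] + (T/2) * (x[n] + x[n-1]), with x[-1] and y[-1] assumed 0.
    Replacing 1/s by this recursion is equivalent to the bilinear transform.
    """
    y = []
    acc = 0.0    # running integral y[n-1]
    prev = 0.0   # previous input x[n-1]
    for sample in x:
        acc += 0.5 * T * (sample + prev)
        y.append(acc)
        prev = sample
    return y

# Integrate the ramp x(t) = t from t = 0 to t = 1; the exact integral is t^2 / 2.
T = 0.01
ramp = [n * T for n in range(101)]
integral = trapezoidal_integrate(ramp, T)
print(integral[-1])  # close to 0.5
```

The trapezoidal rule is exact for a ramp, which makes it a convenient sanity check before the same recursion is ported to fixed-point microcontroller code.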

Discrete-Time Signals and Systems

Discrete-Time Signals and Systems. Sampling II: Sampling Theorem & Reconstruction. Yogananda Isukapalli. Sampling at different rates: From these figures, it can be concluded that it is very important to sample the signal adequately to avoid problems in reconstruction, which leads us to Shannon’s sampling theorem. Fig. 7.1: Claude Shannon (1916-2001), the man who started the digital revolution. Shannon arrived at the revolutionary idea of digital representation by sampling the information source at an appropriate rate, and converting the samples to a bit stream. Before Shannon, it was commonly believed that the only way of achieving arbitrarily small probability of error in a communication channel was to reduce the transmission rate to zero. All this changed in 1948 with the publication of “A Mathematical Theory of Communication”, Shannon’s landmark work. Shannon’s Sampling theorem: A continuous signal $x(t)$ with frequencies no higher than $f_{max}$ can be reconstructed exactly from its samples $x[n] = x(nT_s)$, if the samples are taken at a rate $f_s \ge 2 f_{max}$, where $f_s = 1/T_s$. This simple theorem is one of the theoretical pillars of digital communications, control and signal processing. Shannon’s Sampling theorem: • States that reconstruction from the samples is possible, but it doesn’t specify any algorithm for reconstruction • It gives a minimum sampling rate that is dependent only on the frequency content of the continuous signal $x(t)$ • The minimum sampling rate of $2 f_{max}$ is called the “Nyquist rate”. Example 1: Sampling theorem, Nyquist rate. For $x(t) = 2\cos(20\pi t)$, find the Nyquist frequency. $x(t) = 2\cos(2\pi(10)t)$. The only frequency in the continuous-time signal is 10 Hz, $\therefore f_{max} = 10$ Hz. Nyquist sampling rate: $f_s = f_{nyq} = 2 f_{max} = 20$ Hz. Fig. 7.2: Continuous-time sinusoid of frequency 10 Hz, sampled at the Nyquist rate, so the theorem states that 2 samples per period are enough.
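The arithmetic of Example 1 can be checked with a short sketch. The helper names `nyquist_rate` and `sample_cosine` are hypothetical and are not part of the lecture notes; the code only restates $f_s = 2 f_{max}$ and $x[n] = x(nT_s)$.

```python
import math

def nyquist_rate(f_max_hz):
    """Minimum sampling rate for a signal with no content above f_max_hz."""
    return 2.0 * f_max_hz

def sample_cosine(amplitude, f_hz, fs_hz, n_samples):
    """Return x[n] = amplitude * cos(2*pi*f*n*Ts) with Ts = 1/fs."""
    ts = 1.0 / fs_hz
    return [amplitude * math.cos(2.0 * math.pi * f_hz * n * ts)
            for n in range(n_samples)]

# x(t) = 2 cos(20*pi*t): angular frequency 20*pi rad/s, so f_max = 10 Hz.
f_max = 20.0 * math.pi / (2.0 * math.pi)
fs = nyquist_rate(f_max)                    # 20 Hz
samples = sample_cosine(2.0, f_max, fs, 4)  # two samples per period
print(fs, [round(s, 6) for s in samples])
```

At exactly the Nyquist rate the samples alternate between +2 and -2. That result depends on the sampling phase, so practical designs usually sample with some margin above $2 f_{max}$.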

ECGR4124 Digital Signal Processing Exam 2 Spring 2017 Name

ECGR4124 Digital Signal Processing Exam 2 Spring 2017. Name: _____ LAST 4 NUMBERS of Student Number: _____ Do NOT begin until told to do so. Make sure that you have all pages before starting. NO TEXTBOOK, NO CALCULATOR, NO CELL PHONES/WIRELESS DEVICES. Open handouts, 2 sheet front/back notes, NO problem handouts, NO exams, NO quizzes. DO ALL WORK IN THE SPACE GIVEN. Do NOT use the back of the pages, do NOT turn in extra sheets of work/paper. Multiple-choice answers should be within 5% of correct value. Show ALL work, even for multiple choice. ACADEMIC INTEGRITY: Students have the responsibility to know and observe the requirements of The UNCC Code of Student Academic Integrity. This code forbids cheating, fabrication or falsification of information, multiple submission of academic work, plagiarism, abuse of academic materials, and complicity in academic dishonesty. Unless otherwise noted: F{} denotes Discrete time Fourier transform {DTFT, DFT, or Continuous, as implied in problem}; F^{-1}{} denotes inverse Fourier transform; ω denotes frequency in rad/sample, Ω denotes frequency in rad/second; ∗ denotes linear convolution, N denotes circular convolution; x*(t) denotes the conjugate of x(t). Useful constants, etc: e ≈ 2.72, π ≈ 3.14, e^2 ≈ 7.39, e^4 ≈ 54.6, e^{-0.5} ≈ 0.607, e^{-0.25} ≈ 0.779, 1/e ≈ 0.37, √2 ≈ 1.41, e^{-2} ≈ 0.135, √3 ≈ 1.73, e^{-4} ≈ 0.0183, √5 ≈ 2.24, √7 ≈ 2.64, √10 ≈ 3.16, ln(2) ≈ 0.69, ln(4) ≈ 1.38, log10(2) ≈ 0.30, log10(3) ≈ 0.48, log10(10) ≈ 1.0, log10(0.1) ≈ -1, 1/π ≈ 0.318, sin(0.1) ≈ 0.1, tan(1/9) ≈ 1/9, cos(π/4) ≈ 0.71, cos(A) cos(B) = 0.5 cos(A - B) + 0.5 cos(A + B), e^{jθ} = cos(θ) + j sin(θ). 5 Points Each, Circle the Best Answer 1.

Digital Signal Processing I Exam 2 Fall 1999 Session 17 Live: 21 Oct

Digital Signal Processing I Exam 2 Fall 1999 Session 17 Live: 21 Oct. 1999 Cover Sheet. Test Duration: 75 minutes. Open Book but Closed Notes. Calculators NOT allowed. This test contains four problems. All work should be done in the blue books provided. Do not return this test sheet, just return the blue books. Problems (No., Topic, Points): 1. Digital Upsampling, 35; 2. Digital Subbanding, 25; 3. Multi-Stage Upsampling/Interpolation, 20; 4. IIR Filter Design Via Bilinear Transform, 20. Problem 1. [35 points] [Figure 1: sketches of the spectrum $X_a(F)$ and the frequency response $H_{LP}(\omega)$, with a block diagram: $x_a(t)$ → Ideal A/D ($F_s = 4W$) → $x[n]$ → ↑2 → $w[n]$ → Lowpass Filter $h_{LP}[n]$ ($\omega_p = \pi/4$, $\omega_s = 3\pi/4$, gain = 2) → $y[n]$.] The analog signal $x_a(t)$ with CTFT $X_a(F)$ shown above is input to the system above, where $x[n] = x_a(n/F_s)$ with $F_s = 4W$, and $$h_{LP}[n] = \frac{\sin\left(\frac{\pi}{2} n\right)}{\frac{\pi}{2} n} \, \frac{\cos\left(\frac{\pi}{4} n\right)}{1 - \frac{n^2}{4}}, \quad -\infty < n < \infty,$$ such that $H_{LP}(\omega) = 2$ for $|\omega| \le \frac{\pi}{4}$, $H_{LP}(\omega) = 0$ for $\frac{3\pi}{4} \le |\omega| \le \pi$, and $H_{LP}(\omega)$ has a cosine roll-off from 1 at $\omega_p = \frac{\pi}{4}$ to 0 at $\omega_s = \frac{3\pi}{4}$. Finally, the zero inserts may be mathematically described as $$w[n] = \begin{cases} x\left(\frac{n}{2}\right), & n \text{ even} \\ 0, & n \text{ odd} \end{cases}$$ (a) Plot the magnitude of the DTFT of the output $y[n]$, $Y(\omega)$, over $-\pi < \omega < \pi$.

Evaluating Oscilloscope Sample Rates Vs. Sampling Fidelity: How to Make the Most Accurate Digital Measurements

AC 2011-2914: EVALUATING OSCILLOSCOPE SAMPLE RATES VS. SAMPLING FIDELITY. Johnnie Lynn Hancock, Agilent Technologies. About the Author: Johnnie Hancock is a Product Manager at Agilent Technologies Digital Test Division. He began his career with Hewlett-Packard in 1979 as an embedded hardware designer, and holds a patent for digital oscilloscope amplifier calibration. Johnnie is currently responsible for worldwide application support activities that promote Agilent’s digitizing oscilloscopes and he regularly speaks at technical conferences worldwide. Johnnie graduated from the University of South Florida with a degree in electrical engineering. In his spare time, he enjoys spending time with his four grandchildren and restoring his century-old Victorian home located in Colorado Springs. Contact Information: Johnnie Hancock, Agilent Technologies, 1900 Garden of the Gods Rd, Colorado Springs, CO 80907 USA, +1 (719) 590-3183, johnnie [email protected]. © American Society for Engineering Education, 2011. Evaluating Oscilloscope Sample Rates vs. Sampling Fidelity: How to Make the Most Accurate Digital Measurements. Introduction: Digital storage oscilloscopes (DSO) are the primary tools used today by digital designers to perform signal integrity measurements such as setup/hold times, rise/fall times, and eye margin tests. High performance oscilloscopes are also widely used in university research labs to accurately characterize high-speed digital devices and systems, as well as to perform high energy physics experiments such as pulsed laser testing. In addition, general-purpose oscilloscopes are used extensively by Electrical Engineering students in their various EE analog and digital circuits lab courses. The two key banner specifications that affect an oscilloscope’s signal integrity measurement accuracy are bandwidth and sample rate.

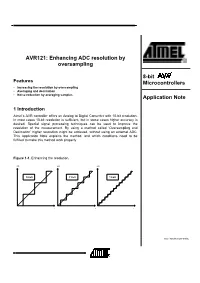

Enhancing ADC Resolution by Oversampling

AVR121: Enhancing ADC resolution by oversampling. 8-bit Microcontrollers Application Note. Features: • Increasing the resolution by oversampling • Averaging and decimation • Noise reduction by averaging samples. 1 Introduction: Atmel’s AVR controller offers an Analog to Digital Converter with 10-bit resolution. In most cases 10-bit resolution is sufficient, but in some cases higher accuracy is desired. Special signal processing techniques can be used to improve the resolution of the measurement. By using a method called ‘Oversampling and Decimation’ higher resolution might be achieved, without using an external ADC. This Application Note explains the method, and which conditions need to be fulfilled to make this method work properly. Figure 1-1. Enhancing the resolution (A/D at 10-bit, 11-bit and 12-bit over time t). Rev. 8003A-AVR-09/05. 2 Theory of operation: Before reading the rest of this Application Note, the reader is encouraged to read Application Note AVR120 - ‘Calibration of the ADC’, and the ADC section in the AVR datasheet. The following examples and numbers are calculated for Single Ended Input in a Free Running Mode. ADC Noise Reduction Mode is not used. This method is also valid in the other modes, though the numbers in the following examples will be different. The ADC’s reference voltage and the ADC’s resolution define the ADC step size. The ADC’s reference voltage, VREF, may be selected to AVCC, an internal 2.56V / 1.1V reference, or a reference voltage at the AREF pin. A lower VREF provides a higher voltage precision but minimizes the dynamic range of the input signal.
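The excerpt names ‘Oversampling and Decimation’ without showing the arithmetic, so here is a minimal sketch. It assumes the commonly cited rule that each extra bit of resolution needs four times as many samples, summed and then right-shifted by the number of extra bits. The names `oversample_and_decimate` and `fake_adc` are hypothetical, and the stubbed ADC stands in for real hardware reads.

```python
from itertools import cycle

def oversample_and_decimate(read_adc, extra_bits):
    """Accumulate 4**extra_bits raw readings, then shift right by extra_bits.

    Assumption: the input carries enough noise to toggle the lowest ADC bit;
    without it, summing identical codes adds no information.
    """
    n_samples = 4 ** extra_bits
    accumulator = 0
    for _ in range(n_samples):
        accumulator += read_adc()
    return accumulator >> extra_bits

# Stand-in for a 10-bit ADC whose input sits halfway between codes 512 and 513.
_codes = cycle([512, 513])

def fake_adc():
    return next(_codes)

result_12bit = oversample_and_decimate(fake_adc, extra_bits=2)
print(result_12bit)  # 2050, i.e. 512.5 on the 10-bit scale
```

The same accumulate-and-shift loop maps directly onto integer-only microcontroller code, since it needs no division or floating point.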

Multirate Signal Processing

Multirate Signal Processing. • Definition: Signal processing which uses more than one sampling rate to perform operations • Upsampling increases the sampling rate • Downsampling reduces the sampling rate • Reference: Digital Signal Processing, DeFatta, Lucas, and Hodgkiss. B. Baas, EEC 281. Multirate Signal Processing: • Advantages of lower sample rates: may require less processing; likely to reduce power dissipation, $P = CV^2 f$, where f is frequently directly proportional to the sample rate; likely to require less storage • Advantages of higher sample rates: may simplify computation; may simplify surrounding analog and RF circuitry • Remember that information up to a frequency f requires a sampling rate of at least 2f. This is the Nyquist sampling rate. Or we can equivalently say the Nyquist sampling rate is ½ the sampling frequency, $f_s$. Upsampling (Upsampling or Interpolation): • For an upsampling by a factor of I, add I-1 zeros between samples in the original sequence • An upsampling by a factor I is commonly written ↑I. For example, upsampling by two: ↑2 • Obviously the number of samples will approximately double after ↑2 • Note that if the sampling frequency doubles after an upsampling by two, the original sample sequence will occur at the same points in time. Original Signal Spectrum: • Example signal with most energy near DC • Notice 5 spectral “bumps” between large signal “bumps”. Upsampled Signal (Time): • One zero is inserted between the original samples for 2x upsampling. Upsampled Signal Spectrum (Frequency): • Spectrum of 2x upsampled signal • Notice the location of the (now somewhat compressed) five “bumps” on each side of π
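The zero-insertion rule from the slides (add I-1 zeros between samples) can be sketched as follows. The function name `upsample` is hypothetical, and the sketch covers only the zero-stuffing step, not the interpolation filter that normally follows it.

```python
def upsample(x, factor):
    """Upsample by an integer factor I by inserting I-1 zeros after each sample."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    y = []
    for sample in x:
        y.append(sample)
        y.extend([0] * (factor - 1))
    return y

original = [1, 2, 3]
doubled = upsample(original, 2)
print(doubled)  # [1, 0, 2, 0, 3, 0]
```

The original samples land at even indices of the output, so they occur at the same points in time once the sampling frequency doubles.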

Introduction Simulation of Signal Averaging

Signal Processing. Naureen Ghani. December 9, 2017. Introduction: Signal processing is used to enhance signal components in noisy measurements. It is especially important in analyzing time-series data in neuroscience. Applications of signal processing include data compression and predictive algorithms. Data analysis techniques are often subdivided into operations in the spatial domain and frequency domain. For one-dimensional time series data, we begin by signal averaging in the spatial domain. Signal averaging is a technique that allows us to uncover small amplitude signals in the noisy data. It makes the following assumptions: 1. Signal and noise are uncorrelated. 2. The timing of the signal is known. 3. A consistent signal component exists when performing repeated measurements. 4. The noise is truly random with zero mean. In reality, all these assumptions may be violated to some degree. This technique is still useful and robust in extracting signals. Simulation of Signal Averaging: To simulate signal averaging, we will generate a measurement x that consists of a signal s and a noise component n. This is repeated over N trials. For each digitized trial, the kth sample point in the jth trial can be written as $x_j(k) = s_j(k) + n_j(k)$. Here is the code to simulate signal averaging:

    % Signal Averaging Simulation
    % Generate 256 noisy trials
    trials = 256;
    noise_trials = randn(256);
    % Generate sine signal
    sz = 1:trials;
    sz = sz/(trials/2);
    S = sin(2*pi*sz);
    % Add noise to 256 sine signals
    for i = 1:trials
        noise_trials(i,:) = noise_trials(i,:) + S;
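Since the MATLAB excerpt stops before the averaging step, the following Python sketch shows one way the simulation could be completed. It is an independent illustration and not the author's code: the helper names, the fixed seed, and the unit noise variance are assumptions. It follows the model $x_j(k) = s_j(k) + n_j(k)$ with zero-mean Gaussian noise.

```python
import math
import random

def make_signal(n_samples):
    """Two sine cycles across the record, mirroring S = sin(2*pi*sz) in the excerpt."""
    return [math.sin(2.0 * math.pi * (k + 1) / (n_samples / 2))
            for k in range(n_samples)]

def average_trials(trials):
    """Average sample-by-sample across trials (each trial is one row)."""
    n_trials = len(trials)
    return [sum(column) / n_trials for column in zip(*trials)]

def rms_error(estimate, reference):
    """Root-mean-square difference between two equal-length sequences."""
    total = sum((e - r) ** 2 for e, r in zip(estimate, reference))
    return math.sqrt(total / len(reference))

rng = random.Random(0)  # fixed seed so the run is repeatable
n_trials, n_samples = 256, 256
signal = make_signal(n_samples)
trials = [[s + rng.gauss(0.0, 1.0) for s in signal] for _ in range(n_trials)]

averaged = average_trials(trials)
single_trial_error = rms_error(trials[0], signal)
averaged_error = rms_error(averaged, signal)
print(single_trial_error, averaged_error)
```

Under the stated assumptions the residual noise should shrink roughly as 1/sqrt(N), so averaging 256 trials is expected to cut the RMS error by a factor of about 16.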

IIR Filters (II)

Lecture 8 - IIR Filters (II). James Barnes ([email protected]), Spring 2009. Colorado State University, Dept of Electrical and Computer Engineering, ECE423. Lecture 8 Outline: ● Introduction ● Digital Filter Design by Analog → Digital Conversion ● (Probably next lecture) "All Digital" Design Algorithms ● (Next lecture) Conversion of Filter Types by Frequency Transformation. Introduction. IIR Filter Design Overview: ● Methods which start from analog design: ✦ Impulse Invariance ✦ Approximation of Derivatives ✦ Bilinear Transform ✦ Matched Z-transform. All are different methods of mapping the s-plane onto the z-plane. ● Methods which are "all digital": ✦ Least-squares ✦ McClellan-Parks. Method: Impulse Invariance for IIR Filters. Impulse Invariance: We start by sampling the impulse response of the analog filter with sampling period $t_0$: $h_a(t) \rightarrow h[n] = h_a(n t_0)$. The Sampling Theorem gives the relation between the Fourier Transforms of the sampled and continuous "signals": $$H(z)\big|_{z=e^{j\omega}} = \frac{1}{t_0} \sum_{k=-\infty}^{\infty} H_a\left(j\frac{\omega}{t_0} - j\frac{2\pi k}{t_0}\right), \quad (1)$$ where $\omega = \Omega t_0 = 2\pi f/f_s$ and f is the analog frequency in Hz.
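A small worked case may make the sampling step concrete. The sketch assumes a first-order analog prototype $H_a(s) = 1/(s + a)$, which is not taken from the lecture; its impulse response $h_a(t) = e^{-at}$ samples to $h[n] = e^{-a n t_0}$, giving $H(z) = 1/(1 - e^{-a t_0} z^{-1})$. The function names and parameter values are hypothetical.

```python
import math

def impulse_invariant_pole(a, t0):
    """Map the analog pole s = -a to the digital pole z = exp(-a * t0)."""
    return math.exp(-a * t0)

def one_pole_filter(pole, x):
    """Run y[n] = x[n] + pole * y[n-1], the recursion for 1 / (1 - pole * z^-1)."""
    y = []
    previous = 0.0
    for sample in x:
        previous = sample + pole * previous
        y.append(previous)
    return y

a = 2.0 * math.pi * 10.0  # analog pole at 10 Hz (assumed value)
t0 = 1e-3                 # sampling period of 1 ms (assumed value)
pole = impulse_invariant_pole(a, t0)

impulse = [1.0] + [0.0] * 7
h_digital = one_pole_filter(pole, impulse)
h_analog_sampled = [math.exp(-a * n * t0) for n in range(8)]
print(max(abs(d - s) for d, s in zip(h_digital, h_analog_sampled)))
```

The digital impulse response reproduces the sampled analog one up to rounding, which is the defining property of the method. The frequency response still carries the $1/t_0$ scaling and the aliased terms shown in equation (1).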

Aliasing, Image Sampling and Reconstruction

https://youtu.be/yr3ngmRuGUc Aliasing, Image Sampling and Reconstruction. Recall: a pixel is a point… • It is NOT a box, disc or teeny wee light • It has no dimension • It occupies no area • It can have a coordinate • More than a point, it is a SAMPLE. Many of the slides are taken from Thomas Funkhouser course slides and the rest from various sources over the web… Image Sampling: • An image is a 2D rectilinear array of samples. Quantization due to limited intensity resolution. Sampling due to limited spatial and temporal resolution. Pixels are infinitely small point samples. Imaging devices area sample: • In video camera the CCD array is an area integral over a pixel. • The eye: photoreceptors. J. Liang, D. R. Williams and D. Miller, "Supernormal vision and high-resolution retinal imaging through adaptive optics," J. Opt. Soc. Am. A 14, 2884-2892 (1997). Aliasing. Good Old Aliasing. Reconstruction artefact. Sampling and Reconstruction (Slide © Rosalee Nerheim-Wolfe). Sources of Error: • Intensity quantization: Not enough intensity resolution • Spatial aliasing: Not enough spatial resolution • Temporal aliasing: Not enough temporal resolution. Aliasing (in general): • In general: Artifacts due to under-sampling or poor reconstruction • Specifically, in graphics: Spatial aliasing, Temporal aliasing. Under-sampling (Figure 14.17 FvDFH). Spatial Aliasing: • Artifacts due to limited spatial resolution. Sampling & Aliasing: • Real world is continuous • The computer world is discrete • Mapping a continuous function to a
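The slides describe aliasing for images; the same under-sampling effect is easier to verify numerically in one dimension. The sketch below is an illustration with hypothetical names and frequencies, not material from the slides. It shows that a 70 Hz cosine sampled at 100 Hz produces the same samples as a 30 Hz cosine.

```python
import math

def alias_frequency(f_hz, fs_hz):
    """Fold a frequency into the range [0, fs/2] seen after sampling at fs."""
    folded = f_hz % fs_hz
    return fs_hz - folded if folded > fs_hz / 2 else folded

def sample_cosine(f_hz, fs_hz, n_samples):
    """Return cos(2*pi*f*n/fs) for n = 0 .. n_samples-1."""
    return [math.cos(2.0 * math.pi * f_hz * n / fs_hz) for n in range(n_samples)]

fs = 100.0
f_true = 70.0                            # above fs/2, so it is under-sampled
f_alias = alias_frequency(f_true, fs)    # folds to 30 Hz

undersampled = sample_cosine(f_true, fs, 20)
impostor = sample_cosine(f_alias, fs, 20)
print(f_alias, max(abs(u - i) for u, i in zip(undersampled, impostor)))
```

Once the samples are taken the two signals cannot be told apart, which is why the fix has to happen before sampling, by raising the rate or by filtering out content above fs/2.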

Signal Approximation Using the Bilinear Transform

SIGNAL APPROXIMATION USING THE BILINEAR TRANSFORM. Archana Venkataraman, Alan V. Oppenheim. MIT Digital Signal Processing Group, 77 Massachusetts Avenue, Cambridge, MA 02139. [email protected], [email protected]. ABSTRACT: This paper explores the approximation properties of a unique basis expansion, which realizes a bilinear frequency warping between a continuous-time signal and its discrete-time representation. We investigate the role that certain parameters and signal characteristics have on these approximations, and we extend the analysis to a windowed representation, which increases the overall time resolution. Approximations derived from the bilinear representation and from Nyquist sampling are compared in the context of a binary detection problem. Simulation results indicate that, for many types of signals, the bilinear approximations achieve a better detection performance. Index Terms: Signal Representations, Approximation Methods, Bilinear Transformations, Signal Detection. 1. The analysis presented in this paper has application in contexts where only a fixed number of DT values can be used to represent a CT signal. For example, in a binary detection problem, one might want to limit the number of digital multiplies used to compute the inner product of two CT signals. Numerical simulations of this scenario suggest that the bilinear expansion achieves a better detection performance than Nyquist sampling for certain signal classes. 2. THE BILINEAR REPRESENTATION: As derived in [1], the network shown in Fig. 1 realizes a one-to-one frequency warping between the Laplace and Z-transform domains according to the bilinear transform. Specifically, the Laplace transform F(s) of the signal f(t) and the Z-transform F(z) of the sequence f[n]
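The one-to-one frequency warping can be made concrete with a short sketch. It assumes the common filter-design form of the bilinear transform, $s = \frac{2}{T}\frac{1 - z^{-1}}{1 + z^{-1}}$, which maps an analog frequency $\Omega$ to $\omega = 2\arctan(\Omega T/2)$. The paper may use a different scaling constant, so the parameter `T` and both function names are assumptions.

```python
import math

def warp_to_digital(omega_analog, T):
    """Analog rad/s -> digital rad/sample under the assumed bilinear map."""
    return 2.0 * math.atan(omega_analog * T / 2.0)

def prewarp_to_analog(omega_digital, T):
    """Inverse map: digital rad/sample -> analog rad/s."""
    return (2.0 / T) * math.tan(omega_digital / 2.0)

T = 1e-3  # assumed scaling parameter, seconds
for omega_a in (100.0, 1000.0, 2000.0, 1e6):
    print(omega_a, warp_to_digital(omega_a, T))

quarter_turn = warp_to_digital(2.0 / T, T)  # Omega = 2/T maps to pi/2
round_trip = prewarp_to_analog(warp_to_digital(1000.0, T), T)
```

The whole analog axis is compressed into $(-\pi, \pi)$, so there is no aliasing, at the cost of a nonlinear frequency scale. Prewarping is the usual way to place a critical frequency exactly.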

Visual and Intuitive Approach to Explaining Digitized Controllers

Paper ID #16436. Visual and Intuitive Approach to Explaining Digitized Controllers. Dr. Daniel Raviv, Florida Atlantic University. Dr. Raviv is a Professor of Computer & Electrical Engineering and Computer Science at Florida Atlantic University. In December 2009 he was named Assistant Provost for Innovation and Entrepreneurship. With more than 25 years of combined experience in the high-tech industry, government and academia, Dr. Raviv developed fundamentally different approaches to "out-of-the-box" thinking and a breakthrough methodology known as "Eight Keys to Innovation." He has been sharing his contributions with professionals in businesses, academia and institutes nationally and internationally. Most recently he was a visiting professor at the University of Maryland (at Mtech, Maryland Technology Enterprise Institute) and at Johns Hopkins University (at the Center for Leadership Education) where he researched and delivered processes for creative & innovative problem solving. For his unique contributions he received the prestigious Distinguished Teacher of the Year Award, the Faculty Talon Award, the University Researcher of the Year AEA Abacus Award, and the President’s Leadership Award. Dr. Raviv has published in the areas of vision-based driverless cars, green innovation, and innovative thinking. He is a co-holder of a Guinness World Record. His new book is titled: "Everyone Loves Speed Bumps, Don’t You? A Guide to Innovative Thinking." Dr. Daniel Raviv received his Ph.D. degree from Case Western Reserve University in 1987 and M.Sc. and B.Sc. degrees from the Technion, Israel Institute of Technology in 1982 and 1980, respectively. Paul Benedict Caballo Reyes, Florida Atlantic University. Paul Benedict Reyes is an Electrical Engineering major in Florida Atlantic University who expects to graduate Spring 2016.