Automated Thrash Testing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Core Elements of Continuous Testing

WHITE PAPER CORE ELEMENTS OF CONTINUOUS TESTING Today’s modern development disciplines -- whether Agile, Continuous Integration (CI) or Continuous Delivery (CD) -- have completely transformed how teams develop and deliver applications. Companies that need to compete in today’s fast-paced digital economy must also transform how they test. Successful teams know the secret sauce to delivering high quality digital experiences fast is continuous testing. This paper will define continuous testing, explain what it is, why it’s important, and the core elements and tactical changes development and QA teams need to make in order to succeed at this emerging practice. TABLE OF CONTENTS 3 What is Continuous Testing? 6 Tactical Engineering Considerations 3 Why Continuous Testing? 7 Benefits of Continuous Testing 4 Core Elements of Continuous Testing WHAT IS CONTINUOUS TESTING? Continuous testing is the practice of executing automated tests throughout the software development cycle. It’s more than just automated testing; it’s applying the right level of automation at each stage in the development process. Unlike legacy testing methods that occur at the end of the development cycle, continuous testing occurs at multiple stages, including development, integration, pre-release, and in production. Continuous testing ensures that bugs are caught and fixed far earlier in the development process, improving overall quality while saving significant time and money. WHY CONTINUOUS TESTING? Continuous testing is a critical requirement for organizations that are shifting left towards CI or CD, both modern development practices that ensure faster time to market. When automated testing is coupled with a CI server, tests can instantly be kicked off with every build, and alerts with passing or failing test results can be delivered directly to the development team in real time. -

Smoke Testing

International Journal of Scientific and Research Publications, Volume 4, Issue 2, February 2014 1 ISSN 2250-3153 Smoke Testing Vinod Kumar Chauhan Quality Assurance (QA), Impetus InfoTech Pvt. Ltd. (India) Abstract- Smoke testing is an end-to-end testing which determine the stability of new build by checking the crucial functionality of the application under test and used as criteria of accepting the new build for detailed testing. Index Terms- Software testing, acceptance testing, agile, build, regression testing, sanity testing I. INTRODUCTION The purpose of this research paper is to give details of smoke testing from the industry practices. II. SMOKE TESTING The purpose of smoke testing is to determine whether the new software build is stable or not so that the build could be used for detailed testing by the QA team and further work by the development team. If the build is stable i.e. the smoke test passes then build could be used by the QA and development team. On the stable build QA team performs functional testing for the newly added features/functionality and then performs regression testing depending upon the situation. But if the build is not stable i.e. the smoke fails then the build is rejected to the development team to fix the build issues and create a new build. Smoke Testing is generally done by the QA team but in certain situations, can be done by the development team. In that case the development team checks the stability of the build and deploy to QA only if the build is stable. In other case, whenever there is new build deployment to the QA environment then the team first performs the smoke test on the build and depending upon the results of smoke the decision to accept or reject the build is taken. -

Effectiveness of Software Testing Techniques in Enterprise: a Case Study

MYKOLAS ROMERIS UNIVERSITY BUSINESS AND MEDIA SCHOOL BRIGITA JAZUKEVIČIŪTĖ (Business Informatics) EFFECTIVENESS OF SOFTWARE TESTING TECHNIQUES IN ENTERPRISE: A CASE STUDY Master Thesis Supervisor – Assoc. Prof. Andrej Vlasenko Vilnius, 2016 CONTENTS INTRODUCTION .................................................................................................................................. 7 1. THE RELATIONSHIP BETWEEN SOFTWARE TESTING AND SOFTWARE QUALITY ASSURANCE ........................................................................................................................................ 11 1.1. Introduction to Software Quality Assurance ......................................................................... 11 1.2. The overview of Software testing fundamentals: Concepts, History, Main principles ......... 20 2. AN OVERVIEW OF SOFTWARE TESTING TECHNIQUES AND THEIR USE IN ENTERPRISES ...................................................................................................................................... 26 2.1. Testing techniques as code analysis ....................................................................................... 26 2.1.1. Static testing ...................................................................................................................... 26 2.1.2. Dynamic testing ................................................................................................................. 28 2.2. Test design based Techniques ............................................................................................... -

Studying the Feasibility and Importance of Software Testing: an Analysis

Dr. S.S.Riaz Ahamed / Internatinal Journal of Engineering Science and Technology Vol.1(3), 2009, 119-128 STUDYING THE FEASIBILITY AND IMPORTANCE OF SOFTWARE TESTING: AN ANALYSIS Dr.S.S.Riaz Ahamed Principal, Sathak Institute of Technology, Ramanathapuram,India. Email:[email protected], [email protected] ABSTRACT Software testing is a critical element of software quality assurance and represents the ultimate review of specification, design and coding. Software testing is the process of testing the functionality and correctness of software by running it. Software testing is usually performed for one of two reasons: defect detection, and reliability estimation. The problem of applying software testing to defect detection is that software can only suggest the presence of flaws, not their absence (unless the testing is exhaustive). The problem of applying software testing to reliability estimation is that the input distribution used for selecting test cases may be flawed. The key to software testing is trying to find the modes of failure - something that requires exhaustively testing the code on all possible inputs. Software Testing, depending on the testing method employed, can be implemented at any time in the development process. Keywords: verification and validation (V & V) 1 INTRODUCTION Testing is a set of activities that could be planned ahead and conducted systematically. The main objective of testing is to find an error by executing a program. The objective of testing is to check whether the designed software meets the customer specification. The Testing should fulfill the following criteria: ¾ Test should begin at the module level and work “outward” toward the integration of the entire computer based system. -

Types of Software Testing

Types of Software Testing We would be glad to have feedback from you. Drop us a line, whether it is a comment, a question, a work proposition or just a hello. You can use either the form below or the contact details on the rightt. Contact details [email protected] +91 811 386 5000 1 Software testing is the way of assessing a software product to distinguish contrasts between given information and expected result. Additionally, to evaluate the characteristic of a product. The testing process evaluates the quality of the software. You know what testing does. No need to explain further. But, are you aware of types of testing. It’s indeed a sea. But before we get to the types, let’s have a look at the standards that needs to be maintained. Standards of Testing The entire test should meet the user prerequisites. Exhaustive testing isn’t conceivable. As we require the ideal quantity of testing in view of the risk evaluation of the application. The entire test to be directed ought to be arranged before executing it. It follows 80/20 rule which expresses that 80% of defects originates from 20% of program parts. Start testing with little parts and extend it to broad components. Software testers know about the different sorts of Software Testing. In this article, we have incorporated majorly all types of software testing which testers, developers, and QA reams more often use in their everyday testing life. Let’s understand them!!! Black box Testing The black box testing is a category of strategy that disregards the interior component of the framework and spotlights on the output created against any input and performance of the system. -

Smoke Testing What Is Smoke Testing?

Smoke Testing What is Smoke Testing? Smoke testing is the initial testing process exercised to check whether the software under test is ready/stable for further testing. The term ‘Smoke Testing’ is came from the hardware testing, in the hardware testing initial pass is done to check if it did not catch the fire or smoked in the initial switch on.Prior to start Smoke testing few test cases need to created once to use for smoke testing. These test cases are executed prior to start actual testing to checkcritical functionalities of the program is working fine. This set of test cases written such a way that all functionality is verified but not in deep. The objective is not to perform exhaustive testing, the tester need check the navigation’s & adding simple things, tester needs to ask simple questions “Can tester able to access software application?”, “Does user navigates from one window to other?”, “Check that the GUI is responsive” etc. Here are graphical representation of Smoke testing & Sanity testing in software testing: Smoke Sanity Testing Diagram The test cases can be executed manually or automated; this depends upon the project requirements. In this types of testing mainly focus on the important functionality of application, tester do not care about detailed testing of each software component, this can be cover in the further testing of application. The Smoke testing is typically executed by testers after every build is received for checking the build is in testable condition. This type of testing is applicable in the Integration Testing, System Testing and Acceptance Testing levels. -

Drive Continuous Delivery with Continuous Testing

I Don’t Believe Your Company Is Agile! Alex Martins CTO Continuous Testing CA Technologies October 2017 1 Why Many Companies Think They’re Agile… They moved some Dev projects from waterfall to agile They’re having daily standups They have a scrum master Product owner is part of the team They are all talking and walking agile… And are talking about Continuous Delivery BUT… QA is STILL a Bottleneck… Even in DevOps Shops A 2017 survey of self- …of delays were occurring at proclaimed DevOps 63% the Test/QA stage of the practitioners found that … cycle. “Where are the main hold-ups in the software production process?” 63% 32% 30% 16% 22% 21% 23% http://www.computing.co.uk/digital_assets/634fe325-aa28-41d5-8676-855b06567fe2/CTG-DevOps-Review-2017.pdf 3 Challenges to Achieving Continuous Delivery & Testing IDEA Requirements 64% of total defect cost originate in the requirements analysis and design phase1. ? ? of developers time is spent 80% of teams experience delays in development and QA Development 50% 3 finding and fixing defects2 due to unavailable dependencies X X Security 70x required manual pen 30% of teams only security scan 50% more time spent on security test scan cost vs. automation10 once per year9 defects in lower-performing teams8 X X X 70% of all 63% of testers admit they 50% of time 79% of teams face prohibitive QA / Testing testing is still can’t test across all the different spent looking for restrictions, time limits or access manual4 devices and OS versions5 test data6 fees on needed 3rd party services3 ! Release 57% are dissatisfied with the time it takes to deploy new features7 ! ! Ave. -

Your Continuous Testing with Spirent's Automation Platforms

STREAMLINE Your Continuous Testing With Spirent’s Automation Platforms DE RELE VE AS LO E P E T A ING T R G ES E T T S N U I O U IN T N E CO STAG Table of Contents Agile 3 What is Continuous Test (CT)? 4 What Hinders CT Implementation? 5 The Chasm 6 The Bridge 7 Spirent Velocity Framework 8 Lab as a Service (LaaS) 9 Test as a Service (TaaS) 10 CT Implementation Best Practices 11 Summary 12 2 of 12 Agile software development practices gained momentum in the late 90s. Agile emphasizes close collaboration between business stakeholders, the development team, and QA. This enabled faster software delivery, better quality and improved customer satisfaction. By employing DevOps practices, the pace and benefits are amplified. 3 of 12 What is Continuous Test (CT)? Continuous Test (CT) enables CT haed Cotuous terato ad Deery ee network testing to be more effectively performed by DEVELOP ITEATE STAGE ELEASE development teams by enabling them to take advantage of the QA team’s knowledge of real world customer use cases and environments. This is known as “shift left” because testing is moved earlier in the development cycle. With “shift left” tests are run as early as possible to accelerate understanding of AUTOMATE TESTS ORCHESTRATE TEST ENVIRONMENTSEXECUTE TESTS EARLIER problem areas in the code and where development attention is required. Why Do You Need CT? The combination of earlier and faster testing shortens time to release while improving quality and customer satisfaction. 4 of 12 What Hinders CT Implementation? Deficient or non existent Insufficient test Lack of test results Inadequate understanding of tools for creating resources for timely analysis tools hinder customer environments and automated tests test execution assessment of test results use cases by developers EW SOFTWAE DEVELOP ITEATE STAGE ELEASE SOFTWAE BILD ELEASE The promise of DevOps can’t be fully realized until Continuous Testing (CT) is factored in. -

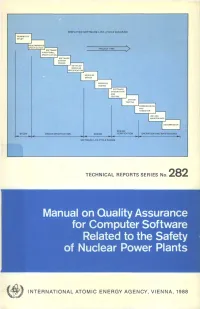

Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants

SIMPLIFIED SOFTWARE LIFE-CYCLE DIAGRAM FEASIBILITY STUDY PROJECT TIME I SOFTWARE P FUNCTIONAL I SPECIFICATION! SOFTWARE SYSTEM DESIGN DETAILED MODULES CECIFICATION MODULES DESIGN SOFTWARE INTEGRATION AND TESTING SYSTEM TESTING ••COMMISSIONING I AND HANDOVER | DECOMMISSION DESIGN DESIGN SPECIFICATION VERIFICATION OPERATION AND MAINTENANCE SOFTWARE LIFE-CYCLE PHASES TECHNICAL REPORTS SERIES No. 282 Manual on Quality Assurance for Computer Software Related to the Safety of Nuclear Power Plants f INTERNATIONAL ATOMIC ENERGY AGENCY, VIENNA, 1988 MANUAL ON QUALITY ASSURANCE FOR COMPUTER SOFTWARE RELATED TO THE SAFETY OF NUCLEAR POWER PLANTS The following States are Members of the International Atomic Energy Agency: AFGHANISTAN GUATEMALA PARAGUAY ALBANIA HAITI PERU ALGERIA HOLY SEE PHILIPPINES ARGENTINA HUNGARY POLAND AUSTRALIA ICELAND PORTUGAL AUSTRIA INDIA QATAR BANGLADESH INDONESIA ROMANIA BELGIUM IRAN, ISLAMIC REPUBLIC OF SAUDI ARABIA BOLIVIA IRAQ SENEGAL BRAZIL IRELAND SIERRA LEONE BULGARIA ISRAEL SINGAPORE BURMA ITALY SOUTH AFRICA BYELORUSSIAN SOVIET JAMAICA SPAIN SOCIALIST REPUBLIC JAPAN SRI LANKA CAMEROON JORDAN SUDAN CANADA KENYA SWEDEN CHILE KOREA, REPUBLIC OF SWITZERLAND CHINA KUWAIT SYRIAN ARAB REPUBLIC COLOMBIA LEBANON THAILAND COSTA RICA LIBERIA TUNISIA COTE D'lVOIRE LIBYAN ARAB JAMAHIRIYA TURKEY CUBA LIECHTENSTEIN UGANDA CYPRUS LUXEMBOURG UKRAINIAN SOVIET SOCIALIST CZECHOSLOVAKIA MADAGASCAR REPUBLIC DEMOCRATIC KAMPUCHEA MALAYSIA UNION OF SOVIET SOCIALIST DEMOCRATIC PEOPLE'S MALI REPUBLICS REPUBLIC OF KOREA MAURITIUS UNITED ARAB -

Opportunities and Open Problems for Static and Dynamic Program Analysis Mark Harman∗, Peter O’Hearn∗ ∗Facebook London and University College London, UK

1 From Start-ups to Scale-ups: Opportunities and Open Problems for Static and Dynamic Program Analysis Mark Harman∗, Peter O’Hearn∗ ∗Facebook London and University College London, UK Abstract—This paper1 describes some of the challenges and research questions that target the most productive intersection opportunities when deploying static and dynamic analysis at we have yet witnessed: that between exciting, intellectually scale, drawing on the authors’ experience with the Infer and challenging science, and real-world deployment impact. Sapienz Technologies at Facebook, each of which started life as a research-led start-up that was subsequently deployed at scale, Many industrialists have perhaps tended to regard it unlikely impacting billions of people worldwide. that much academic work will prove relevant to their most The paper identifies open problems that have yet to receive pressing industrial concerns. On the other hand, it is not significant attention from the scientific community, yet which uncommon for academic and scientific researchers to believe have potential for profound real world impact, formulating these that most of the problems faced by industrialists are either as research questions that, we believe, are ripe for exploration and that would make excellent topics for research projects. boring, tedious or scientifically uninteresting. This sociological phenomenon has led to a great deal of miscommunication between the academic and industrial sectors. I. INTRODUCTION We hope that we can make a small contribution by focusing on the intersection of challenging and interesting scientific How do we transition research on static and dynamic problems with pressing industrial deployment needs. Our aim analysis techniques from the testing and verification research is to move the debate beyond relatively unhelpful observations communities to industrial practice? Many have asked this we have typically encountered in, for example, conference question, and others related to it. -

Michael Bolton in NZ Pinheads, from the Testtoolbox, Kiwi Test Teams & More!

NZTester The Quarterly Magazine for the New Zealand Software Testing Community and Supporters ISSUE 4 JUL - SEP 2013 FREE In this issue: Interview with Bryce Day of Catch Testing at ikeGPS Five Behaviours of a Highly Effective Time Lord Tester On the Road Again Hiring Testers Michael Bolton in NZ Pinheads, From the TestToolbox, Kiwi Test Teams & more! NZTester Magazine Editor: Geoff Horne [email protected] [email protected] ph. 021 634 900 P O Box 48-018 Blockhouse Bay Auckland 0600 New Zealand www.nztester.co.nz Advertising Enquiries: [email protected] Disclaimer: Articles and advertisements contained in NZTester Magazine are published in good faith and although provided by people who are experts in their fields, NZTester make no guarantees or representations of any kind concerning the accuracy or suitability of the information contained within or the suitability of products and services advertised for any and all specific applications and uses. All such information is provided “as is” and with specific disclaimer of any warranties of merchantability, fitness for purpose, title and/or non-infringement. The opinions and writings of all authors and contributors to NZTester are merely an expression of the author’s own thoughts, knowledge or information that they have gathered for publication. NZTester does not endorse such authors, necessarily agree with opinions and views expressed nor represents that writings are accurate or suitable for any purpose whatsoever. As a reader of this magazine you disclaim and hold NZTester, its employees and agents and Geoff Horne, its owner, editor and publisher, harmless of all content contained in this magazine as to its warranty of merchantability, fitness for purpose, title and/or non-infringement. -

Test-Driven Development in Enterprise Integration Projects

Test-Driven Development in Enterprise Integration Projects November 2002 Gregor Hohpe Wendy Istvanick Copyright ThoughtWorks, Inc. 2002 Table of Contents Summary............................................................................................................. 1 Testing Complex Business Applications......................................................... 2 Testing – The Stepchild of the Software Development Lifecycle?............................................... 2 Test-Driven Development............................................................................................................. 2 Effective Testing........................................................................................................................... 3 Testing Frameworks..................................................................................................................... 3 Layered Testing Approach ........................................................................................................... 4 Testing Integration Solutions............................................................................ 5 Anatomy of an Enterprise Integration Solution............................................................................. 5 EAI Testing Challenges................................................................................................................ 6 Functional Testing for Integration Solutions................................................................................. 7 EAI Testing Framework ..................................................................................