Open Cloud Based Distributed Geo-ICT Services, Gujarat Technological University, Ahmedabad

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

US Topo Map Users Guide

US Topo Map Users Guide, April 2018. Based on Adobe Reader XI version 11.0.20 (minor updates and corrections, November 2017). April 2018 updates based on Adobe Reader DC version 2018.009.20050. In 2017, US Topo production systems were redesigned. This system change has few impacts on the design and appearance of US Topo maps, but does affect the geospatial characteristics. The previous version of this Users Guide is frozen in its current form, and applies to US Topo maps created before approximately June 2017 and to all HTMC maps. The document you are now reading applies to US Topo maps created after June 2017, and will be maintained into the future. (Sidebar: The previous version of this Users Guide, which applies to US Topo maps created before June 2017 and to all HTMC maps, is linked from https://nationalmap.gov/ustopo.) US Topo maps are the current generation of USGS topographic maps. The first of these maps were published in 2009. They are modeled on the legacy 7.5-minute series of the mid-20th century, but unlike traditional topographic maps they are mass produced from GIS databases, and are published as PDF documents instead of as paper maps. US Topo maps include base data from The National Map and other sources, including roads, hydrography, contours, boundaries, woodland cover, structures, geographic names, an aerial photo image, Federal land boundaries, and shaded relief. For more information see the project web page at https://nationalmap.gov/ustopo. The Historical Topographic Map Collection (HTMC) includes all editions and all scales of USGS standard topographic quadrangle maps originally published as paper maps in the period 1884-2006.

Geographic Coordinate System

Introduction to GIS Theory. Research Computing Services, Sept. 10, 2021. Dennis Milechin, P.E. Please fill out tutorial evaluation: http://rcs.bu.edu/eval Housekeeping - This session is recorded. - Will be published here: https://www.bu.edu/tech/support/research/training-consulting/access-training-materials/ Link to Presentation: http://rcs.bu.edu/examples/gis/tutorials/gis_theory/intro_to_gis_theory.pdf Outline - What is GIS? - Datum - Geographic Coordinate System - Projections - Common Spatial Data Models - Data Layers - Spatial Data Storage - Example Workflow - Sample GIS Software What is GIS? - Most of us are familiar with tabular data. - Import tabular data into GIS. - Geographic Information System: “A geographic information system (GIS) is a system designed to capture, store, manipulate, analyze, manage, and present spatial or geographic data” https://en.wikipedia.org/wiki/Geographic_information_system - Typical functions of GIS software - Read/write spatial data - Maintain spatial metadata - Apply transformations for projections - Apply symbology based on attribute table - Allow layering of data - Tools to query/filter data - Spatial analysis tools - Exporting tools for printing maps or publishing web maps Datum - The earth is generally round, but is not a perfect sphere or very smooth (e.g.

GDAL 2.1 What's New ?

GDAL 2.1 What’s new? Even Rouault - SPATIALYS, Dmitry Baryshnikov - NextGIS, Ari Jolma. August 25th 2016. Plan ● Introduction to GDAL/OGR ● Community ● GDAL 2.1: new features ● Future directions GDAL/OGR: Introduction ● GDAL? Geospatial Data Abstraction Library. The Swiss army knife for geospatial. ● Raster (GDAL) and Vector (OGR) ● Read/write access to more than 200 (mainly) geospatial formats and protocols. ● Widely used (FOSS & proprietary): GRASS, MapServer, Mapnik, QGIS, gvSIG, PostGIS, OTB, SAGA, FME, ArcGIS, Google Earth… (> 100 http://trac.osgeo.org/gdal/wiki/SoftwareUsingGdal) ● Started in 1998 by Frank Warmerdam ● A project of OSGeo since 2008 ● MIT/X Open Source license (permissive) ● > 1M lines of code for library + utilities, ... ● > 150K lines of test in Python Main features ● Format support through drivers implementing a common interface ● Support datasets of arbitrary size with limited resources ● C++ library with C API ● Multi OS: Linux, Windows, MacOSX/iOS, Android, ... ● Language bindings: Python, Perl, C#, Java, ... ● Utilities for translation, reprojection, subsetting, mosaicing, interpolating, indexing, tiling… ● Can work with local, remote (/vsicurl), compressed (/vsizip/, /vsigzip/, /vsitar), in-memory (/vsimem) files General architecture: Utilities: gdal_translate, ogr2ogr, ... C API, Python, Java, Perl, C#. Raster core. Vector core. Driver interface (> 200): raster, vector or hybrid drivers. CPL: Multi-OS portability layer. Raster Features ● Efficient support for large images (tiling, overviews) ● Several georeferencing methods: affine transform, ground control points, RPC ● Caching of blocks of pixels ● Optimized reprojection engine ● Algorithms: rasterization, vectorization (polygon and contour generation), null pixel interpolation, filters Raster formats ● Images: JPEG, PNG, GIF, WebP, BPG ..

GDAL 2.0 Overview

GDAL 2.0 overview. Even Rouault, SPATIALYS. July 15th 2015. Plan ● Introduction to GDAL/OGR ● Community ● GDAL 2.0 features ● GDAL 2.1 preview ● Potential future directions ● Discussion About me ● Contributor to the GDAL/OGR project since 2007, chair of the PSC since 2015. ● Contributor to MapServer ● Co-maintainer: libtiff, libgeotiff, PROJ.4 ● Founder of Spatialys, consulting company around geospatial open source technologies, with a strong focus on GDAL and MapServer GDAL/OGR Introduction ● Geospatial Data Abstraction Library ● Raster (GDAL) and Vector (OGR) ● Read/write access to more than 200 geospatial formats and protocols ● Widely used (FOSS & proprietary): GRASS, MapServer, Mapnik, QGIS, PostGIS, OTB, SAGA, FME, ArcGIS, Google Earth… (93 referenced at http://trac.osgeo.org/gdal/wiki/SoftwareUsingGdal) ● Started in 1998 by Frank Warmerdam ● A project of OSGeo since 2008 ● MIT/X Open Source license (permissive) ● 1.2 M physical lines of code (175 kLOC from external libraries) for library, utilities and language bindings ● 166 kLOC for Python autotest suite. 62% line coverage Main features ● Support datasets of arbitrary size with limited resources ● C++ library with C API ● Multi OS: Linux, Windows, MacOSX/iOS, Android, ... ● Language bindings: Python, Perl, C#, Java, ... ● OGC WKT coordinate systems, and PROJ.4 as reprojection engine ● Format support through drivers implementing a common interface ● Utilities for translation, reprojection, subsetting, mosaicing, tiling…

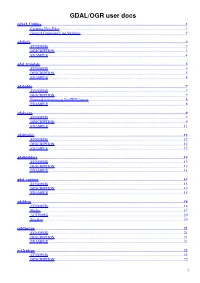

GDAL/OGR User Docs GDAL Utilities

GDAL/OGR user docs. Contents: GDAL Utilities (Creating New Files; General Command Line Switches); gdalinfo (SYNOPSIS; DESCRIPTION; EXAMPLE); gdal_translate (SYNOPSIS; DESCRIPTION)

“Geospatial Data Management”, Chapter 5 from the Book Geographic Information System Basics

This is “Geospatial Data Management”, chapter 5 from the book Geographic Information System Basics (v. 1.0). This book is licensed under a Creative Commons by-nc-sa 3.0 (http://creativecommons.org/licenses/by-nc-sa/3.0/) license. See the license for more details, but that basically means you can share this book as long as you credit the author (but see below), don't make money from it, and do make it available to everyone else under the same terms. This content was accessible as of December 29, 2012, and it was downloaded then by Andy Schmitz (http://lardbucket.org) in an effort to preserve the availability of this book. Normally, the author and publisher would be credited here. However, the publisher has asked for the customary Creative Commons attribution to the original publisher, authors, title, and book URI to be removed. Additionally, per the publisher's request, their name has been removed in some passages. More information is available on this project's attribution page (http://2012books.lardbucket.org/attribution.html?utm_source=header). For more information on the source of this book, or why it is available for free, please see the project's home page (http://2012books.lardbucket.org/). You can browse or download additional books there. Chapter 5: Geospatial Data Management. Every user of geospatial data has experienced the challenge of obtaining, organizing, storing, sharing, and visualizing their data. The variety of formats and data structures, as well as the disparate quality, of geospatial data can result in a dizzying accumulation of useful and useless pieces of spatially explicit information that must be poked, prodded, and wrangled into a single, unified dataset.

Flood Mitigation Grant Applications Geospatial File Eligibility Criteria Job

Flood Mitigation Assistance Grant Program Job Aid. NEW GEOSPATIAL FILE ELIGIBILITY CRITERIA IN FLOOD MITIGATION GRANT APPLICATIONS. The Flood Mitigation Assistance (FMA) program makes federal funds available to state, local, tribal, and territorial governments to strengthen national preparedness by reducing or eliminating the risk of repetitive flood damage to structures insured under the National Flood Insurance Program (NFIP). To facilitate and aid this objective, the program has updated its eligibility criteria to include a geospatial file/map requirement. This job aid explains acceptable file types and software that can be used, as well as the creation and preparation of a geospatial file. Applicants must submit a map and associated geospatial file(s) highlighting the proposed project’s benefitting area and footprint. Geospatial files enable FEMA to quickly assess a project’s precise location and evaluate the potential benefit to flood insurance policy holders. Only geospatial files that identify the benefitting area and footprint are required; no other geospatial information should be included in order to limit the file size. (Sidebar, Geospatial File Requirements: The proposed benefitting area is defined by the project application and incorporates properties that might reasonably benefit from the proposed flood mitigation activities. The project footprint is the physical area in which mitigation work will be conducted.) ACCEPTABLE GEOSPATIAL FILE TYPES The file types listed here represent some of the acceptable geospatial file types that can

US Topo Map and Historical Topographic Map Users Guide

US Topo Map and Historical Topographic Map Users Guide, May 2016. Based on Adobe Reader XI version 11.0.15 and TerraGo Toolbar version 6.8.05186. June 2017 note: In 2017, US Topo production systems were redesigned. This system change has few impacts on the design and appearance of US Topo maps, but does affect the geospatial characteristics. This version of the Users Guide is frozen in its current form, and applies to US Topo maps created before approximately June 2017 and to all HTMC maps. US Topo maps published after June 2017 have a new version of this guide, which is posted at https://nationalmap.gov/ustopo. This guide explains how to access and use two types of USGS digital topographic maps: US Topo maps and USGS historical topographic maps. US Topo maps are the current generation of USGS topographic maps. The first of these maps were published in 2009. They are modeled on the legacy 7.5-minute series of the mid-20th century, but unlike traditional topographic maps they are mass produced from GIS databases, and are published as PDF documents instead of as paper maps. US Topo maps include base data from The National Map and other sources, including roads, hydrography, contours, boundaries, woodland cover, structures, geographic names, an aerial photo image, Federal land boundaries, and shaded relief. More information about this series is available at http://nationalmap.gov/ustopo. The Historical Topographic Map Collection (HTMC) includes all editions and all scales of USGS standard topographic quadrangle maps originally published as paper maps in the period 1884-2006.

Metadata Practices to Support Discovery in Geospatial Platform/Data.gov

Metadata practices to support discovery in Geospatial Platform/data.gov. Metadata collection has been ongoing for many years now in the geospatial field, with the publication of the initial Content Standard for Digital Geospatial Metadata (CSDGM) in 1994 and its Version 2 in 1998, and globally with the international metadata standard ISO 19115 in 2003 and its XML form, ISO TS19139, in 2007. The primary objective of these standards is to provide contextual documentation of geospatial resources (data, services, documents, and applications) that enables fitness-for-use evaluations and facilitates direct online access to the described resources. The Geospatial One-Stop and the Geospatial Platform successor catalog, executed in partnership with data.gov, have as a priority the documentation of online accessible data and information products, specifically the location of data sets and applications for download and Web service URLs for real-time visualization and data access or processing. This document identifies best practices of metadata creation and publishing that will lead to more successful propagation of metadata and ease of resource discovery and access through cataloguing systems like data.gov and the Geospatial Platform. URLs to metadata should be to online resources. Resources (data, services, applications) that are described in either CSDGM or ISO metadata must include URLs that take a user to the online resource. This is a baseline expectation of the data.gov environment and one we must follow in the shared catalog. Links should provide direct access to the resource wherever possible. Metadata records that only include references to websites or HTML pages where users must re-initiate search might not be harvested into the new catalog.

Supported Data Formats in ERDAS IMAGINE Raster Formats

Supported Data Formats in ERDAS IMAGINE. Legend ⚫ IMAGINE Essentials ◆ IMAGINE Additional Modules IMAGINE Advantage. Raster Formats. Table header: Direct Write Direct Write Data Format Direct Read Import Export (CREATABLE) (SAVEABLE). Entries: ADRG Image (.img) ⚫ ⚫; ADRG Legend (.igg) ⚫ ⚫; ADRG Overview (.ovr) ⚫ ⚫; ADRI ⚫; Alaska SAR Facility (.L) ⚫ ⚫; ALOS AVNIR-2 JAXA CEOS ⚫ ⚫; ALOS PALSAR ERSDAC CEOS ⚫ ⚫; ALOS PRISM JAXA CEOS ⚫ ⚫; ALOS PRISM JAXA CEOS IMG ⚫ ⚫; ArcInfo & Space Imaging BIL, BIP & BSQ ⚫; ASCII raster (USGS) ⚫ ⚫; ASRP ⚫; ASRP or USRP (.img) ⚫ ⚫; ASTER EOS-HDF ⚫ ⚫; AVHRR (NOAA, NOAA KLM, Dundee, Sharp) ⚫; AVIRIS ⚫ ⚫; Binary (Generic BIL, BIP, BSQ and Tiled) ⚫ ⚫; Bitmap (.bmp) ⚫ ⚫; CADRG, CIB (RPF) ⚫ ⚫; Capella (prototype GEO) ⚫ ⚫; Cloud Optimized GeoTIFF (COG) ⚫; COSMO-SkyMed ⚫ ⚫; Daedalus (AMS and ABS sensors) ⚫; Defense Gridded Elevation Data (DGED) ⚫ ⚫; DEM (SDTS) (.ddf) ⚫ ⚫; DEM (USGS) (.dem) ⚫ ⚫; Maxar DigitalGlobe TIL (.til) (e.g. WorldView, QuickBird, Legion, etc) ⚫ ⚫; Digital Orthophoto Quadrangle (USGS DOQ) ⚫ ⚫; Digital Orthophoto Quadrangle Keyword Header (USGS DOQ) ⚫ ⚫; Digital Orthophoto Quarter Quadrangle (USGS DOQQ) ⚫; Digital Point Positioning Database (DPPDB) ⚫; DTED (.dt2, .dt1, .dt0) ⚫ ⚫ ⚫; ECRG, ECIB ⚫ ⚫; ECRG, ECIB TOC (.xml) ⚫; ENVI / AISA (.hdr) ⚫ ⚫; ENVISAT ASAR ⚫ ⚫; Enhanced Compressed Wavelet (.ecw) ⚫ ⚫ ⚫ ⚫ ⚫; Enhanced Compressed Wavelet Protocol