Full Gradient DQN Reinforcement Learning: a Provably Convergent Scheme

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

White Knight Review Chess E-Magazine January/February - 2012 Table of Contents

Chess E-Magazine Interactive E-Magazine Volume 3 • Issue 1 January/February 2012 Chess Gambits Chess Gambits The Immortal Game Canada and Chess Anderssen- Vs. -Kieseritzky Bill Wall’s Top 10 Chess software programs C Seraphim Press White Knight Review Chess E-Magazine January/February - 2012 Table of Contents Editorial~ “My Move” 4 contents Feature~ Chess and Canada 5 Article~ Bill Wall’s Top 10 Software Programs 9 INTERACTIVE CONTENT ________________ Feature~ The Incomparable Kasparov 10 • Click on title in Table of Contents Article~ Chess Variants 17 to move directly to Unorthodox Chess Variations page. • Click on “White Feature~ Proof Games 21 Knight Review” on the top of each page to return to ARTICLE~ The Immortal Game 22 Table of Contents. Anderssen Vrs. Kieseritzky • Click on red type to continue to next page ARTICLE~ News Around the World 24 • Click on ads to go to their websites BOOK REVIEW~ Kasparov on Kasparov Pt. 1 25 • Click on email to Pt.One, 1973-1985 open up email program Feature~ Chess Gambits 26 • Click up URLs to go to websites. ANNOTATED GAME~ Bareev Vs. Kasparov 30 COMMENTARY~ “Ask Bill” 31 White Knight Review January/February 2012 White Knight Review January/February 2012 Feature My Move Editorial - Jerry Wall [email protected] Well it has been over a year now since we started this publication. It is not easy putting together a 32 page magazine on chess White Knight every couple of months but it certainly has been rewarding (maybe not so Review much financially but then that really never was Chess E-Magazine the goal). -

2.) COMMUNITY COLLEGE: Maricopa Co

GENERAL STUDIES COURSE PROPOSAL COVER FORM (ONE COURSE PER FORM) 1.) DATE: 3/26/19 2.) COMMUNITY COLLEGE: Maricopa Co. Comm. College District 3.) PROPOSED COURSE: Prefix: GST Number: 202 Title: Games, Culture, and Aesthetics Credits: 3 CROSS LISTED WITH: Prefix: Number: ; Prefix: Number: ; Prefix: Number: ; Prefix: Number: ; Prefix: Number: ; Prefix: Number: . 4.) COMMUNITY COLLEGE INITIATOR: KEITH ANDERSON PHONE: 480-654-7300 EMAIL: [email protected] ELIGIBILITY: Courses must have a current Course Equivalency Guide (CEG) evaluation. Courses evaluated as NT (non- transferable are not eligible for the General Studies Program. MANDATORY REVIEW: The above specified course is undergoing Mandatory Review for the following Core or Awareness Area (only one area is permitted; if a course meets more than one Core or Awareness Area, please submit a separate Mandatory Review Cover Form for each Area). POLICY: The General Studies Council (GSC) Policies and Procedures requires the review of previously approved community college courses every five years, to verify that they continue to meet the requirements of Core or Awareness Areas already assigned to these courses. This review is also necessary as the General Studies program evolves. AREA(S) PROPOSED COURSE WILL SERVE: A course may be proposed for more than one core or awareness area. Although a course may satisfy a core area requirement and an awareness area requirement concurrently, a course may not be used to satisfy requirements in two core or awareness areas simultaneously, even if approved for those areas. With departmental consent, an approved General Studies course may be counted toward both the General Studies requirements and the major program of study. -

1999/1 Layout

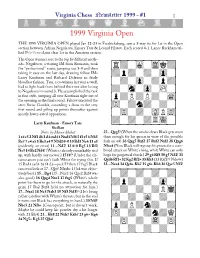

Virginia Chess Newsletter 1999 - #1 1 1999 Virginia Open THE 1999 VIRGINIA OPEN played Jan 22-24 in Fredricksburg, saw a 3-way tie for 1st in the Open section between Adrian Negulescu, Emory Tate & Leonid Filatov. Each scored 4-1. Lance Rackham tal- 1 1 lied 5 ⁄2- ⁄2 to claim clear 1st in the Amateur section. The Open winners rose to the top by different meth- ‹óóóóóóóó‹ ods. Negulescu, a visiting IM from Rumania, took õÏ›‹Ò‹ÌÙ›ú the “professional” route, jumping out 3-0 and then taking it easy on the last day, drawing fellow IMs õ›‡›‹›‹·‹ú Larry Kaufman and Richard Delaune in fairly bloodless fashion. Tate, a co-winner last year as well, õ‹›‹·‹›‡›ú had to fight back from behind this time after losing to Negulescu in round 3. He accomplished the task õ›‹Â‹·‹„‹ú in fine style, jumping all over Kaufman right out of õ‡›fi›fi›‹Ôú the opening in the final round. Filatov executed the semi Swiss Gambit, conceding a draw in the very õfl‹›‰›‹›‹ú first round and piling up points thereafter against mostly lower-rated opposition. õ‹fl‹›‹Áfiflú õ›‹›‹›ÍÛ‹ú Larry Kaufman - Emory Tate Sicilian ‹ìììììììì‹ Notes by Macon Shibut 25...Qxg5! (When the smoke clears Black gets more 1 e4 c5 2 Nf3 d6 3 d4 cxd4 4 Nxd4 Nf6 5 f3 e5 6 Nb3 than enough for his queen in view of the possible Be7 7 c4 a5 8 Be3 a4 9 N3d2 0-0 10 Bd3 Nc6 11 a3 fork on e4) 26 Qxg5 Rxf2 27 Rxf2 Nxf2 28 Qxg6 (evidently an error) 11...Nd7! 12 0-0 Bg5 13 Bf2 Nfxe4 (Now Black will regroup his pieces for a com- Nc5 14 Bc2 Nd4! (White is already remarkably tied bined attack on White’s king, while White can only up, with hardly any moves.) 15 f4!? (Under the cir- hope for perpetual check.) 29 g4 Rf8 30 g5 Nd2! 31 cumstances you can’t fault White for trying this. -

Technology Innovation and the Future of Air Force Intelligence Analysis

C O R P O R A T I O N LANCE MENTHE, DAHLIA ANNE GOLDFELD, ABBIE TINGSTAD, SHERRILL LINGEL, EDWARD GEIST, DONALD BRUNK, AMANDA WICKER, SARAH SOLIMAN, BALYS GINTAUTAS, ANNE STICKELLS, AMADO CORDOVA Technology Innovation and the Future of Air Force Intelligence Analysis Volume 2, Technical Analysis and Supporting Material RR-A341-2_cover.indd All Pages 2/8/21 12:20 PM For more information on this publication, visit www.rand.org/t/RRA341-2 Library of Congress Cataloging-in-Publication Data is available for this publication. ISBN: 978-1-9774-0633-0 Published by the RAND Corporation, Santa Monica, Calif. © Copyright 2021 RAND Corporation R® is a registered trademark. Cover: U.S. Marine Corps photo by Cpl. William Chockey; faraktinov, Adobe Stock. Limited Print and Electronic Distribution Rights This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. RAND is nonprofit, nonpartisan, and committed to the public interest. RAND’s publications do not necessarily reflect the opinions of its research clients and sponsors. -

Reggie Workman Working Man

APRIL 2018—ISSUE 192 YOUR FREE GUIDE TO THE NYC JAZZ SCENE NYCJAZZRECORD.COM REGGIE WORKMAN WORKING MAN JIM JONNY RICHARD EDDIE McNEELY KING WYANDS JEFFERSON Managing Editor: Laurence Donohue-Greene Editorial Director & Production Manager: Andrey Henkin To Contact: The New York City Jazz Record 66 Mt. Airy Road East APRIL 2018—ISSUE 192 Croton-on-Hudson, NY 10520 United States Phone/Fax: 212-568-9628 New York@Night 4 Laurence Donohue-Greene: Interview : JIM Mcneely 6 by ken dryden [email protected] Andrey Henkin: [email protected] Artist Feature : JONNY KING 7 by donald elfman General Inquiries: [email protected] ON The COver : REGGIE WORKMAN 8 by john pietaro Advertising: [email protected] Encore : RICHARD WYANDS by marilyn lester Calendar: 10 [email protected] VOXNews: Lest WE Forget : EDDIE JEFFERSON 10 by ori dagan [email protected] LAbel Spotlight : MINUS ZERO by george grella US Subscription rates: 12 issues, $40 11 Canada Subscription rates: 12 issues, $45 International Subscription rates: 12 issues, $50 For subscription assistance, send check, cash or vOXNEWS 11 by suzanne lorge money order to the address above or email [email protected] Obituaries by andrey henkin Staff Writers 12 David R. Adler, Clifford Allen, Duck Baker, Stuart Broomer, FESTIvAL REPORT Robert Bush, Thomas Conrad, 13 Ken Dryden, Donald Elfman, Phil Freeman, Kurt Gottschalk, Tom Greenland, Anders Griffen, CD REviews 14 Tyran Grillo, Alex Henderson, Robert Iannapollo, Matthew Kassel, Marilyn Lester, Suzanne -

Dragon Magazine #127

CONTENTS Magazine Issue #127 Vol. XII, No. 6 SPECIAL ATTRACTIONS November 1987 15 Cal1 to Arms: The fighters world, from berserkers to battlefields. 16 Lords & Legends Kyle Gray Four famous warriors from European myth and legend. 22 No Quarter! Arn Ashleigh Parker Publisher Mike Cook Creative combat for fighters with style. 26 Bazaar of the Bizarre The readers Editor A magical treasury of bows and bolts for arcane archers. Roger E. Moore 32 Two Hands Are Better Than One Donald D. Miller Assistant editor Fiction editor When a two-handed sword becomes a three-handed sword, and other handy facts. Robin Jenkins Patrick L. Price 36 In Defense of the Shield Tim Merrett Editorial assistants A good shield might be the best friend youll ever have. Eileen Lucas Barbara G. Young 38 Fighting for Keeps Roy G. Schelper Debbie Poutsch Georgia Moore Your new castle is full of orcs? Its BATTLESYSTEM supplement time! Art director 46 In the Heat of the Fight Sean Holland Roger Raupp Berserkers, ambushes, fanatics, tribal champions all in a days work. Production Staff 48 A Menagerie of Martial Arts Len Carpenter Marilyn Favaro Gloria Habriga Twenty all-new martial-arts styles for Oriental Adventures. Colleen OMalley OTHER FEATURES Subscriptions Advertising 8 Role-playing Reviews Ken Rolston Pat Schulz Mary Parkinson Game designers rush in where deities fear to tread. Creative editors 56 The Ecology of the Yeti Thomas Kiefer Ed Greenwood Jeff Grubb A particularly chilling encounter on the high glaciers. 62 Arcane Lore Arthur Collins Selections from a lost tome on lifes little illusions. -

Dragon Magazine #100

D RAGON 1 22 45 SPECIAL ATTRACTIONS In the center: SAGA OF OLD CITY Poster Art by Clyde Caldwell, soon to be the cover of an exciting new novel 4 5 THE CITY BEYOND THE GATE Robert Schroeck The longest, and perhaps strongest, AD&D® adventure weve ever done 2 2 At Moonset Blackcat Comes Gary Gygax 34 Gary gives us a glimpse of Gord, with lots more to come Publisher Mike Cook 3 4 DRAGONCHESS Gary Gygax Rules for a fantastic new version of an old game Editor-in-Chief Kim Mohan Editorial staff OTHER FEATURES Patrick Lucien Price Roger Moore 6 Score one for Sabratact Forest Baker Graphics and production Role-playing moves onto the battlefield Roger Raupp Colleen OMalley David C. Sutherland III 9 All about the druid/ranger Frank Mentzer Heres how to get around the alignment problem Subscriptions Georgia Moore 12 Pages from the Mages V Ed Greenwood Advertising Another excursion into Elminsters memory Patricia Campbell Contributing editors 86 The chance of a lifetime Doug Niles Ed Greenwood Reminiscences from the BATTLESYSTEM Supplement designer . Katharine Kerr 96 From first draft to last gasp Michael Dobson This issues contributing artists . followed by the recollections of an out-of-breath editor Dennis Kauth Roger Raupp Jim Roslof 100 Compressor Michael Selinker Marvel Bullpen An appropriate crossword puzzle for our centennial issue Dave Trampier Jeff Marsh Tony Moseley DEPARTMENTS Larry Elmore 3 Letters 101 World Gamers Guide 109 Dragonmirth 10 The forum 102 Convention calendar 110 Snarfquest 69 The ARES Section 107 Wormy COVER Its fitting that an issue filled with things weve never done before should start off with a cover thats unlike any of the ninety-nine that preceded it. -

Languages of New York State Is Designed As a Resource for All Education Professionals, but with Particular Consideration to Those Who Work with Bilingual1 Students

TTHE LLANGUAGES OF NNEW YYORK SSTATE:: A CUNY-NYSIEB GUIDE FOR EDUCATORS LUISANGELYN MOLINA, GRADE 9 ALEXANDER FFUNK This guide was developed by CUNY-NYSIEB, a collaborative project of the Research Institute for the Study of Language in Urban Society (RISLUS) and the Ph.D. Program in Urban Education at the Graduate Center, The City University of New York, and funded by the New York State Education Department. The guide was written under the direction of CUNY-NYSIEB's Project Director, Nelson Flores, and the Principal Investigators of the project: Ricardo Otheguy, Ofelia García and Kate Menken. For more information about CUNY-NYSIEB, visit www.cuny-nysieb.org. Published in 2012 by CUNY-NYSIEB, The Graduate Center, The City University of New York, 365 Fifth Avenue, NY, NY 10016. [email protected]. ABOUT THE AUTHOR Alexander Funk has a Bachelor of Arts in music and English from Yale University, and is a doctoral student in linguistics at the CUNY Graduate Center, where his theoretical research focuses on the semantics and syntax of a phenomenon known as ‘non-intersective modification.’ He has taught for several years in the Department of English at Hunter College and the Department of Linguistics and Communications Disorders at Queens College, and has served on the research staff for the Long-Term English Language Learner Project headed by Kate Menken, as well as on the development team for CUNY’s nascent Institute for Language Education in Transcultural Context. Prior to his graduate studies, Mr. Funk worked for nearly a decade in education: as an ESL instructor and teacher trainer in New York City, and as a gym, math and English teacher in Barcelona. -

Foundations of Hybrid and Embedded Systems and Software

FINAL REPORT FOUNDATIONS OF HYBRID AND EMBEDDED SYSTEMS AND SOFTWARE NSF/ITR PROJECT – AWARD NUMBER: CCR-0225610 UNIVERSITY OF CALIFORNIA, BERKELEY November 26, 2010 PERIOD OF PERFORMANCE COVERED: November 14, 2002 – August 31, 2010 1 Contents 1 Participants 3 1.1 People ....................................... 3 1.2 PartnerOrganizations: . ... 9 1.3 Collaborators:.................................. 9 2 Activities and Findings 14 2.1 ProjectActivities ............................... .. 14 2.1.1 ITREvents ................................ 16 2.1.2 HybridSystemsTheory . .. .. 16 2.1.3 DeepCompositionality . 16 2.1.4 RobustHybridSystems . .. .. 16 2.1.5 Hybrid Systems and Systems Biology . .. 16 2.2 ProjectFindings................................. 16 3 Outreach 16 3.1 Project Training and Development . .... 16 3.2 OutreachActivities .............................. .. 16 3.2.1 Curriculum Development for Modern Systems Science (MSS)..... 16 3.2.2 Undergrad Course Insertion and Transfer . ..... 16 3.2.3 GraduateCourses............................. 16 4 Publications and Products 16 4.1 Technicalreports ................................ 16 4.2 PhDtheses .................................... 16 4.3 PhDtheses .................................... 16 4.4 Conferencepapers................................ 16 4.5 Books ....................................... 16 4.6 Journalarticles ................................. 16 4.6.1 The 2009-2010 Chess seminar series . ... 16 4.6.2 WorkshopsandInvitedTalks . 16 4.6.3 GeneralDissemination . 16 4.7 OtherSpecificProducts -

Ludic Dysnarrativa: How Can Fictional Inconsistency in Games Be Reduced? by Rory Keir Summerley

Ludic Dysnarrativa: How Can Fictional Inconsistency In Games Be Reduced? by Rory Keir Summerley A Thesis submitted in partial fulfilment of the requirements for the Degree of Doctor of Philosophy (PhD) at the University of the Arts London In Collaboration with Falmouth University December 2017 Abstract The experience of fictional inconsistencies in games is surprisingly common. The goal was to determine if solutions exist for this problem and if there are inherent limitations to games as a medium that make storytelling uncommonly difficult. Termed ‘ludic dysnarrativa’, this phenomenon can cause a loss of immersion in the fictional world of a game and lead to greater difficulty in intuitively understanding a game’s rules. Through close textual analysis of The Stanley Parable and other games, common trends are identified that lead a player to experience dysnarrativa. Contemporary cognitive theory is examined alongside how other media deal with fictional inconsistency to develop a model of how information (fictional and otherwise) is structured in media generally. After determining that gaps in information are largely the cause of a player feeling dysnarrativa, it is proposed that a game must encourage imaginative acts from the player to prevent these gaps being perceived. Thus a property of games, termed ‘imaginability’, was determined desirable for fictionally consistent game worlds. Many specific case studies are cited to refine a list of principles that serve as guidelines for achieving imaginability. To further refine these models and principles, multiplayer games such as Dungeons and Dragons were analysed specifically for how multiple players navigate fictional inconsistencies within them. While they operate very differently to most single-player games in terms of their fiction, multiplayer games still provide useful clarifications and principles for reducing fictional inconsistencies in all games. -

The Classified Encyclopedia of Chess Variants

THE CLASSIFIED ENCYCLOPEDIA OF CHESS VARIANTS I once read a story about the discovery of a strange tribe somewhere in the Amazon basin. An eminent anthropologist recalls that there was some evidence that a space ship from Mars had landed in the area a millenium or two earlier. ‘Good heavens,’ exclaims the narrator, are you suggesting that this tribe are the descendants of Martians?’ ‘Certainly not,’ snaps the learned man, ‘they are the original Earth-people — it is we who are the Martians.’ Reflect that chess is but an imperfect variant of a game that was itself a variant of a germinal game whose origins lie somewhere in the darkness of time. The Classified Encyclopedia of Chess Variants D. B. Pritchard The second edition of The Encyclopedia of Chess Variants completed and edited by John Beasley Copyright © the estate of David Pritchard 2007 Published by John Beasley 7 St James Road Harpenden Herts AL5 4NX GB - England ISBN 978-0-9555168-0-1 Typeset by John Beasley Originally printed in Great Britain by Biddles Ltd, King’s Lynn Contents Introduction to the second edition 13 Author’s acknowledgements 16 Editor’s acknowledgements 17 Warning regarding proprietary games 18 Part 1 Games using an ordinary board and men 19 1 Two or more moves at a time 21 1.1 Two moves at a turn, intermediate check observed 21 1.2 Two moves at a turn, intermediate check ignored 24 1.3 Two moves against one 25 1.4 Three to ten moves at a turn 26 1.5 One more move each time 28 1.6 Every man can move 32 1.7 Other kinds of multiple movement 32 2 Games with concealed -

A Curriculum Guide for Reading Mentors TABLE of CONTENTS

THE SOURCE: A Curriculum Guide for Reading Mentors TABLE OF CONTENTS PART 1 Ideas for Building Readers Chapter One How Do Children Become Readers? Chapter Two What Research Tells Us About Struggling Readers Chapter Three Meeting the Needs of Struggling Readers Chapter Four Phonemic Awareness: The Foundation for Phonics Skills Chapter Five Phonics and Decoding Skills Chapter Six Building Fluency Chapter Seven Word Building for Increasing Vocabulary Chapter Eight Comprehension: The Reason for Learning to Read Chapter Nine Finding Appropriate Reading Materials Chapter Ten Individual Assessments PART 2 PLANNING Resources for Intervention Sessions Tutoring Session LESSON 1-30 Routines Individual Nonsense Word Test Assessment Sight-Word Proficiency Assessment Forms Oral Reading Fluency Passage Mentoring Student Survey Lesson Plans Poems: Eighteen Flavors and Sarah Cynthia Sylvia Stout Independent Reading Chart Student Book List Form Reciprocal Teaching Chart Word Web Phonogram Speed Drill Blank Speed Drill Syllable Bingo Word Search Racetrack Game Spin It! BIBLIOGRAPHY THE SOURCE: A Curriculum Guide for Reading Mentors 3 Part 1 IDEAS FOR BUILDING READERS CHAPTER ONE HOW DO CHILDREN BECOME READERS? “At one magical instant in your early childhood, the page of a book --- that string of confused, alien ciphers --- shivered into meaning. Words spoke to you, gave up their secrets; at that moment, whole universes opened. You became, irrevocably, a reader.” All children deserve the promise that books hold. Whether they transport us to another world, make us laugh or cry, teach us something new, or introduce us to people we wouldn’t otherwise meet, we are thankful for their gifts. In turn, all children deserve the gift of reading.