An Automatic Similarity Detection Engine Between Sacred Texts Using Text Mining and Similarity Measures

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Part 3: Becoming a Friend of the Faithful God: A Study on Abraham

In & Out® Genesis, Part 3: Becoming a Friend of the Faithful God: A Study on Abraham. ISBN 978-1-62119-760-7. © 2015, 2018 Precept Ministries International. All rights reserved. This material is published by and is the sole property of Precept Ministries International of Chattanooga, Tennessee. No part of this publication may be reproduced, translated, or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Precept, Precept Ministries International, Precept Ministries International The Inductive Bible Study People, the Plumb Bob design, Precept Upon Precept, In & Out, Sweeter than Chocolate!, Cookies on the Lower Shelf, Precepts For Life, Precepts From God’s Word and Transform Student Ministries are trademarks of Precept Ministries International. Unless otherwise noted, all Scripture quotations are from the New American Standard Bible, ©1960, 1962, 1963, 1968, 1971, 1972, 1973, 1975, 1977, 1995 by the Lockman Foundation. Used by permission. www.lockman.org. 2nd edition. Printed in the United States of America.

Contents

Lessons
- LESSON ONE: An Extraordinary Promise (p. 1)
- LESSON TWO: Covenant with God (p. 9)
- LESSON THREE: “Is anything too difficult for the LORD?” (p. 17)
- LESSON FOUR: What Does God Say about Homosexuality? (p. 21)
- LESSON FIVE: Is There a Bondwoman in Your Life? (p. 27)
- LESSON SIX: The Promised Son (p. 35)

Appendix
- Explanations of the New American Standard Bible Text Format (p. 40)
- Observation Worksheets (p. 41)
- Abraham’s Family Tree (p. 77)
- Journal on God (p. 79)
- From Ur to Canaan (p. 83)
- Abraham’s Sojournings (p. 84)
- Genesis 1–25 at a Glance (p. 85)

Precept Ministries International, P.O.

The Wife of Manoah, the Mother of Samson

THE WIFE OF MANOAH, THE MOTHER OF SAMSON

Magdel le Roux, University of South Africa, P O Box 392, UNISA 0003. (Received 21/04/2016; accepted 06/07/2016)

ABSTRACT

The last account of the judges is that of Samson (Judges 13–16). This account has all the elements of a blockbuster. All the indications are that Samson would be an extraordinary person. And yet, even though Samson may be regarded as some sort of hero, the story suggests that Samson was also the weakest or most ineffective of the judges. Tension is created through the juxtaposition of “ideal” and “non-ideal” bodies. An alternative ideology, as a hidden polemic, is concealed in the account. As in the case of Achsah (Judges 1:11–15) and Deborah (Judges 4–5), the nameless wife of Manoah (the mother of Samson) serves as an illustration of “countercultural rhetoric” as a hidden polemic.

INTRODUCTION

In the dominant cultural ideology of the Israelite tribes, ideal, whole bodies were those of male Israelite soldiers without any defects. This is the image that comes to mind when one first reads about the strong man, Samson, although in time one becomes more aware of his weaknesses than his strengths. These accounts (Judges 14–16) are full of violence and of Samson’s personal revenge, but they also describe his weakness for women. In the case of Samson, an ideal male body develops into an “unwhole body” in that an aesthetic element is added to the story: God favours Samson despite his disobedience (Chs 14–16).

Samson Gods Strong Man English

Bible for Children presents SAMSON, GOD’S STRONG MAN. Written by: Edward Hughes. Illustrated by: Janie Forest; Alastair Paterson. Adapted by: Lyn Doerksen. Produced by: Bible for Children, www.M1914.org. ©2021 Bible for Children, Inc. License: You have the right to copy or print this story, as long as you do not sell it.

Long ago, in the land of Israel, lived a man named Manoah. He and his wife had no children. One day the Angel of the LORD appeared to Mrs. Manoah. "You will have a very special baby," He said. She told her husband the wonderful news. Manoah prayed, "Oh my Lord ... come to us again. Teach us what we shall do for the child." The Angel told Manoah the child must never have his hair cut, must never drink alcohol, and must never eat certain foods. God had chosen this child to be a judge. He would lead Israel. God's people certainly needed help. They left God out of their lives, and then were bullied by their enemies, the Philistines. But when they prayed, God heard. He sent this baby who would become the world's strongest man. "So the woman bore a son and called his name Samson: and the child grew, and the LORD blessed him. And the spirit of the LORD began to move upon him." Samson became very strong. One day he fought a young lion with his bare hands, and killed it! Later, Samson tasted honey from a swarm of bees which had nested in the lion's dead body.

Could the Story of Samson Be True Or Is It Just a Myth

University of Maribor, Faculty of Arts, Department of English and American Studies. Graduation thesis: SAMSONOVA AGONIJA JOHNA MILTONA: KOMPARATIVNI PRISTOP K LIKU SAMSONU / MILTON’S SAMSON AGONISTES: A COMPARATIVE APPROACH TO THE CHARACTER OF SAMSON. Mentor: Assoc. Prof. Dr. Michelle Gadpaille. Candidate: Marija Vodopivec. Study programme: Pedagogy and English Language and Literature. Language editor: Assoc. Prof. Dr. Michelle Gadpaille. Maribor, 2014.

ACKNOWLEDGEMENTS

I want to thank my mentor, Dr. Michelle Gadpaille, for her guidance and her valuable advice during my writing. I want to thank my parents, Drago and Agata, for always supporting me and encouraging me during my studies. I want to thank my sister Marta and her husband Nino for always being there for me when I needed them most. I want to thank my big brother Marko and his lovely Tea for encouraging me and believing in me. I also want to thank my dear Denis for encouraging me, making me happy and for not graduating before me.

Faculty of Arts, Koroška cesta 160, 2000 Maribor, Slovenia, www.ff.um.si

STATEMENT

I, the undersigned MARIJA VODOPIVEC, born 31.07.1988, a student of the Faculty of Arts of the University of Maribor in the programme ENGLISH LANGUAGE AND LITERATURE AND PEDAGOGY, declare that the graduation thesis entitled SAMSONOVA AGONIJA JOHNA MILTONA: KOMPARATIVNI PRISTOP K LIKU SAMSONU / MILTON’S SAMSON AGONISTES: A COMPARATIVE APPROACH TO THE CHARACTER OF SAMSON, written under the mentorship of ASSOC. PROF. DR. MICHELLE GADPAILLE, is my own work. The sources and literature used in the thesis are correctly cited; no texts have been copied without acknowledging their authors.

Lie: Satan's Tool

Central Pentecostal Ministries, From the Pulpit... Lie: Satan’s Tool. Pastor Donald Shoots

“But evil men and seducers shall wax worse and worse, deceiving, and being deceived.” II Timothy 3:13

“And they shall turn away their ears from the truth, and shall be turned unto fables.” II Timothy 4:4

Men are all too often deceived by a lie. Understand that a lie is the original reason for deception. If you don’t have a lie, it’s impossible to have deception. We must know Christ to know truth. He is truth and calls all people to it. Deception comes only after a lie has taken root in the hearts and minds of people. The fall of man, in the beginning, was because of a lie. Let’s not let the great initial tool of satan fade away from the story books of religion. That was the first tool of satan, a lie! Not drugs, robbery, murder, gambling or pornography. “And the serpent said unto the woman, Ye shall not surely die” (Genesis 3:4). This was not a long sentence, paragraph or book. This was not a program on television or an hour-long dissertation from the perverted pulpits of time. It was one short lie, which would roll in hearts, breeding rebellion against the Holy God. One quick phrase, one subtle, carefully worded sentence made up of five words, turned the world upside down, and because of this lie, hell will be full. One lie, and men and women by the millions will enter the eternal place called hell, where the Bible says the “worm dieth not” (Mark 9:44).

KINGDOMS Family Guide

KINGDOMS Family Guide

Welcome to IMMERSE: The Bible Reading Experience

Leading a family is arguably one of the most challenging tasks a person can undertake. And since families are the core unit in the church, their growth and development directly impact the health of the communities where they serve. The Immerse: Kingdoms Family Reading Guide is a resource designed to assist parents, guardians, and other family leaders to guide their families in the transformative Immerse experience.

Planning Your Family Experience

This family guide is essentially an abridged version of Immerse: Kingdoms. So it’s an excellent way for young readers in your family to participate in the Immerse experience without becoming overwhelmed. The readings are shorter than the readings in Immerse: Kingdoms and are always drawn from within a single day’s reading. This helps everyone in the family to stay together, whether reading from the family guide or the complete Kingdoms volume.

Each daily Bible reading in the family guide is introduced by a short paragraph to orient young readers to what they are about to read. This paragraph will also help to connect the individual daily Scripture passages to the big story revealed in the whole Bible. (This is an excellent tool for helping you guide your family discussions.)

The family guide readings end with a feature called Thinking Together, created especially for young readers. These provide reflective statements and questions to help them think more deeply about the Scriptures they have read. (Thinking Together is also useful for guiding your family discussions.)

The readings in the family guide are intended primarily for children in grades 4 to 8.

Who Cut Samson's Hair?

WHO CUT SAMSON’S HAIR? THE INTERPRETATION OF JUDGES 16:19a RECONSIDERED

Cornelis Houtman¹

1. Introduction

Who cut Samson’s hair? Delilah or some man? Judges 16, the final chapter of the Samson cycle, which narrates how Samson could be overpowered by the Philistines through Delilah, allows both interpretations. This does not mean, however, that both are equally plausible. As we shall see, the second interpretation is more fitting in the supposed circumstances. The relevant text for answering the question raised is found in Judg 16:19. In the Hebrew Bible it runs as follows:

ותישׁנהו על־ברכיה ותקרא לאישׁ ותגלח את־שׁבע מחלפות ראשׁו

Translated ‘literally’, it can be rendered as follows: And she (Delilah) made him (Samson) sleep upon her knees; and she called for² the man and she cut³ the seven locks of his head.⁴ Or: and she had him cut the seven locks of his head.⁵

¹ I am indebted to Rev. Jaap Faber, Kampen, the Netherlands, for correcting the English of this article at a number of points.

² In this context קרא + ל means (cf. Judg 4:6, 16:18, 25 and see, e.g., Exod 1:18, 7:11, 8:4, 21) ‘to summon’, ‘to bring out’ (possibly by her personal handmaid [cf. Exod 2:5, Dan 13:15, 17–19, 36 (Vulgate)]).

³ For such a translation and translations which allow this interpretation, see, e.g., the African Die Bybel in Afrikaans (1936), the German Einheitsübersetzung der Heiligen Schrift (1980), the French La Sainte Bible (Nouvelle version Segond révisée [1989]), the Dutch De Nieuwe Bijbelvertaling (2004) and Naardense Bijbel (2004), the German Bibel in gerechter Sprache (2006).

“Samson and Delilah” (Judges 16:1-30), January 12, 2014, John Bruce, Pastor

Creekside Community Church. Strange Tales: “Samson and Delilah” (Judges 16:1-30). January 12, 2014. John Bruce, Pastor

What I appreciate most about Bob, Reg and the other Celebrate Recovery leaders is that they are Christ-centered. Celebrate Recovery isn’t a self-help plan to get people to clean up their lives and fly right. Recovery begins with acknowledging that we are all great sinners but Christ is a great Savior. No man-made program can save us from the sin that lives inside each of us; only Jesus can. This is powerfully illustrated in the passage we’ll look at this morning as we continue in the Strange Tales of the book of Judges and the story of Samson and Delilah. Samson is one of the most unlikely heroes in the Bible. He is both the strongest of men and the weakest of men; the man in whom the power of God is very evident, yet one of the men in the Bible most resistant to God. He is the best-equipped judge to save Israel, yet the least likely to do so because he is a self-absorbed loner. Samson’s story reminds us of the power sin has to enslave even the strongest of us and the power God has to save even the worst of us. As chapter 16 opens, Samson has judged Israel for many years. He’s killed a lot of Philistines, yet the Philistines still rule Israel because Samson’s personal war with the Philistines is fueled by his desire for revenge rather than by a response to God.

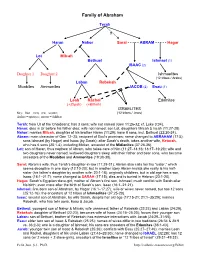

Family of Abraham

Family of Abraham

[Family tree diagram from Terah through Abram, Isaac and Jacob, showing the lines leading to the Ishmaelites (12 tribes / Arabs), Moabites, Ammonites, Edomites and Israelites (12 tribes / Jews). Key: blue = men; red = women; dashes = spouses; arrows = children.]

- Terah: from Ur of the Chaldeans; has 3 sons; wife not named (Gen 11:26-32; cf. Luke 3:34).
- Haran: dies in Ur before his father dies; wife not named; son Lot, daughters Milcah and Iscah (11:27-28).
- Nahor: marries Milcah, daughter of his brother Haran (11:29); they have 8 sons, including Bethuel (22:20-24).
- Abram: main character of Gen 12–25; recipient of God’s promises; name changed to ABRAHAM (17:5); sons Ishmael (by Hagar) and Isaac (by Sarah); after Sarah’s death, takes another wife, Keturah, who has 6 sons (25:1-4), including Midian, ancestor of the Midianites (37:28-36).
- Lot: son of Haran, thus nephew of Abram, who takes care of him (11:27–14:16; 18:17–19:29); wife and two daughters never named; widowed daughters sleep with their father and bear sons, who become ancestors of the Moabites and Ammonites (19:30-38).
- Sarai: Abram’s wife, thus Terah’s daughter-in-law (11:29-31); Abram also calls her his “sister,” which seems deceptive in one story (12:10-20); but in another story Abram insists she really is his half-sister (his father’s daughter by another wife; 20:1-18); originally childless, but in old age has a son, Isaac (16:1–21:7); name changed to SARAH (17:15); dies and is buried in Hebron (23:1-20).

Lies in Tanach Sources with English

The Paradox of a Loving Lie: Justifying Lies in Tanach. Nechama Price

Keep far from a false charge; do not bring death on those who are innocent and in the right, for I will not acquit the wrongdoer.

Keep lies and false words far from me; give me neither poverty nor riches, but provide me with my daily bread.

He who deals deceitfully shall not live in my house; he who speaks untruth shall not stand before my eyes.

Rav Yirmiya bar Abba said: four classes of sinners do not receive the Divine Presence: the class of scoffers, the class of flatterers, the class of liars, and the class of those who speak lashon hara...

Class of liars: (Tehilim 101) “liars can’t dwell within my house!”

1) Avraham

As he was about to enter Egypt, he said to his wife Sarai, “I know what a beautiful woman you are. If the Egyptians see you, and think, ‘She is his wife,’ they will kill me and let you live. Please say that you are my sister, that it may go well with me because of you, and that I may remain alive thanks to you.”

Then Abraham said to his servants, “You stay here with the donkey. The boy and I will go up there; we will worship and we will return to you.”

2) Sarah

And Sarah laughed to herself, saying, “Now that I am withered, am I to have enjoyment—with my husband so old?” Then the LORD said to Abraham, “Why did Sarah laugh, saying, ‘Shall I in truth bear a child, old as I am?’ Is anything too wondrous for the LORD? I will return to you at the same season next year, and Sarah shall have a son.” Sarah lied, saying, “I did not laugh,” for she was frightened.

Samson's Blindness and Ethical Sight

SAMSON’S BLINDNESS AND ETHICAL SIGHT

BENJAMIN CRISP

Until recently, ethics research and Scripture’s contribution to it have been sparse. It is, therefore, critical to contribute serious exegetical investigation to the conversation. Ethical blind spots impact every individual. They must not be ignored or placated. Inner texture analysis of Judges 13-16 exposes ethical blind spots in Israel’s last judge, Samson. The repetition of words and thematic progressions reveal Samson’s ethical shortcomings, and his ultimate redemption, as an example for contemporary leaders. Additionally, Samson’s ethical code, in tandem with a driving metaphor, prescribes contemporary solutions to ethical waywardness. Ethical blind spots distort the LORD’s divine calling. Wrong decisions carried out with discretion seem hidden and harmless. Samson’s narrative teaches that they mutilate one’s character and calling. Christian leaders must address ethical blind spots through the evaluation of past experience, alignment between the “want” and “should” self, and rootedness in their relationship with the LORD and with others.

I. INTRODUCTION

The Western world has adopted a post-truth approach which bludgeons morality and fissures ethical development. By dichotomizing truth and values, leaders offer “valueless facts” to their followers (Hathaway, 2018). Society prides itself on calling right wrong and wrong right (Isa 5:20). The biblical refrain that marked the Israelites during the period of the judges—“everyone did what was right in their own eyes”—poignantly describes contemporary approaches to ethics. Such thinking has permeated present-day institutions. One seminary, which will remain unnamed, has adopted a view of the cross as an image of divine erotica.

Was Samson a Promiscuous Man (Judges 13-16)? Viewing Samson As a Human Figure in a Theoretical Approach

KIU Journal of Social Sciences. Copyright © 2019 Kampala International University. ISSN: 2413-9580; 5(1): 251–264

Was Samson a Promiscuous Man (Judges 13-16)? Viewing Samson as a Human Figure in a Theoretical Approach

JOHN ARIERHI OTTUH, Obong University, Obong Ntak, Nigeria

Abstract. This paper discusses the character of Samson as an historical figure within the Judges 13-16 narratives in the Old Testament Bible. The main aim of this paper is to find out, through theoretical inference, whether there were circumstances surrounding Samson’s sexual behaviour. Drawing from causal theory, the paper argues that there is a possible nexus between Samson’s failed marriage and his subsequent relationship with other women. It shows that Samson’s problem was not metaphysical but human-induced, and as such it is causal. It constructs Samson as a human figure in the narrative, analyses the text from the perspective of causal theory, and concludes that Samson’s failed marriage could be responsible …

… purely literary point of view, thereby treating Samson as a literary figure. One of the problems that might be encountered in seeing Samson as a literary figure is the tendency to consider the genre as fiction, thereby opening the possibility of explaining away the human problems he encountered. Another possible problem in seeing Samson as a literary figure is the likely presentation of Samson as a superhuman who is above human emotions (feelings), mistakes or errors. Therefore, this paper intends to view Samson as a human figure in the historical narrative in Judges 13-16. This is why this paper argues that there is a possible nexus between Samson’s failed marriage and his subsequent relationship with other women.