Machine Learning Algorithm's Measurement and Analytical

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


1892 , 11/03/2019 Class 9 2184496 02/08/2011 Trading As

Trade Marks Journal No: 1892 , 11/03/2019 Class 9 PAVO 2184496 02/08/2011 FRANCIS K. PAUL JOHN K. PAUL SUSAN FRANCIS SHALET JOHN POPULAR AUTO SPARES PVT. LTD. POPULAR VEHICLES & SERVICES LTD. trading as ;KUTTUKARAN TRADING VENTURES HEAD OFFICE KUTTUKRAN CENTRE, MAMANGALAM, COCHIN - 682 025, KERALA STATE, INDIA MANUFACTURERS AND MERCHANTS Address for service in India/Attorney address: P.M GEORGEKUTTY PMG ASSOCIATES EF7-10, VASANTH NAGAR PALARIVATTOM COCHIN - 682025 Used Since :01/08/2011 CHENNAI AUTOMOBILE ELECTRONICS ACCESSORIES, NAMELY, LED DAYTIME RUNNING LIGHT. 1944 Trade Marks Journal No: 1892 , 11/03/2019 Class 9 SHREE JINDAL 2307107 28/03/2012 KRISHAN LAL ARORA trading as ;ARORA TRADING COMPANY M.I.G. -108 AVAS VIKAS LODHI VIHAR SASNI GATE AGRA ROAD ALIGARH 202001 MANUFACTURER AND TRADER Used Since :25/03/2012 DELHI JUNCTION BOX 1945 Trade Marks Journal No: 1892 , 11/03/2019 Class 9 2515909 18/04/2013 RAVISH TANEJA trading as ;VIRIMPEX 1413 IST FLOOR NICHLSON ROAD KASHMERE GATE DELHI 06 MERCHANTS/MANUFACTURERS Address for service in India/Attorney address: SAI ASSOCIATES B -5A GALI NO 38 BLOCK B PART II KAUSIK ENCLAVE BURARI DELHI 84 Used Since :20/08/2011 DELHI CAMERA, F.M., SPEAKERS, SCREEN, DVD, CD, PLAYERS, MOBILE AND CHARGER, INCLUDING IN CLASS 09 1946 Trade Marks Journal No: 1892 , 11/03/2019 Class 9 2545634 07/06/2013 NIIT Limited Regd. Office: 8, Balaji Estate, First Floor, Guru Ravi Das Marg, Kalkaji, New Delhi-110019 MANUFACTURER & MERCHANT Address for service in India/Attorney address: MAHESH KUMAR MIGLANI Prop. Worldwide -

FROM the HAIR of SHIVA to the HAIR of the PROPHET … and Other Essays

FROM THE HAIR OF SHIVA TO THE HAIR OF THE PROPHET … and other essays SAUVIK CHAKRAVERTI The soft parade has now begun, Listen to the engines hum, People out to have some fun, Cobra on my left, Leopard on my right! JIM MORRISON The Doors 2 DEDICATION To travel and travelers. And to the fond hope that, someday soon, travel in my great country will be safe, comfortable and swift. May Shubh Laabh and Shubh Yatra go together. Bon Voyage! 3 FOREWORD I am a firm believer in the social utility of enjoyable politico-economic journalism. Economists like Frederic Bastiat and Henry Hazlitt have set alight the minds of millions with the fire of freedom, not by writing dissertations, but by reaching out to lay people through journalism. These include dentists, engineers, architects and various other professionals who really need to know political economy in order to vote correctly, but have never had the opportunity to study it formally; or rather, in school, they have been MISTAUGHT! They are thus easily led astray by economic sophisms that justify protectionism and other damaging forms of state interference in economic life. It is for such people that I have penned this volume and I offer it to the general reading public so that they may not only enjoy the read but also appreciate the importance of Freedom: Freedom From The State. In this I have attempted to be extremely simple in my arguments – there are no ‘theories’ presented. There are also a series of travelogues which explain reality on the ground as we see it, and contrast East and West.

IAMAI Gaming Report Jan 21.Pdf

ABOUT IAMAI The Internet and Mobile Association of India (IAMAI) is a young and vibrant association with ambitions of representing the entire gamut of digital businesses in India. It was established in 2004 by the leading online publishers, but in the last 16 years has come to effectively address the challenges facing the digital and online industry including online publishing, mobile advertising, online advertising, ecommerce, mobile content and services, mobile & digital payments, and emerging sectors such as fintech, edutech and health-tech, among others. Sixteen years after its establishment, the association is still the only professional industry body representing the digital and mobile content industry in India. The association is registered under the Societies Act and is a recognized charity in Maharashtra. With a membership of over 300 Indian and MNC companies, and with offices in Delhi, Mumbai, and Bengaluru, the association is well placed to work towards charting a growth path for the digital industry in India. ABOUT IKIGAI LAW Ikigai Law is a technology and innovation focused law and policy firm. The firm stands at the forefront of regulatory and commercial developments in the technology sector with a dedicated technology policy practice. It engages with crucial issues such as data protection and privacy, fin-tech, online content regulation, platform governance, digital gaming, digital competition, cloud computing, net neutrality, health-tech, blockchain and unmanned aviation (drones), among others. In the recent past, the

In Brief Richard Sets Bike Record Circlin

. a_._-:.- P?gei6CRANP0BD (N.J.) CHRONICLE Thursday, January'1. "1982 Smoke detector Three Eagl$ scoytsjn in Garwood... tw& TKeniTworth.;-. Board, police/menrpromotecf\'7. • teachers still at school tax going _ loggerheads... new police: rank... page 14 • . - ;-.,.«,-. • '.. - ' USPS 136 BOO Second Class Postage Paid Cranford, N.J. 25 CENTS VOL. 90 No. 2 Published Every Thursday Thursday, January 14,1982 Serving Cntriford, Kenilworih arid "(i'krwood ? Richard sets bike record circlin If this were an ordinary week, we would use this space to tell you what's Special help too. , •'.'". ~"- r in brief at Kings. But this week, well simply _ay that we're having our exceptional Florida The Proctor and Gamble couipany is mailing thousands of coupons to be used iri 1 ;__ By R0SALI&_E0SS— — rinstead. _He was a cross country skier, and spent just $2d to $25 a night. The trip .Richard DeBernardis decided it was mountain climber and in 1976 had biked cost him $4,500. He had expected to Citrus satefarid we'll leave the jorrof telling yoilaBourTh^ other speciars t^hT the jDurchjisejj^^ tim6 for another a_ventute. "1 wanted 2,216 miles from Alaska to< Mexico in 40 spend $6,000. "For every coupon redeemed, P&G will donate a nickel. With the help of Kings to get~b_ck on the bike and do something days. He-left the university and embark- , Richard- rode a 12-speed Japanese else." ., \- •• ,J .. Because this is no ordinary weekat Kings shoppersrit can add up to an important contribution. ^ AndBOthree-yeai'sto the day that he reatils for $1,000, but was given to him : ' r. -

24 CRANFORD N J CHRONICLE Thursday

Page 24 CRANFORD N J CHRONICLE Thursday. Ju»e IS. W61 You want a ffnariey photos:..-Harding tax rates set... Center grads, honors.,. asbestos coming out... Garwood school PBA contract still Jersey City awards...pages 26^27 stalled...pages 26. 27 Super Rt #440 & Kedogg Si S«rvtc« Hotwv Monday Mwu Sit. 9 J rn I Center lo ij» p-m. - SuAcUy 9 a.*n. lo 6 p m ml VOL. 89 No. 35 Published Every Thursday Thursday, June 25,1961 Serving Cranford, Kenilworth And Garwood USPS 136 800 Second Class Postage Paid Cranford, N J 25 CENTS is theretcVt m! warehouse pnc« Chfe«GiftGtss foorr 'The Gladiator9 marks You want savings 24 hours a day MUW Cxv«r O«U CMk r. and Pathmark is there. Dads&Grads (: C mm Mor« than 400 Cranford High ••*••>"=»•» MFG-805h ._. •_ - l-lb »>i^tx;htly-&*it«d Solid — gnj By STUART AWBREY —....••... -Chickenof-theSea 803 .ft? [jOMN School seniors will receive diplomas o Bill Earls celebrates the publication' Maxwea House Ir in graduation ceremonies, at 6:30 , C of his first novel next month. It's an Chunk Light Tuna Pathmark Butter p.m tonight at Memorial Field action-packed paperback titled "The KFMM Wanda Chin, senior class president, Gladiator" and it represents a dream with and Andrea-Ciliotta, valedictorian, come true for the Cranford writer. this. will be student speakers. Robert D. ^ Earls wrote his first novel, a war coupon Paul, school superintendent, and story, 24 years ago, at age 15. He put it Robert Seyfarth, principal, will also , re pe^ tamily GooO jt any U-mfl on* ptr tumty.Good tt my P«ttvm»rt i! one pe» taintly. -

Herald of Holiness Volume 48 Number 22 (1959) Stephen S

Olivet Nazarene University Digital Commons @ Olivet Herald of Holiness/Holiness Today Church of the Nazarene 7-29-1959 Herald of Holiness Volume 48 Number 22 (1959) Stephen S. White (Editor) Nazarene Publishing House Follow this and additional works at: https://digitalcommons.olivet.edu/cotn_hoh Part of the Christian Denominations and Sects Commons, Christianity Commons, History of Christianity Commons, Missions and World Christianity Commons, and the Practical Theology Commons Recommended Citation White, Stephen S. (Editor), "Herald of Holiness Volume 48 Number 22 (1959)" (1959). Herald of Holiness/Holiness Today. 911. https://digitalcommons.olivet.edu/cotn_hoh/911 This Journal Issue is brought to you for free and open access by the Church of the Nazarene at Digital Commons @ Olivet. It has been accepted for inclusion in Herald of Holiness/Holiness Today by an authorized administrator of Digital Commons @ Olivet. For more information, please contact [email protected]. The Lord is my light and my salvation; whom shall I fear? the Lord is the strength of my life; of whom shall I be afraid? When the wicked, even mine enemies and my foes, came upon me to eat up my flesh, they stumbled and fell. Though an host should encamp against me, my heart shall not fear: though war should rise against me, in this will I be confident. One thing have I desired of the Lord, that will I seek after; that I may dwell in the house of the Lord all the days of my life, to behold the beauty of the Lord, and to enquire in his temple. For in the time of trouble he shall hide me in his pavilion: in the secret of his tabernacle shall he hide me; he shall set me up upon a rock.

“Gambling: Indian Perspective and the Intangible Loophole”; Javvad

Volume 15, April 2021 ISSN 2581-5504 “Gambling: Indian Perspective and the Intangible Loophole” Javvad Shaikh L.J. School of Law Abstract There has been a drastic change in contemplation of the term 'gambling' which in the present measures is regarded as 'gaming'. India has been in the regulation of British bylaws for many decades and even after Independence we are yet been regulated by them only, specifically speaking, the Gambling Laws. In modern society, the whole notion of Gambling has shifted itself from just a physical illegal act to an online immoral loophole. This paper analyzes the statutes that restrict, regulates and support gambling laws in India. The paper also deals with the loopholes in the Indian Legislature and Judicial Decision as to outlining the plethora of Gambling Laws in India concerning Online Gambling. The paper lastly confers various consequences which consults a necessity of prerequisites for regulating Gaming in India. Introduction The BCCI of India which indirectly controls ICC internationally has recently been allowed and supported the new and contemporary idea of including ‘Fantasy Cricket’ on its pedestal. Morally speaking, it creates a great plethora of controversies from different minds as to if it is wrong or right. The idea- positivists rely on the pros of State income growth and legalization of gambling that would eventually lead to the decriminalization of various acts. On the contrary, the anti- affirms believes that the entry of gambling and cash flow to sports in this fallacious way would lead to an increase in crimes of different nature and also it would deter and destroy the line of control which may lead to acceptance of some other subsequent act that eventually may hurt the society at large. -

Trade Marks Journal No: 1819 , 16/10/2017 Class 9

Trade Marks Journal No: 1819 , 16/10/2017 Class 9 Advertised before Acceptance under section 20(1) Proviso 638175 25/08/1994 MARATZ LIMITED Nishihonmanchi 2F, 1-41, Nishihonmachi, 2 Chome, Nishi -Ku, Osaka 550JAPAN. MANUFACTURERS & MERCHANTS A CORPORATION ORGANIZED AND EXISTING UNDER THE LAWS OF JAPAN Address for service in India/Agents address: BANSAL & COMPANY BANSALL HOUSE, 111-B POCKET IV, MAYOUR VIHAR I, DELHI - 110 091. Used Since :01/01/1989 DELHI T.V, V.C.R, V.C.P, Two-in-One, Fax machines, Walk Man, Radio, Clock Radio, Transistor and Parts thereof. Registration of this Trade Mark shall give no right to the exclusive use of LETTER "E" EXCEPT AS SUBSTANTIALLY SHOWN IN THE FROM OF REPRESENTATION OF THE MARK AS AGREED TO 1566 Trade Marks Journal No: 1819 , 16/10/2017 Class 9 Advertised before Acceptance under section 20(1) Proviso 794200 09/03/1998 INDO ASIAN FUSEGEAR LTD. trading as ;INDO ASIAN FUSEGEAR LTD. 51, G.T. KARNAL ROAD, MURTHAL. MANUFACTURERS AND MERCHANTS Address for service in India/Agents address: S.K. NARULA A-302, RISHI APTTS., ALAKNANDA, NEW DELHI-110 019. Used Since :01/11/1997 DELHI ADAPTORS, ELECTRICAL APPARATUS AND INSTRUMENTS, ELECTRIC AND ELECTRONIC GOODS, PARTS, FITTINGS AND COMPONENTS OF ELECTRICAL AND ELECTRONIC GOODS AND FIXTURES ALL BEING GOODS INCLUDED IN CLASS 9. Registration of this Trade Mark shall give no right to the exclusive use of the expressions THE SMART CFL ADAPTOR. 1567 Trade Marks Journal No: 1819 , 16/10/2017 Class 9 SIGMA 972831 24/11/2000 MIP METRO GROUP INTELLECTUAL PROPERTY GMBH & CO. -

State of Mobile 2019.Pdf

1 Table of Contents 07 Macro Trends 19 Gaming 25 Retail 31 Restaurant & Food Delivery 36 Banking & Finance 41 Video Streaming 46 Social Networking & Messaging 50 Travel 54 Other Industries Embracing Mobile Disruption 57 Mobile Marketing 61 2019 Predictions 67 Ranking Tables — Top Companies & Apps 155 Ranking Tables — Top Countries & Categories 158 Further Reading on the Mobile Market 2 COPYRIGHT 2019 The State of Mobile 2019 Executive Summary 194B $101B 3 Hrs 360% 30% Worldwide Worldwide App Store Per day spent in Higher average IPO Higher engagement Downloads in 2018 Consumer Spend in mobile by the valuation (USD) for in non-gaming apps 2018 average user in companies with for Gen Z vs. older 2018 mobile as a core demographics in focus in 2018 2018 3 COPYRIGHT 2019 The Most Complete Offering to Confidently Grow Businesses Through Mobile D I S C O V E R S T R A T E G I Z E A C Q U I R E E N G A G E M O N E T I Z E Understand the Develop a mobile Increase app visibility Better understand Accelerate revenue opportunity, competition strategy to drive market, and optimize user targeted users and drive through mobile and discover key drivers corp dev or global acquisition deeper engagement of success objectives 4 COPYRIGHT 2019 Our 1000+ Enterprise Customers Span Industries & the Globe 5 COPYRIGHT 2019 Grow Your Business With Us We deliver the most trusted mobile data and insights for your business to succeed in the global mobile economy. App Annie Intelligence App Annie Connect Provides accurate mobile market data and insights Gives you a full view of your app performance. -

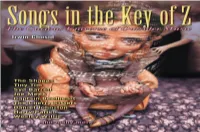

Songs in the Key of Z

MUSIC Songs in the Key of Z: The first book ever about a mutant strain of twisted pop that’s so wrong, it’s right! “Iconoclast/upstart Irwin Chusid has written a meticulously researched and passionate cry shedding long-overdue light upon some of the guiltiest musical innocents of the twentieth century. An indispensable classic that defines the indefinable.” –John Zorn “Chusid takes us through the musical looking glass to the other side of the bizarro universe, where pop spelled backwards is ... pop? A fascinating collection of wilder cards and beyond-avant talents.” –Lenny Kaye “This book is filled with memorable characters and their preposterous-but-true stories. As a musicologist, essayist, and humorist, Irwin Chusid gives good value for your entertainment dollar.” –Marshall Crenshaw Outsider musicians can be the product of damaged DNA, alien abduction, drug fry, demonic possession, or simply sheer obliviousness. But, believe it or not, they’re worth listening to, often outmatching all contenders for inventiveness and originality. This book profiles dozens of outsider musicians, both prominent and obscure, and presents their strange life stories along with photographs, interviews, cartoons, and discographies. Irwin Chusid is a record producer, radio personality, journalist, and music historian. He hosts the Incorrect Music Hour on WFMU; he has produced dozens of records and concerts; and he has written for The New York Times, Pulse, New York Press, and many other publications. $18.95 (CAN $20.95) ISBN 978-1-55652-372-4 Songs in the Key of Z: The Curious Universe of Outsider Music. Irwin Chusid. Library of Congress Cataloging-in-Publication Data Chusid, Irwin.

Download Instructions for Card Games from Around the World

Card Games from Around the World. Compiled by the Staff of Two Horizons Press as a Resource for the Heritage Playing Cards. www.TwoHorizonsPress.com

India. Introduction: players, cards, deal. Teen Pathi, sometimes spelled Teen Patti, means "three cards". It is an Indian gambling game, also known as Flush, and is almost identical to the British game 3 Card Brag. An international 52 card pack is used, cards ranking in the usual order from ace (high) down to two (low). Any reasonable number of players can take part; it is probably best for about

Pair - two cards of the same rank. Between two such hands, compare the pair first, then the odd card if these are equal. The highest pair hand is therefore A-A-K and the lowest is 2-2-3. High card - three cards that do not belong to any of the above types. Compare the highest card first, then the second highest, then the lowest. The best hand of this type is A-K-J of mixed suits, and the worst is 5-3-2. Any hand of a higher type beats any hand of a lower type - for example the lowest run 4-3-2 beats the best colour A-K-J.

The betting process. The betting starts with the player to the left of the dealer, and continues with players taking turns in clockwise order around the table, for as many circuits as are needed. Each player in turn can either put an additional bet into the pot to stay in, or pay nothing further and fold.

RBSA India Deals Snapshot – May to July 2014

India Deals Snapshot May – July, 2014 Index Snapshot of Mergers & Acquisitions Update for May 4 - 6 – July, 2014 Mergers & Acquisitions Deals for May – July, 2014 7 - 19 Summary of Mergers & Acquisitions Update 20 Snapshot of PE / VC Update for May – July, 2014 22 - 26 PE / VC Deals for May – July, 2014 27 - 39 Summary of PE / VC Update 40 Real Estate Deals for May – July, 2014 42 RBSA Range of Services 43 Contact Us 44 Merger & Acquisition Update 3 Snapshot of Mergers & Acquisitions Update for May 2014 Acquirer Target Sector Stake Cr. Capiflex EquityPandit Others N. A. N. A. Bharti Softbank (BSB) Y2CF Digital Media Others N. A. N. A. Ebix HealthcareMagic Healthcare N. A. ` 112.85 Cr SKS Trust Outreach Financial Services Others 70% N. A. Motherson Sumi System Stoneridge Manufacturing ` 400.77 Cr McNally Bharat Engineering Undisclosed Manufacturing & Engineering N. A. ` 12 Cr Company Ltd. (MBE) UST Global Kanchi Technologies Others N. A. N. A. Epicurean Nalasaappakadai Hotels & Hospitality 51% N. A. Pubmatic Mocean Mobile Others N. A. N. A. Times Internet CouponDunia Others N. A. N. A. Cancer Genetics, Inc.(CGI) BioServe-India Healthcare N. A. ` 11.59 Cr Mastercard Electracard IT/ITES N. A. N. A. Adani Port & SEZ Dhamra Port Others 100% Rs. 5500 Cr. Starwatch Talenthouse Media & Entertainment N. A. N. A. Tata Global Beverages Bronski Eleven Food & Beverages N. A. N. A. EID Parry Parry Phytoremedies Manufacturing 37% N. A. Sony Music Infibeam Others 26% N. A. Emperador USL Manufacturing N. A. ` 4446.9 Cr Kirusa Cooltook Others N. A. N. A. Groupe SEB Maharaja Whiteline Manufacturing 45% N.