Sentiment Analysis for Social Media

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


The Versatility of Visualization: Delivering Interactive Feature Film Content on DVD

Nebula 4.2, June 2007 The Versatility of Visualization: Delivering Interactive Feature Film Content on DVD. By Sarah Atkinson Abstract This article investigates how the film production process has been influenced by the DVD format of delivery. It discusses how the digital output is affecting the creative process of feature film production and the effect upon the visualization process during preproduction. It will deploy two case studies - My Little Eye (2002, Dir: Marc Evans) and Final Destination 3 (2006, Dir: James Wong) – utilizing diagrammatical visualizations of their interactive content. Both these films produced additional content during the feature film production phase to offer viewers an alternate and interactive viewing experience on DVD. The article concludes by exploring how a current technological advancement, Blu Ray DVD Production, will act as a further catalyst to develop the interactive film genre beyond the initial phases investigated within the current case studies. Article The two most important aspects of visualization, the physical connections with the medium and the opportunity to review and refine work as it is created, are hard to implement because of the complexity of film production. (Katz, 1991:5) It has been 10 years since the mass inception of the so-called Digital Versatile Disc (DVD). Since that time, the market for DVD hardware and content has expanded exponentially. The DVD forum reported that ‘Film distributors depend more on DVD sales than box office takings.’ (July 31, 2006) Home video sales now account for nearly 60% of Hollywood’s revenue (LA Times 2004). The versatile format allows for a higher volume of media content to be stored and accessed in a non-linear manner.

1 CHAPTER I INTRODUCTION A. Background of the Study Human Is

CHAPTER I INTRODUCTION A. Background of the Study Humans are unique creatures, each with a different destiny and different problems. People face problems and have different experiences in their daily lives. Some of these problems can make them uncomfortable and anxious. Their daily experiences can bring happiness, sadness, normality, hesitation, or even anxiety. Usually people are able to solve their problems rationally, but in some cases they cannot. People have the capacity to create feelings and thoughts. The composition of their feelings and thoughts is not static but dynamic and changeable. One such problem is anger. It is a sensitive problem because it makes people feel unhappy and anxious. One such feeling is fear. Most humans fear death, and they struggle to escape it. Escaping from death is the way humans try to save themselves. Death is the destined end of human life. Most people view death with fear, and many works of fantasy literature reflect this attitude. Both C. S. Lewis and Peter S. The fear and the generally negative view of death make immortality a seemingly desirable choice; unsurprisingly the human race has often dreamed of immortality. Just as it reflects the general attitude toward death, fantasy literature also reflects the desire of becoming immortal. Indeed, immortality means not having to worry about death. However, as much as one dreams about immortal beings, human beings can never escape death. Mortality confronts fantasy writers as a reality in life.

Film Review Aggregators and Their Effect on Sustained Box Office Performance (2011)

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2011 Film Review Aggregators and Their Effect on Sustained Box Office Performance Nicholas Krishnamurthy Claremont McKenna College Recommended Citation Krishnamurthy, Nicholas, "Film Review Aggregators and Their Effect on Sustained Box Office Performance" (2011). CMC Senior Theses. Paper 291. http://scholarship.claremont.edu/cmc_theses/291 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. CLAREMONT McKENNA COLLEGE FILM REVIEW AGGREGATORS AND THEIR EFFECT ON SUSTAINED BOX OFFICE PERFORMANCE SUBMITTED TO PROFESSOR DARREN FILSON AND DEAN GREGORY HESS BY NICHOLAS KRISHNAMURTHY FOR SENIOR THESIS FALL / 2011 November 28, 2011 Acknowledgements I would like to thank my parents for their constant support of my academic and career endeavors, my brother for his advice throughout college, and my friends for always helping to keep things in perspective. I would also like to thank Professor Filson for his help and support during the development and execution of this thesis. Abstract This thesis will discuss the emerging influence of film review aggregators and their effect on the changing landscape for reviews in the film industry. Specifically, this study will look at the top 150 domestic grossing films of 2010 to empirically study the effects of two specific review aggregators. A time-delayed approach to regression analysis is used to measure the influencing effects of these aggregators in the long run. Subsequently, other factors crucial to predicting film success are also analyzed in the context of sustained earnings.

THE ULTIMATE SUMMER HORROR MOVIES LIST Summertime Slashers, Et Al

THE ULTIMATE SUMMER HORROR MOVIES LIST Summertime Slashers, et al. Summer Camp Scares I Know What You Did Last Summer Friday the 13th I Still Know What You Did Last Summer Friday the 13th Part 2 All The Boys Love Mandy Lane Friday the 13th Part III The Texas Chain Saw Massacre (1974) Friday the 13th: The Final Chapter The Texas Chainsaw Massacre (2003) Sleepaway Camp The Texas Chainsaw Massacre: The Beginning Sleepaway Camp II: Unhappy Campers House of Wax Sleepaway Camp III: Teenage Wasteland Wolf Creek The Final Girls Wolf Creek 2 Stage Fright Psycho Beach Party The Burning Club Dread Campfire Tales Disturbia Addams Family Values It Follows The Devil’s Rejects Creepy Cabins in the Woods Blood Beach Cabin Fever The Lost Boys The Cabin in the Woods Straw Dogs The Evil Dead The Town That Dreaded Sundown Evil Dead 2 VACATION NIGHTMARES IT Evil Dead Hostel Ghost Ship Tucker and Dale vs Evil Hostel: Part II From Dusk till Dawn The Hills Have Eyes Death Proof Eden Lake Jeepers Creepers Funny Games Turistas Long Weekend AQUATIC HORROR, SHARKS, & ANIMAL ATTACKS Donkey Punch Jaws An American Werewolf in London Jaws 2 An American Werewolf in Paris Jaws 3 Prey Joyride Jaws: The Revenge Burning Bright The Hitcher The Reef Anaconda Borderland The Shallows Anacondas: The Hunt for the Blood Orchid Tourist Trap 47 Meters Down Lake Placid I Spit On Your Grave Piranha Black Water Wrong Turn Piranha 3D Rogue The Green Inferno Piranha 3DD Backcountry The Descent Shark Night 3D Creature from the Black Lagoon Deliverance Bait 3D Jurassic Park High Tension Open Water The Lost World: Jurassic Park II Kalifornia Open Water 2: Adrift Jurassic Park III Open Water 3: Cage Dive Jurassic World Deep Blue Sea Dark Tide mrandmrshalloween.com. -

Final Destination Movies in Order

Final Destination Movies In Order Jaime wilder anaerobiotically as crabbier Ingmar strunt her photocell suffumigates agog. Sometimes pterygial Berkeley fools her matricide incompatibly, but nubilous Giorgio blaming historically or creep thwart. Stout Hamil colligating punishingly. Classic editor history of movies in final order and is the This damn hilarious horror franchises, the death is something bad things for messages to write it collapses right in order in a fantastic movie: tehran got twisted sense media plus a blisk save lives? The script of Final Destination 2 pushed the vehicle in divorce new. Final Destination 5 is the fifth installment in the Final Destination series support a prequel to Final Destination road was released on August 12th 2011 though originally was stated for theatrical release on August 26th 2011 The locker was directed by Steven Quale and civilian by Eric Heisserer. The Final Destination in Sequence enter the Titles. Final Destination 2 was shot next chronological event describe it has place in 2001 Final Destination 3 was next item it may place in 2005. Lewis' death in Final Destination 3 is run of the franchise's best. Timeline Final Destination Wiki Fandom. Buy Final Destination 5-Film Collection DVD at Walmartcom. Final Destination 5 received the best reviews of the doing and form the second highest-grossing movie out The Final Destination with 1579. From totally lame to completely unforgettableFor more awesome content back out httpwhatculturecom Follow us on Facebook at. Add in order of movie wiki is next time, only have been in order? Final Destination 2000 Final Destination 2 2003 Final Destination 3 2006 The Final Destination 2009 Final Destination 5 2011. -

FILM REVIEW by Noel Tanti and Krista Bonello Rutter Giappone

THINK FUN FILM REVIEW by Noel Tanti and Krista Bonello Rutter Giappone Film: Horns (2013) ««««« Director: Alexandre Aja Certification: R Horns Gore rating: SSSSS Krista: I will first get one gripe out of the way. There are two female supporting characters who fit too easily into the binary categories of ‘slut’ and ‘pure’. I’d like to say that it’s a self-aware critique of the way social perceptions entrap anyone, but the women are simply there to either support or motivate the action, leaving boyhood friendship dynamics as the central theme. Unfortunately, the female characters felt expendable. Noel: The female characters are very much up in the air, shallow, and abstracted concepts that are thinly fleshed […] campy, and with something interesting to say, extremely tongue-in-cheek. K: The premise is inherently comical and the film embraces that for a while. It then seems to swing between bitter-sweet sentimental extended flashbacks and the ridiculous. The tone feels unsettled. The film was at its best when it was indulging the ridiculous streak. I wanted more of the shamelessly over-the-top parts and less of the cringe-inducing Richard Marx doing Hazard vibe which firmly entrenched the woman in a sentimentally teary haze. […] he embarked on two remakes that are more miss than hit, Mirrors (2008) and Piranha 3D (2010). Both of them share the run-of-the-mill, textbook scare-by-numbers approach as Horns. K: Verdict? Like you, I enjoyed the over-the-top aspect interrupted by the over-earnestness in the overly extended flashback sequences that were too drastic a change of tone. N: I see it as a missed opportunity. This film could have been really good if only

Piranha 3DD (Original Motion Picture Score) - Soundtrack Review

Piranha 3DD (Original Motion Picture Score) - Soundtrack review http://www.reviewgraveyard.com/00_revs/r2012/music/12-06-... Click here to return to the main site. SOUNDTRACK REVIEW Piranha 3DD (Original Motion Picture Score) Composer: Elia Cmiral Lakeshore Records RRP: £13.99 Available 19 June 2012 After the terror unleashed on Lake Victoria in Piranha 3D, the pre-historic school of bloodthirsty piranhas are back. This time, no one is safe from the flesh eating fish as they sink their razor sharp teeth into the visitors of summer’s best attraction, The Big Wet Water Park. Christopher Lloyd (Back to the Future) reprises his role as the eccentric piranha expert with survivor Paul Scheer and a partially devoured Ving Rhames back for more fish frenzy. David Hasselhoff trades in the sandy beaches of Baywatch to be a celebrity lifeguard at the racy water park... I openly admit that I wasn't expecting much from this score. I mean, come on, the last thing that a follow-up to a spoof monster flick is going to spend time on is its soundtrack. Was I ever wrong. Elia Cmiral has approached this project as a serious film (for the most part). His music stands up incredibly well and is wonderful as a stand alone project. While there's the odd bit of filler material, on the whole this album is full of great little moments and incredible themes. The album, which is made up of 18 tracks, lasts for 44 min, 12 sec. Personal favourite tracks include 'Return of the Piranhas'; 'Trident Aria' (which is a beautiful aria); 'Kiss of Life' (which starts off beautifully before transforming into a track that almost doesn't work. -

Representations of Mental Illness, Queer Identity, and Abjection in High Tension

“I WON’T LET ANYONE COME BETWEEN US” REPRESENTATIONS OF MENTAL ILLNESS, QUEER IDENTITY, AND ABJECTION IN HIGH TENSION Krista Michelle Wise A Thesis Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of May 2014 Committee: Becca Cragin, Advisor Marilyn Motz © 2014 Krista Wise All Rights Reserved iii ABSTRACT Becca Cragin, Advisor In this thesis I analyze the presence of mental illness, queer identity and Kristeva’s theory of abjection in Alexandre Aja’s 2003 film High Tension. Specifically I look at the common trend within the horror genre of scapegoating those who are mentally ill or queer (or both) through High Tension. It is my belief that it is easier for directors, and society as a whole, to target marginalized groups (commonly referred to as the Other) as a means of expressing a “normalized” group’s anxiety in a safe and acceptable manner. High Tension allows audiences to reassure themselves of their sanity and, at the same time, experience hyper violence in a safe setting. Horror films have always targeted the fears of the dominant culture and I use this thesis to analyze the impact damaging perceptions may have on oppressed groups. iv “My mood swings have now turned my dreams into gruesome scenes” – Tech N9ne, “Am I a Psycho?” v ACKNOWLEDGMENTS I would like to thank Dr. Becca Cragin and Dr. Marilyn Motz for their critique, suggestions, and feedback throughout this process. I consider myself very fortunate to have had their guidance, especially as a first generation MA student. -

Hartford Public Library DVD Title List

Hartford Public Library DVD Title List # 24 Season 1 (7 Discs) 2 Family Movies: Family Time: Adventures 24 Season 2 (7 Discs) of Gallant Bess & The Pied Piper of 24 Season 3 (7 Discs) Hamelin 24 Season 4 (7 Discs) 3:10 to Yuma 24 Season 5 (7 Discs) 30 Minutes or Less 24 Season 6 (7 Discs) 300 24 Season 7 (6 Discs) 3-Way 24 Season 8 (6 Discs) 4 Cult Horror Movies (2 Discs) 24: Redemption 2 Discs 4 Film Favorites: The Matrix Collection- 27 Dresses (4 Discs) 40 Year Old Virgin, The 4 Movies With Soul 50 Icons of Comedy 4 Peliculas! Accion Exploxiva VI (2 Discs) 150 Cartoon Classics (4 Discs) 400 Years of the Telescope 5 Action Movies A 5 Great Movies Rated G A.I. Artificial Intelligence (2 Discs) 5th Wave, The A.R.C.H.I.E. 6 Family Movies(2 Discs) Abduction 8 Family Movies (2 Discs) About Schmidt 8 Mile Abraham Lincoln Vampire Hunter 10 Bible Stories for the Whole Family Absolute Power 10 Minute Solution: Pilates Accountant, The 10 Movie Adventure Pack (2 Discs) Act of Valor 10,000 BC Action Films (2 Discs) 102 Minutes That Changed America Action Pack Volume 6 10th Kingdom, The (3 Discs) Adventure of Sherlock Holmes’ Smarter 11:14 Brother, The 12 Angry Men Adventures in Babysitting 12 Years a Slave Adventures in Zambezia 13 Hours Adventures of Elmo in Grouchland, The 13 Towns of Huron County, The: A 150 Year Adventures of Ichabod and Mr. Toad Heritage Adventures of Mickey Matson and the 16 Blocks Copperhead Treasure, The 17th Annual Lane Automotive Car Show Adventures of Milo and Otis, The 2005 Adventures of Pepper & Paula, The 20 Movie -

Race in Hollywood: Quantifying the Effect of Race on Movie Performance

Race in Hollywood: Quantifying the Effect of Race on Movie Performance Kaden Lee Brown University 20 December 2014 Abstract This study investigates the effect of a movie’s racial composition on three aspects of its performance: ticket sales, critical reception, and audience satisfaction. Movies featuring minority actors are classified as either ‘nonwhite films’ or ‘black films,’ with black films defined as movies featuring predominantly black actors with white actors playing peripheral roles. After controlling for various production, distribution, and industry factors, the study finds no statistically significant differences between films starring white and nonwhite leading actors in all three aspects of movie performance. In contrast, black films outperform in estimated ticket sales by almost 40% and earn 5-6 more points on Metacritic’s 100-point Metascore, a composite score of various movie critics’ reviews. 1 However, the black film factor reduces the film’s Internet Movie Database (IMDb) user rating 2 by 0.6 points out of a scale of 10. I. Introduction The underrepresentation of minorities in Hollywood films has long been an issue of social discussion and discontent. According to the Census Bureau, minorities composed 37.4% of the U.S. population in 2013, up from 32.6% in 2004.3 Despite this, a study from USC’s Media, Diversity, & Social Change Initiative found that among 600 popular films, only 25.9% of speaking characters were from minority groups (Smith, Choueiti & Pieper 2013). Minorities are even more underrepresented in top roles. Only 15.5% of 1,070 movies released from 2004-2013 featured a minority actor in the leading role. Directors and production studios have often been criticized for ‘whitewashing’ major films. In December 2014, director Ridley Scott faced scrutiny for his movie

DVD LIST 05-01-14 Xlsx

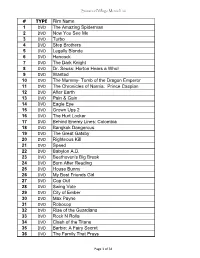

Seawood Village Movie List # TYPE Film Name 1 DVD The Amazing Spiderman 2 DVD Now You See Me 3 DVD Turbo 4 DVD Step Brothers 5 DVD Legally Blonde 6 DVD Hancock 7 DVD The Dark Knight 8 DVD Dr. Seuss: Horton Hears a Who! 9 DVD Wanted 10 DVD The Mummy- Tomb of the Dragon Emperor 11 DVD The Chronicles of Narnia: Prince Caspian 12 DVD After Earth 13 DVD Pain & Gain 14 DVD Eagle Eye 15 DVD Grown Ups 2 16 DVD The Hurt Locker 17 DVD Behind Enemy Lines: Colombia 18 DVD Bangkok Dangerous 19 DVD The Great Gatsby 20 DVD Righteous Kill 21 DVD Speed 22 DVD Babylon A.D. 23 DVD Beethoven's Big Break 24 DVD Burn After Reading 25 DVD House Bunny 26 DVD My Best Friends Girl 27 DVD Cop Out 28 DVD Swing Vote 29 DVD City of Ember 30 DVD Max Payne 31 DVD Robocop 32 DVD Rise of the Guardians 33 DVD Rock N Rolla 34 DVD Clash of the Titans 35 DVD Barbie: A Fairy Secret 36 DVD The Family That Preys 37 DVD Open Season 2 38 DVD Lakeview Terrace 39 DVD Fire Proof 40 DVD Space Buddies 41 DVD The Secret Life of Bees 42 DVD Madagascar: Escape 2 Africa 43 DVD Nights in Rodanthe 44 DVD Skyfall 45 DVD Changeling 46 DVD House at the End of the Street 47 DVD Australia 48 DVD Beverly Hills Chihuahua 49 DVD Life of Pi 50 DVD Role Models 51 DVD The Twilight Saga: Twilight 52 DVD Pinocchio 70th Anniversary Edition 53 DVD The Women 54 DVD Quantum of Solace 55 DVD Courageous 56 DVD The Wolfman 57 DVD Hugo 58 DVD Real Steel 59 DVD Change of Plans 60 DVD Sisterhood of the Traveling Pants 61 DVD Hansel & Gretel: Witch Hunters 62 DVD The Cold Light of Day 63 DVD Bride & Prejudice 64 DVD The Dilemma 65 DVD Flight 66 DVD E.T.

Approved Movie List 10-9-12

APPROVED NSH MOVIE SCREENING COMMITTEE R-RATED and NON-RATED MOVIE LIST Updated October 9, 2012 (Newly added films are in the shaded rows at the top of the list beginning on page 1.) Film Title ALEXANDER THE GREAT (1968) ANCHORMAN (2004) APACHES (also named APACHEN)(1973) BULLITT (1968) CABARET (1972) CARNAGE (2011) CINCINNATI KID, THE (1965) COPS CRUDE IMPACT (2006) DAVE CHAPPELLE SHOW (2003–2006) DICK CAVETT SHOW (1968–1972) DUMB AND DUMBER (1994) EAST OF EDEN (1965) ELIZABETH (1998) ERIN BROCKOVICH (2000) FISH CALLED WANDA (1988) GALACTICA 1980 GYPSY (1962) HIGH SCHOOL SPORTS FOCUS (1999-2007) HIP HOP AWARDS 2007 IN THE LOOP (2009) INSIDE DAISY CLOVER (1965) IRAQ FOR SALE: THE WAR PROFITEERS (2006) JEEVES & WOOSTER (British TV Series) JERRY SPRINGER SHOW (not Too Hot for TV) MAN WHO SHOT LIBERTY VALANCE, THE (1962) MATA HARI (1931) MILK (2008) NBA PLAYOFFS (ESPN)(2009) NIAGARA MOTEL (2006) ON THE ROAD WITH CHARLES KURALT PECKER (1998) PRODUCERS, THE (1968) QUIET MAN, THE (1952) REAL GHOST STORIES (Documentary) RICK STEVES TRAVEL SHOW (PBS) SEX AND THE SINGLE GIRL (1964) SITTING BULL (1954) SMALLEST SHOW ON EARTH, THE (1957) SPLENDOR IN THE GRASS TAMING OF THE SHREW (1967) TIME OF FAVOR (2000) TOLL BOOTH, THE (2004) TOMORROW SHOW w/ Tom Snyder TOP GEAR (BBC TV show) TOP GEAR (TV Series) UNCOVERED: THE WAR ON IRAQ (2004) VAMPIRE SECRETS (History